Natural Language Processing (NLP): fundamentals, hands-on practice, and paper summaries

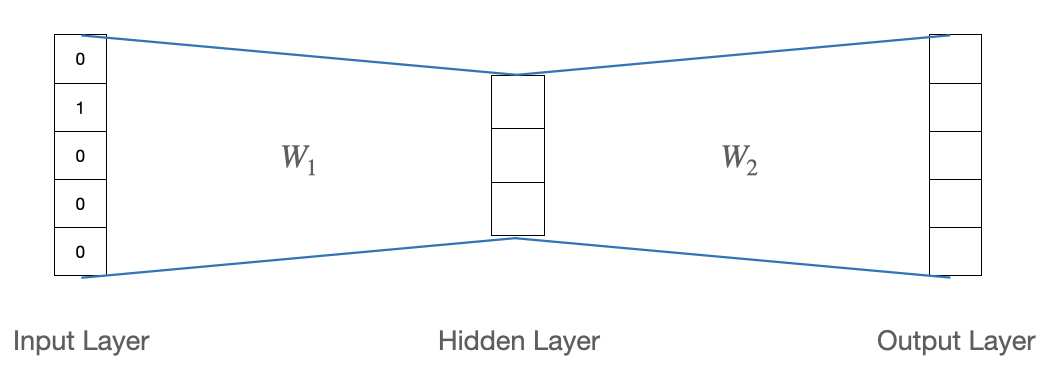

1. [NLP] Word Embedding, Word2Vec

Covers the different ways of representing text as vectors, introduces word embeddings, and looks at the architecture of Word2Vec.

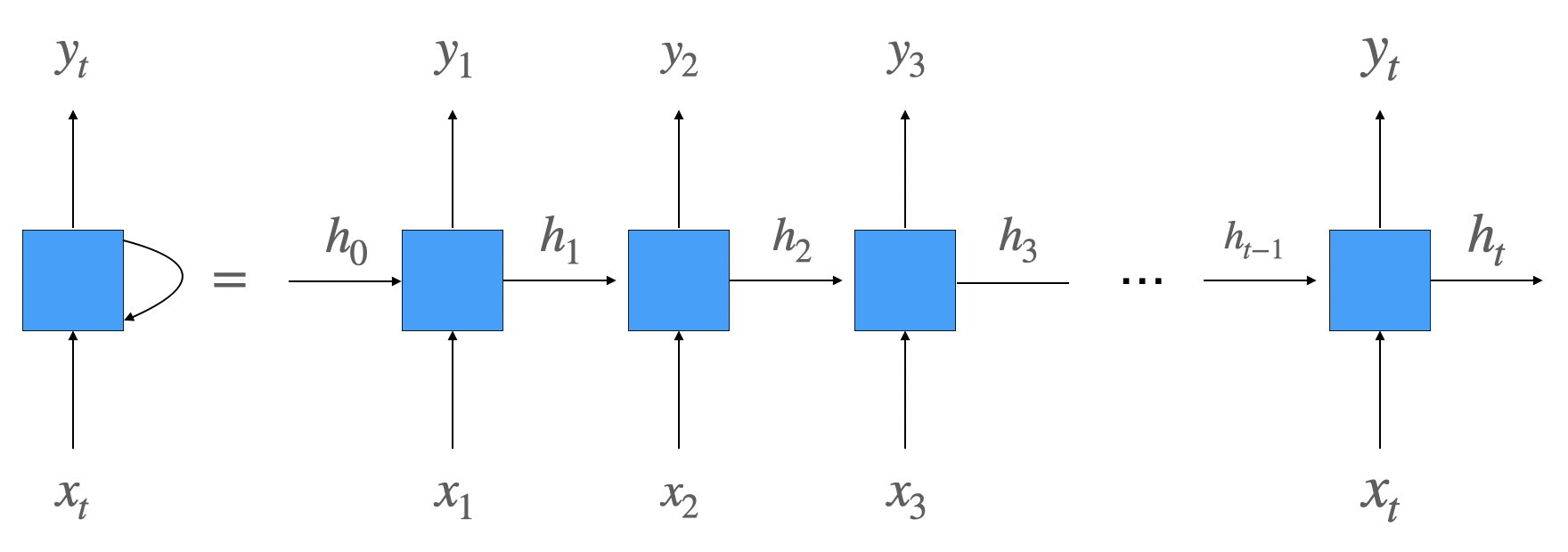

2. [NLP] RNN (Recurrent Neural Network), vanishing/exploding gradients, and the long-term dependency problem

Explains how the RNN, a model for sequential data, works and looks at its different forms. Also covers the vanishing-gradient behavior of RNNs and the long-term dependency problem that results from it.
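To make the vanishing/exploding gradient idea concrete, here is a minimal sketch. It assumes a scalar, purely linear recurrence `h_t = w * h_{t-1}` (no activation function and no input term), which is a deliberate simplification of a real RNN; the function name `gradient_through_time` is made up for this illustration. Under that assumption, backpropagating through `T` steps multiplies the gradient by `w` once per step.

```python
def gradient_through_time(recurrent_weight: float, steps: int) -> float:
    """Return d h_T / d h_0 for the toy recurrence h_t = w * h_{t-1}.

    Assumption: scalar state, linear update, no inputs. A real RNN would
    also include the derivative of its activation at every step.
    """
    grad = 1.0
    for _ in range(steps):
        # Chain rule: each time step contributes one factor of w.
        grad *= recurrent_weight
    return grad


if __name__ == "__main__":
    for w in (0.5, 1.0, 1.5):
        print(f"w={w}: gradient after 50 steps = {gradient_through_time(w, 50):.3e}")
```

With `|w| < 1` the gradient shrinks toward zero as the sequence gets longer, so early time steps barely influence learning (the long-term dependency problem); with `|w| > 1` it grows without bound.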

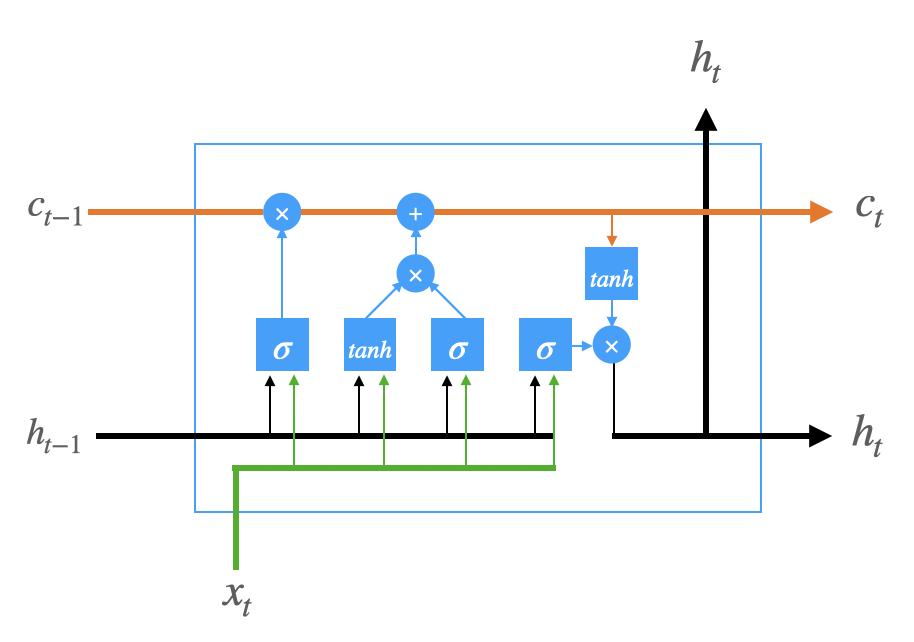

3. [NLP] Models that use gates to overcome the weaknesses of RNNs: LSTM, GRU

Introduces LSTM and GRU, which overcome the short-term memory limitation of RNNs, and walks through their gate structures.

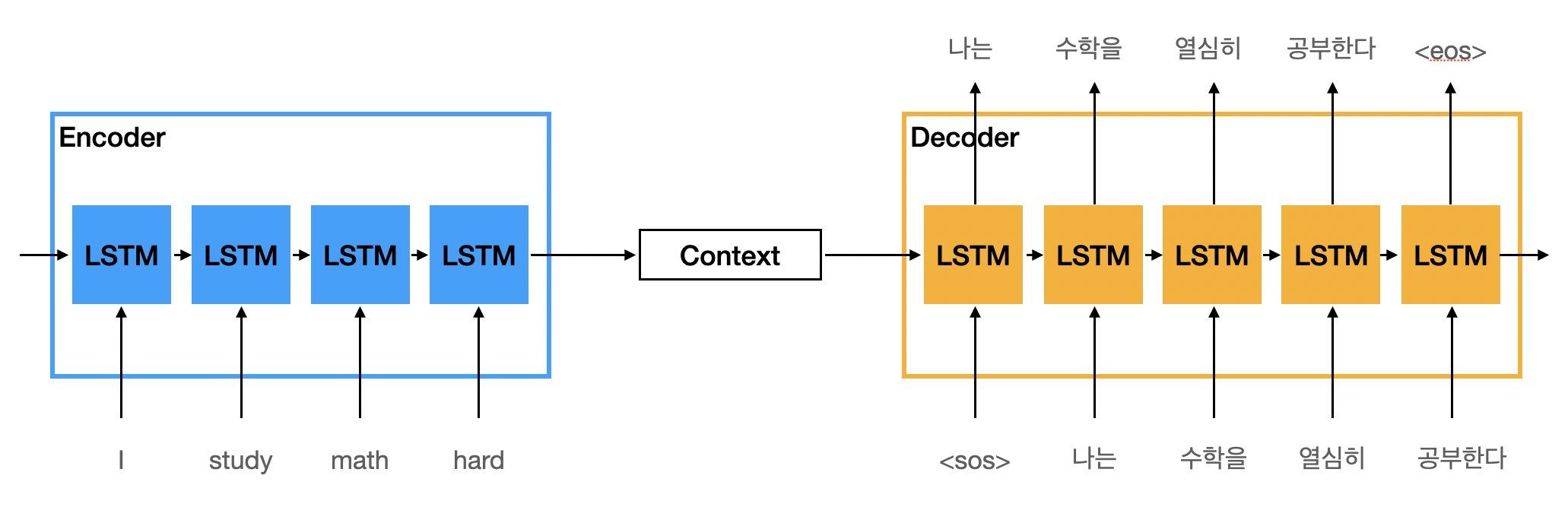

4. [NLP] The Encoder-Decoder architecture, Seq2Seq, and Seq2Seq with Attention

Covers Seq2Seq, which uses an Encoder-Decoder architecture to take a sequence as input and produce a sequence as output, and then Seq2Seq with Attention, which adds Attention to Seq2Seq.

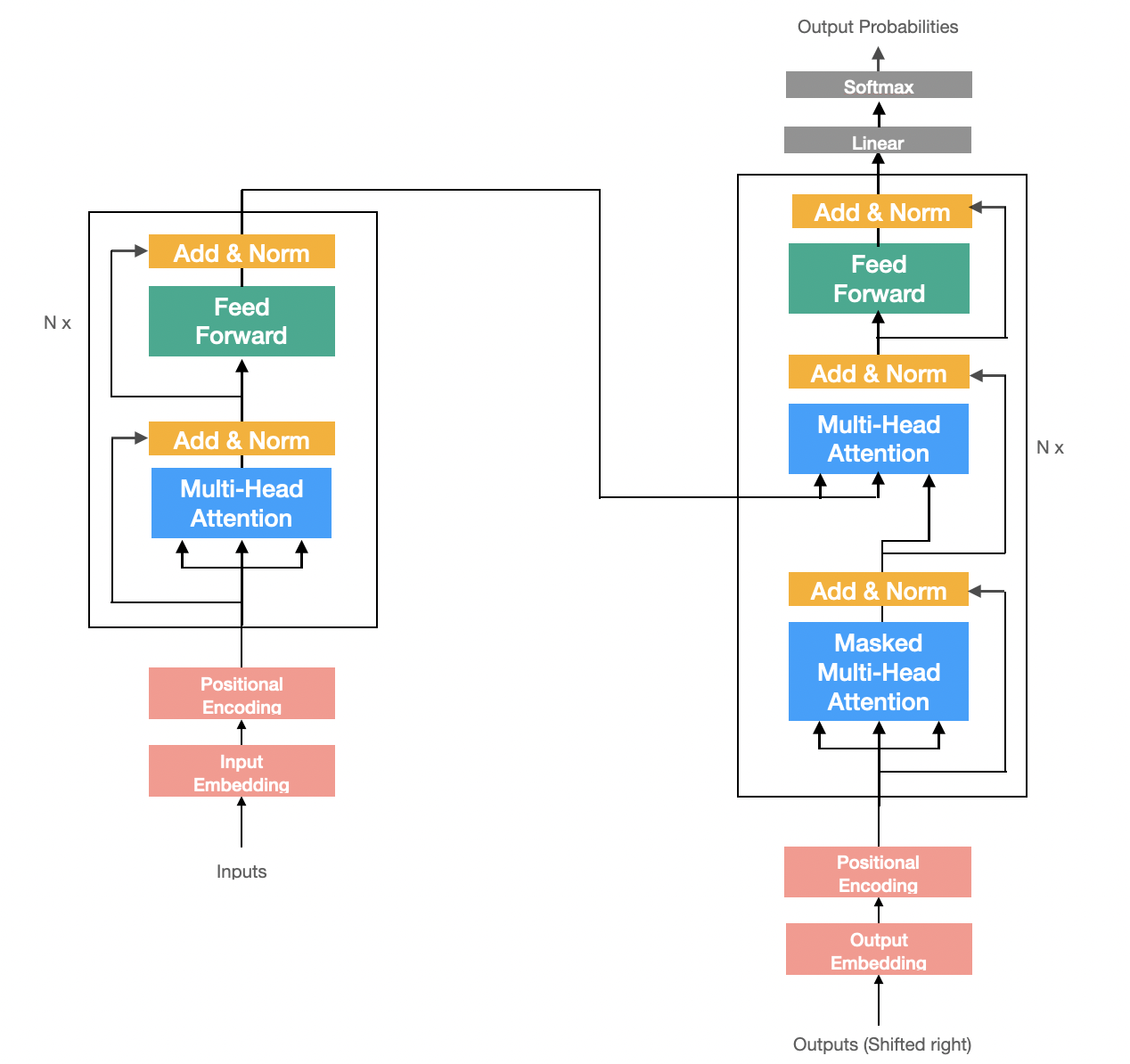

5. [NLP] Transformer and Self-Attention

Covers the Transformer, a model that replaces all of the RNNs in the Encoder-Decoder architecture with Self-Attention.
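As a rough illustration of what Self-Attention computes, the sketch below implements scaled dot-product attention in plain Python. It makes one loud simplification: the queries, keys, and values are all the raw input vectors (no learned projection matrices, no multiple heads, no masking), so it is not the full Transformer layer. The function names are chosen for this example only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x.

    Assumption: no learned W_Q / W_K / W_V projections; each token vector
    is used directly as its own query, key, and value.
    """
    dim = len(x[0])
    outputs = []
    for query in x:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in x
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of all value vectors.
        outputs.append(
            [sum(w * value[j] for w, value in zip(weights, x)) for j in range(dim)]
        )
    return outputs


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in self_attention(tokens):
        print([round(v, 3) for v in row])
```

Every output row is a weighted average of all input rows, which is how each position can draw on the whole sequence in one step instead of passing information along a recurrent chain.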

6. [NLP] English tokenization with NLTK, spaCy, and torchtext

Introduces NLTK, spaCy, and torchtext, NLP libraries used for tokenization.

7. [NLP] Building a Vocab with torchtext and spaCy

A hands-on exercise that turns a corpus into a vocabulary using spaCy's Tokenizer and torchtext's Vocab.

8. [NLP] Building a Dataset and DataLoader for NLP

Turns a corpus into a vocabulary using spaCy's Tokenizer and torchtext, then uses that vocabulary to build a dataset and a data loader for training.
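The corpus-to-vocabulary-to-batch flow can be sketched without any libraries. The example below is a stand-in, not the spaCy or torchtext API: it assumes lowercased whitespace tokenization in place of spaCy's Tokenizer, a plain dict in place of torchtext's Vocab, and a hand-written `collate` function playing the role a DataLoader's collate step would. All names (`build_vocab`, `encode`, `collate`, the `<pad>`/`<unk>` tokens) are chosen for this illustration.

```python
from collections import Counter

PAD, UNK = "<pad>", "<unk>"


def build_vocab(sentences, min_freq=1):
    """Map each token to an integer id; ids 0 and 1 are reserved for PAD and UNK."""
    counts = Counter(tok for s in sentences for tok in s.lower().split())
    kept = sorted(tok for tok, count in counts.items() if count >= min_freq)
    return {tok: idx for idx, tok in enumerate([PAD, UNK] + kept)}


def encode(sentence, vocab):
    """Convert a sentence to ids, falling back to UNK for unseen tokens."""
    return [vocab.get(tok, vocab[UNK]) for tok in sentence.lower().split()]


def collate(batch, vocab):
    """Encode a batch of sentences and right-pad them to the same length."""
    encoded = [encode(s, vocab) for s in batch]
    max_len = max(len(ids) for ids in encoded)
    return [ids + [vocab[PAD]] * (max_len - len(ids)) for ids in encoded]


if __name__ == "__main__":
    corpus = ["I love NLP", "I love deep learning models"]
    vocab = build_vocab(corpus)
    for row in collate(corpus, vocab):
        print(row)
```

Padding every sequence in a batch to the same length is what lets the batch be stacked into a single rectangular tensor for training.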

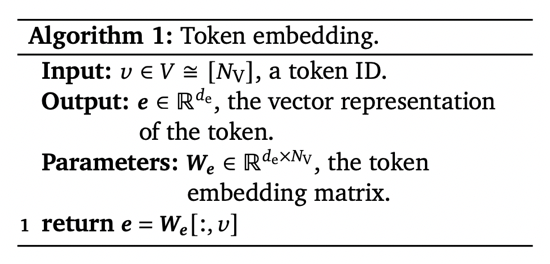

9. Summary of Formal Algorithms for Transformers

A summary of the paper Formal Algorithms for Transformers.

10. Huggingface 🤗 Transformers: introduction and installation

An introduction to 🤗 Transformers and how to install it.

11. [Huggingface 🤗 Transformers Tutorial] 1. Pipelines for inference

Uses the pipeline() function in 🤗 Transformers to run NLP tasks with minimal code.

12. [Huggingface 🤗 Transformers Tutorial] 2. Load pretrained instances with an AutoClass

Covers the kinds of AutoClass available in 🤗 Transformers and how to use them.

13. [Huggingface 🤗 Transformers Tutorial] 3. Preprocess

Shows how to preprocess text with the AutoTokenizer in 🤗 Transformers.

14. [Huggingface 🤗 Transformers Tutorial] 4. Fine-tune a pretrained model

Shows how to fine-tune a pretrained model with 🤗 Transformers and runs a simple sentiment analysis task.

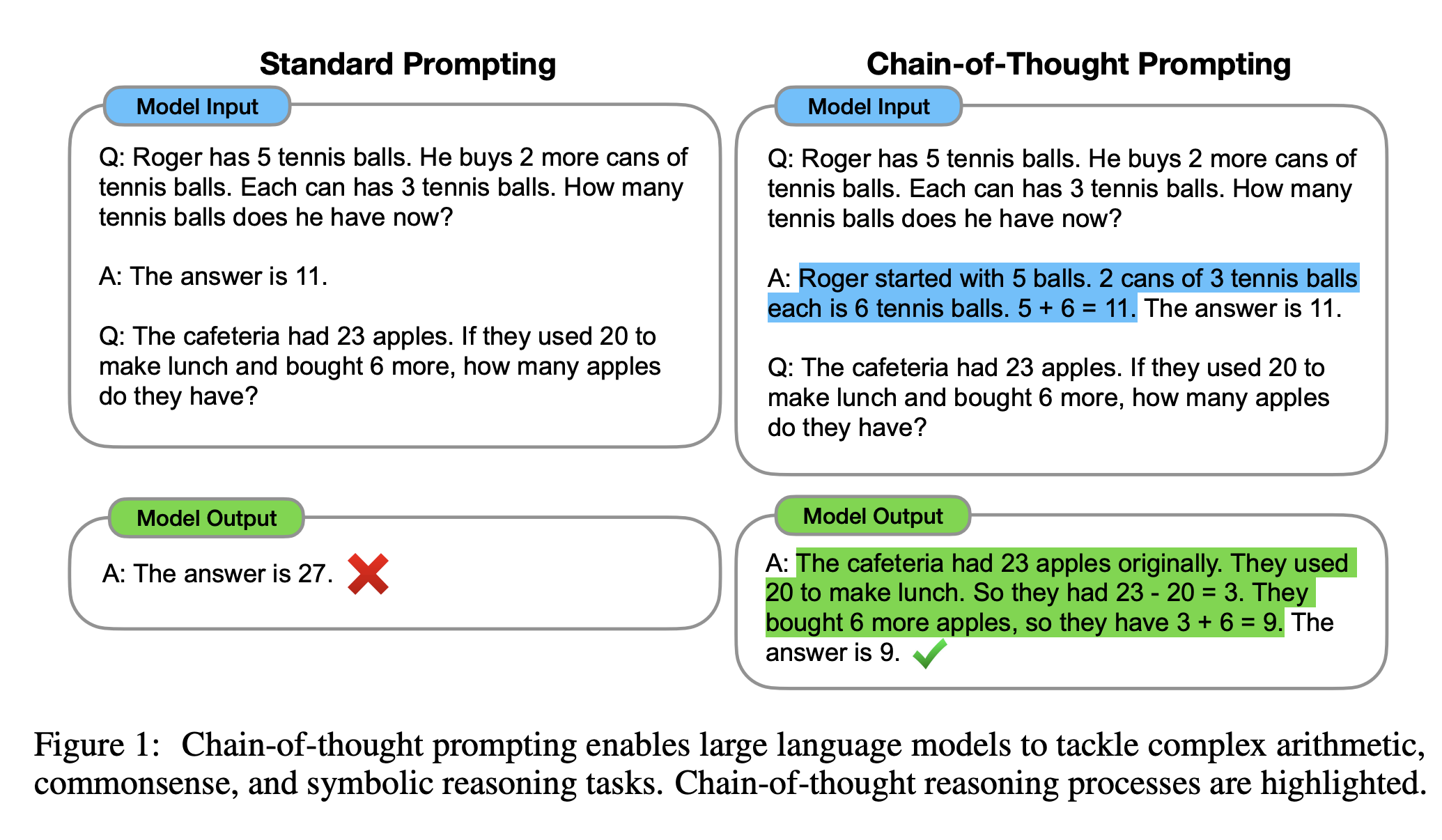

15. Summary of Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

A summary of the paper Chain-of-Thought Prompting Elicits Reasoning in Large Language Models.