Adjusting Photos

Terminology

- Shallow depth of field (shallow DOF), also called shallow focus

- A technique that blurs the background while keeping the subject in focus

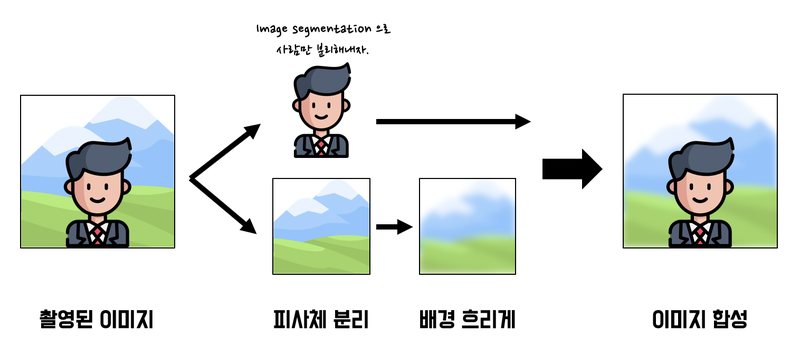

Preparing the data

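- Take a selfie that has a background. (It helps if the person is standing somewhat far from the background.)

- Separate the subject (the person) from the background with semantic segmentation.

- Blur the background with a blurring technique.

- Composite the subject back onto the blurred background at its original position.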

Separating the person from the background in an image

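```python
import os
import urllib.request  # urllib.request must be imported explicitly to use urlretrieve

import cv2
import numpy as np
from pixellib.semantic import semantic_segmentation
from matplotlib import pyplot as plt

print('Imports done')
```

- urllib is used to download data from the web.

- cv2 is the OpenCV library, needed for image processing.

- pixellib is a library that makes semantic segmentation easy to use.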

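```python
# Change this to match the path of the image you chose.
img_path = './human_segmentation/images/my_image.png'

img_orig = cv2.imread(img_path)  # loaded in BGR order; returns None if the file can't be read
print(img_orig.shape)

plt.imshow(cv2.cvtColor(img_orig, cv2.COLOR_BGR2RGB))
plt.show()
```

Image Segmentation

- A method that extracts the objects of interest from an image at the pixel level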

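Semantic segmentation

- Segmentation that recognizes an image in physically meaningful units

- Classifies each pixel into a physical category such as person, car, or airplane

- In other words, semantic segmentation extracts the abstract information "person" from an image; every person pixel gets the same label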
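Per-pixel classification can be pictured as an argmax over class scores at every pixel. A minimal sketch, assuming a model has already produced a hypothetical (H, W, C) score array; the hand-set scores and the three-class `LABEL_NAMES` below are toy stand-ins, not PASCAL VOC output.

```python
import numpy as np

LABEL_NAMES = ['background', 'cat', 'person']  # toy subset for illustration only

# Hypothetical network output: one score per class for each pixel of a 4x4 image
scores = np.zeros((4, 4, len(LABEL_NAMES)))
scores[..., 0] = 1.0           # background wins by default
scores[1:3, 1:3, 2] = 5.0      # a 2x2 "person" region
scores[0, 3, 1] = 5.0          # a single "cat" pixel

# Semantic segmentation: pick the highest-scoring class at every pixel
label_map = np.argmax(scores, axis=-1)

# All person pixels share one label, no matter which person they belong to
person_mask = label_map == LABEL_NAMES.index('person')
```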

Instance segmentation

- Instance segmentation gives each individual person a different label

- The goal is to separate and recognize each object when several people appear in one image
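As a rough illustration of the difference, connected-component labeling can split a semantic "person" mask into separate blobs. This is a swapped-in classical technique, not how instance segmentation models work (touching or overlapping people would merge into one blob); `label_instances` is a hypothetical helper.

```python
from collections import deque

import numpy as np


def label_instances(mask: np.ndarray) -> tuple[int, np.ndarray]:
    """Give each 4-connected blob of True pixels its own integer label (1, 2, ...)."""
    labels = np.zeros(mask.shape, dtype=int)
    count = 0
    h, w = mask.shape
    for y in range(h):
        for x in range(w):
            if mask[y, x] and labels[y, x] == 0:
                count += 1
                labels[y, x] = count
                queue = deque([(y, x)])
                # Breadth-first flood fill of the current blob
                while queue:
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and labels[ny, nx] == 0:
                            labels[ny, nx] = count
                            queue.append((ny, nx))
    return count, labels


# Semantic mask: two separate "people", both simply marked as person (True)
person_mask = np.zeros((5, 8), dtype=bool)
person_mask[1:4, 1:3] = True
person_mask[1:4, 5:7] = True
n, instances = label_instances(person_mask)
```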

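Image segmentation methods before deep learning

- Watershed segmentation

- The simplest way to divide an image into regions is to split it along object "boundaries"

- Converting the image to grayscale gives every pixel a value from 0 to 255, and these pixel values are treated as the height of each location

- Imagine slowly filling the low areas with "water": the water rises in each region until, at some point, it overflows into a neighboring region

- Turning those meeting points into boundary lines separates the objects from one another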

The DeepLab segmentation model

DeepLab V3+: Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

Why DeepLab uses atrous convolution

- To use a wider receptive field: the filter looks at a larger area while keeping the same small number of parameters
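The effect of the dilation (atrous) rate on the receptive field can be checked numerically. A minimal 1-D NumPy sketch, not DeepLab's implementation: a kernel with k taps and rate r spans k + (k - 1)(r - 1) input samples while still having only k weights.

```python
import numpy as np


def effective_kernel_size(k: int, rate: int) -> int:
    """Input span covered by a k-tap kernel with the given dilation rate."""
    return k + (k - 1) * (rate - 1)


def atrous_conv1d(x: np.ndarray, w: np.ndarray, rate: int) -> np.ndarray:
    """'Valid' 1-D dilated convolution (cross-correlation, as in deep learning)."""
    span = effective_kernel_size(len(w), rate)
    out = np.empty(len(x) - span + 1)
    for i in range(len(out)):
        # Sample the input every `rate` steps under the kernel window
        out[i] = np.dot(x[i:i + span:rate], w)
    return out


x = np.arange(10, dtype=float)
w = np.array([1.0, 1.0, 1.0])     # only 3 weights in both cases
y1 = atrous_conv1d(x, w, rate=1)  # each output sees 3 consecutive inputs
y2 = atrous_conv1d(x, w, rate=2)  # each output sees a span of 5 inputs
```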

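Depthwise separable convolution

- Efficient, because it covers the receptive field of a 3x3 conv layer with roughly 1/9 of the parameters (the saving approaches 1/9 as the number of output channels grows)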
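A quick parameter count (biases ignored) shows where "about 1/9" comes from. A standard 3x3 convolution needs 3·3·C_in·C_out weights; a depthwise separable one needs 3·3·C_in for the depthwise step plus C_in·C_out for the 1x1 pointwise step, so the ratio is 1/C_out + 1/9. The channel counts below are arbitrary examples.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    # One k x k x c_in filter per output channel
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1x1 conv that mixes the channels
    return depthwise + pointwise


std = standard_conv_params(3, 256, 256)
sep = depthwise_separable_params(3, 256, 256)
ratio = sep / std
```

With 256 output channels the ratio is about 0.115, close to 1/9 (about 0.111).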

Preparing the DeepLab model

- PixelLib makes it easy to use

Downloading the model provided by PixelLib

```python
# Decide where to save the model file
model_dir = os.getenv('HOME') + '/aiffel/human_segmentation/models'
os.makedirs(model_dir, exist_ok=True)  # create the folder if it doesn't exist yet
model_file = os.path.join(model_dir, 'deeplabv3_xception_tf_dim_ordering_tf_kernels.h5')

# URL of the model provided by PixelLib
model_url = 'https://github.com/ayoolaolafenwa/PixelLib/releases/download/1.1/deeplabv3_xception_tf_dim_ordering_tf_kernels.h5'

# Start the download
urllib.request.urlretrieve(model_url, model_file)
```

Creating the segmentation model with PixelLib, using the downloaded weights

```python
model = semantic_segmentation()
model.load_pascalvoc_model(model_file)
```

Feeding the image into the model

```python
segvalues, output = model.segmentAsPascalvoc(img_path)
```

- The function name segmentAsPascalvoc means it uses a model trained on the PASCAL VOC dataset

- The PASCAL VOC label classes are:

```python
LABEL_NAMES = [
    'background', 'aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus',
    'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike',
    'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tv'
]
len(LABEL_NAMES)
```

```
21
```

Excluding background, there are 20 classes.

Class ID 20, the last index, is tv; person is class ID 15.

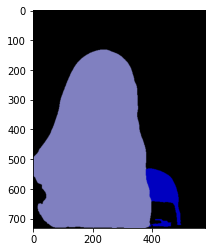

Checking the model's output

```python
plt.imshow(output)
plt.show()
```

```python
segvalues
```

```
{'class_ids': array([ 0,  9, 15]),
 'masks': array([[False, False, False, ..., False, False, False],
        [False, False, False, ..., False, False, False],
        [False, False, False, ..., False, False, False],
        ...,
        [False, False, False, ..., False, False, False],
        [False, False, False, ..., False, False, False],
        [False, False, False, ..., False, False, False]])}
```

```python
for class_id in segvalues['class_ids']:
    print(LABEL_NAMES[class_id])
```

```
background
chair
person
```

- output holds the segmentation result, with each class drawn in a different color

- segvalues contains class_ids and masks

- class_ids tells us which objects are in the image

Checking which color each object is drawn with in output

```python
# You don't need to understand the code below.
# It is copied as-is from PixelLib.
# What matters is the resulting color codes!
colormap = np.zeros((256, 3), dtype=int)
ind = np.arange(256, dtype=int)

for shift in reversed(range(8)):
    for channel in range(3):
        colormap[:, channel] |= ((ind >> channel) & 1) << shift
    ind >>= 3

colormap[:20]
```

```
array([[  0,   0,   0],
       [128,   0,   0],
       [  0, 128,   0],
       [128, 128,   0],
       [  0,   0, 128],
       [128,   0, 128],
       [  0, 128, 128],
       [128, 128, 128],
       [ 64,   0,   0],
       [192,   0,   0],
       [ 64, 128,   0],
       [192, 128,   0],
       [ 64,   0, 128],
       [192,   0, 128],
       [ 64, 128, 128],
       [192, 128, 128],
       [  0,  64,   0],
       [128,  64,   0],
       [  0, 192,   0],
       [128, 192,   0]])
```

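According to PixelLib, these are the colors it uses.

The color for person, class ID 15, is:

```python
colormap[15]
```

```
array([192, 128, 128])
```

To find a particular object, use colormap[class_id].

- The output image stores its channels in BGR order

- colormap is in RGB order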
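Because of this channel mismatch, the BGR color to search for can be derived from the colormap by reversing the RGB entry. A minimal sketch, assuming the PixelLib colormap shown above (regenerated here so the snippet runs on its own); `bgr_color_for` is a hypothetical helper name.

```python
import numpy as np


def make_pascal_colormap() -> np.ndarray:
    """Same bit-shuffling colormap as the PixelLib code above (RGB order)."""
    colormap = np.zeros((256, 3), dtype=int)
    ind = np.arange(256, dtype=int)
    for shift in reversed(range(8)):
        for channel in range(3):
            colormap[:, channel] |= ((ind >> channel) & 1) << shift
        ind >>= 3
    return colormap


def bgr_color_for(class_id: int, colormap: np.ndarray) -> tuple:
    """Reverse an RGB colormap entry so it can be compared with a BGR image."""
    r, g, b = colormap[class_id]
    return (int(b), int(g), int(r))


COLORMAP = make_pascal_colormap()
person_color = bgr_color_for(15, COLORMAP)  # person
chair_color = bgr_color_for(9, COLORMAP)    # chair
```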

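So the color value to extract must be written with its channel order reversed, as below:

```python
seg_color = (128, 128, 192)
```

Building a mask made up only of seg_color pixels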

```python
# Pixels of output whose color equals seg_color become 1 (True); all others become 0 (False)
seg_map = np.all(output == seg_color, axis=-1)
print(seg_map.shape)
plt.imshow(seg_map, cmap='gray')
plt.show()
```

```
(731, 579)
```

- Unlike the 3-channel original, the channel dimension is gone

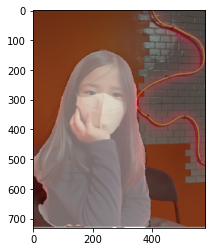
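How `np.all(..., axis=-1)` collapses the color channels is easy to see on a tiny hand-made array standing in for `output` (the pixel values are invented for illustration):

```python
import numpy as np

seg_color = (128, 128, 192)  # person color in BGR order

# 2x2 BGR stand-in for output: two person pixels, one black, one chair-colored
output = np.array([
    [[128, 128, 192], [0, 0, 0]],
    [[0, 0, 192], [128, 128, 192]],
], dtype=np.uint8)

# output == seg_color compares per channel -> shape (2, 2, 3);
# all() over the last axis is True only where all 3 channels match -> shape (2, 2)
seg_map = np.all(output == seg_color, axis=-1)
```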

Overlaying the mask on the image (to check how well the segmentation worked)

Functions used: addWeighted, applyColorMap

```python
img_show = img_orig.copy()

# Convert True and False to 255 and 0
img_mask = seg_map.astype(np.uint8) * 255

# Map 255 and 0 to suitable colors
color_mask = cv2.applyColorMap(img_mask, cv2.COLORMAP_JET)

# Blend the original image with the mask
# 0.6 and 0.4 are the mixing weights of the two images
img_show = cv2.addWeighted(img_show, 0.6, color_mask, 0.4, 0.0)

plt.imshow(cv2.cvtColor(img_show, cv2.COLOR_BGR2RGB))
plt.show()
```

The result was hard to see with JET, so I switched the colormap to OCEAN (cv2.COLORMAP_OCEAN).

Changing the colormap type

- Other colormap types can be chosen from the link below

Enumeration Type Documentation

Blurring the background

Using the blur() function

```python
# (13, 13) is the blurring kernel size
# Try different values
img_orig_blur = cv2.blur(img_orig, (13, 13))
plt.imshow(cv2.cvtColor(img_orig_blur, cv2.COLOR_BGR2RGB))
plt.show()
```

- Extracting only the background from the blurred image

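```python
img_mask_color = cv2.cvtColor(img_mask, cv2.COLOR_GRAY2BGR)  # 1-channel mask -> 3 channels
img_bg_mask = cv2.bitwise_not(img_mask_color)               # invert: background 255, person 0
img_bg_blur = cv2.bitwise_and(img_orig_blur, img_bg_mask)   # keep only the blurred background
plt.imshow(cv2.cvtColor(img_bg_blur, cv2.COLOR_BGR2RGB))
plt.show()
```

The bitwise_not function inverts the image.

- In the original mask, the background is 0 and the person is 255

- After bitwise_not, the background is 255 and the person is 0

Applying bitwise_and between the inverted segmentation result and the image yields an image containing only the background. Since bitwise_and of 0 with any number is 0, every pixel where the person was (now 0) becomes 0. In the end, the person disappears.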
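The same 0/255 logic can be checked on a handful of pixel values. NumPy's `np.bitwise_not` and `np.bitwise_and` behave like the OpenCV calls on `uint8` data; the values below are made up.

```python
import numpy as np

# One row of a mask: background = 0, person = 255
mask = np.array([0, 255, 255, 0], dtype=np.uint8)
blurred = np.array([10, 20, 30, 40], dtype=np.uint8)  # blurred pixel values

bg_mask = np.bitwise_not(mask)              # 0 -> 255, 255 -> 0
bg_only = np.bitwise_and(blurred, bg_mask)  # x & 255 = x, x & 0 = 0
```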

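Compositing the blurred background with the original image

```python
img_concat = np.where(img_mask_color == 255, img_orig, img_bg_blur)
plt.imshow(cv2.cvtColor(img_concat, cv2.COLOR_BGR2RGB))
plt.show()
```

Where the segmentation mask is 255, the original image's values are used; everywhere else, the blurred image's values are used.

This uses code of the form np.where(condition, value_if_true, value_if_false).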

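Comparison

| Original | Converted |
|---|---|
|  |  |

- The result looks more natural when the person is clearly separated from the background and the background lies at a fairly uniform distance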

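Finding problems

STEP 1.

- Apply what we learned today to your own selfies. You should end up with images like the ones below. Make at least three portrait-mode photos.

- You could also make an out-of-focus photo whose subject is a cute cat rather than a person. Look for a hint in the semantic segmentation step.

- Instead of a portrait-mode photo with a blurred background, you could try chroma-key compositing, which replaces the background with a different image. Why not make your own fantastic photo?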

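STEP 2.

- Find parts of the background that were included in the person region and therefore came out unblurred

- Find and mark such odd spots in at least one of your portrait-mode photos

STEP 3. Proposing a solution

- The solution should include the mechanism: how the chosen technique is applied to the mask region produced by the DeepLab model's semantic segmentation, and how it compensates for the problem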

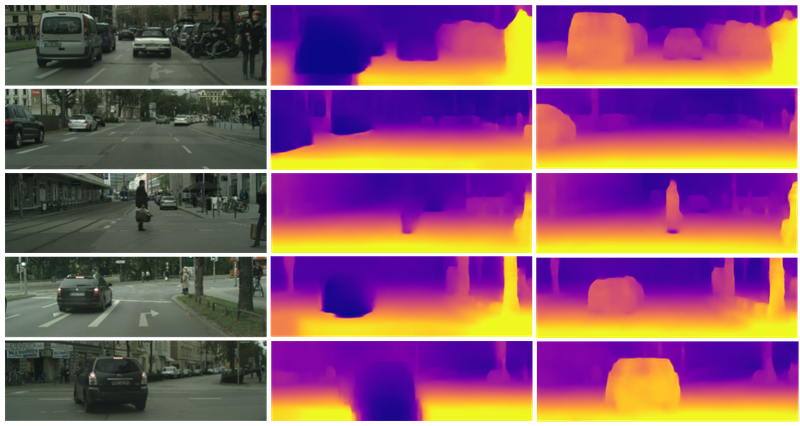

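Limitations of segmentation

- The inaccuracy of semantic segmentation is the main cause of these problems

- The bokeh (out-of-focus) effect produced by depth of field literally expresses depth, so the optics separate (segment) the in-focus distance very finely

- A semantic segmentation module that imitates this is hard to make perfect unless its accuracy reaches 1.00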

Understanding depth of field

Using a 3D camera

- Stereo vision and ToF (time-of-flight) methods are commonly used

3D image sensor
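For stereo vision, depth follows from triangulation: with focal length f in pixels, baseline B between the two cameras, and disparity d (the horizontal pixel shift of the same point between the left and right images), Z = f·B/d. A minimal sketch of that pinhole-stereo formula; the camera numbers are made-up examples.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo triangulation: Z = f * B / d (in metres if B is in metres)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


near = depth_from_disparity(700.0, 0.12, 42.0)  # large disparity -> close point
far = depth_from_disparity(700.0, 0.12, 8.4)    # small disparity -> distant point
```

Closer points shift more between the two views, which is why depth is inversely proportional to disparity.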

Using software techniques

- Google's struct2Depth is a representative example

Unsupervised Learning of Depth and Ego-Motion: A Structured Approach

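Combining with other technologies

- An IR (infrared) camera, which measures the temperature of objects, combined with 3D images

- This combination enables better 3D depth sensing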

uDepth: Real-time 3D Depth Sensing on the Pixel 4