IMDb Dataset Analysis

- The IMDb Large Movie Review Dataset consists of 50,000 movie reviews written in English.

- Labels: 1 for positive, 0 for negative.

- Half of the 50,000 reviews (25,000) are designated as training data, and the remaining 25,000 as test data.

import os

import numpy as np
import tensorflow as tf

imdb = tf.keras.datasets.imdb

# Download the IMDb dataset

(x_train, y_train), (x_test, y_test) = imdb.load_data(num_words=10000)

print("Training samples: {}, test samples: {}".format(len(x_train), len(x_test)))

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/imdb.npz

Training samples: 25000, test samples: 25000

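- Passing num_words=10000 to imdb.load_data() keeps only the most frequent words: every word whose index is 10,000 or higher is replaced by the out-of-vocabulary index 2. The reviews come back already encoded as integer indices. The word-to-index dictionary itself is retrieved separately with imdb.get_word_index().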
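The filtering triggered by num_words can be sketched in plain Python. This is a simplified illustration, not the Keras source. cap_vocab is a hypothetical helper, and it assumes the load_data defaults skip_top=0 and oov_char=2. The indices come partly from the first training review and partly are made up (12118, 9999, 10000).

```python
def cap_vocab(sequence, num_words, oov_char=2):
    """Hypothetical helper: replace every index >= num_words with the OOV index,
    roughly mirroring imdb.load_data(num_words=...) with its default oov_char=2."""
    return [index if index < num_words else oov_char for index in sequence]


# First indices of the first training review, plus made-up rare indices
review = [1, 14, 22, 16, 43, 530, 12118, 9999, 10000]
print(cap_vocab(review, num_words=10000))
# [1, 14, 22, 16, 43, 530, 2, 9999, 2]
```

After capping, every index is below num_words, so the Embedding layer later needs exactly num_words rows.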

Inspecting the data

print(x_train[0])  # first review

print('Label:', y_train[0])  # label of the first review

print('Length of review 1:', len(x_train[0]))

print('Length of review 2:', len(x_train[1]))

[1, 14, 22, 16, 43, 530, 973, 1622, 1385, 65, 458, 4468, 66, 3941, 4, 173, 36, 256, 5, 25, 100, 43, 838, 112, 50, 670, 2, 9, 35, 480, 284, 5, 150, 4, 172, 112, 167, 2, 336, 385, 39, 4, 172, 4536, 1111, 17, 546, 38, 13, 447, 4, 192, 50, 16, 6, 147, 2025, 19, 14, 22, 4, 1920, 4613, 469, 4, 22, 71, 87, 12, 16, 43, 530, 38, 76, 15, 13, 1247, 4, 22, 17, 515, 17, 12, 16, 626, 18, 2, 5, 62, 386, 12, 8, 316, 8, 106, 5, 4, 2223, 5244, 16, 480, 66, 3785, 33, 4, 130, 12, 16, 38, 619, 5, 25, 124, 51, 36, 135, 48, 25, 1415, 33, 6, 22, 12, 215, 28, 77, 52, 5, 14, 407, 16, 82, 2, 8, 4, 107, 117, 5952, 15, 256, 4, 2, 7, 3766, 5, 723, 36, 71, 43, 530, 476, 26, 400, 317, 46, 7, 4, 2, 1029, 13, 104, 88, 4, 381, 15, 297, 98, 32, 2071, 56, 26, 141, 6, 194, 7486, 18, 4, 226, 22, 21, 134, 476, 26, 480, 5, 144, 30, 5535, 18, 51, 36, 28, 224, 92, 25, 104, 4, 226, 65, 16, 38, 1334, 88, 12, 16, 283, 5, 16, 4472, 113, 103, 32, 15, 16, 5345, 19, 178, 32]

Label: 1

Length of review 1: 218

Length of review 2: 189

The data is not raw text: each review has already been encoded as a sequence of integer word indices.

- Check the dictionary that was used for the encoding

word_to_index = imdb.get_word_index()

index_to_word = {index: word for word, index in word_to_index.items()}

print(index_to_word[1])  # prints 'the'

print(word_to_index['the'])  # prints 1

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/imdb_word_index.json

the

1

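Adjusting the indices

The word_to_index and index_to_word mappings used to encode the IMDb text must be adjusted as shown below.

This follows the data provider's convention: imdb.load_data() shifts every word index up by 3 (index_from=3 by default) and reserves indices 0 to 3 for special tokens.

- word_to_index is ranked by word frequency in descending order, so index 1 in the raw dictionary is the most frequent word ('the').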

# The actual encoded indices are the indices in the provided word_to_index shifted up by 3.

word_to_index = {k: (v + 3) for k, v in word_to_index.items()}

# The first few indices are reserved for special tokens

word_to_index["<PAD>"] = 0

word_to_index["<BOS>"] = 1

word_to_index["<UNK>"] = 2  # unknown

word_to_index["<UNUSED>"] = 3

index_to_word = {index: word for word, index in word_to_index.items()}

print(index_to_word[1])  # prints '<BOS>'

print(word_to_index['the'])  # prints 4

print(index_to_word[4])  # prints 'the'

<BOS>

4

the

### Decoding

```python
def get_decoded_sentence(encoded_sentence, index_to_word):
    # Skip the leading <BOS> and map each index back to its word ('<UNK>' if missing)
    return ' '.join(index_to_word.get(index, '<UNK>') for index in encoded_sentence[1:])

print(get_decoded_sentence(x_train[0], index_to_word))

print('Label:', y_train[0])  # label of the first review
```

this film was just brilliant casting location scenery story direction everyone's really suited the part they played and you could just imagine being there robert <UNK> is an amazing actor and now the same being director <UNK> father came from the same scottish island as myself so i loved the fact there was a real connection with this film the witty remarks throughout the film were great it was just brilliant so much that i bought the film as soon as it was released for <UNK> and would recommend it to everyone to watch and the fly fishing was amazing really cried at the end it was so sad and you know what they say if you cry at a film it must have been good and this definitely was also <UNK> to the two little boy's that played the <UNK> of norman and paul they were just brilliant children are often left out of the <UNK> list i think because the stars that play them all grown up are such a big profile for the whole film but these children are amazing and should be praised for what they have done don't you think the whole story was so lovely because it was true and was someone's life after all that was shared with us all

Label: 1
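Decoding has a natural inverse, encoding. The sketch below uses a tiny hand-built vocabulary. Its indices are taken from the first review above ('this' = 14, 'film' = 22, 'was' = 16, 'just' = 43, 'the' = 4). get_encoded_sentence is a hypothetical helper, not part of Keras, and it assumes lowercase text split on whitespace. The toy_ names are used so the real mappings are not overwritten.

```python
# Tiny vocabulary laid out like the adjusted IMDb mapping:
# indices 0-3 are special tokens, real words start at 4.
toy_word_to_index = {"<PAD>": 0, "<BOS>": 1, "<UNK>": 2, "<UNUSED>": 3,
                     "the": 4, "this": 14, "was": 16, "film": 22, "just": 43}
toy_index_to_word = {index: word for word, index in toy_word_to_index.items()}


def get_encoded_sentence(sentence, word_to_index):
    """Hypothetical helper: prepend <BOS>, map out-of-vocabulary words to <UNK>."""
    unk = word_to_index["<UNK>"]
    return [word_to_index["<BOS>"]] + [word_to_index.get(w, unk) for w in sentence.split()]


def get_decoded_sentence(encoded_sentence, index_to_word):
    """Drop the leading <BOS> and map each index back to its word."""
    return " ".join(index_to_word.get(i, "<UNK>") for i in encoded_sentence[1:])


encoded = get_encoded_sentence("this film was just amazing", toy_word_to_index)
print(encoded)                                           # [1, 14, 22, 16, 43, 2]
print(get_decoded_sentence(encoded, toy_index_to_word))  # this film was just <UNK>
```

The unknown word does not survive the round trip. The same thing happens to rare words when num_words caps the vocabulary.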

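- Use pad_sequences to make all reviews in the dataset the same length.

- The choice of the maximum length, maxlen, also affects overall model performance.

- To choose a suitable value, first check the length distribution of the whole dataset.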
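The way padding and truncation position the tokens can be sketched in plain Python. pad is a hypothetical helper that mimics the padding and truncating options of tf.keras.preprocessing.sequence.pad_sequences, where both default to 'pre'. It is a simplified illustration, not the Keras implementation.

```python
def pad(sequences, maxlen, padding="pre", truncating="pre", value=0):
    """Hypothetical helper mimicking pad_sequences' padding/truncating options."""
    result = []
    for seq in sequences:
        seq = list(seq)
        if len(seq) > maxlen:
            # 'pre' drops tokens from the start, 'post' drops them from the end
            seq = seq[-maxlen:] if truncating == "pre" else seq[:maxlen]
        fill = [value] * (maxlen - len(seq))
        result.append(fill + seq if padding == "pre" else seq + fill)
    return result


seqs = [[1, 14, 22], [1, 14, 22, 16, 43, 530]]
print(pad(seqs, maxlen=5, padding="post"))  # [[1, 14, 22, 0, 0], [14, 22, 16, 43, 530]]
print(pad(seqs, maxlen=5, padding="pre"))   # [[0, 0, 1, 14, 22], [14, 22, 16, 43, 530]]

# Sequences that already have length maxlen pass through unchanged, so
# re-padding post-padded data with padding="pre" does not move the zeros.
post = pad(seqs, maxlen=5, padding="post")
print(pad(post, maxlen=5, padding="pre") == post)  # True
```

The second sequence loses its leading <BOS> token (index 1), because truncating='pre' cuts from the front.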

Checking the length distribution of the dataset

total_data_text = list(x_train) + list(x_test)

# Build an array of review lengths (in tokens)

num_tokens = [len(tokens) for tokens in total_data_text]

num_tokens = np.array(num_tokens)

# Compute the mean, maximum, and standard deviation of the review lengths

print('Mean review length:', np.mean(num_tokens))

print('Max review length:', np.max(num_tokens))

print('Std of review length:', np.std(num_tokens))

# For example, set the maximum length to (mean + 2 * standard deviation)

max_tokens = np.mean(num_tokens) + 2 * np.std(num_tokens)

maxlen = int(max_tokens)

print('pad_sequences maxlen:', maxlen)

print('{}% of all reviews fit within maxlen.'.format(100 * np.sum(num_tokens < max_tokens) / len(num_tokens)))

Mean review length: 234.75892

Max review length: 2494

Std of review length: 172.91149458735703

pad_sequences maxlen: 580

94.536% of all reviews fit within maxlen.

In this case, maxlen = 580.
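The mean-plus-two-standard-deviations rule can be wrapped in a small function. choose_maxlen is a hypothetical helper. Like the code above, it uses the population standard deviation (np.std's default) and reports coverage as a percentage. The toy lengths are made up.

```python
import numpy as np


def choose_maxlen(lengths, k=2.0):
    """Hypothetical helper: maxlen = int(mean + k * std), plus the % of sequences below that threshold."""
    lengths = np.asarray(lengths)
    threshold = lengths.mean() + k * lengths.std()
    coverage = 100 * np.sum(lengths < threshold) / len(lengths)
    return int(threshold), float(coverage)


# Plugging in the statistics logged above reproduces maxlen = 580
print(int(234.75892 + 2 * 172.91149458735703))  # 580

# Toy lengths with one very long outlier
print(choose_maxlen([10, 20, 30, 40, 500], k=1.0))  # (310, 80.0)
```

With a long-tailed length distribution, a larger k raises coverage. The cost is longer sequences that are mostly padding.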

Should we pad with 'pre' or 'post'?

Post-padding

x_train = tf.keras.preprocessing.sequence.pad_sequences(x_train,
                                                        value=word_to_index["<PAD>"],
                                                        padding='post',  # or 'pre'
                                                        maxlen=maxlen)

x_test = tf.keras.preprocessing.sequence.pad_sequences(x_test,
                                                       value=word_to_index["<PAD>"],
                                                       padding='post',  # or 'pre'
                                                       maxlen=maxlen)

print(x_train.shape)

(25000, 580)

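Pre-padding

## Use 'pre' padding for RNNs

x_train = tf.keras.preprocessing.sequence.pad_sequences(x_train,
                                                        value=word_to_index["<PAD>"],
                                                        padding='pre',  # or 'post'
                                                        maxlen=maxlen)

x_test = tf.keras.preprocessing.sequence.pad_sequences(x_test,
                                                       value=word_to_index["<PAD>"],
                                                       padding='pre',  # or 'post'
                                                       maxlen=maxlen)

print(x_train.shape)

(25000, 580)

The shape is (25000, 580) in both cases, so this printout cannot tell pre-padding and post-padding apart. To see whether the zeros come first or last, print part of a row, for example x_train[0][:10]. Also, if the post-padding cell above has already been run, every sequence is already exactly maxlen long and this call leaves it unchanged. Reload the raw data with imdb.load_data() before switching to 'pre'.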

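- An RNN processes its input sequentially, so the last inputs have the greatest influence on the final state. Filling the final positions with meaningless padding is therefore inefficient. As a result, 'pre' padding is much more advantageous and can make a test-performance difference of more than 10%.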
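A toy model makes this argument concrete. The sketch assumes a linear recurrence h_t = decay · h_(t-1) + x_t with a made-up decay of 0.9, which is far simpler than a real RNN or LSTM. An input at step t reaches the final state scaled by decay^(T-1-t), so the function measures how much of the final state's weight falls on real tokens under each padding scheme.

```python
import numpy as np


def real_token_influence(mask, decay=0.9):
    """Toy linear recurrence h_t = decay * h_{t-1} + x_t (not a real RNN cell).

    The input at step t reaches the final state scaled by decay**(T-1-t);
    return the total weight carried by real (mask == 1) positions."""
    mask = np.asarray(mask)
    T = len(mask)
    weights = decay ** np.arange(T - 1, -1, -1)  # earliest step gets the smallest weight
    return float(np.sum(weights * mask))


seq_len, n_real = 20, 5
post = [1] * n_real + [0] * (seq_len - n_real)  # real tokens first, padding last
pre = [0] * (seq_len - n_real) + [1] * n_real   # padding first, real tokens last
print(round(real_token_influence(post), 3))  # 0.843
print(round(real_token_influence(pre), 3))   # 4.095
```

With post-padding, the last 15 steps are padding, and the weights on the real tokens have decayed by the time the final state is reached. Pre-padding puts the real tokens where the weight is largest.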

Model design

The model below is a simple baseline (Embedding → GlobalMaxPooling1D → Dense) and contains no recurrent layer. An RNN version would add a layer such as tf.keras.layers.LSTM after the Embedding layer.

vocab_size = 10000  # vocabulary size (10,000 words)

word_vector_dim = 16  # word-vector dimension (a tunable hyperparameter)

# Build the model

model = tf.keras.Sequential()

model.add(tf.keras.layers.Embedding(vocab_size, word_vector_dim, input_shape=(None,)))

model.add(tf.keras.layers.GlobalMaxPooling1D())

model.add(tf.keras.layers.Dense(8, activation='relu'))

model.add(tf.keras.layers.Dense(1, activation='sigmoid'))

model.summary()

Model: "sequential_6"

Layer (type)                 Output Shape              Param #

=================================================================

embedding_6 (Embedding)      (None, None, 16)          160000

global_max_pooling1d_2 (Glob (None, 16)                0

dense_8 (Dense)              (None, 8)                 136

dense_9 (Dense)              (None, 1)                 9

=================================================================

Total params: 160,145

Trainable params: 160,145

Non-trainable params: 0

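What the summary shows:

- The Embedding layer holds one 16-dimensional vector for each of the 10,000 words (10,000 × 16 = 160,000 parameters).
- GlobalMaxPooling1D has no parameters.
- The two Dense layers have 16 × 8 + 8 = 136 and 8 × 1 + 1 = 9 parameters (weights plus biases).

That gives a total of 160,145. The sequence length in the Output Shape column is None, so the model accepts reviews of any length.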
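The parameter counts follow from two formulas. An Embedding layer stores vocab_size × dim weights, and a Dense layer stores n_in × n_out weights plus n_out biases. The helper names below are hypothetical; the sketch only redoes the arithmetic.

```python
def embedding_params(vocab_size, dim):
    return vocab_size * dim  # one dim-sized vector per word, no bias


def dense_params(n_in, n_out):
    return n_in * n_out + n_out  # weight matrix + bias vector


counts = {
    "embedding": embedding_params(10000, 16),
    "global_max_pooling1d": 0,  # pooling has no trainable weights
    "dense (16 -> 8)": dense_params(16, 8),
    "dense (8 -> 1)": dense_params(8, 1),
}
print(counts)
print(sum(counts.values()))  # 160145
```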

Before training the model, set aside 10,000 of the 25,000 training samples as a validation set.

# Hold out 10,000 samples for validation

x_val = x_train[:10000]

y_val = y_train[:10000]

# The remaining 15,000 samples are used for training

partial_x_train = x_train[10000:]

partial_y_train = y_train[10000:]

print(partial_x_train.shape)

print(partial_y_train.shape)

(15000, 580)

(15000,)

Training the model

model.compile(optimizer='adam',
              loss='binary_crossentropy',
              metrics=['accuracy'])

epochs = 20  # number of epochs; adjust it after looking at the results

history = model.fit(partial_x_train,
                    partial_y_train,
                    epochs=epochs,
                    batch_size=512,
                    validation_data=(x_val, y_val),
                    verbose=1)

Epoch 1/20

30/30 [==============================] - 8s 13ms/step - loss: 0.6919 - accuracy: 0.4991 - val_loss: 0.6889 - val_accuracy: 0.5412

Epoch 2/20

30/30 [==============================] - 0s 8ms/step - loss: 0.6846 - accuracy: 0.6340 - val_loss: 0.6796 - val_accuracy: 0.6835

Epoch 3/20

30/30 [==============================] - 0s 7ms/step - loss: 0.6701 - accuracy: 0.7469 - val_loss: 0.6618 - val_accuracy: 0.7336

Epoch 4/20

30/30 [==============================] - 0s 7ms/step - loss: 0.6429 - accuracy: 0.7901 - val_loss: 0.6296 - val_accuracy: 0.7706

Epoch 5/20

30/30 [==============================] - 0s 6ms/step - loss: 0.5986 - accuracy: 0.8169 - val_loss: 0.5863 - val_accuracy: 0.7821

Epoch 6/20

30/30 [==============================] - 0s 6ms/step - loss: 0.5440 - accuracy: 0.8301 - val_loss: 0.5386 - val_accuracy: 0.7953

Epoch 7/20

30/30 [==============================] - 0s 6ms/step - loss: 0.4867 - accuracy: 0.8430 - val_loss: 0.4944 - val_accuracy: 0.8038

Epoch 8/20

30/30 [==============================] - 0s 6ms/step - loss: 0.4342 - accuracy: 0.8543 - val_loss: 0.4580 - val_accuracy: 0.8120

Epoch 9/20

30/30 [==============================] - 0s 6ms/step - loss: 0.3889 - accuracy: 0.8669 - val_loss: 0.4301 - val_accuracy: 0.8173

Epoch 10/20

30/30 [==============================] - 0s 6ms/step - loss: 0.3508 - accuracy: 0.8789 - val_loss: 0.4081 - val_accuracy: 0.8233

Epoch 11/20

30/30 [==============================] - 0s 6ms/step - loss: 0.3185 - accuracy: 0.8877 - val_loss: 0.3916 - val_accuracy: 0.8276

Epoch 12/20

30/30 [==============================] - 0s 6ms/step - loss: 0.2912 - accuracy: 0.8984 - val_loss: 0.3796 - val_accuracy: 0.8309

Epoch 13/20

30/30 [==============================] - 0s 6ms/step - loss: 0.2678 - accuracy: 0.9060 - val_loss: 0.3706 - val_accuracy: 0.8361

Epoch 14/20

30/30 [==============================] - 0s 6ms/step - loss: 0.2473 - accuracy: 0.9137 - val_loss: 0.3644 - val_accuracy: 0.8371

Epoch 15/20

30/30 [==============================] - 0s 6ms/step - loss: 0.2296 - accuracy: 0.9202 - val_loss: 0.3603 - val_accuracy: 0.8391

Epoch 16/20

30/30 [==============================] - 0s 6ms/step - loss: 0.2137 - accuracy: 0.9257 - val_loss: 0.3578 - val_accuracy: 0.8413

Epoch 17/20

30/30 [==============================] - 0s 6ms/step - loss: 0.1996 - accuracy: 0.9314 - val_loss: 0.3570 - val_accuracy: 0.8418

Epoch 18/20

30/30 [==============================] - 0s 6ms/step - loss: 0.1867 - accuracy: 0.9367 - val_loss: 0.3573 - val_accuracy: 0.8428

Epoch 19/20

30/30 [==============================] - 0s 6ms/step - loss: 0.1750 - accuracy: 0.9422 - val_loss: 0.3583 - val_accuracy: 0.8430

Epoch 20/20

30/30 [==============================] - 0s 6ms/step - loss: 0.1639 - accuracy: 0.9479 - val_loss: 0.3604 - val_accuracy: 0.8423

Evaluate the trained model on the test set

results = model.evaluate(x_test, y_test, verbose=2)

print(results)

782/782 - 1s - loss: 0.3870 - accuracy: 0.8310

[0.3870142102241516, 0.8309599757194519]

-

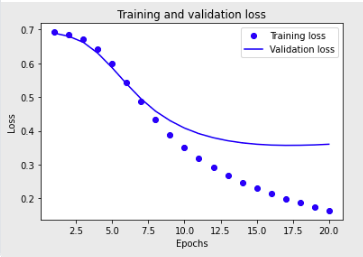

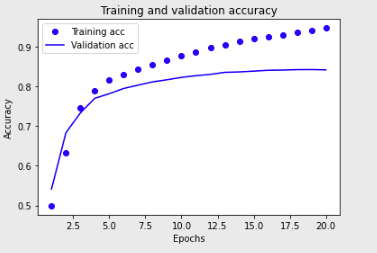

model.fit() 과정 중의 train/validation loss, accuracy 등이 매 epoch마다 history 변수에 저장

-

이 데이터를 그래프로 그려 보면, 수행했던 딥러닝 학습이 잘 진행되었는지, 오버피팅 혹은 언더피팅하지 않았는지, 성능을 개선할 수 있는 다양한 아이디어를 얻을 수 있는 좋은 자료

Plotting the per-epoch history (checking for improvement)

history_dict = history.history

print(history_dict.keys())  # the metrics that can be plotted against epochs

dict_keys(['loss', 'accuracy', 'val_loss', 'val_accuracy'])

import matplotlib.pyplot as plt

acc = history_dict['accuracy']

val_acc = history_dict['val_accuracy']

loss = history_dict['loss']

val_loss = history_dict['val_loss']

epochs = range(1, len(acc) + 1)

# "bo" means "blue dots"

plt.plot(epochs, loss, 'bo', label='Training loss')

# "b" means "solid blue line"

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()

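- Plotting the training and validation loss lets you estimate how many epochs of training are appropriate.

- Once the validation loss stops decreasing and drifts away from the training loss, further training only adds overfitting. In the log above, val_loss bottoms out at 0.3570 at epoch 17 and then rises again while the training loss keeps falling.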
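The best epoch can also be found programmatically. The sketch copies the val_loss values from the training log above into a list; in the notebook they would come from history.history['val_loss']. It then picks the epoch with the lowest value. In Keras, tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=..., restore_best_weights=True) automates the same idea during training.

```python
# val_loss for epochs 1-20, copied from the training log above
logged_val_loss = [0.6889, 0.6796, 0.6618, 0.6296, 0.5863, 0.5386, 0.4944, 0.4580,
                   0.4301, 0.4081, 0.3916, 0.3796, 0.3706, 0.3644, 0.3603, 0.3578,
                   0.3570, 0.3573, 0.3583, 0.3604]

# Epochs are 1-based in the log, list indices are 0-based
best_epoch = min(range(len(logged_val_loss)), key=logged_val_loss.__getitem__) + 1
print(best_epoch, logged_val_loss[best_epoch - 1])  # 17 0.357
```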

Training and validation accuracy

plt.clf()  # clear the figure

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

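plt.show()

Applying Word2Vec

Word embedding

- Word embedding represents the characteristics of each word as a low-dimensional vector. In machine-learning-based sentiment analysis, where labeling is expensive, it is an NLP technique that can cut costs while substantially improving accuracy.

The first layer of the model is the Embedding layer.

- Its trainable parameters form a matrix of size (number of words in the vocabulary × word-vector dimension).

- The word vectors learned in the Embedding layer are also expected to end up arranged meaningfully in semantic space.

The code below extracts the learned embedding weights and saves them in word2vec text format. gensim, a package that is useful for working with word vectors, can then load them.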

embedding_layer = model.layers[0]

weights = embedding_layer.get_weights()[0]

print(weights.shape)  # shape: (vocab_size, word_vector_dim)

(10000, 300)

The logged shape (10000, 300) corresponds to a 300-dimensional embedding. With word_vector_dim = 16 as defined above, the shape is (10000, 16).

# Write the learned embedding parameters to a file.

word2vec_file_path = os.getenv('HOME') + '/aiffel/sentiment_classification/data/word2vec.txt'

with open(word2vec_file_path, 'w') as f:
    # Header line: how many vectors are written and their dimension
    f.write('{} {}\n'.format(vocab_size - 4, word_vector_dim))
    # Write one word vector per vocabulary entry, excluding the 4 special tokens
    vectors = model.get_weights()[0]
    for i in range(4, vocab_size):
        f.write('{} {}\n'.format(index_to_word[i], ' '.join(map(str, list(vectors[i, :])))))

Use gensim to read the saved embedding parameters back and use them as word vectors.

from gensim.models.keyedvectors import Word2VecKeyedVectors

word_vectors = Word2VecKeyedVectors.load_word2vec_format(word2vec_file_path, binary=False)

vector = word_vectors['computer']

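vector

The word vectors obtained this way support an interesting experiment. One way to check whether they were learned meaningfully in semantic space is to take a word and look up the most similar words along with their similarity scores. With gensim, this can be done as follows.

word_vectors.similar_by_word("love")

Loading the pretrained Word2Vec model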

$ ln -s ~/data/GoogleNews-vectors-negative300.bin.gz ~/aiffel/sentiment_classification/data

from gensim.models import KeyedVectors

word2vec_path = os.getenv('HOME')+'/aiffel/sentiment_classification/data/GoogleNews-vectors-negative300.bin.gz'

word2vec = KeyedVectors.load_word2vec_format(word2vec_path, binary=True, limit=1000000)

vector = word2vec['computer']

vector  # a full 300-dimensional word vector

array([ 1.07421875e-01, -2.01171875e-01, 1.23046875e-01, 2.11914062e-01,

-9.13085938e-02, 2.16796875e-01, -1.31835938e-01, 8.30078125e-02,

2.02148438e-01, 4.78515625e-02, 3.66210938e-02, -2.45361328e-02,

2.39257812e-02, -1.60156250e-01, -2.61230469e-02, 9.71679688e-02,

-6.34765625e-02, 1.84570312e-01, 1.70898438e-01, -1.63085938e-01,

-1.09375000e-01, 1.49414062e-01, -4.65393066e-04, 9.61914062e-02,

1.68945312e-01, 2.60925293e-03, 8.93554688e-02, 6.49414062e-02,

3.56445312e-02, -6.93359375e-02, -1.46484375e-01, -1.21093750e-01,

-2.27539062e-01, 2.45361328e-02, -1.24511719e-01, -3.18359375e-01,

-2.20703125e-01, 1.30859375e-01, 3.66210938e-02, -3.63769531e-02,

-1.13281250e-01, 1.95312500e-01, 9.76562500e-02, 1.26953125e-01,

6.59179688e-02, 6.93359375e-02, 1.02539062e-02, 1.75781250e-01,

-1.68945312e-01, 1.21307373e-03, -2.98828125e-01, -1.15234375e-01,

5.66406250e-02, -1.77734375e-01, -2.08984375e-01, 1.76757812e-01,

2.38037109e-02, -2.57812500e-01, -4.46777344e-02, 1.88476562e-01,

5.51757812e-02, 5.02929688e-02, -1.06933594e-01, 1.89453125e-01,

-1.16210938e-01, 8.49609375e-02, -1.71875000e-01, 2.45117188e-01,

-1.73828125e-01, -8.30078125e-03, 4.56542969e-02, -1.61132812e-02,

1.86523438e-01, -6.05468750e-02, -4.17480469e-02, 1.82617188e-01,

2.20703125e-01, -1.22558594e-01, -2.55126953e-02, -3.08593750e-01,

9.13085938e-02, 1.60156250e-01, 1.70898438e-01, 1.19628906e-01,

7.08007812e-02, -2.64892578e-02, -3.08837891e-02, 4.06250000e-01,

-1.01562500e-01, 5.71289062e-02, -7.26318359e-03, -9.17968750e-02,

-1.50390625e-01, -2.55859375e-01, 2.16796875e-01, -3.63769531e-02,

2.24609375e-01, 8.00781250e-02, 1.56250000e-01, 5.27343750e-02,

1.50390625e-01, -1.14746094e-01, -8.64257812e-02, 1.19140625e-01,

-7.17773438e-02, 2.73437500e-01, -1.64062500e-01, 7.29370117e-03,

4.21875000e-01, -1.12792969e-01, -1.35742188e-01, -1.31835938e-01,

-1.37695312e-01, -7.66601562e-02, 6.25000000e-02, 4.98046875e-02,

-1.91406250e-01, -6.03027344e-02, 2.27539062e-01, 5.88378906e-02,

-3.24218750e-01, 5.41992188e-02, -1.35742188e-01, 8.17871094e-03,

-5.24902344e-02, -1.74713135e-03, -9.81445312e-02, -2.86865234e-02,

3.61328125e-02, 2.15820312e-01, 5.98144531e-02, -3.08593750e-01,

-2.27539062e-01, 2.61718750e-01, 9.86328125e-02, -5.07812500e-02,

1.78222656e-02, 1.31835938e-01, -5.35156250e-01, -1.81640625e-01,

1.38671875e-01, -3.10546875e-01, -9.71679688e-02, 1.31835938e-01,

-1.16210938e-01, 7.03125000e-02, 2.85156250e-01, 3.51562500e-02,

-1.01562500e-01, -3.75976562e-02, 1.41601562e-01, 1.42578125e-01,

-5.68847656e-02, 2.65625000e-01, -2.09960938e-01, 9.64355469e-03,

-6.68945312e-02, -4.83398438e-02, -6.10351562e-02, 2.45117188e-01,

-9.66796875e-02, 1.78222656e-02, -1.27929688e-01, -4.78515625e-02,

-7.26318359e-03, 1.79687500e-01, 2.78320312e-02, -2.10937500e-01,

-1.43554688e-01, -1.27929688e-01, 1.73339844e-02, -3.60107422e-03,

-2.04101562e-01, 3.63159180e-03, -1.19628906e-01, -6.15234375e-02,

5.93261719e-02, -3.23486328e-03, -1.70898438e-01, -3.14941406e-02,

-8.88671875e-02, -2.89062500e-01, 3.44238281e-02, -1.87500000e-01,

2.94921875e-01, 1.58203125e-01, -1.19628906e-01, 7.61718750e-02,

6.39648438e-02, -4.68750000e-02, -6.83593750e-02, 1.21459961e-02,

-1.44531250e-01, 4.54101562e-02, 3.68652344e-02, 3.88671875e-01,

1.45507812e-01, -2.55859375e-01, -4.46777344e-02, -1.33789062e-01,

-1.38671875e-01, 6.59179688e-02, 1.37695312e-01, 1.14746094e-01,

2.03125000e-01, -4.78515625e-02, 1.80664062e-02, -8.54492188e-02,

-2.48046875e-01, -3.39843750e-01, -2.83203125e-02, 1.05468750e-01,

-2.14843750e-01, -8.74023438e-02, 7.12890625e-02, 1.87500000e-01,

-1.12304688e-01, 2.73437500e-01, -3.26171875e-01, -1.77734375e-01,

-4.24804688e-02, -2.69531250e-01, 6.64062500e-02, -6.88476562e-02,

-1.99218750e-01, -7.03125000e-02, -2.43164062e-01, -3.66210938e-02,

-7.37304688e-02, -1.77734375e-01, 9.17968750e-02, -1.25000000e-01,

-1.65039062e-01, -3.57421875e-01, -2.85156250e-01, -1.66992188e-01,

1.97265625e-01, -1.53320312e-01, 2.31933594e-02, 2.06054688e-01,

1.80664062e-01, -2.74658203e-02, -1.92382812e-01, -9.61914062e-02,

-1.06811523e-02, -4.73632812e-02, 6.54296875e-02, -1.25732422e-02,

1.78222656e-02, -8.00781250e-02, -2.59765625e-01, 9.37500000e-02,

-7.81250000e-02, 4.68750000e-02, -2.22167969e-02, 1.86767578e-02,

3.11279297e-02, 1.04980469e-02, -1.69921875e-01, 2.58789062e-02,

-3.41796875e-02, -1.44042969e-02, -5.46875000e-02, -8.78906250e-02,

1.96838379e-03, 2.23632812e-01, -1.36718750e-01, 1.75781250e-01,

-1.63085938e-01, 1.87500000e-01, 3.44238281e-02, -5.63964844e-02,

-2.27689743e-05, 4.27246094e-02, 5.81054688e-02, -1.07910156e-01,

-3.88183594e-02, -2.69531250e-01, 3.34472656e-02, 9.81445312e-02,

5.63964844e-02, 2.23632812e-01, -5.49316406e-02, 1.46484375e-01,

5.93261719e-02, -2.19726562e-01, 6.39648438e-02, 1.66015625e-02,

4.56542969e-02, 3.26171875e-01, -3.80859375e-01, 1.70898438e-01,

5.66406250e-02, -1.04492188e-01, 1.38671875e-01, -1.57226562e-01,

3.23486328e-03, -4.80957031e-02, -2.48046875e-01, -6.20117188e-02],

dtype=float32)

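The model contains 3 million words, each represented by a 300-dimensional vector. Loading this whole vocabulary into memory is very likely to cause an out-of-memory error in a typical practice environment. The vectors were therefore loaded with KeyedVectors.load_word2vec_format using limit, which keeps only the 1 million most frequently used words.

If you have enough memory, setting limit=None loads all 3 million words.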
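A rough estimate shows why limit matters. The sketch counts only the raw float32 vector matrix (4 bytes per value). gensim needs more than this in practice, since it also stores the vocabulary. vector_matrix_gib is a hypothetical helper.

```python
BYTES_PER_FLOAT32 = 4


def vector_matrix_gib(n_words, dim=300):
    """Size of an n_words x dim float32 matrix in GiB (vectors only)."""
    return n_words * dim * BYTES_PER_FLOAT32 / 2**30


print(round(vector_matrix_gib(3_000_000), 2))  # 3.35  (all words)
print(round(vector_matrix_gib(1_000_000), 2))  # 1.12  (limit=1000000)
```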

# Note: this operation consumes a fair amount of memory.

word2vec.similar_by_word("love")

[('loved', 0.6907791495323181),

('adore', 0.6816873550415039),

('loves', 0.661863386631012),

('passion', 0.6100708842277527),

('hate', 0.600395679473877),

('loving', 0.5886635780334473),

('affection', 0.5664337873458862),

('undying_love', 0.5547304749488831),

('absolutely_adore', 0.5536840558052063),

('adores', 0.5440906882286072)]

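The Word2Vec embedding vectors have clearly been learned so that semantically similar words lie close to each other. Next, we replace the embedding layer of the model trained in the previous step with the Word2Vec vectors and train it again.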
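Under the hood, similar_by_word ranks words by cosine similarity. The sketch below reproduces that kind of ranking with NumPy on a made-up 3-dimensional toy vocabulary. most_similar here is a hypothetical helper, not gensim's method, and the vectors are invented purely for illustration.

```python
import numpy as np


def most_similar(query, vectors, topn=2):
    """Hypothetical helper: rank words by cosine similarity to `query`."""
    q = vectors[query]
    scores = []
    for word, v in vectors.items():
        if word == query:
            continue  # skip the query word itself
        cos = float(np.dot(q, v) / (np.linalg.norm(q) * np.linalg.norm(v)))
        scores.append((word, cos))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:topn]


# Made-up 3-dimensional vectors, for illustration only
toy_vectors = {
    "love": np.array([0.9, 0.1, 0.0]),
    "adore": np.array([0.8, 0.2, 0.1]),
    "hate": np.array([0.7, -0.1, 0.6]),
    "table": np.array([0.0, 0.1, 0.9]),
}
print([word for word, _ in most_similar("love", toy_vectors)])  # ['adore', 'hate']
```

The real output above lists 'hate' among the neighbors of 'love'. Cosine similarity between word vectors reflects how alike the words' contexts are, and antonyms often appear in similar contexts.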

vocab_size = 10000  # vocabulary size (10,000 words)

word_vector_dim = 300  # word-vector dimension

embedding_matrix = np.random.rand(vocab_size, word_vector_dim)

# Copy the Word2Vec vector of each word into embedding_matrix, one word at a time.

for i in range(4, vocab_size):
    if index_to_word[i] in word2vec:
        embedding_matrix[i] = word2vec[index_to_word[i]]

from tensorflow.keras.initializers import Constant

vocab_size = 10000  # vocabulary size (10,000 words)

word_vector_dim = 300  # word-vector dimension

# Build the model

model = tf.keras.Sequential()

model.add(tf.keras.layers.Embedding(vocab_size,
                                    word_vector_dim,
                                    embeddings_initializer=Constant(embedding_matrix),  # use the copied embedding here
                                    input_length=maxlen,
                                    trainable=True))  # trainable=True fine-tunes the Word2Vec vectors

model.add(tf.keras.layers.Conv1D(16, 7, activation='relu'))

model.add(tf.keras.layers.MaxPooling1D(5))

model.add(tf.keras.layers.Conv1D(16, 7, activation='relu'))

model.add(tf.keras.layers.GlobalMaxPooling1D())

model.add(tf.keras.layers.Dense(8, activation='relu'))

model.add(tf.keras.layers.Dense(1, activation='sigmoid'))

model.summary()

Model: "sequential_11"

Layer (type)                 Output Shape              Param #

=================================================================

embedding_11 (Embedding)     (None, 580, 300)          3000000

conv1d_10 (Conv1D)           (None, 574, 16)           33616

max_pooling1d_5 (MaxPooling1 (None, 114, 16)           0

conv1d_11 (Conv1D)           (None, 108, 16)           1808

global_max_pooling1d_7 (Glob (None, 16)                0

dense_18 (Dense)             (None, 8)                 136

dense_19 (Dense)             (None, 1)                 9

=================================================================

Total params: 3,035,569

Trainable params: 3,035,569

Non-trainable params: 0
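The output lengths and parameter counts in this summary can be checked by hand. The sketch assumes the Keras defaults used above: 'valid' padding and stride 1 for Conv1D, and strides equal to pool_size for MaxPooling1D. The helper names are hypothetical.

```python
def conv1d_len(length, kernel_size, stride=1):
    # 'valid' padding (the Conv1D default): the kernel never hangs over the edges
    return (length - kernel_size) // stride + 1


def pool1d_len(length, pool_size):
    # MaxPooling1D default: strides = pool_size, 'valid' padding
    return (length - pool_size) // pool_size + 1


def conv1d_params(in_channels, filters, kernel_size):
    return kernel_size * in_channels * filters + filters  # kernel weights + one bias per filter


length = 580
length = conv1d_len(length, 7)
print(length)  # 574
length = pool1d_len(length, 5)
print(length)  # 114
length = conv1d_len(length, 7)
print(length)  # 108

total = (10000 * 300                      # Embedding
         + conv1d_params(300, 16, 7)      # first Conv1D
         + conv1d_params(16, 16, 7)       # second Conv1D
         + (16 * 8 + 8) + (8 * 1 + 1))    # two Dense layers
print(total)  # 3035569
```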

# Train the model

model.compile(optimizer='adam',
              loss='binary_crossentropy',
              metrics=['accuracy'])

epochs = 20  # number of epochs; adjust it after looking at the results

history = model.fit(partial_x_train,
                    partial_y_train,
                    epochs=epochs,
                    batch_size=512,
                    validation_data=(x_val, y_val),
                    verbose=1)

# Evaluate the model on the test set

results = model.evaluate(x_test, y_test, verbose=2)

print(results)

Epoch 1/20

30/30 [==============================] - 3s 78ms/step - loss: 0.7040 - accuracy: 0.4949 - val_loss: 0.6931 - val_accuracy: 0.5030

Epoch 2/20

30/30 [==============================] - 2s 73ms/step - loss: 0.6928 - accuracy: 0.5125 - val_loss: 0.6934 - val_accuracy: 0.5054

Epoch 3/20

30/30 [==============================] - 2s 72ms/step - loss: 0.6916 - accuracy: 0.5375 - val_loss: 0.6938 - val_accuracy: 0.4943

Epoch 4/20

30/30 [==============================] - 2s 72ms/step - loss: 0.6899 - accuracy: 0.5364 - val_loss: 0.6944 - val_accuracy: 0.4943

Epoch 5/20

30/30 [==============================] - 2s 73ms/step - loss: 0.6878 - accuracy: 0.5438 - val_loss: 0.6928 - val_accuracy: 0.5160

Epoch 6/20

30/30 [==============================] - 2s 74ms/step - loss: 0.6850 - accuracy: 0.6023 - val_loss: 0.6931 - val_accuracy: 0.5050

Epoch 7/20

30/30 [==============================] - 2s 74ms/step - loss: 0.6814 - accuracy: 0.6922 - val_loss: 0.6927 - val_accuracy: 0.5073

Epoch 8/20

30/30 [==============================] - 2s 74ms/step - loss: 0.6747 - accuracy: 0.7303 - val_loss: 0.6916 - val_accuracy: 0.5308

Epoch 9/20

30/30 [==============================] - 2s 73ms/step - loss: 0.6658 - accuracy: 0.7083 - val_loss: 0.6911 - val_accuracy: 0.5227

Epoch 10/20

30/30 [==============================] - 2s 73ms/step - loss: 0.6477 - accuracy: 0.7989 - val_loss: 0.6901 - val_accuracy: 0.5374

Epoch 11/20

30/30 [==============================] - 2s 73ms/step - loss: 0.6121 - accuracy: 0.8204 - val_loss: 0.6895 - val_accuracy: 0.5319

Epoch 12/20

30/30 [==============================] - 2s 72ms/step - loss: 0.5450 - accuracy: 0.8669 - val_loss: 0.6987 - val_accuracy: 0.5382

Epoch 13/20

30/30 [==============================] - 2s 72ms/step - loss: 0.4603 - accuracy: 0.8816 - val_loss: 0.7146 - val_accuracy: 0.5394

Epoch 14/20

30/30 [==============================] - 2s 72ms/step - loss: 0.3577 - accuracy: 0.9413 - val_loss: 0.7043 - val_accuracy: 0.5624

Epoch 15/20

30/30 [==============================] - 2s 72ms/step - loss: 0.2657 - accuracy: 0.9613 - val_loss: 0.7190 - val_accuracy: 0.5716

Epoch 16/20

30/30 [==============================] - 2s 72ms/step - loss: 0.1955 - accuracy: 0.9753 - val_loss: 0.7560 - val_accuracy: 0.5704

Epoch 17/20

30/30 [==============================] - 2s 72ms/step - loss: 0.1357 - accuracy: 0.9881 - val_loss: 0.8460 - val_accuracy: 0.5593

Epoch 18/20

30/30 [==============================] - 2s 72ms/step - loss: 0.0985 - accuracy: 0.9936 - val_loss: 0.8920 - val_accuracy: 0.5555

Epoch 19/20

30/30 [==============================] - 2s 72ms/step - loss: 0.0710 - accuracy: 0.9954 - val_loss: 0.8277 - val_accuracy: 0.5821

Epoch 20/20

30/30 [==============================] - 2s 72ms/step - loss: 0.0540 - accuracy: 0.9965 - val_loss: 0.8434 - val_accuracy: 0.5819

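- When Word2Vec is used properly, it should improve performance by 5% or more over the model without it. This run did not achieve that. Training accuracy reached 0.9965 while validation accuracy stayed around 0.58, far below the baseline's 0.8423, which indicates heavy overfitting. Tune the model architecture and hyperparameters to push the sentiment-analysis model's performance as high as possible.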