-

$1, 2, 3, 4, 5, 6 \to \frac{1+2+3+4+5+6}{6} = 3.5 = \frac{1+6}{2}$

- In general (Gauss's sum): $\frac{1}{n}\sum_{j=1}^{n} j = \frac{n+1}{2}$

-

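$1, 1, 1, 1, 1 \mid 3, 3 \mid 5$: grouping equal values, the average is the weighted average $\frac{5}{8}\cdot 1 + \frac{2}{8}\cdot 3 + \frac{1}{8}\cdot 5 = 2$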

-

Average (expected value) of a discrete r.v. $X$

- $E(X) = \sum_{x} x\,P(X=x)$, i.e. (value) $\times$ (PMF),

summed over $x$ with $P(X=x) > 0$
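The definition above can be sketched in a few lines of Python. This is only an illustration: the function name `expectation` and the choice to represent a PMF as a dict of exact `Fraction` values are assumptions, not part of the notes.

```python
from fractions import Fraction

def expectation(pmf):
    """Return E(X) = sum of x * P(X = x) over the support of a discrete PMF.

    `pmf` maps each value x to P(X = x). Exact Fractions are assumed so
    that the "sums to 1" check is not disturbed by rounding.
    """
    if sum(pmf.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    # Only values with positive probability contribute.
    return sum(x * p for x, p in pmf.items() if p > 0)

# Fair die: each face 1..6 has probability 1/6.
die = {face: Fraction(1, 6) for face in range(1, 7)}
die_mean = expectation(die)

# The list 1,1,1,1,1,3,3,5 viewed as a PMF with weights 5/8, 2/8, 1/8.
grouped = {1: Fraction(5, 8), 3: Fraction(2, 8), 5: Fraction(1, 8)}
grouped_mean = expectation(grouped)
```

Here `die_mean` is $7/2$ and `grouped_mean` is $2$, matching the two averages computed by hand.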

-

$X \sim \text{Bern}(p)$

: $E(X) = 1\cdot P(X=1) + 0\cdot P(X=0) = p$

-

$X = \begin{cases} 1, & \text{if } A \text{ occurs} \\ 0, & \text{otherwise} \end{cases}$ (indicator r.v.)

-

Then $E(X) = P(A)$ (the fundamental bridge).

→ $X$ can be thought of as an indicator random variable.
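As a small sanity check of the fundamental bridge, the sketch below enumerates a finite sample space and compares the expectation of an indicator with the probability of its event. The two-dice sample space and the event "the sum is 7" are illustrative assumptions, not an example from the notes.

```python
from fractions import Fraction
from itertools import product

# Assumed sample space: two fair dice, 36 equally likely outcomes.
space = list(product(range(1, 7), repeat=2))

def event_a(s):
    """Event A: the two dice sum to 7."""
    return s[0] + s[1] == 7

def indicator_a(s):
    """Indicator r.v. of A: 1 if A occurs, 0 otherwise."""
    return 1 if event_a(s) else 0

# P(A) by counting favourable outcomes.
p_a = Fraction(sum(1 for s in space if event_a(s)), len(space))

# E(I_A) by the definition of expectation over the sample space.
e_indicator = sum(Fraction(indicator_a(s), len(space)) for s in space)
```

Both `p_a` and `e_indicator` come out to $1/6$.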

-

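$X \sim \text{Bin}(n,p)$

: $E(X) = \sum_{k=0}^{n} k \binom{n}{k} p^{k} q^{n-k}$

$= \sum_{k=1}^{n} n \binom{n-1}{k-1} p^{k} q^{n-k}$, using $k\binom{n}{k} = n\binom{n-1}{k-1}$

$= np \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1} q^{n-k}$

$= np \sum_{j=0}^{n-1} \binom{n-1}{j} p^{j} q^{n-1-j}$ (substituting $j = k-1$, $k = j+1$)

$= np$, since the remaining sum is $(p+q)^{n-1} = 1$ by the binomial theorem.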
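The result $E(X) = np$ can be checked numerically by summing the defining series directly. The following is a minimal sketch; the function name and the test values $n = 10$, $p = 3/10$ are arbitrary choices for illustration.

```python
from fractions import Fraction
from math import comb

def binomial_mean_by_definition(n, p):
    """Compute E(X) for X ~ Bin(n, p) as sum_k k * C(n, k) * p^k * q^(n-k)."""
    q = 1 - p
    return sum(k * comb(n, k) * p**k * q**(n - k) for k in range(n + 1))

n, p = 10, Fraction(3, 10)
direct = binomial_mean_by_definition(n, p)  # term-by-term sum
closed_form = n * p                          # the np formula
```

With exact fractions, `direct` and `closed_form` agree exactly (both equal 3 here), with no floating-point tolerance needed.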

-

Linearity

: $E(X+Y) = E(X) + E(Y)$

- even if $X$, $Y$ are dependent

: $E(cX) = cE(X)$
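To see that linearity does not need independence, the sketch below uses two random variables built from the same die roll, so one is completely determined by the other. The choice $Y = X^2$ and the helper name `expect` are assumptions made for the example.

```python
from fractions import Fraction

# Sample space: one fair die roll, each outcome with probability 1/6.
outcomes = range(1, 7)
prob = Fraction(1, 6)

def expect(g):
    """Expectation of g(outcome) over the fair-die sample space."""
    return sum(g(s) * prob for s in outcomes)

def x(s):
    return s          # the face shown

def y(s):
    return s * s      # a function of X, so X and Y are dependent

lhs = expect(lambda s: x(s) + y(s))   # E(X + Y)
rhs = expect(x) + expect(y)           # E(X) + E(Y)
scaled = expect(lambda s: 3 * x(s))   # E(3X)
```

Both `lhs` and `rhs` equal $56/3$, and `scaled` equals $3E(X) = 21/2$.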

-

Redo Bin: write $X = X_1 + \dots + X_n$ with $X_j \sim \text{Bern}(p)$; by linearity, $E(X) = np$.

-

Ex. 5-card hand, $X$ = (# of aces).

-

Let $X_j$ be the indicator of the $j$th card being an ace, $1 \le j \le 5$

-

$E(X) = E(X_1 + \dots + X_5) = E(X_1) + \dots + E(X_5) = 5E(X_1)$ (by symmetry)

$= 5P(\text{1st card is an ace}) = \frac{5}{13}$ (by the fundamental bridge), even though the $X_j$'s are dependent.
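The indicator argument can be cross-checked against a direct sum over the Hypergeometric PMF. This is a sketch under the usual assumptions of a standard 52-card deck with 4 aces; the function name and default parameters are illustrative.

```python
from fractions import Fraction
from math import comb

def expected_aces(hand_size=5, aces=4, deck=52):
    """E(# aces) computed term by term from the Hypergeometric PMF.

    P(X = k) = C(aces, k) * C(deck - aces, hand_size - k) / C(deck, hand_size)
    """
    total_hands = comb(deck, hand_size)
    return sum(
        Fraction(k * comb(aces, k) * comb(deck - aces, hand_size - k), total_hands)
        for k in range(min(aces, hand_size) + 1)
    )

by_pmf = expected_aces()                 # direct sum over k = 0..4
by_indicators = 5 * Fraction(4, 52)      # 5 * P(1st card is an ace)
```

Both values equal $5/13$. The indicator route needs no PMF at all, which is why it extends to any Hypergeometric.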

-

This gives expected value of any Hypergeometric.

-

Geom(p)

: indep. Bern(p) trials, count # failures before 1st success.

ex. F F F F F S : $P(X=5) = q^{5}p$

-

Let $X \sim \text{Geom}(p)$, $q = 1-p$.

-

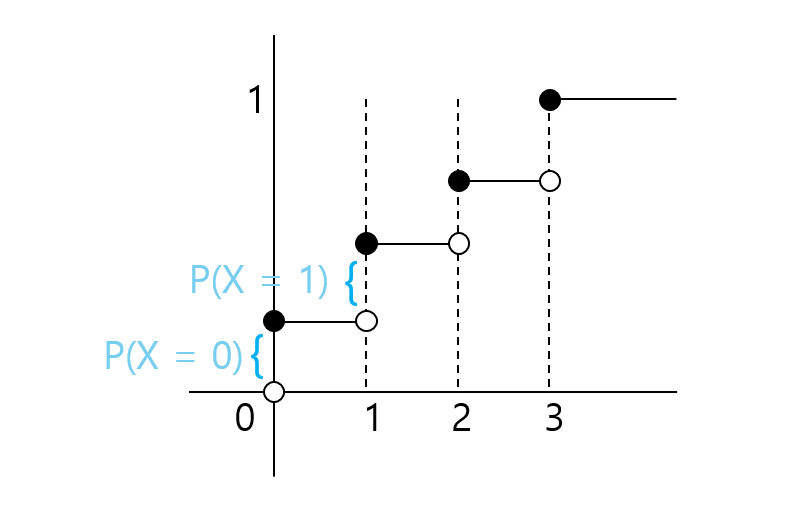

PMF: $P(X=k) = q^{k}p$, $k \in \{0, 1, 2, \dots\}$

valid since $\sum_{k=0}^{\infty} pq^{k} = p\sum_{k=0}^{\infty} q^{k} = \frac{p}{1-q} = 1$ (geometric series)

-

$X \sim \text{Geom}(p)$

: $E(X) = \sum_{k=0}^{\infty} k\,p\,q^{k}$

-

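cf. $\sum_{k=0}^{\infty} q^{k} = \frac{1}{1-q}$ ← take the derivative with respect to $q$

$\Rightarrow \sum_{k=0}^{\infty} kq^{k-1} = \frac{1}{(1-q)^{2}}$

$\Rightarrow \sum_{k=0}^{\infty} kq^{k} = \frac{q}{(1-q)^{2}}$ (multiplying by $q$)

$\Rightarrow E(X) = p\cdot\frac{q}{(1-q)^{2}} = \frac{pq}{p^{2}} = \frac{q}{p}$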
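Multiplying the last series by $p$ suggests $E(X) = \frac{pq}{(1-q)^2} = \frac{q}{p}$. The sketch below checks this numerically with truncated sums. The truncation point of 2000 terms and the value $p = 0.25$ are arbitrary assumptions, chosen so the neglected tail is far below floating-point precision.

```python
def geometric_partial_sums(p, terms=2000):
    """Return (sum of PMF, sum of k * PMF) over k = 0..terms-1 for Geom(p).

    Uses the convention from the notes: X counts failures before the
    first success, so P(X = k) = q^k * p for k = 0, 1, 2, ...
    """
    q = 1 - p
    total_prob = 0.0
    mean = 0.0
    weight = p  # P(X = 0) = p
    for k in range(terms):
        total_prob += weight
        mean += k * weight
        weight *= q  # P(X = k + 1) = q * P(X = k)
    return total_prob, mean

p = 0.25
total_prob, mean = geometric_partial_sums(p)
expected = (1 - p) / p  # q / p
```

For this `p`, `total_prob` is numerically 1 and `mean` agrees with `expected` (which is 3) to within rounding error.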

-

Story Proof