Import

    import torch
    import torch.nn.functional as F

Softmax

Convert numbers to probabilities with softmax.

- PyTorch has a softmax function, F.softmax().

    z = torch.FloatTensor([1, 2, 3])
    h = F.softmax(z, dim=0)  # dim=0: normalize along dimension 0, so the entries sum to 1
    # > tensor([0.0900, 0.2447, 0.6652])

    # h[0] equals exp(1) divided by the sum of exp(1), exp(2), and exp(3)
    h0 = torch.exp(torch.FloatTensor([1])) / (
        torch.exp(torch.FloatTensor([1]))
        + torch.exp(torch.FloatTensor([2]))
        + torch.exp(torch.FloatTensor([3]))
    )
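As a quick sanity check of the softmax definition, the sketch below computes every entry by hand and compares the result with F.softmax(). The names manual and builtin are illustrative and do not appear in the original notes.

```python
import torch
import torch.nn.functional as F

z = torch.tensor([1.0, 2.0, 3.0])

# Softmax by definition: exponentiate each score, then divide by the sum of exponentials
manual = torch.exp(z) / torch.exp(z).sum()
builtin = F.softmax(z, dim=0)

print(manual)
print(torch.allclose(manual, builtin))  # the two results should agree
print(builtin.sum())                    # probabilities sum to 1 (up to rounding)
```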

Cross Entropy Loss

For multi-class classification, we use the cross entropy loss.

$$L = \frac{1}{N} \sum -y \log(\hat{y})$$

where $\hat{y}$ is the predicted probability and $y$ is the correct probability (0 or 1).
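To make the loss concrete for a single sample, here is a minimal sketch that reuses the scores [1, 2, 3] from the softmax section. The choice of class index 2 as the correct class is an assumption made only for illustration.

```python
import torch
import torch.nn.functional as F

# Scores for one sample with three classes
z = torch.tensor([1.0, 2.0, 3.0])
y_hat = F.softmax(z, dim=0)  # predicted probabilities

# Assumed for illustration: the correct class is index 2, so y = [0, 0, 1]
y = torch.tensor([0.0, 0.0, 1.0])

# Only the term for the correct class survives the sum
loss = (-y * torch.log(y_hat)).sum()
print(loss)  # approximately 0.4076, i.e. -log(0.6652)
```

Because y is zero everywhere except the correct class, the loss reduces to the negative log of the probability assigned to that class.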

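    z = torch.rand(3, 5, requires_grad=True)
    h2 = F.softmax(z, dim=1)

    # Random class labels, so the values differ from run to run
    y = torch.randint(5, (3,)).long()
    # > tensor([4, 2, 0])

    # One-hot encode the labels: write a 1 at column y[i] of each row i
    y_one_hot = torch.zeros_like(h2)
    y_one_hot.scatter_(1, y.unsqueeze(1), 1)
    # > tensor([[0., 0., 0., 0., 1.],
    #           [0., 0., 1., 0., 0.],
    #           [1., 0., 0., 0., 0.]])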
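The scatter_ call above is one way to build the one-hot matrix. As an alternative sketch, F.one_hot() produces the same encoding. The labels are fixed to [4, 2, 0] here (an assumption, chosen so the result is reproducible), and num_classes is an illustrative variable name.

```python
import torch
import torch.nn.functional as F

y = torch.tensor([4, 2, 0])  # fixed labels instead of random ones
num_classes = 5

# scatter_-based encoding: write a 1 at column y[i] of row i
scatter_one_hot = torch.zeros(len(y), num_classes)
scatter_one_hot.scatter_(1, y.unsqueeze(1), 1)

# F.one_hot returns an integer tensor, so cast to float before using it in a loss
builtin_one_hot = F.one_hot(y, num_classes=num_classes).float()

print(builtin_one_hot)
print(torch.equal(scatter_one_hot, builtin_one_hot))
```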

- PyTorch has an F.log_softmax() function.

    # Low level
    torch.log(F.softmax(z, dim=1))

    # High level: PyTorch provides a log_softmax function
    F.log_softmax(z, dim=1)
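Both forms compute the same quantity mathematically, but they can behave differently in floating point. The sketch below uses deliberately extreme scores (an assumption, chosen only to trigger the effect) to show the low-level form producing -inf where F.log_softmax() stays finite.

```python
import torch
import torch.nn.functional as F

# Extreme scores: the softmax probabilities for the smaller entries underflow to 0
z = torch.tensor([[1000.0, 0.0, -1000.0]])

naive = torch.log(F.softmax(z, dim=1))   # log(0) yields -inf
stable = F.log_softmax(z, dim=1)         # computed without forming the tiny probabilities

print(naive)
print(stable)
```

This is one practical reason to prefer the high-level function when the log-probabilities feed into a loss.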

- PyTorch also has an F.nll_loss() function that computes the negative log likelihood loss.

    # Low level
    cost_low_level = (y_one_hot * -torch.log(F.softmax(z, dim=1))).sum(dim=1).mean()
    # > tensor(1.7288, grad_fn=<MeanBackward0>)

    # High level (NLL: Negative Log Likelihood)
    cost_high_level = F.nll_loss(F.log_softmax(z, dim=1), y)
    # > tensor(1.7288, grad_fn=<NllLossBackward0>)
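One way to read F.nll_loss() with class-index targets: for each row it picks the log-probability at the target index, negates it, and averages over the batch. The sketch below reproduces that by explicit indexing. The seed and the names picked and cost_by_indexing are assumptions for illustration.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)  # assumed seed, only so the run is repeatable
z = torch.rand(3, 5, requires_grad=True)
y = torch.randint(5, (3,))

log_probs = F.log_softmax(z, dim=1)

# Pick log_probs[i, y[i]] for each row i, then negate and average
picked = log_probs.gather(1, y.unsqueeze(1)).squeeze(1)
cost_by_indexing = -picked.mean()

cost_nll = F.nll_loss(log_probs, y)
print(torch.allclose(cost_by_indexing, cost_nll))
```

This also explains why no one-hot matrix is needed in the high-level version: the index y already says which entry to keep.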

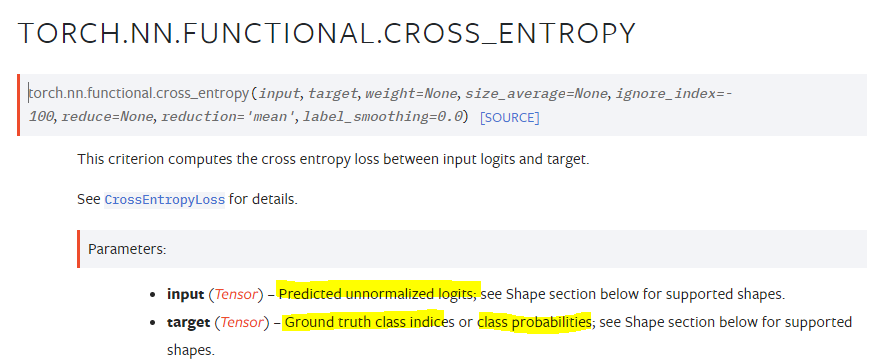

- PyTorch also has F.cross_entropy(), which combines F.log_softmax() and F.nll_loss().

    # F.cross_entropy = F.log_softmax() + F.nll_loss()
    F.cross_entropy(z, y)
    # > tensor(1.7288, grad_fn=<NllLossBackward0>)
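Since F.cross_entropy() applies log_softmax internally, it expects raw scores rather than probabilities. The sketch below, using small fixed inputs chosen for illustration, checks the equivalence with the two-step version and shows that passing softmax output changes the result.

```python
import torch
import torch.nn.functional as F

# Fixed scores and labels (illustrative values)
z = torch.tensor([[1.0, 2.0, 3.0],
                  [1.0, 2.0, 3.0]])
y = torch.tensor([2, 0])

correct = F.cross_entropy(z, y)
two_step = F.nll_loss(F.log_softmax(z, dim=1), y)

# Mistake: softmax ends up applied twice, once here and once inside cross_entropy
double_softmax = F.cross_entropy(F.softmax(z, dim=1), y)

print(torch.allclose(correct, two_step))        # same loss
print(torch.allclose(correct, double_softmax))  # different loss
```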

reference : https://velog.io/@och9854/06-1.-Softmax-Classification