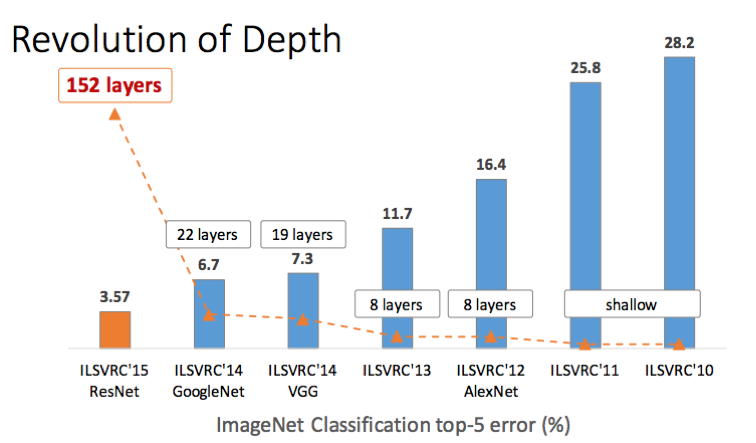

- Won the ILSVRC in 2015

- An algorithm developed at Microsoft (by a team of Chinese researchers)

- Compared with GoogLeNet from 2014 (22 layers), ResNet has 152 layers

The figure shows that the Top-5 error has dropped as networks have become deeper.

Q. Does performance keep improving as a neural network gets deeper?

No. A deeper 56-layer network performs worse than a 20-layer network.

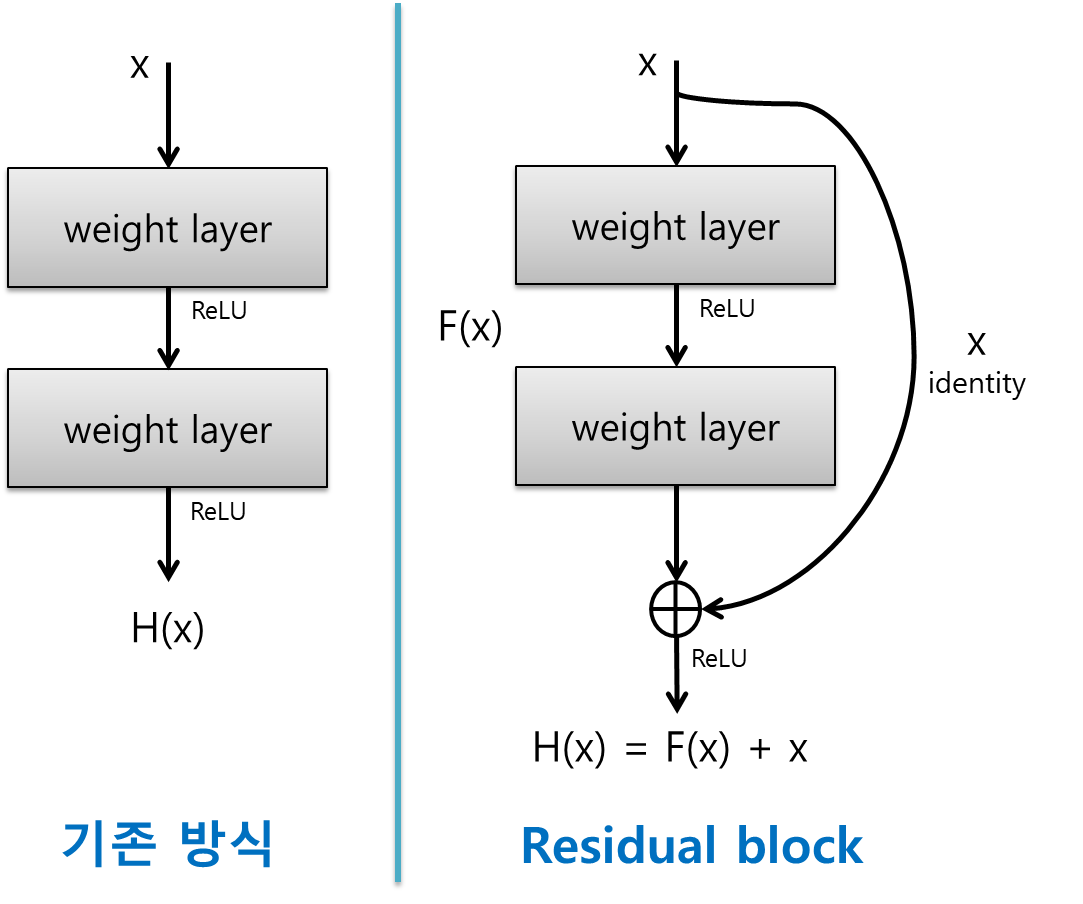

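Residual Block

Shortcut connections are used to solve the problem that arises as neural networks get deeper.

The block outputs H(x) = F(x) + x, and the goal is to minimize F(x) (drive it close to 0).

- F(x) = H(x) - x

- Minimizing F(x)

- means minimizing H(x) - x.

Origin of the name ResNet

H(x) - x is the residual, and the network is trained to make this residual as small as possible.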
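To make the residual formulation concrete, here is a minimal pure-Python sketch (it is not the Keras implementation shown later in these notes). The names `residual_block`, `zero_branch`, and `small_branch` are illustrative assumptions. It shows that when the learned branch F outputs zeros, the block reduces to the identity mapping H(x) = x, and that H(x) - x recovers F(x).

```python
from typing import Callable, List

Vector = List[float]


def residual_block(x: Vector, f: Callable[[Vector], Vector]) -> Vector:
    """Return H(x) = F(x) + x, where f plays the role of the learned branch F."""
    fx = f(x)
    if len(fx) != len(x):
        raise ValueError("F(x) and x must have the same shape to be added")
    return [fi + xi for fi, xi in zip(fx, x)]


def zero_branch(x: Vector) -> Vector:
    """A branch whose output has been driven to zero: F(x) = 0."""
    return [0.0 for _ in x]


def small_branch(x: Vector) -> Vector:
    """A branch that contributes only a small correction to its input."""
    return [0.1 * xi for xi in x]


x = [1.0, -2.0, 3.0]

# With F(x) = 0 the block passes its input through unchanged.
identity_out = residual_block(x, zero_branch)

# With a small F(x) the output stays close to x.
corrected_out = residual_block(x, small_branch)

# The residual H(x) - x is exactly what the branch F produced (up to rounding).
residual = [h - xi for h, xi in zip(corrected_out, x)]
```

Because a block with F(x) = 0 behaves like an identity layer, stacking more such blocks should, in principle, not make a deeper network worse than its shallower counterpart.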

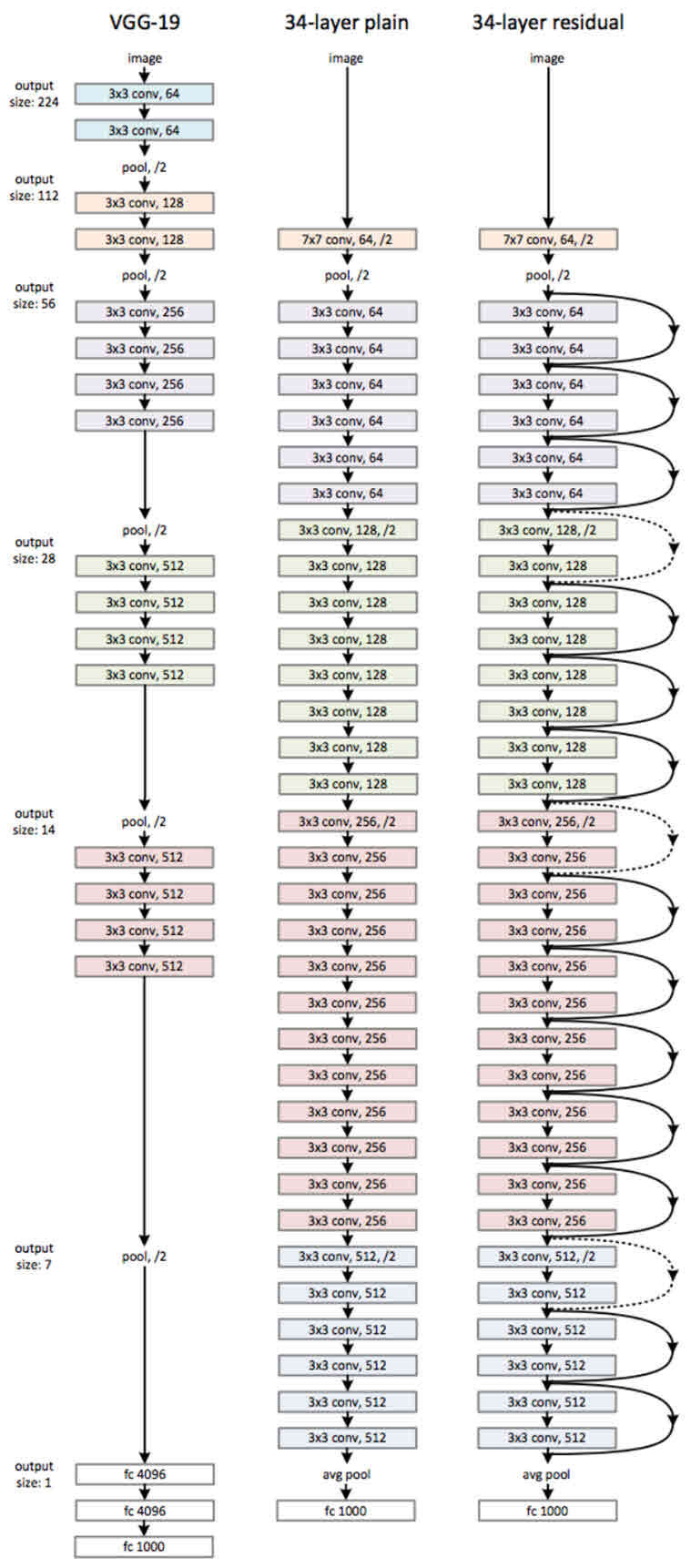

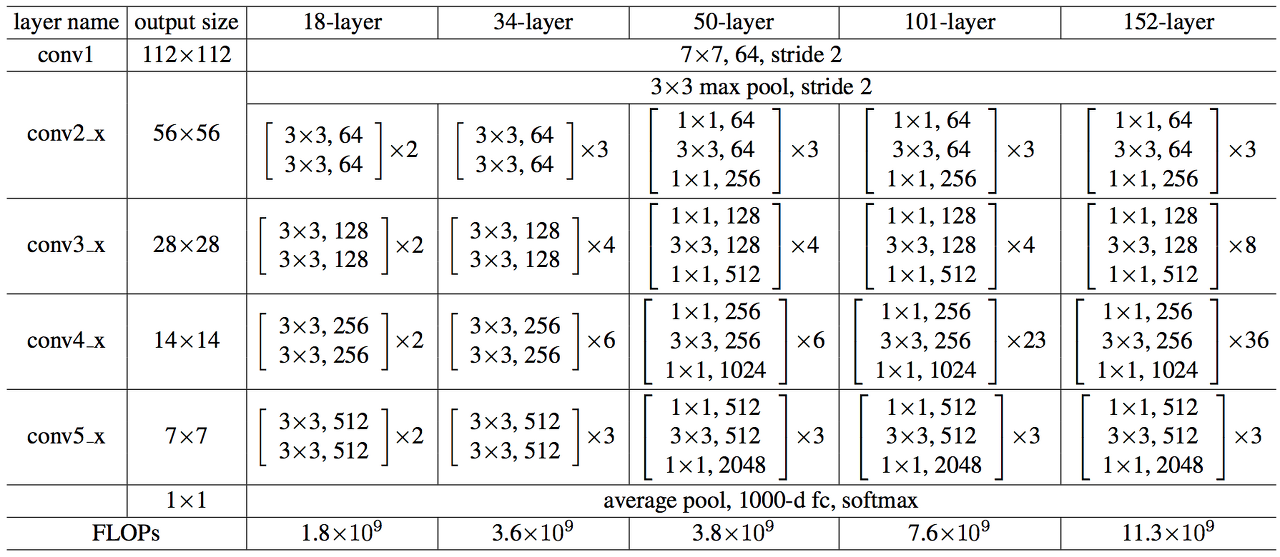

Structure of ResNet

- ResNet basically uses the VGG-19 architecture as its backbone

- Convolution layers are added to make the network deeper, and then shortcuts are added
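The recipe above can be sketched as a block plan. This pure-Python sketch assumes the usual ResNet-34 layout of 3, 4, 6, and 3 two-convolution blocks with 64, 128, 256, and 512 filters; `STAGES` and `plan_blocks` are hypothetical names introduced only for illustration. It marks where a plain identity shortcut is enough and where a 1x1 projection shortcut is needed because the stride or the channel count changes.

```python
from typing import Dict, List, Tuple

# Assumed ResNet-34 layout: (number of residual blocks, filters) per stage.
STAGES: List[Tuple[int, int]] = [(3, 64), (4, 128), (6, 256), (3, 512)]


def plan_blocks(
    stages: List[Tuple[int, int]] = STAGES, stem_filters: int = 64
) -> List[Dict[str, object]]:
    """List every residual block with its stride and shortcut type."""
    plan: List[Dict[str, object]] = []
    in_filters = stem_filters
    for stage_idx, (num_blocks, filters) in enumerate(stages):
        for block_idx in range(num_blocks):
            # Only the first block of each later stage halves the feature map.
            stride = 2 if (stage_idx > 0 and block_idx == 0) else 1
            # x can be added to F(x) directly only if both shapes match.
            needs_projection = stride != 1 or in_filters != filters
            plan.append({
                "filters": filters,
                "stride": stride,
                "shortcut": "projection" if needs_projection else "identity",
            })
            in_filters = filters
    return plan


plan = plan_blocks()
projection_filters = [b["filters"] for b in plan if b["shortcut"] == "projection"]
for block in plan:
    print(block)
```

Under these assumptions, every block other than the first one in the 128-, 256-, and 512-filter stages keeps stride 1 and uses an identity shortcut.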

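ResNet-34 Code

from tensorflow import keras  # use `import keras` instead for standalone Keras

res_input_layer = keras.layers.Input(shape=(32,32,3))  # 32x32 RGB input

x = res_input_layer

name = 'resnet_34'  # model name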

x = keras.layers.ZeroPadding2D(padding = (3,3))(x)

x = keras.layers.Conv2D(64, (7,7), strides=(2,2), padding='valid')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

#--------------------stage 1 : max pool / 3x3, conv, 64 (ResNet-34 has a single 7x7, conv, 64 stem, applied above)---

x = keras.layers.MaxPool2D(pool_size=(2,2), strides=2)(x)

shortcut = x

x = keras.layers.Conv2D(64, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

#--------------------stage 2 : 3x3, conv, 64-------------------------------------------------------------------

x = keras.layers.Conv2D(64, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(64, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(64, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(64, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(64, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

#--------------------stage 3 : 3x3, conv, 128------------------------------------------------------------------

shortcut = keras.layers.Conv2D(128, (1,1), padding='same', strides=(2,2))(x)

shortcut = keras.layers.BatchNormalization()(shortcut)

x = keras.layers.Conv2D(128, (3,3), padding='same', strides=(2,2))(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(128, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(128, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(128, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(128, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(128, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(128, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(128, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

#--------------------stage 4 : 3x3, conv, 256------------------------------------------------------------------

shortcut = keras.layers.Conv2D(256, (1,1), padding='same', strides=(2,2))(x)

shortcut = keras.layers.BatchNormalization()(shortcut)

x = keras.layers.Conv2D(256, (3,3), padding='same', strides=(2,2))(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)  # stride 1: the identity shortcut must keep the same shape

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)  # stride 1: the identity shortcut must keep the same shape

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)  # stride 1: the identity shortcut must keep the same shape

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)  # stride 1: the identity shortcut must keep the same shape

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)  # stride 1: the identity shortcut must keep the same shape

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(256, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

#--------------------stage 5 : 3x3, conv, 512------------------------------------------------------------------

shortcut = keras.layers.Conv2D(512, (1,1), padding='same', strides=(2,2))(x)

shortcut = keras.layers.BatchNormalization()(shortcut)

x = keras.layers.Conv2D(512, (3,3), padding='same', strides=(2,2))(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(512, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(512, (3,3), padding='same')(x)  # stride 1: the identity shortcut must keep the same shape

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(512, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(512, (3,3), padding='same')(x)  # stride 1: the identity shortcut must keep the same shape

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Activation('relu')(x)

x = keras.layers.Conv2D(512, (3,3), padding='same')(x)

x = keras.layers.BatchNormalization()(x)

x = keras.layers.Add()([x, shortcut])

shortcut = x

x = keras.layers.Activation('relu')(x)

#-------------------------------------------------------------------------------------------------

x = keras.layers.GlobalAveragePooling2D()(x)

x = keras.layers.Flatten()(x)

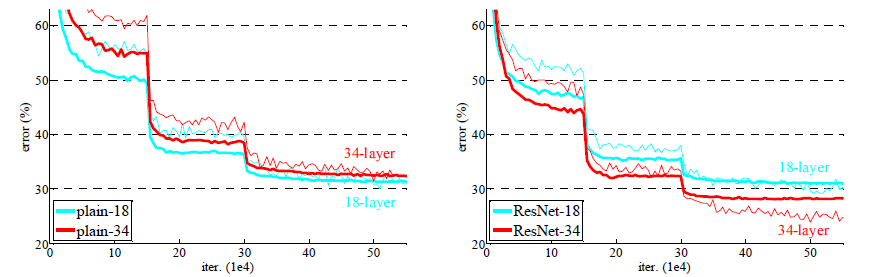

x = keras.layers.Dense(10, activation='softmax')(x)이미지넷에서 18층 및 34층의 plain 네트워크와 ResNet의 성능을 비교

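- For the plain networks, the error actually increases as the network gets deeper

- The 34-layer plain network performs worse than the 18-layer plain network

- For the ResNets in the right-hand graph, the error decreases as the network gets deeper

- This demonstrates the effect of adding shortcuts so that training minimizes the residual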

[Table below] ResNet configurations with 18, 34, 50, 101, and 152 layers

The 152-layer ResNet performs best.
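As a quick check on where the depth figures come from, the sketch below counts weighted layers for each variant. It assumes the per-stage block counts reported in the original ResNet paper (two 3x3 convolutions per block for the 18- and 34-layer networks, three-convolution bottleneck blocks for the deeper ones), and it counts the stem convolution and the final fully connected layer but not projection shortcuts. `CONFIGS` and `count_layers` are illustrative names, not part of any library.

```python
from typing import Dict, List, Tuple

# depth -> (residual blocks per stage, convolutions per block); assumed from the ResNet paper
CONFIGS: Dict[int, Tuple[List[int], int]] = {
    18: ([2, 2, 2, 2], 2),
    34: ([3, 4, 6, 3], 2),
    50: ([3, 4, 6, 3], 3),
    101: ([3, 4, 23, 3], 3),
    152: ([3, 8, 36, 3], 3),
}


def count_layers(blocks_per_stage: List[int], convs_per_block: int) -> int:
    """Stem conv + convolutions inside all residual blocks + final dense layer."""
    stem_conv = 1
    dense = 1
    return stem_conv + convs_per_block * sum(blocks_per_stage) + dense


depths = {name: count_layers(blocks, convs) for name, (blocks, convs) in CONFIGS.items()}

for name, depth in depths.items():
    print(f"ResNet-{name}: {depth} weighted layers")
```

Under these assumptions the computed depth matches each network's name, which is how the 34 in ResNet-34 relates to the 16 residual blocks in the listing above.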