🧩 Getting the TensorFlow Certification - Part 3. Practice (Fashion MNIST)


Fashion MNIST

- An image classification problem solved with fully connected (Dense) layers

Create a classifier for the Fashion MNIST dataset.

Note that the test will expect it to classify 10 classes and that the input shape should be the native size of the Fashion MNIST dataset, which is 28x28 monochrome.

Do not resize the data. Your input layer should accept (28,28) as the input shape only.

If you amend this, the tests will fail.

In other words, the test expects a Fashion MNIST classifier that distinguishes 10 classes, and the input must keep the dataset's native size: 28x28, monochrome.

Do not resize the data. The input layer must use (28, 28), and only that, as its input_shape.

Solution

1. Import

Import the required modules.

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.models import Sequential
from tensorflow.keras.callbacks import ModelCheckpoint

2. Load dataset

tf.keras.datasets provides a variety of sample datasets.

fashion_mnist = tf.keras.datasets.fashion_mnist
(x_train, y_train), (x_valid, y_valid) = fashion_mnist.load_data()

x_train.shape, x_valid.shape  # ((60000, 28, 28), (10000, 28, 28))
y_train.shape, y_valid.shape  # ((60000,), (10000,))

3. Image normalization

- Scale every pixel value of every image into the range 0 to 1.

- Normalize only x_train and x_valid; the labels (y_train, y_valid) stay as they are.
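As a small illustration of the scaling described above, the sketch below divides a made-up 2x3 grid of pixel intensities by 255. It uses plain Python lists instead of NumPy arrays purely so that it runs without any dependencies; `tiny_image` and `scale_pixels` are hypothetical names and are not part of the original solution.

```python
# Hypothetical 2x3 "image" with 8-bit pixel intensities (0..255).
tiny_image = [
    [0, 51, 102],
    [153, 204, 255],
]


def scale_pixels(image, max_value=255.0):
    """Return a copy of `image` with every pixel divided by `max_value`."""
    return [[pixel / max_value for pixel in row] for row in image]


scaled = scale_pixels(tiny_image)

# Flatten the rows to inspect the value range before and after scaling.
before = [p for row in tiny_image for p in row]
after = [p for row in scaled for p in row]

print(min(before), max(before))  # 0 255
print(min(after), max(after))    # 0.0 1.0
```

With the real dataset the same effect comes from dividing the whole NumPy array by 255.0 in a single expression, which is what the solution does.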

Check the minimum and maximum values before normalization.

x_train.min(), x_train.max()  # (0, 255)

Normalize.

x_train = x_train / 255.0
x_valid = x_valid / 255.0

Check the minimum and maximum values after normalization.

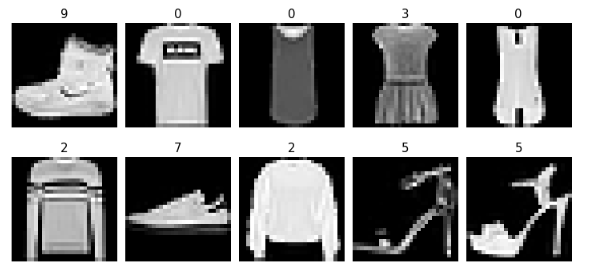

x_train.min(), x_train.max()  # (0.0, 1.0)

Sample data visualization

# Visualize the first 10 training images in a 2x5 grid
fig, axes = plt.subplots(2, 5)
fig.set_size_inches(10, 5)

for i in range(10):
    ax = axes[i // 5, i % 5]
    ax.imshow(x_train[i], cmap='gray')
    ax.set_title(str(y_train[i]), fontsize=15)
    plt.setp(ax.get_xticklabels(), visible=False)
    plt.setp(ax.get_yticklabels(), visible=False)
    ax.axis('off')

plt.tight_layout()
plt.show()

- 0: T-shirt/top

- 1: Trouser

- 2: Pullover (a type of sweater)

- 3: Dress

- 4: Coat

- 5: Sandal

- 6: Shirt

- 7: Sneaker

- 8: Bag

- 9: Ankle boot
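The label table above can be kept in code as a simple lookup list, which is handy for giving plots readable titles instead of bare numbers. This is only a sketch: `CLASS_NAMES` and `label_to_name` are hypothetical names introduced here, not something the dataset loader provides.

```python
# Class names in label order, as listed above (index == label).
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def label_to_name(label):
    """Map an integer label (0..9) to its class name."""
    return CLASS_NAMES[int(label)]


print(label_to_name(9))  # Ankle boot
print(label_to_name(0))  # T-shirt/top
```

In the visualization code, something like `label_to_name(y_train[i])` could then replace `str(y_train[i])` as the subplot title.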

Activation functions (relu, sigmoid, softmax)
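To make the three activation functions concrete, here is a minimal pure-Python sketch of what each one computes. These are illustrative re-implementations written for this note, not the Keras versions; in the model itself they are selected by name through the `activation` argument.

```python
import math


def relu(x):
    """ReLU: pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def sigmoid(x):
    """Sigmoid: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(values):
    """Softmax: turn a list of scores into probabilities that sum to 1."""
    # Subtracting the max keeps exp() from overflowing; it does not
    # change the result.
    shifted = [v - max(values) for v in values]
    exps = [math.exp(v) for v in shifted]
    total = sum(exps)
    return [e / total for e in exps]


print(relu(-2.0), relu(3.0))       # 0.0 3.0
print(sigmoid(0.0))                # 0.5
probs = softmax([1.0, 2.0, 3.0])
print(round(sum(probs), 6))        # 1.0
```

relu is used in the hidden layers, while softmax in the final layer yields one probability per class, which is why the output layer has 10 units.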

from IPython.display import Image
import numpy as np
import matplotlib.pyplot as plt

4. Define the model (Sequential)

model = Sequential([
    # Flatten each 28x28 image into a 784-element vector
    Flatten(input_shape=(28, 28)),
    # Dense layers
    Dense(1024, activation='relu'),
    Dense(512, activation='relu'),
    Dense(256, activation='relu'),
    Dense(128, activation='relu'),
    Dense(64, activation='relu'),
    # Softmax output for 10-class classification
    Dense(10, activation='softmax'),
])

5. Compile

For the optimizer, use 'adam', a widely used default that usually converges well without much tuning.

Choosing the loss

- If the output layer activation is sigmoid: binary_crossentropy

- If the output layer activation is softmax:
  - Labels are one-hot encoded: categorical_crossentropy
  - Labels are not one-hot encoded (integer labels): sparse_categorical_crossentropy
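The difference between the two softmax losses is only in how the label is encoded. The sketch below computes both for a single sample in plain Python and checks that they agree. The two functions are hypothetical helpers named after the Keras losses; they are not the Keras implementations and omit numerical safeguards such as clipping.

```python
import math


def sparse_categorical_crossentropy(label, probs):
    """Loss for one sample when the label is an integer class index."""
    return -math.log(probs[label])


def categorical_crossentropy(one_hot, probs):
    """Loss for one sample when the label is a one-hot vector."""
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs))


# Hypothetical softmax output over 3 classes; the true class is 1.
probs = [0.1, 0.7, 0.2]
sparse_loss = sparse_categorical_crossentropy(1, probs)
onehot_loss = categorical_crossentropy([0, 1, 0], probs)

# Both encodings describe the same target, so the losses agree.
print(math.isclose(sparse_loss, onehot_loss))  # True
```

Fashion MNIST labels come as integers from 0 to 9, so sparse_categorical_crossentropy fits without any extra encoding step.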

Setting metrics to 'acc' or 'accuracy' lets you monitor accuracy during training.

model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['acc'])

6. ModelCheckpoint: create a checkpoint

Create a ModelCheckpoint so that the best model, judged by val_loss at the end of each epoch, is saved.

checkpoint_path sets the file name the model weights are saved under. Declare the ModelCheckpoint and set the appropriate options.

# Note: Keras 3 (TensorFlow 2.16+) requires a weights-only path to end in ".weights.h5".
checkpoint_path = "my_checkpoint.ckpt"
checkpoint = ModelCheckpoint(filepath=checkpoint_path,
                             save_weights_only=True,
                             save_best_only=True,
                             monitor='val_loss',
                             verbose=1)

7. Train (fit)

- Always specify validation_data.

- Set epochs to a suitable value.

- Pass the checkpoint created just above to callbacks.

history = model.fit(x_train, y_train,
                    validation_data=(x_valid, y_valid),
                    epochs=20,
                    callbacks=[checkpoint],
                    )

8. Load weights after training (ModelCheckpoint)

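Once training is finished, be sure to call load_weights. After fit, the model holds the weights from the final epoch, which are not necessarily the best ones.

Otherwise, all the effort of setting up the ModelCheckpoint is wasted.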
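A toy illustration of why this matters: the loop below mimics, in plain Python, the decision that `save_best_only=True` with `monitor='val_loss'` makes at the end of each epoch. The loss values are invented for the example and are not results from this model.

```python
# Hypothetical validation losses, one per epoch (not real results).
val_losses = [0.52, 0.41, 0.38, 0.40, 0.45]

best_loss = float("inf")
best_epoch = None

for epoch, val_loss in enumerate(val_losses, start=1):
    # Mimics save_best_only=True with monitor='val_loss':
    # "save" only when the monitored value improves.
    if val_loss < best_loss:
        best_loss = val_loss
        best_epoch = epoch

last_epoch = len(val_losses)
print(best_epoch, best_loss)  # 3 0.38
print(last_epoch)             # 5
```

In this made-up run the checkpoint file would hold the epoch 3 weights, while the model in memory would hold the epoch 5 weights. Loading the checkpoint restores the better of the two.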

# Pass the file name the checkpoint was saved under.
model.load_weights(checkpoint_path)

If you want to validate the result:

model.evaluate(x_valid, y_valid)
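With `metrics=['acc']`, evaluate returns the loss followed by the accuracy. The accuracy figure can be understood as "how often the most probable class matches the label", which the following plain-Python sketch reproduces on made-up data. `argmax` and `accuracy` are hypothetical helpers written for this note, and the probabilities are invented.

```python
def argmax(values):
    """Index of the largest value in a list."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(y_true, y_prob):
    """Fraction of samples whose most probable class equals the label."""
    correct = sum(1 for t, p in zip(y_true, y_prob) if argmax(p) == t)
    return correct / len(y_true)


# Hypothetical labels and softmax outputs for 4 samples, 3 classes.
y_true = [0, 2, 1, 1]
y_prob = [
    [0.8, 0.1, 0.1],  # predicted 0, correct
    [0.2, 0.2, 0.6],  # predicted 2, correct
    [0.5, 0.4, 0.1],  # predicted 0, wrong
    [0.1, 0.7, 0.2],  # predicted 1, correct
]

print(accuracy(y_true, y_prob))  # 0.75
```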