cat and dog

import matplotlib.pyplot as plt

import os

import shutil

import zipfile

import tensorflow as tf

import numpy as np

import pandas as pd

data_zip_dir = '/Users/sok/Desktop/desktop/pytest/dogs-vs-cats'

train_zip_dir = os.path.join(data_zip_dir, 'train.zip')

test_zip_dir = os.path.join(data_zip_dir, 'test1.zip')

with zipfile.ZipFile(train_zip_dir, 'r') as z:
    z.extractall()

with zipfile.ZipFile(test_zip_dir, 'r') as z:
    z.extractall()

train_dir = os.path.join(os.getcwd(), 'train')

test_dir = os.path.join(os.getcwd(), 'test')

train_set_dir = os.path.join(train_dir, 'train_set')

os.mkdir(train_set_dir)

train_dog_dir = os.path.join(train_set_dir, 'dog')

os.mkdir(train_dog_dir)

train_cat_dir = os.path.join(train_set_dir, 'cat')

os.mkdir(train_cat_dir)

valid_set_dir = os.path.join(train_dir, 'valid_set')

os.mkdir(valid_set_dir)

valid_dog_dir = os.path.join(valid_set_dir, 'dog')

os.mkdir(valid_dog_dir)

valid_cat_dir = os.path.join(valid_set_dir, 'cat')

os.mkdir(valid_cat_dir)

test_set_dir = os.path.join(train_dir, 'test_set')

os.mkdir(test_set_dir)

test_dog_dir = os.path.join(test_set_dir, 'dog')

os.mkdir(test_dog_dir)

test_cat_dir = os.path.join(test_set_dir, 'cat')

os.mkdir(test_cat_dir)
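The `os.mkdir` calls above raise `FileExistsError` if the script is run a second time. A minimal sketch of the same directory layout built in a loop, assuming re-runs should be tolerated; `make_split_dirs` is a hypothetical helper that is not part of the original script, and the demonstration uses a temporary directory instead of the real `train` folder:

```python
import os
import tempfile

def make_split_dirs(base_dir, splits=('train_set', 'valid_set', 'test_set'),
                    classes=('dog', 'cat')):
    """Create base_dir/<split>/<class> for every combination and return the paths."""
    paths = {}
    for split in splits:
        for cls in classes:
            path = os.path.join(base_dir, split, cls)
            # exist_ok=True makes the call safe to repeat.
            os.makedirs(path, exist_ok=True)
            paths[(split, cls)] = path
    return paths

# Demonstration in a throwaway directory (hypothetical location).
demo_root = tempfile.mkdtemp()
demo_paths = make_split_dirs(demo_root)
demo_paths = make_split_dirs(demo_root)  # the second call does not raise
print(sorted(os.listdir(demo_root)))
```

With the real data, `make_split_dirs(train_dir)` would give the same six folders, for example `paths[('train_set', 'dog')]` in place of `train_dog_dir`.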

dog_files = [f'dog.{i}.jpg' for i in range(12500)]

cat_files = [f'cat.{i}.jpg' for i in range(12500)]

# Dogs: first 10,000 images for training, next 2,000 for validation, last 500 for testing.
for file in dog_files[:10000]:
    src = os.path.join(train_dir, file)
    dst = os.path.join(train_dog_dir, file)
    shutil.move(src, dst)

for file in dog_files[10000:12000]:
    src = os.path.join(train_dir, file)
    dst = os.path.join(valid_dog_dir, file)
    shutil.move(src, dst)

for file in dog_files[12000:12500]:
    src = os.path.join(train_dir, file)
    dst = os.path.join(test_dog_dir, file)
    shutil.move(src, dst)

# Cats: same 10,000 / 2,000 / 500 split as the dogs.
for file in cat_files[:10000]:
    src = os.path.join(train_dir, file)
    dst = os.path.join(train_cat_dir, file)
    shutil.move(src, dst)

for file in cat_files[10000:12000]:
    src = os.path.join(train_dir, file)
    dst = os.path.join(valid_cat_dir, file)
    shutil.move(src, dst)

for file in cat_files[12000:12500]:
    src = os.path.join(train_dir, file)
    dst = os.path.join(test_cat_dir, file)
    shutil.move(src, dst)
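The six loops above differ only in the slice and the destination folder. One way to express the same split once is sketched below; `split_and_move` is a hypothetical helper, and the demonstration runs on ten empty placeholder files in a temporary directory, not on the real images:

```python
import os
import shutil
import tempfile

def split_and_move(files, src_dir, dst_dirs, sizes):
    """Move consecutive slices of `files` so that sizes[i] files land in dst_dirs[i]."""
    start = 0
    for dst_dir, size in zip(dst_dirs, sizes):
        os.makedirs(dst_dir, exist_ok=True)
        for name in files[start:start + size]:
            shutil.move(os.path.join(src_dir, name), os.path.join(dst_dir, name))
        start += size

# Demonstration: ten empty placeholder files split 6 / 3 / 1.
demo_src = tempfile.mkdtemp()
demo_files = [f'dog.{i}.jpg' for i in range(10)]
for name in demo_files:
    open(os.path.join(demo_src, name), 'w').close()

demo_dsts = [os.path.join(demo_src, split, 'dog')
             for split in ('train_set', 'valid_set', 'test_set')]
split_and_move(demo_files, demo_src, demo_dsts, sizes=(6, 3, 1))
print([len(os.listdir(d)) for d in demo_dsts])
```

For the real data the equivalent call would use `sizes=(10000, 2000, 500)`, once with `dog_files` and once with `cat_files`.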

print(f'the number of train set : {len(os.listdir(train_dog_dir)) + len(os.listdir(train_cat_dir))}')

print(f'the number of validation set : {len(os.listdir(valid_dog_dir)) + len(os.listdir(valid_cat_dir))}')

print(f'the number of test set : {len(os.listdir(test_dog_dir)) + len(os.listdir(test_cat_dir))}')

train_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest')

valid_datagen = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1./255)

test_datagen = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    train_set_dir,
    target_size=(150, 150),
    batch_size=32,
    class_mode='binary')

valid_generator = valid_datagen.flow_from_directory(
    valid_set_dir,
    target_size=(150, 150),
    batch_size=32,
    class_mode='binary')

test_generator = test_datagen.flow_from_directory(
    test_set_dir,
    target_size=(150, 150),
    batch_size=32,
    class_mode='binary')

train_step = train_generator.n // 32

valid_step = valid_generator.n // 32

test_step = test_generator.n // 32
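The step counts above use floor division, so any images beyond the last full batch are skipped in each pass. A small worked example of that arithmetic, using the split sizes from the file-moving code (20,000 train, 4,000 validation, 1,000 test) and the batch size of 32; the variable names here are illustrative only:

```python
import math

batch_size = 32
split_sizes = {'train': 20000, 'valid': 4000, 'test': 1000}

step_counts = {}
for name, n in split_sizes.items():
    floor_steps = n // batch_size            # what the script computes
    ceil_steps = math.ceil(n / batch_size)   # would also cover a final partial batch
    skipped = n - floor_steps * batch_size   # images left out per pass with floor division
    step_counts[name] = (floor_steps, ceil_steps, skipped)
    print(name, floor_steps, ceil_steps, skipped)
# train 625 625 0
# valid 125 125 0
# test 31 32 8
```

Only the test split is affected: 31 steps cover 992 of the 1,000 images, so 8 are skipped unless the step count is rounded up.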

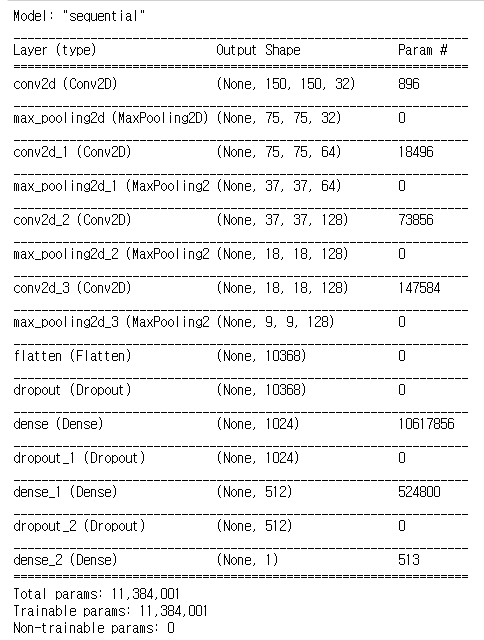

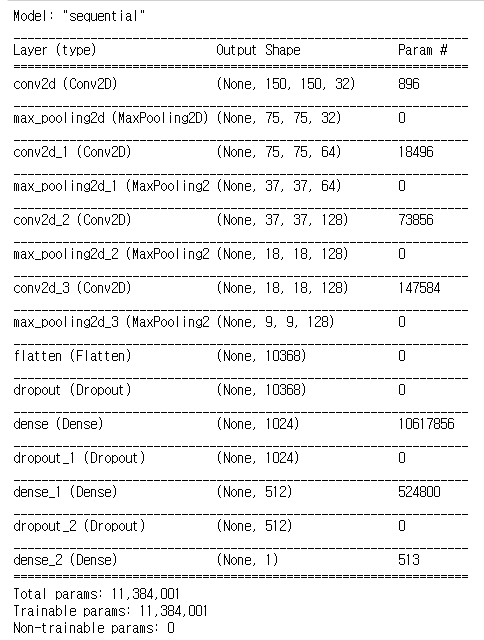

model = tf.keras.models.Sequential([
    tf.keras.layers.Input(shape=(150, 150, 3)),
    tf.keras.layers.Conv2D(filters=32, kernel_size=(3, 3), strides=1, padding='same', activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), strides=1, padding='same', activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=1, padding='same', activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=1, padding='same', activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(1024, activation='relu'),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(512, activation='relu'),
    tf.keras.layers.Dropout(0.1),
    tf.keras.layers.Dense(1, activation='sigmoid')
])

model.summary()
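The shapes and parameter counts that `model.summary()` reports can be checked by hand. The sketch below traces the architecture defined above in plain Python, assuming the Keras defaults for `MaxPooling2D` (stride equal to the pool size, `'valid'` padding); `trace_model` is a hypothetical helper, not a Keras function:

```python
def trace_model(height=150, width=150, channels=3,
                conv_filters=(32, 64, 128, 128), dense_units=(1024, 512, 1)):
    """Return (final feature-map shape, flattened size, total trainable parameters)."""
    params = 0
    for filters in conv_filters:
        # 3x3 kernel weights plus one bias per filter; 'same' padding keeps H and W.
        params += 3 * 3 * channels * filters + filters
        channels = filters
        # 2x2 max pooling with stride 2 halves H and W, rounding down.
        height, width = height // 2, width // 2
    flat = height * width * channels
    features = flat
    for units in dense_units:
        # Fully connected layer: weight matrix plus one bias per unit.
        params += features * units + units
        features = units
    return (height, width, channels), flat, params

final_shape, flat_size, total_params = trace_model()
print(final_shape, flat_size, total_params)
```

The spatial size goes 150, 75, 37, 18, 9, so `Flatten` produces 9 × 9 × 128 = 10,368 features, and the first `Dense(1024)` layer accounts for most of the model's parameters. Dropout, pooling, and flatten layers add none.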

model.compile(optimizer=tf.keras.optimizers.Adam(1e-3),
              loss='binary_crossentropy',
              metrics=['acc'])

# fit_generator is deprecated in TensorFlow 2.x; model.fit accepts generators directly.
model.fit(train_generator,
          steps_per_epoch=train_step,
          epochs=10,
          validation_data=valid_generator,
          validation_steps=valid_step)