머리말

이번 글에서는 TPC-H 벤치마크 데이터를 로드하고 Query 를 수행하는 예제를 보여드리고자 합니다.

로드할 테이블의 크기는 다음과 같습니다.

5억3천만건의 lineitem 및 1억5천만건의 orders 테이블이 큰 테이블 들입니다.

| 테이블명 | Rows |

|---|---|

| customer | 15,000,000 |

| nation | 25 |

| orders | 150,000,000 |

| part | 20,000,000 |

| partsupp | 80,000,000 |

| region | 5 |

| supplier | 1,000,000 |

| lineitem | 538,837,902 |

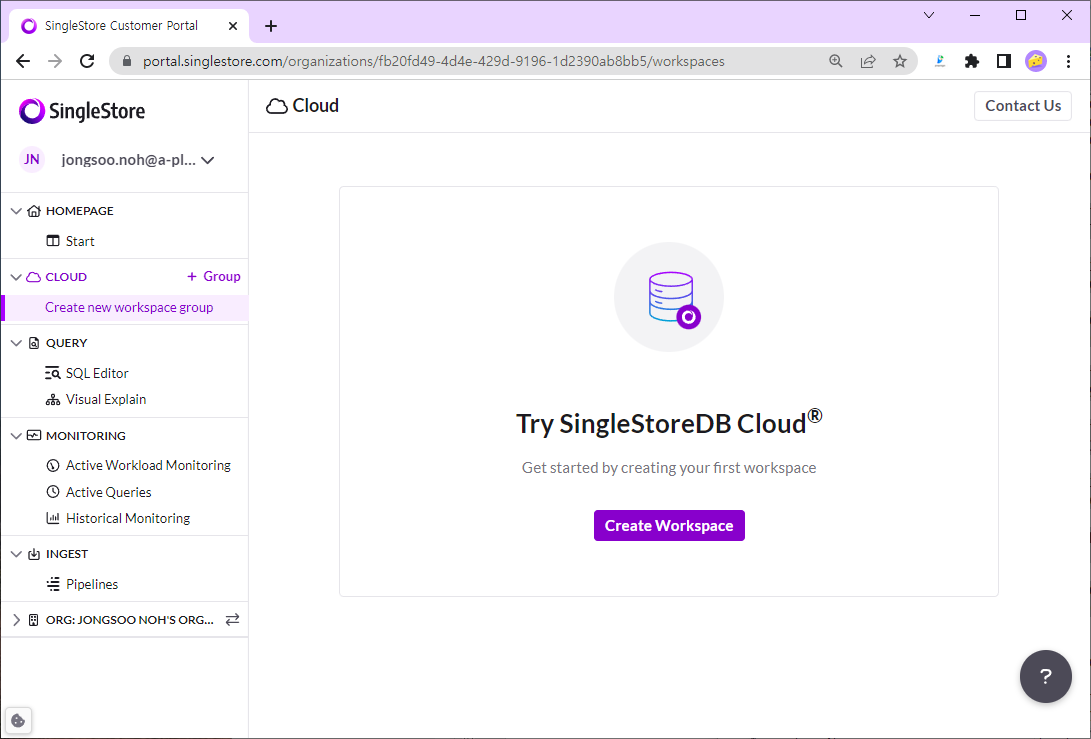

먼저 http://portal.singlestore.com 에 접속합니다.

간단한 가입 절차 후에는 500$ 상당의 credit 을 이용해서 SingleStoreDB Managed Cloud 기능을 사용하실 수 있습니다.

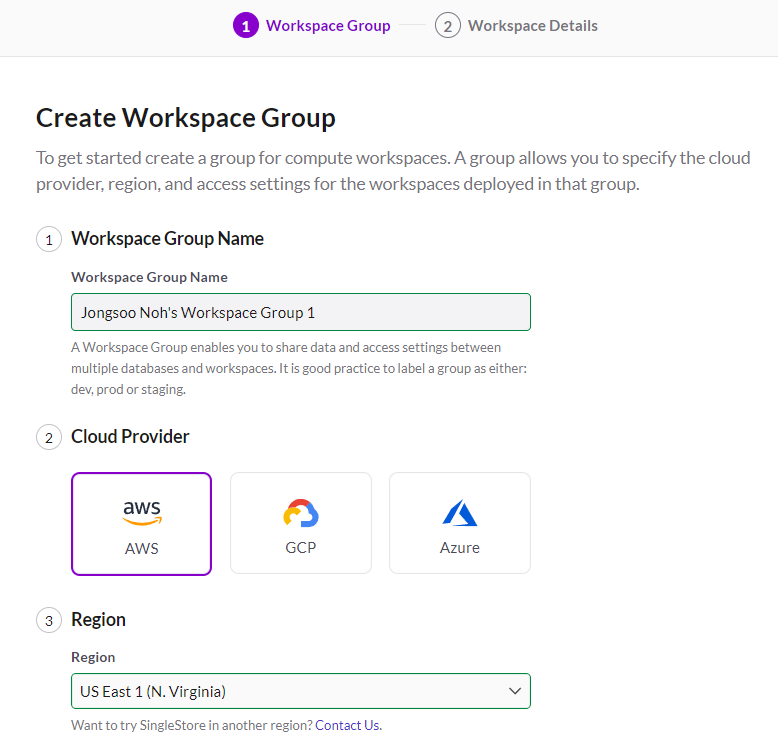

Workspace Group 생성

Workspace Group 은 US East 1(N. Virginia) 에 생성하시기 바랍니다.

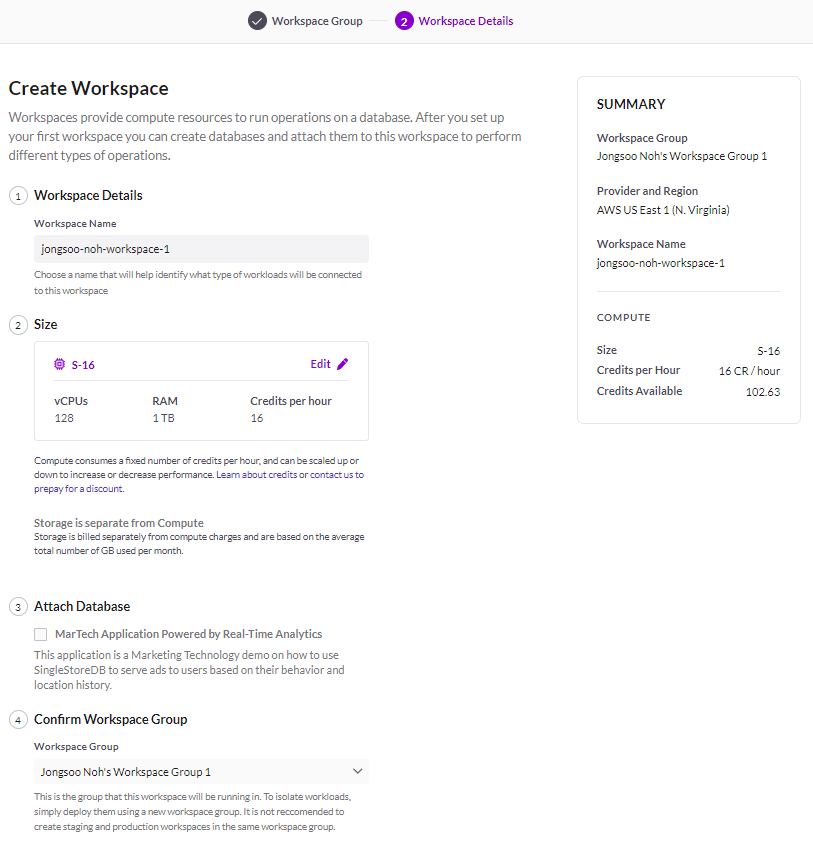

Workspace 생성

Workspace Size 는 클 수록 데이터 로딩 및 Query 속도가 빠르지만 대신 Credit 소모도 많습니다.

S-4 가 대량 5분 정도의 데이터 로드 시간이 걸렸으므로 참고하시고 선택하시기 바랍니다.

이 글에서는 제 계정에서 최대로 사용할 수 있는 S-16(128 vCPU, 1TB RAM) 을 선택해 보겠습니다.

Attach Database 항목은 제외하시기 바랍니다.

Database 생성 및 Schema 생성

Workspace 가 생성되는 시간은 가변적이지만 대략 5분~10분 내외가 소요됩니다. 그동안 왼쪽 메뉴에서 SQL Editor 를 선택하시고 다음 SQL 을 복사해 놓으시기 바랍니다.

CREATE DATABASE tpch;

USE tpch;

CREATE TABLE `customer` (

`c_custkey` int(11) NOT NULL,

`c_name` varchar(25) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`c_address` varchar(40) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`c_nationkey` int(11) NOT NULL,

`c_phone` char(15) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`c_acctbal` decimal(15,2) NOT NULL,

`c_mktsegment` char(10) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`c_comment` varchar(117) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

UNIQUE KEY pk (`c_custkey`) UNENFORCED RELY,

SHARD KEY (`c_custkey`) USING CLUSTERED COLUMNSTORE

);

CREATE TABLE `lineitem` (

`l_orderkey` bigint(11) NOT NULL,

`l_partkey` int(11) NOT NULL,

`l_suppkey` int(11) NOT NULL,

`l_linenumber` int(11) NOT NULL,

`l_quantity` decimal(15,2) NOT NULL,

`l_extendedprice` decimal(15,2) NOT NULL,

`l_discount` decimal(15,2) NOT NULL,

`l_tax` decimal(15,2) NOT NULL,

`l_returnflag` char(1) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_linestatus` char(1) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_shipdate` date NOT NULL,

`l_commitdate` date NOT NULL,

`l_receiptdate` date NOT NULL,

`l_shipinstruct` char(25) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_shipmode` char(10) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`l_comment` varchar(44) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

UNIQUE KEY pk (`l_orderkey`, `l_linenumber`) UNENFORCED RELY,

SHARD KEY (`l_orderkey`) USING CLUSTERED COLUMNSTORE

);

CREATE TABLE `nation` (

`n_nationkey` int(11) NOT NULL,

`n_name` char(25) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`n_regionkey` int(11) NOT NULL,

`n_comment` varchar(152) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

UNIQUE KEY pk (`n_nationkey`) UNENFORCED RELY,

SHARD KEY (`n_nationkey`) USING CLUSTERED COLUMNSTORE

);

CREATE TABLE `orders` (

`o_orderkey` bigint(11) NOT NULL,

`o_custkey` int(11) NOT NULL,

`o_orderstatus` char(1) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`o_totalprice` decimal(15,2) NOT NULL,

`o_orderdate` date NOT NULL,

`o_orderpriority` char(15) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`o_clerk` char(15) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`o_shippriority` int(11) NOT NULL,

`o_comment` varchar(79) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

UNIQUE KEY pk (`o_orderkey`) UNENFORCED RELY,

SHARD KEY (`o_orderkey`) USING CLUSTERED COLUMNSTORE

);

CREATE TABLE `part` (

`p_partkey` int(11) NOT NULL,

`p_name` varchar(55) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`p_mfgr` char(25) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`p_brand` char(10) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`p_type` varchar(25) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`p_size` int(11) NOT NULL,

`p_container` char(10) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`p_retailprice` decimal(15,2) NOT NULL,

`p_comment` varchar(23) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

UNIQUE KEY pk (`p_partkey`) UNENFORCED RELY,

SHARD KEY (`p_partkey`) USING CLUSTERED COLUMNSTORE

);

CREATE TABLE `partsupp` (

`ps_partkey` int(11) NOT NULL,

`ps_suppkey` int(11) NOT NULL,

`ps_availqty` int(11) NOT NULL,

`ps_supplycost` decimal(15,2) NOT NULL,

`ps_comment` varchar(199) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

UNIQUE KEY pk (`ps_partkey`,`ps_suppkey`) UNENFORCED RELY,

SHARD KEY(`ps_partkey`),

KEY (`ps_partkey`,`ps_suppkey`) USING CLUSTERED COLUMNSTORE

);

CREATE TABLE `region` (

`r_regionkey` int(11) NOT NULL,

`r_name` char(25) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`r_comment` varchar(152) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

UNIQUE KEY pk (`r_regionkey`) UNENFORCED RELY,

SHARD KEY (`r_regionkey`) USING CLUSTERED COLUMNSTORE

);

CREATE TABLE `supplier` (

`s_suppkey` int(11) NOT NULL,

`s_name` char(25) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`s_address` varchar(40) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`s_nationkey` int(11) NOT NULL,

`s_phone` char(15) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

`s_acctbal` decimal(15,2) NOT NULL,

`s_comment` varchar(101) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,

UNIQUE KEY pk (`s_suppkey`) UNENFORCED RELY,

SHARD KEY (`s_suppkey`) USING CLUSTERED COLUMNSTORE

);Workspace 가 생성되면 위 SQL 을 각각 실행하거나 모두 선택하여 한번에 실행할 수 있습니다.

SQL Editor 오른쪽 상단에 Run 버튼을 클릭하거나 Ctrl-Enter(Windows), Cmd-Enter(Mac) 을 이용하시면 됩니다.

Pipeline 생성

SingleStore 에서 준비해 놓은 S3 Bucket 에서 데이터를 로드할 pipeline 을 생성합니다.

CREATE OR REPLACE PIPELINE tpch_100_lineitem

AS LOAD DATA S3 'memsql-tpch-dataset/sf_100/lineitem/'

config '{"region":"us-east-1"}'

SKIP DUPLICATE KEY ERRORS

INTO TABLE lineitem

FIELDS TERMINATED BY '|'

LINES TERMINATED BY '|\n';

CREATE OR REPLACE PIPELINE tpch_100_customer

AS LOAD DATA S3 'memsql-tpch-dataset/sf_100/customer/'

config '{"region":"us-east-1"}'

SKIP DUPLICATE KEY ERRORS

INTO TABLE customer

FIELDS TERMINATED BY '|'

LINES TERMINATED BY '|\n';

CREATE OR REPLACE PIPELINE tpch_100_nation

AS LOAD DATA S3 'memsql-tpch-dataset/sf_100/nation/'

config '{"region":"us-east-1"}'

SKIP DUPLICATE KEY ERRORS

INTO TABLE nation

FIELDS TERMINATED BY '|'

LINES TERMINATED BY '|\n';

CREATE OR REPLACE PIPELINE tpch_100_orders

AS LOAD DATA S3 'memsql-tpch-dataset/sf_100/orders/'

config '{"region":"us-east-1"}'

SKIP DUPLICATE KEY ERRORS

INTO TABLE orders

FIELDS TERMINATED BY '|'

LINES TERMINATED BY '|\n';

CREATE OR REPLACE PIPELINE tpch_100_part

AS LOAD DATA S3 'memsql-tpch-dataset/sf_100/part/'

config '{"region":"us-east-1"}'

SKIP DUPLICATE KEY ERRORS

INTO TABLE part

FIELDS TERMINATED BY '|'

LINES TERMINATED BY '|\n';

CREATE OR REPLACE PIPELINE tpch_100_partsupp

AS LOAD DATA S3 'memsql-tpch-dataset/sf_100/partsupp/'

config '{"region":"us-east-1"}'

SKIP DUPLICATE KEY ERRORS

INTO TABLE partsupp

FIELDS TERMINATED BY '|'

LINES TERMINATED BY '|\n';

CREATE OR REPLACE PIPELINE tpch_100_region

AS LOAD DATA S3 'memsql-tpch-dataset/sf_100/region/'

config '{"region":"us-east-1"}'

SKIP DUPLICATE KEY ERRORS

INTO TABLE region

FIELDS TERMINATED BY '|'

LINES TERMINATED BY '|\n';

CREATE OR REPLACE PIPELINE tpch_100_supplier

AS LOAD DATA S3 'memsql-tpch-dataset/sf_100/supplier/'

config '{"region":"us-east-1"}'

SKIP DUPLICATE KEY ERRORS

INTO TABLE supplier

FIELDS TERMINATED BY '|'

LINES TERMINATED BY '|\n';Pipeline 실행

pipeline 을 각각 start 시켜 로딩 시간을 측정하겠습니다.

start pipeline tpch_100_customer foreground;

start pipeline tpch_100_nation foreground;

start pipeline tpch_100_orders foreground;

start pipeline tpch_100_part foreground;

start pipeline tpch_100_partsupp foreground;

start pipeline tpch_100_region foreground;

start pipeline tpch_100_supplier foreground;

start pipeline tpch_100_lineitem foreground;데이터 로딩 시간은 다음과 같습니다.

5억건이 단 68초 만에 로딩되었습니다.

| 테이블명 | Rows | 로딩시간(초) |

|---|---|---|

| customer | 15,000,000 | 5 |

| nation | 25 | 0.9 |

| orders | 150,000,000 | 16 |

| part | 20,000,000 | 4.2 |

| partsupp | 80,000,000 | 10 |

| region | 5 | 0.6 |

| supplier | 1,000,000 | 2.3 |

| lineitem | 538,837,902 | 68 |

Query 실행

다음 Query 를 두번씩 실행해서 첫번째 Query Compile 시간을 제외하고 두번째 소요시간을 측정합니다.

## Query 1

select

l_returnflag,

l_linestatus,

sum(l_quantity) as sum_qty,

sum(l_extendedprice) as sum_base_price,

sum(l_extendedprice * (1 - l_discount)) as sum_disc_price,

sum(l_extendedprice * (1 - l_discount) * (1 + l_tax)) as sum_charge,

avg(l_quantity) as avg_qty,

avg(l_extendedprice) as avg_price,

avg(l_discount) as avg_disc,

count(*) as count_order

from

lineitem

where

l_shipdate <= date('1998-12-01' - interval '90' day)

group by

l_returnflag,

l_linestatus

order by

l_returnflag,

l_linestatus;

403 ms, Showing 4 rows

## Query 2

select

l_orderkey,

sum(l_extendedprice * (1 - l_discount)) as revenue,

o_orderdate,

o_shippriority

from

customer,

orders,

lineitem

where

c_mktsegment = 'BUILDING'

and c_custkey = o_custkey

and l_orderkey = o_orderkey

and o_orderdate < date('1995-03-15')

and l_shipdate > date('1995-03-15')

group by

l_orderkey,

o_orderdate,

o_shippriority

order by

revenue desc,

o_orderdate

limit 10;

549 ms, Showing 10 rows

## Query 3

select

c_custkey,

c_name,

sum(l_extendedprice * (1 - l_discount)) as revenue,

c_acctbal,

n_name,

c_address,

c_phone,

c_comment

from

customer,

orders,

lineitem,

nation

where

c_custkey = o_custkey

and l_orderkey = o_orderkey

and o_orderdate >= date('1993-10-01')

and o_orderdate < date('1993-10-01') + interval '3' month

and l_returnflag = 'R'

and c_nationkey = n_nationkey

group by

c_custkey,

c_name,

c_acctbal,

c_phone,

n_name,

c_address,

c_comment

order by

revenue desc

limit 20;

456 ms, Showing 20 rows

## Query 4

select

nation,

o_year,

sum(amount) as sum_profit

from

(

select

n_name as nation,

extract(year from o_orderdate) as o_year,

l_extendedprice * (1 - l_discount) - ps_supplycost * l_quantity as amount

from

part,

supplier,

lineitem,

partsupp,

orders,

nation

where

s_suppkey = l_suppkey

and ps_suppkey = l_suppkey

and ps_partkey = l_partkey

and p_partkey = l_partkey

and o_orderkey = l_orderkey

and s_nationkey = n_nationkey

and p_name like '%green%'

) as profit

group by

nation,

o_year

order by

nation,

o_year desc;

1.259 s, Showing 175 rowsWorkspace 및 Workspace Group Termination

테스트가 끝났으면 Credit 절약을 위해 Workspace 를 termination 시킵니다.

tpch 데이터베이스는 detach 상태로 남아 있으므로 추후 다른 Size 의 workspace 를 생성하고 attach 하여 사용할 수 있습니다. 만일 필요없을 것 같으면 tpch 데이터베이스 역시 drop 할 수 있습니다.

Workspace Group 은 남겨두어도 과금되지 않습니다. 필요에 따라 Terminate 시킬 수 있습니다.

마무리

128 vCPU, 1TB 메모리 사양의 Managed Cloud 환경에서 소스 데이터 기준 5억건, 60GB 의 데이터 로드 시간이 68초밖에 걸리지 않았습니다.

빠른 데이터 Ingestion 및 빠른 분석 쿼리 실행이 장점인 SingleStoreDB 를 실제로 경험해 보시기 바랍니다.