◾개요

- 신용카드 부정 사용자 검출

- Kaggle 데이터 사용

- 신용카드 사기 검출 분류 실습용 데이터

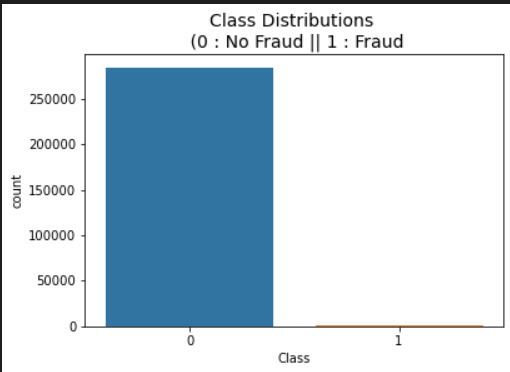

class 컬럼이 사기 유무 의미- class 컬럼의 불균형이 극심해서 전체 데이터의 약 0.172%가 1(사기 Fraud)를 의미

Amount : 사용 금액, Class : 사기 유무, v.. : 다른 특성

◾데이터 관찰

- 데이터 읽기

import pandas as pd

data_path = '../data/03/creditcard.csv'

raw_data = pd.read_csv(data_path)

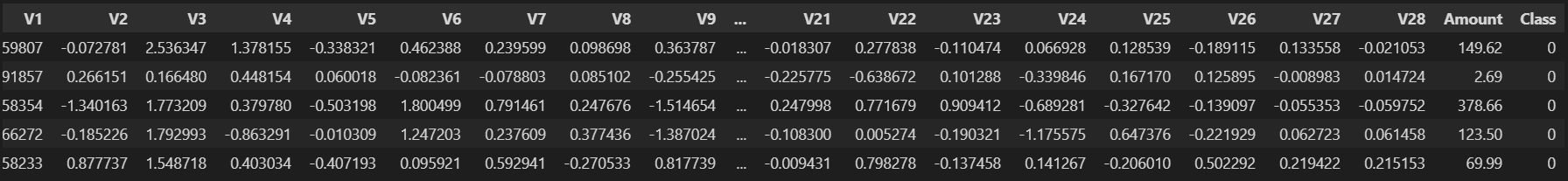

raw_data.head()

- 데이터 특성 확인

- 기밀을 유지하기 위해 대다수의 컬럼은

V..로 구성되어있다.

- 값 또한 스케일 조정이 되어있는 상태이다.

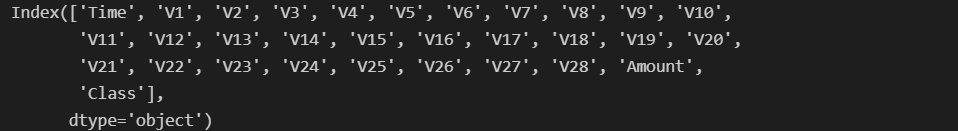

raw_data.columns

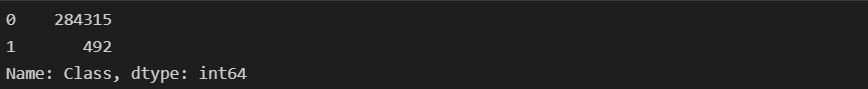

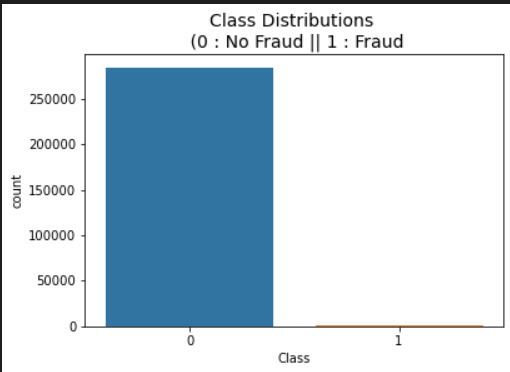

- 데이터 분포 확인

- 지극히 작은 양이 Frauds 임을 확인할 수 있다.

raw_data['Class'].value_counts()

frauds_rate = round(raw_data['Class'].value_counts()[1] / len(raw_data) * 100, 2)

print('Frauds', frauds_rate, '% of the dataset')

import seaborn as sns

import matplotlib.pyplot as plt

sns.countplot(x = raw_data['Class'])

plt.title('Class Distributions \n (0 : No Fraud || 1 : Fraud', fontsize=14)

plt.show()

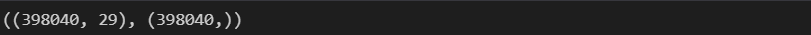

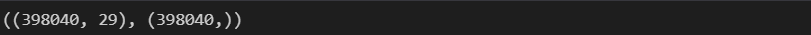

- 데이터 정리

X = raw_data.iloc[:, 1 : -1]

y = raw_data.iloc[:, -1]

X.shape, y.shape

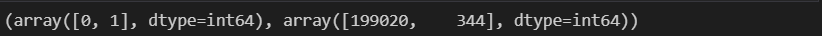

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=13, stratify=y)

import numpy as np

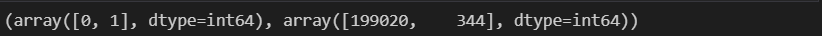

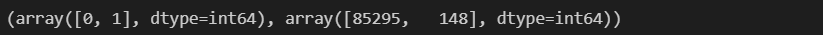

np.unique(y_train, return_counts=True)

tmp = np.unique(y_train, return_counts=True)[1]

tmp[1]/len(y_train) * 100.0

np.unique(y_test, return_counts=True)

tmp = np.unique(y_test, return_counts=True)[1]

tmp[1]/len(y_test) * 100.0

◾분석1

- 분류기 성능 반환 함수

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score, roc_auc_score

def get_clf_eval(y_test, pred):

acc = accuracy_score(y_test, pred)

pre = precision_score(y_test, pred)

re = recall_score(y_test, pred)

f1 = f1_score(y_test, pred)

auc = roc_auc_score(y_test, pred)

return acc, pre, re, f1, auc

- 분류기 성능 출력 함수

from sklearn.metrics import confusion_matrix

def print_clf_eval(y_test, pred):

acc, pre, re, f1, auc = get_clf_eval(y_test, pred)

confusion = confusion_matrix(y_test, pred)

print('=> confusion matrix')

print(confusion)

print('---------------------')

print('Accruacy : {:.4f}, Precision : {:.4f}'.format(acc, pre))

print('Recall : {:.4f}, F1 : {:.4f}, AUC : {:.4f}'.format(re, f1, auc))

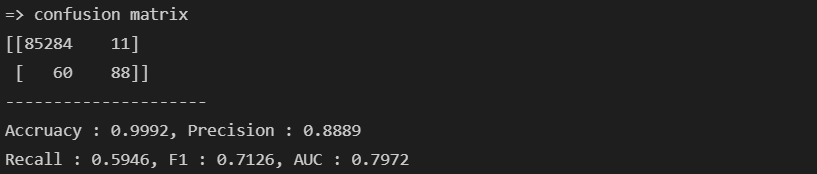

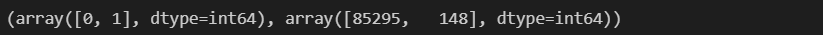

- Logistic Regression

from sklearn.linear_model import LogisticRegression

lr_clf = LogisticRegression(random_state=13, solver='liblinear')

lr_clf.fit(X_train, y_train)

lr_pred = lr_clf.predict(X_test)

print_clf_eval(y_test, lr_pred)

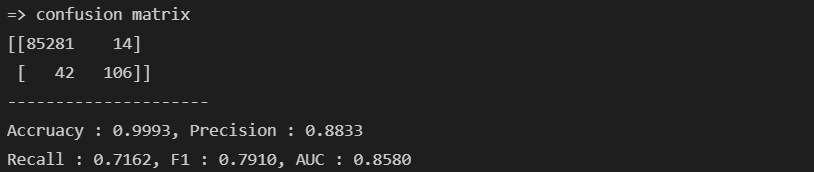

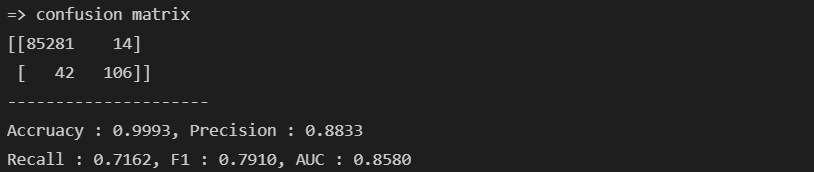

- Decision Tree

from sklearn.tree import DecisionTreeClassifier

dt_clf = DecisionTreeClassifier(random_state=13, max_depth=4)

dt_clf.fit(X_train, y_train)

dt_pred = dt_clf.predict(X_test)

print_clf_eval(y_test, dt_pred)

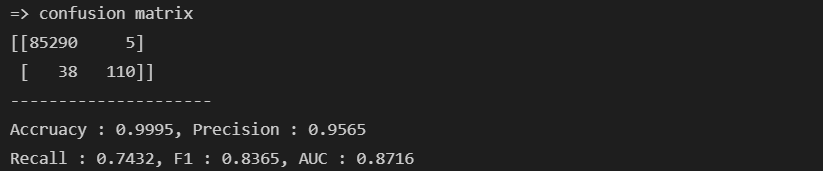

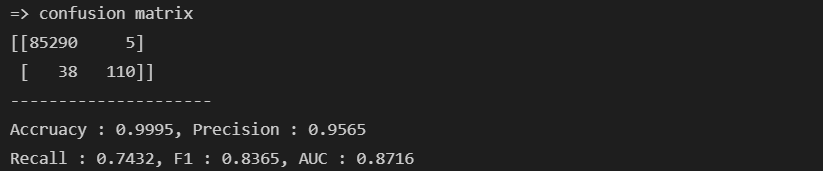

- Random Forest

from sklearn.ensemble import RandomForestClassifier

rf_clf = RandomForestClassifier(random_state=13, n_jobs=-1, n_estimators=100)

rf_clf.fit(X_train, y_train)

rf_pred = rf_clf.predict(X_test)

print_clf_eval(y_test, rf_pred)

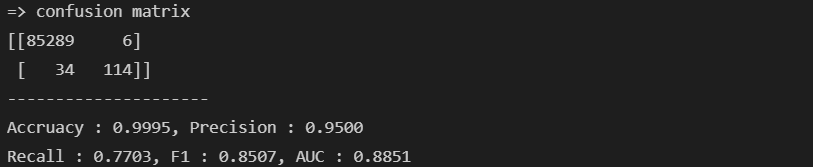

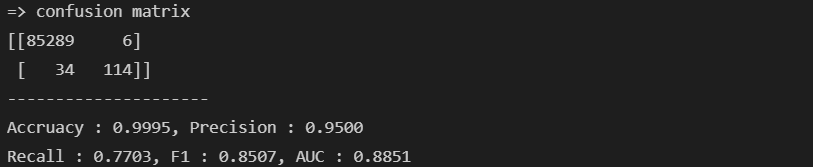

- LightGBM

from lightgbm import LGBMClassifier

lgbm_clf = LGBMClassifier(n_estimators=1000, num_leaves=64, n_jobs=-1, boost_from_average=False)

lgbm_clf.fit(X_train, y_train)

lgbm_pred = lgbm_clf.predict(X_test)

print_clf_eval(y_test, lgbm_pred)

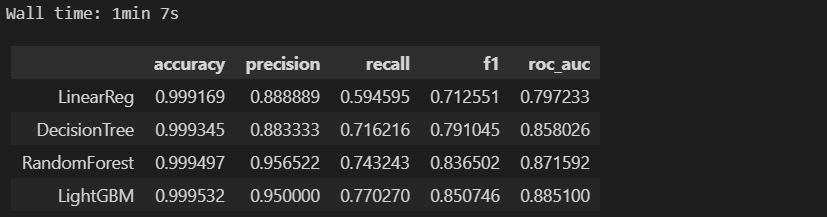

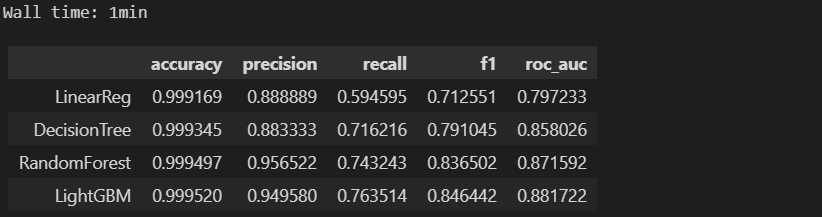

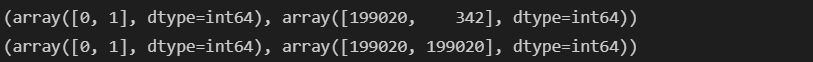

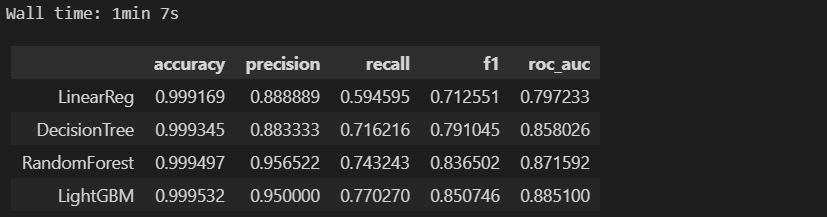

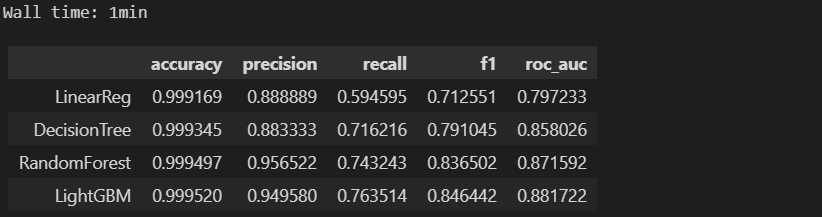

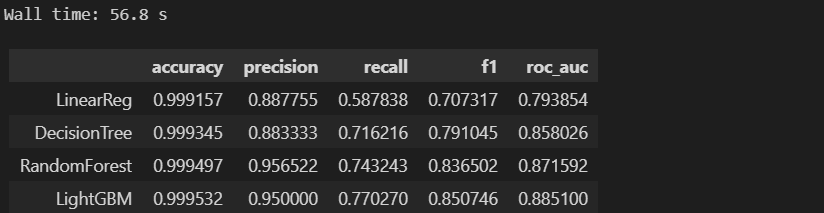

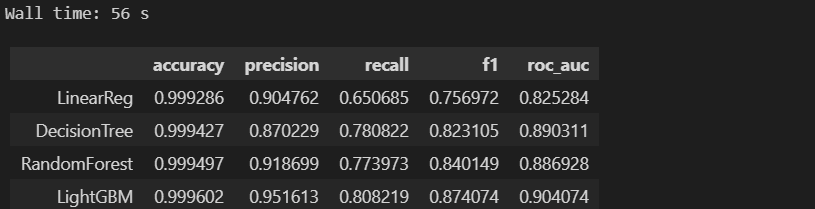

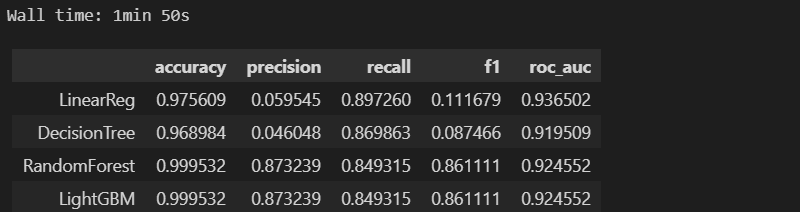

- 결과

- 은행 입장에서는 Recall이 중요하다(Fraud를 검출하는 것이 중요하므로)

- 사용자 입장에서는 Precision이 중요하다(Fraud라고 잘못 선정되지 않아야 하므로)

- 앙상블 계열의 성능이 우수하며 현재까지로는 LGBM이 가장 지표가 좋다.

- 모델, 데이터 입력시 성능 출력하는 함수

def get_result(model, X_train, X_test, y_train, y_test):

model.fit(X_train, y_train)

pred = model.predict(X_test)

return get_clf_eval(y_test, pred)

- 다수의 모델의 성능을 정리해서 DataFrame로 반환하는 함수 작성

def get_result_pd(models, model_names, X_train, X_test, y_train, y_test):

col_names = ['accuracy', 'precision', 'recall', 'f1', 'roc_auc']

tmp = []

for model in models:

tmp.append(get_result(model, X_train, X_test, y_train, y_test))

return pd.DataFrame(tmp, columns=col_names, index=model_names)

- 4개의 분류 모델 한 번에 정리

%%time

models = [lr_clf, dt_clf, rf_clf, lgbm_clf]

model_names = ["LinearReg", "DecisionTree", 'RandomForest', 'LightGBM']

results = get_result_pd(models, model_names, X_train, X_test, y_train, y_test)

results

◾분석2

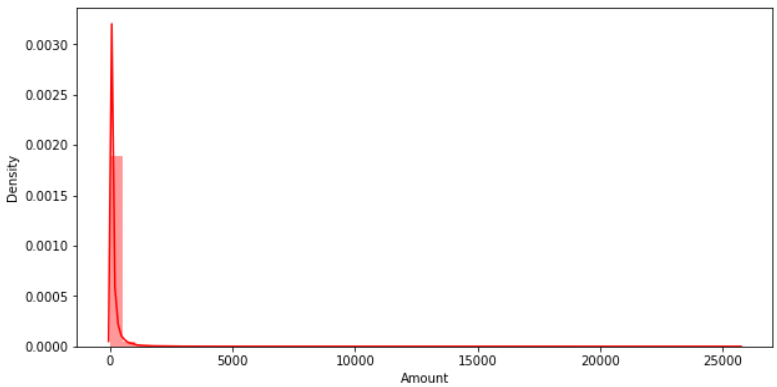

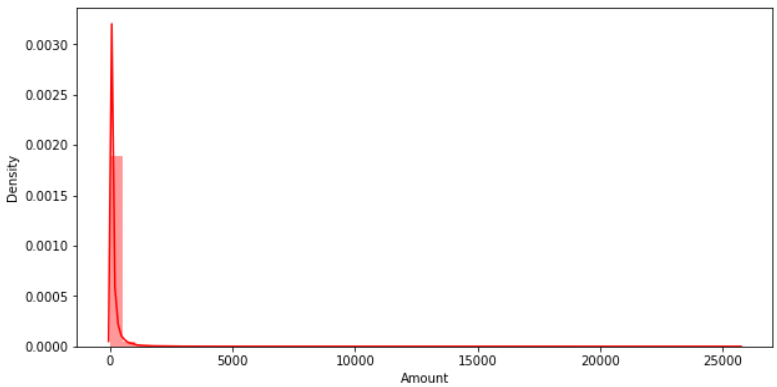

- Amount 컬럼 정리

import warnings

warnings.filterwarnings('ignore')

plt.figure(figsize=(10, 5))

sns.distplot(raw_data['Amount'], color='r')

plt.show()

- Amount 컬럼 StandardScaler 적용

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

amount_n = scaler.fit_transform(raw_data['Amount'].values.reshape(-1, 1))

raw_data_copy = raw_data.iloc[:, 1:-2]

raw_data_copy['Amount_Scaled'] = amount_n

raw_data_copy.head()

- 데이터 재분리

X_train, X_test, y_train, y_test = train_test_split(raw_data_copy, y, test_size=0.3, random_state=13, stratify=y)

- 모델 재평가

%%time

models = [lr_clf, dt_clf, rf_clf, lgbm_clf]

model_names = ['LinearReg', 'DecisionTree', 'RandomForest', 'LightGBM']

results = get_result_pd(models, model_names, X_train, X_test, y_train, y_test)

results

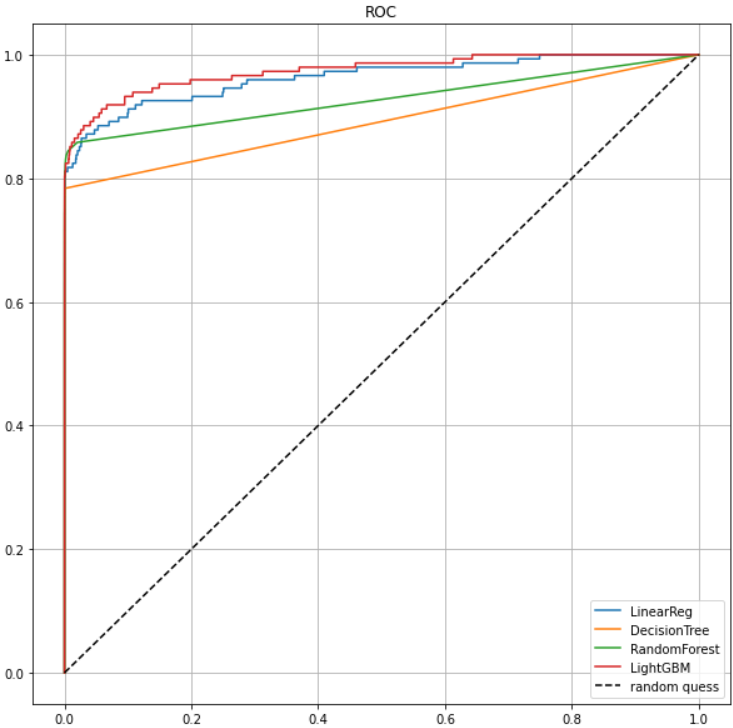

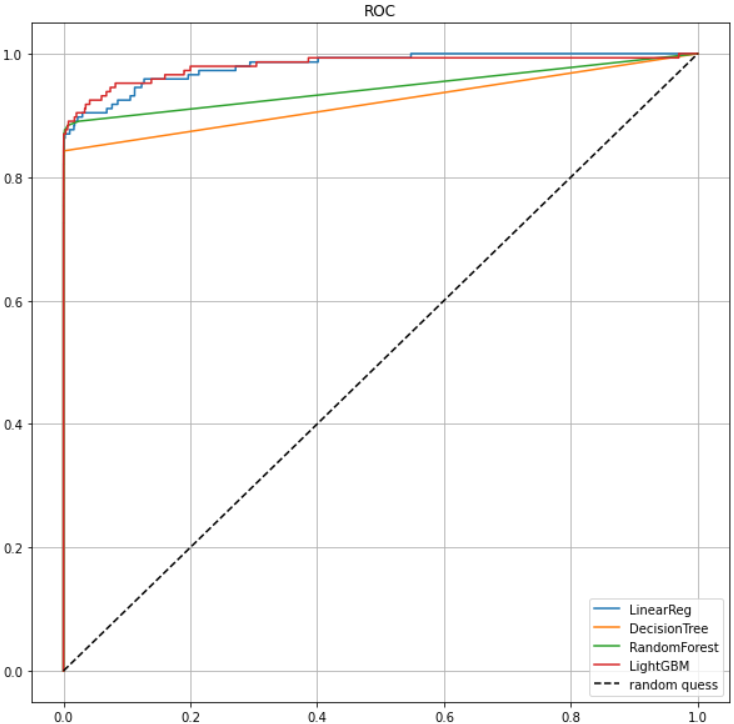

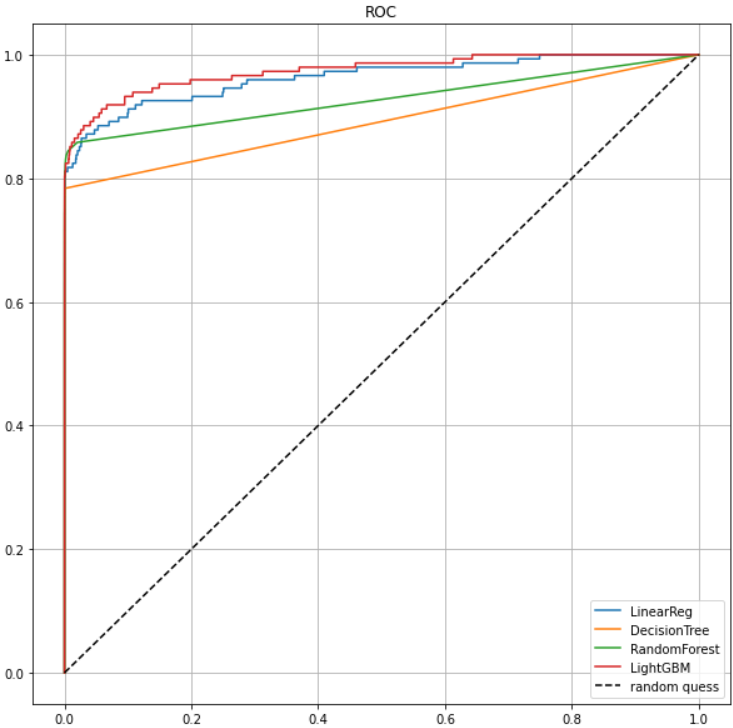

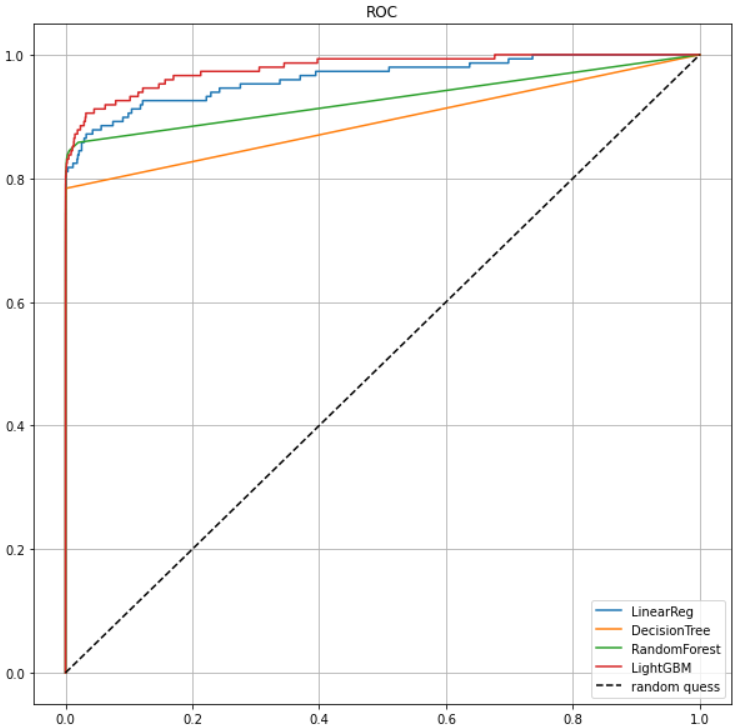

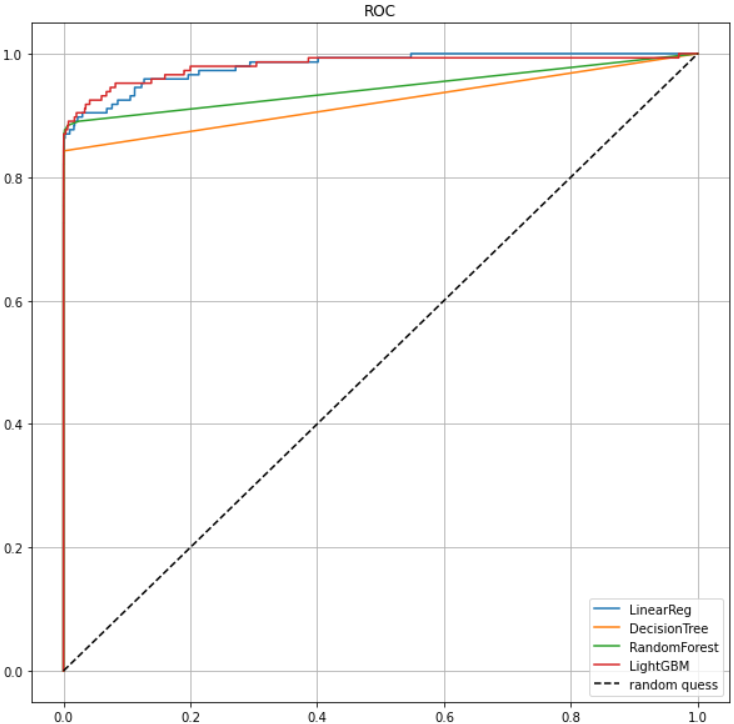

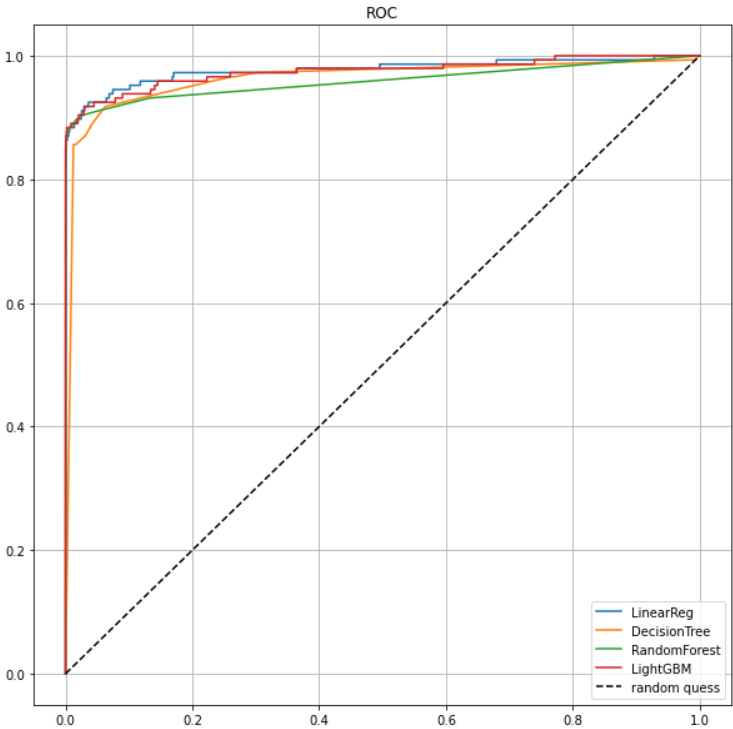

- 모델별 ROC 커브

from sklearn.metrics import roc_curve

def draw_roc_curve(models, model_names, X_test, y_test):

plt.figure(figsize=(10, 10))

for model in range(len(models)):

pred = models[model].predict_proba(X_test)[:, 1]

fpr, tpr, thresholds = roc_curve(y_test, pred)

plt.plot(fpr, tpr, label=model_names[model])

plt.plot([0, 1], [0, 1], 'k--', label='random quess')

plt.title("ROC")

plt.legend()

plt.grid()

plt.show()

draw_roc_curve(models, model_names, X_test, y_test)

- 결과

- 약간의 성능 향상이 있었지만 여전히 앙상블 계열이 좋다.

- 또한 LGBM이 가장 좋은 지표를 보인다.

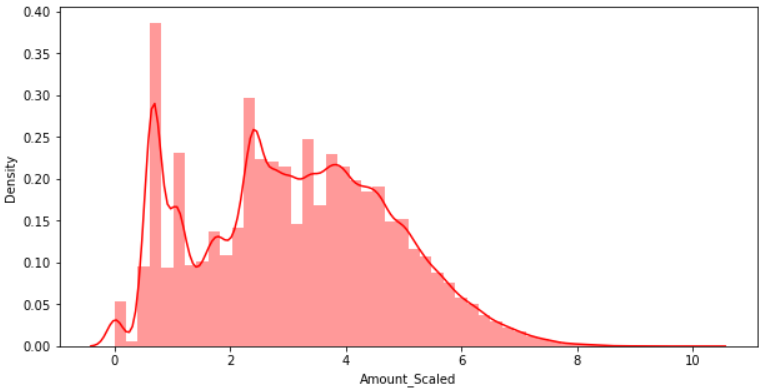

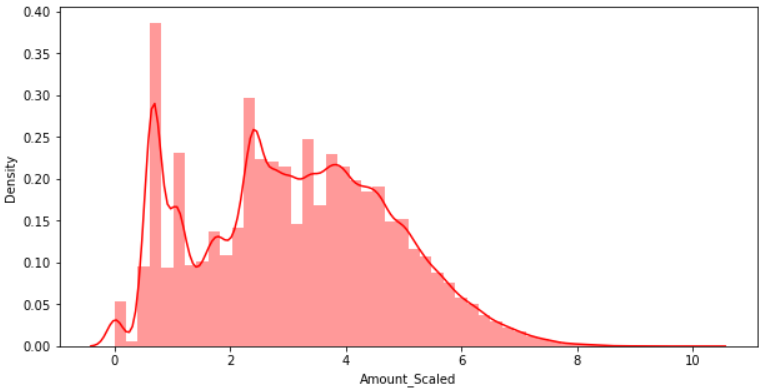

- 추가 분석 log scale

- log scale을 통해 분포가 달라짐을 볼 수 있다.

import numpy as np

amount_log = np.log1p(raw_data['Amount'])

raw_data_copy['Amount_Scaled'] = amount_log

raw_data_copy.head()

plt.figure(figsize=(10, 5))

sns.distplot(raw_data_copy['Amount_Scaled'], color='r')

plt.show()

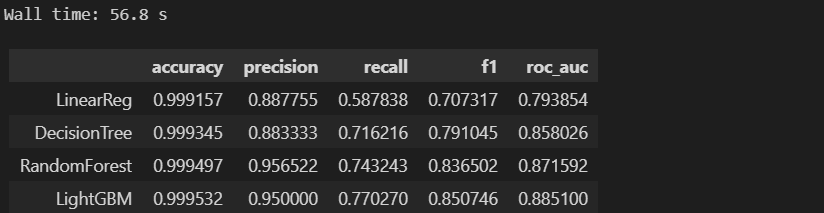

- 추가 성능 확인

%%time

X_train, X_test, y_train, y_test = train_test_split(raw_data_copy, y, test_size=0.3, random_state=13, stratify=y)

results = get_result_pd(models, model_names, X_train, X_test, y_train, y_test)

results

draw_roc_curve(models, model_names, X_test, y_test)

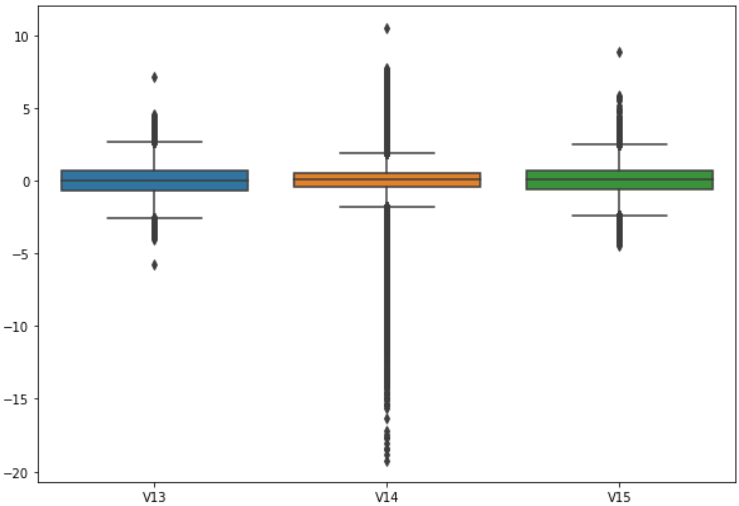

◾분석3

- Outlier 정리

import seaborn as sns

plt.figure(figsize=(10, 7))

sns.boxplot(data=raw_data[['V13', 'V14', 'V15']])

plt.show()

def get_outlier(df=None, column=None, weight=1.5):

fraud = df[df['Class']==1][column]

quantile_25 = np.percentile(fraud.values, 25)

quantile_75 = np.percentile(fraud.values, 75)

iqr = quantile_75 - quantile_25

iqr_weight = iqr * weight

lowest_val = quantile_25 - iqr_weight

highest_val = quantile_75 + iqr_weight

outlier_index = fraud[(fraud < lowest_val) | (fraud > highest_val)].index

return outlier_index

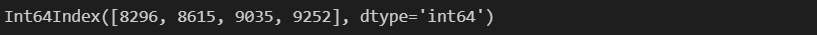

get_outlier(df=raw_data, column='V14', weight=1.5)

raw_data_copy.shape

outlier_index = get_outlier(df=raw_data, column='V14', weight=1.5)

raw_data_copy.drop(outlier_index, axis=0, inplace=True)

raw_data_copy.shape

- Outlier 제거 후 데이터 분리

X = raw_data_copy

raw_data.drop(outlier_index, axis=0, inplace=True)

y = raw_data.iloc[:, -1]

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=13, stratify=y)

- 모델 평가

%%time

models = [lr_clf, dt_clf, rf_clf, lgbm_clf]

model_names = ['LinearReg', 'DecisionTree', 'RandomForest', 'LightGBM']

results = get_result_pd(models, model_names, X_train, X_test, y_train, y_test)

results

- ROC 커브

draw_roc_curve(models, model_names, X_test, y_test)

- 결과

- 앙상블 계열의 성능이 높다.

- 랜덤 포레스트가 LGBM 성능을 거의 따라잡은 걸 확인할 수 있다.

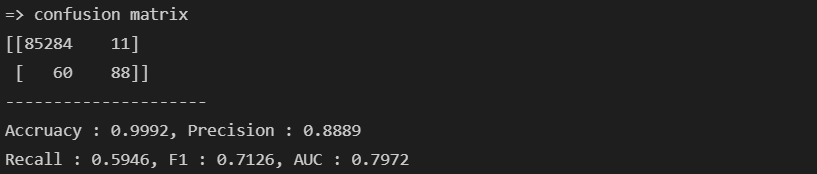

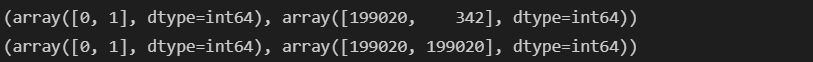

◾분석4

- 데이터의 불균형이 극심할 때 불균형한 두 클래스의 분포를 강제로 맞춰보는 작업

Undersampling : 많은 수의 데이터를 적은 수의 데이터로 강제로 조정Oversampling : 적은 수의 데이터를 많은 수의 데이터로 강제로 조정

- 원본 데이터의 피처 값들을 약간 변경하여 증식

- 대표적으로 SMOTE(Synthetic Minority Over-sampling Technique)

- 적은 데이터 세트에 있는 개별 데이터를 k-최근접이웃 방법으로 찾아서 데이터의 분포 사이에 새로운 데이터를 만드는 방식

- imbalanced-learn이라는 python pkg가 있다.

- pip install imbalanced-learn

- SMOTE 적용

from imblearn.over_sampling import SMOTE

smote = SMOTE(random_state=13)

X_train_over, y_train_over = smote.fit_resample(X_train, y_train)

X_train.shape, y_train.shape

X_train_over.shape, y_train_over.shape

print(np.unique(y_train, return_counts=True))

print(np.unique(y_train_over, return_counts=True))

- 모델 평가

%%time

models = [lr_clf, dt_clf, rf_clf, lgbm_clf]

model_names = ['LinearReg', 'DecisionTree', 'RandomForest', 'LightGBM']

results = get_result_pd(models, model_names, X_train_over, X_test, y_train_over, y_test)

results

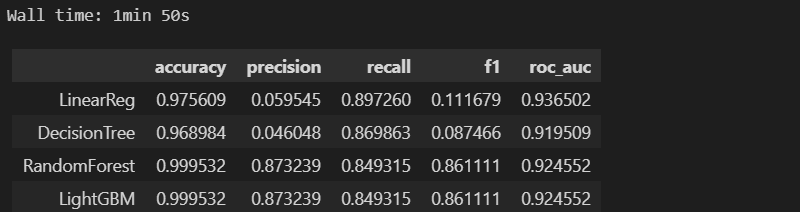

- ROC 커브

draw_roc_curve(models, model_names, X_test, y_test)

- 결과

- 지표가 유의미하게 증가한 것을 볼 수 있다.

- 랜덤 포레스트의 지표가 처음으로 LGBM을 넘어선 것을 볼 수 있다.