Could DiCaprio really not have survived?

Let's predict Titanic survivors with machine learning.

!pip install plotly_express

1. EDA

import pandas as pd

titanic_url = 'path'  # path or URL of the Titanic Excel file

titanic = pd.read_excel(titanic_url)

1-1. Drawing several plots at once

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

# 1행 2열 그림판

# subplots가 반환하는 fig, ax를 f, ax 변수에 저장

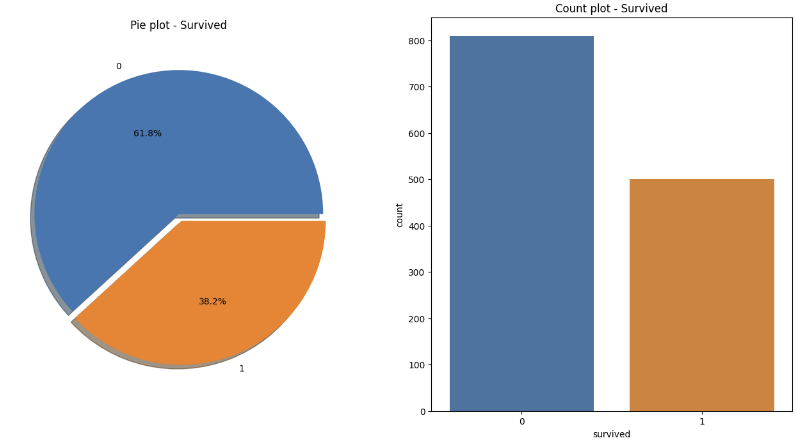

f, ax = plt.subplots(1, 2, figsize=(16, 8))

# explode : 조각들 간 거리

# autopct : 숫자(비율) 넣기

# shadow : 그림자 넣기

titanic['survived'].value_counts().plot.pie(ax=ax[0],

explode=[0, 0.05],

autopct='%1.1f%%',

shadow=True)

ax[0].set_title('Pie plot - Survived')

ax[0].set_ylabel('')

sns.countplot(x='survived', data=titanic, ax=ax[1])

ax[1].set_title('Count plot - Survived')

plt.show()
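The percentages that `autopct='%1.1f%%'` prints on the pie are just the class shares of `survived`. A minimal sketch, assuming a small hypothetical toy column instead of the real data, shows how to get the same numbers as a table with `value_counts(normalize=True)`:

```python
import pandas as pd

# Hypothetical toy data: 0 = did not survive, 1 = survived (not the real dataset)
toy = pd.DataFrame({'survived': [0, 0, 0, 1, 1, 0, 1, 0]})

# Raw counts: what the pie slices and the countplot bars are drawn from
counts = toy['survived'].value_counts()

# Share of each class in percent, rounded like autopct='%1.1f%%'
shares = toy['survived'].value_counts(normalize=True).mul(100).round(1)

print(counts.to_dict())
print(shares.to_dict())
```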

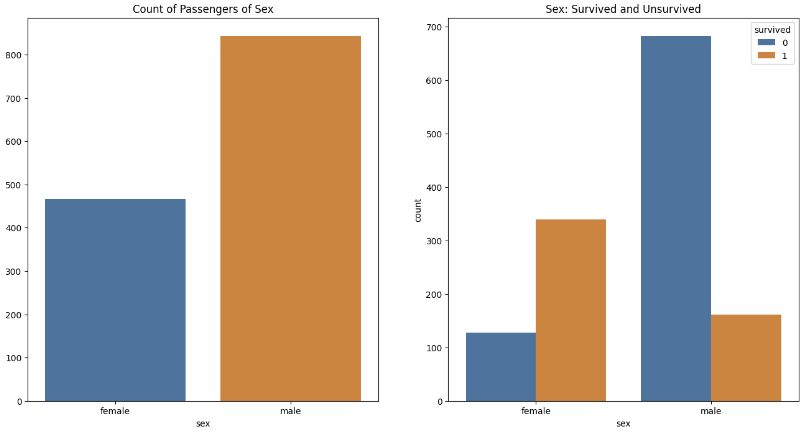

f, ax = plt.subplots(1, 2, figsize=(16, 8))

sns.countplot(x='sex', data=titanic, ax=ax[0])

ax[0].set_title('Count of Passengers of Sex')

ax[0].set_ylabel('')

sns.countplot(x='sex', hue='survived', data=titanic, ax=ax[1])

ax[1].set_title('Sex: Survived and Unsurvived')

plt.show()

1-2. Under what conditions were survival rates higher?

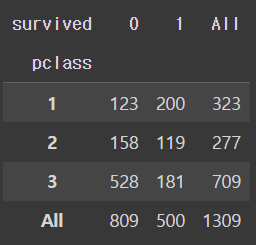

# survival by economic status (passenger class)

pd.crosstab(titanic['pclass'], titanic['survived'], margins=True)
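The crosstab above gives counts, so the survival *rate* per class still has to be read off by dividing each row by its total. A small sketch on hypothetical toy passengers (not the real data) lets `normalize='index'` do that division:

```python
import pandas as pd

# Hypothetical toy passengers (not the real Titanic data)
toy = pd.DataFrame({
    'pclass':   [1, 1, 1, 2, 2, 3, 3, 3, 3, 3],
    'survived': [1, 1, 0, 1, 0, 0, 0, 1, 0, 0],
})

# Counts per class with row/column totals, like the crosstab above
counts = pd.crosstab(toy['pclass'], toy['survived'], margins=True)

# normalize='index' divides each row by its total -> survival rate per class
rates = pd.crosstab(toy['pclass'], toy['survived'], normalize='index')

print(counts)
print(rates.round(2))
```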

First-class passengers had a high survival rate. Were there a lot of women in first class?

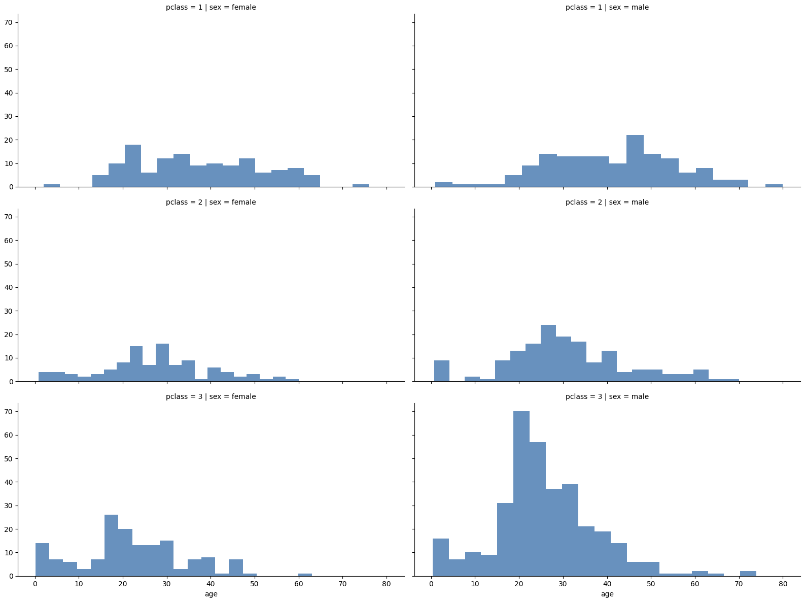

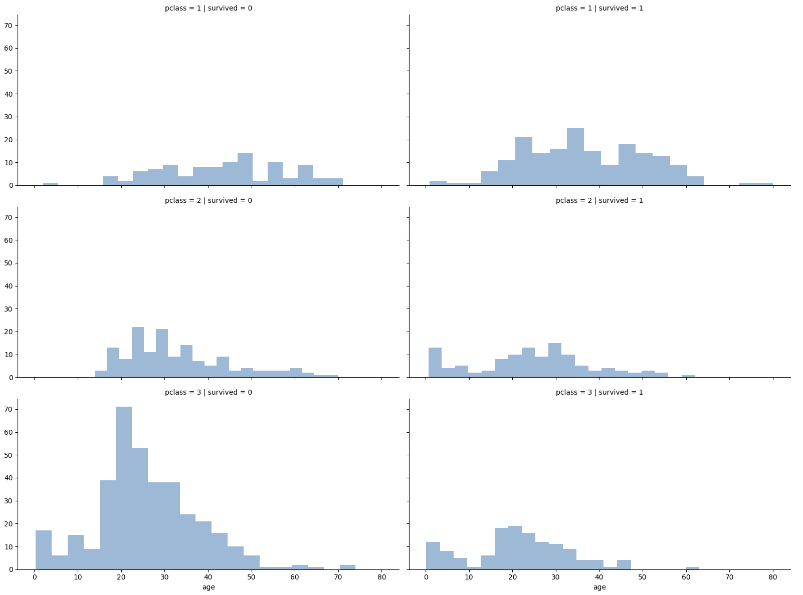

# age distribution for each class and sex

grid = sns.FacetGrid(titanic, row='pclass', col='sex', height=4, aspect=2)

grid.map(plt.hist, 'age', alpha=0.8, bins=20);

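Third class carried a lot of men.

Maybe third-class men survived at such a low rate that they make the women's survival rate look high, and make first and second class look high too?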
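One way to test this hunch without a plot is to compute the survival rate inside each (class, sex) cell, so the sex effect and the class effect are not mixed together. A sketch on hypothetical toy passengers (not the real data) with `pivot_table`:

```python
import pandas as pd

# Hypothetical toy passengers (not the real data)
toy = pd.DataFrame({
    'pclass':   [1, 1, 1, 1, 3, 3, 3, 3, 3, 3],
    'sex':      ['female', 'female', 'male', 'male',
                 'female', 'female', 'male', 'male', 'male', 'male'],
    'survived': [1, 1, 1, 0, 1, 0, 0, 0, 0, 1],
})

# The mean of a 0/1 column is the survival rate; one cell per (class, sex) pair
rates = toy.pivot_table(index='pclass', columns='sex',
                        values='survived', aggfunc='mean')
print(rates)
```

If women still survive more than men within every class, sex matters on its own and is not just a side effect of class.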

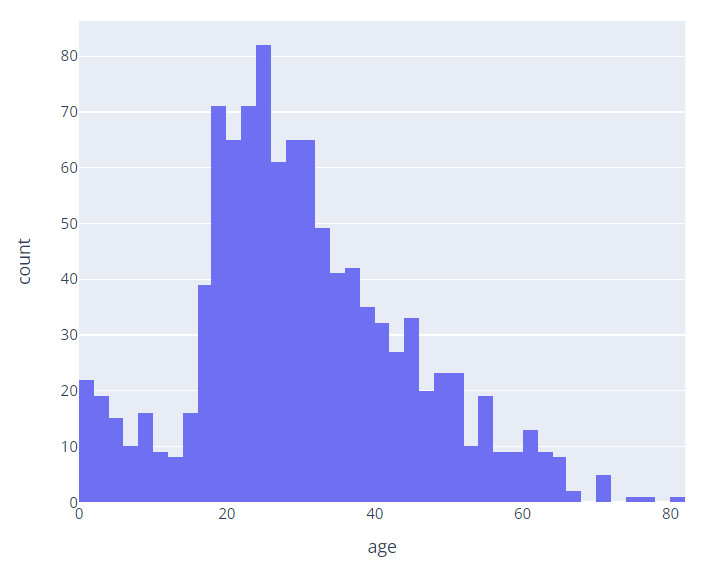

# passengers by age

import plotly.express as px

fig = px.histogram(titanic, x='age')

fig.show()

# survival for each class, broken down by age

grid = sns.FacetGrid(titanic, row='pclass', col='survived', height=4, aspect=2)

grid.map(plt.hist, 'age', alpha=0.5, bins=20);

The higher the class, the higher the survival rate seems to be.

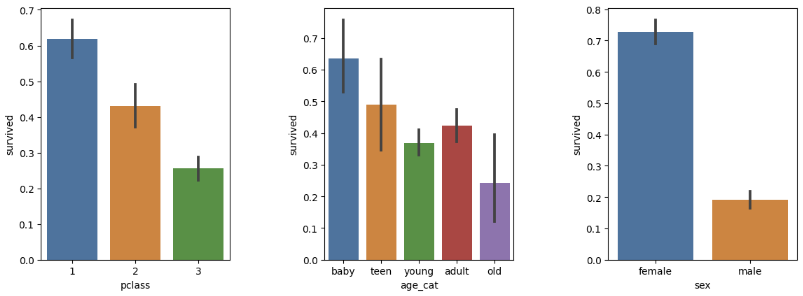

# bin age into 5 groups

titanic['age_cat'] = pd.cut(titanic['age'], bins=[0, 7, 15, 30, 60, 100],
                            include_lowest=True, labels=['baby', 'teen', 'young', 'adult', 'old'])

# survival rate by class, age group, and sex

plt.figure(figsize=(12, 4))

# 1 row, 3 columns - first panel

plt.subplot(131)

sns.barplot(x='pclass', y='survived', data=titanic)

plt.subplot(132)

sns.barplot(x='age_cat', y='survived', data=titanic)

plt.subplot(133)

sns.barplot(x='sex', y='survived', data=titanic)

plt.subplots_adjust(top=1, bottom=0.1,

left=0.1, right=1,

hspace=0.5, wspace=0.5)

Were first-class passengers, younger passengers, and women more likely to survive?
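By default `sns.barplot` draws the mean of `y` for each category (plus a confidence-interval error bar), and the mean of a 0/1 column is a survival rate. A sketch on hypothetical toy ages (not the real data) reproduces the bar heights with `groupby`, and also shows where `pd.cut` puts the bin edges: intervals are right-closed, so age 7 lands in `baby` and age 8 in `teen`.

```python
import pandas as pd

# Hypothetical ages and outcomes (not the real data)
toy = pd.DataFrame({
    'age':      [0.5, 7, 8, 15, 16, 30, 31, 60, 61, 80],
    'survived': [1,   1, 0, 1,  0,  0,  1,  0,  0,  1],
})

# Same bins and labels as above; each interval includes its right edge
toy['age_cat'] = pd.cut(toy['age'], bins=[0, 7, 15, 30, 60, 100],
                        include_lowest=True,
                        labels=['baby', 'teen', 'young', 'adult', 'old'])

# Bar heights of sns.barplot(x='age_cat', y='survived') are these group means
rates = toy.groupby('age_cat', observed=False)['survived'].mean()

print(toy[['age', 'age_cat']])
print(rates)
```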

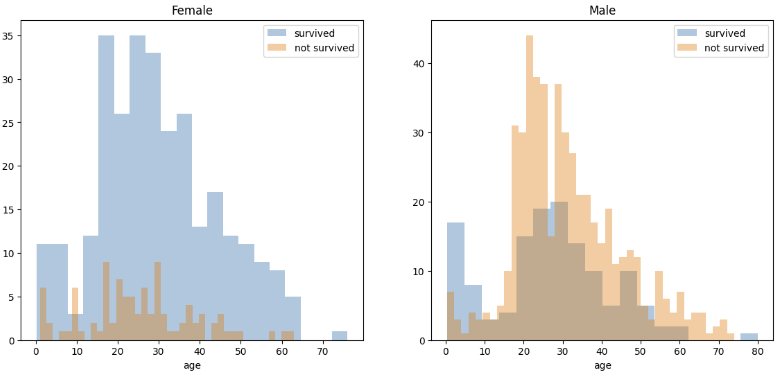

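# survival by age, for women and for men

# note: sns.distplot is deprecated since seaborn 0.11; sns.histplot is its replacement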

f, ax = plt.subplots(nrows=1, ncols=2, figsize=(14, 6))

women = titanic[titanic['sex'] == 'female']

men = titanic[titanic['sex'] == 'male']

sns.distplot(women[women['survived'] == 1]['age'],

bins=20, label='survived',

ax=ax[0], kde=False)

sns.distplot(women[women['survived'] == 0]['age'],

bins=40, label='not survived',

ax=ax[0], kde=False)

ax[0].legend()

ax[0].set_title('Female')

sns.distplot(men[men['survived'] == 1]['age'],

bins=18, label='survived',

ax=ax[1], kde=False)

sns.distplot(men[men['survived'] == 0]['age'],

bins=40, label='not survived',

ax=ax[1], kde=False)

ax[1].legend()

ax[1].set_title('Male')

A passenger's name tells us their title (and so their social status).
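Names in this dataset look like "Surname, Title. Given names", so the title is the word (or two words) between ", " and the first ".". A minimal sketch of the same regular expression on a few example names, plus a vectorized `str.extract` variant that captures the title directly; the `extract_title` helper is hypothetical, not part of the original code:

```python
import re
import pandas as pd

# Example names in the dataset's "Surname, Title. Given names" format
names = pd.Series([
    'Allen, Miss. Elisabeth Walton',
    'Braund, Mr. Owen Harris',
    'Rothes, the Countess. of (Lucy Noel Martha Dyer-Edwards)',
])

# ", " + one word + optional second word + "."; a raw string avoids escape warnings
pattern = r',\s\w+(\s\w+)?\.'

def extract_title(name):
    # group() is e.g. ", Miss."; slicing [2:-1] drops ", " and the trailing "."
    return re.search(pattern, name).group()[2:-1]

titles = names.apply(extract_title)

# Vectorized alternative: the capture group holds only the title, so no slicing
titles_vec = names.str.extract(r',\s(\w+(?:\s\w+)?)\.', expand=False)

print(titles.tolist())
```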

import re

title = []

for idx, dataset in titanic.iterrows():

tmp = dataset['name']

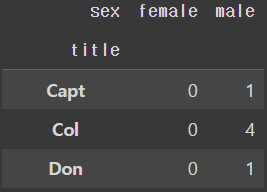

title.append(re.search('\,\s\w+(\s\w+)?\.', tmp).group()[2:-1])pd.crosstab(titanic['title'], titanic['sex'])

Check which sex dominates each title, then merge the titles into Miss, Mr, Mrs, Rare_m, and Rare_f.

titanic['title'] = titanic['title'].replace('Mlle', 'Miss')

titanic['title'] = titanic['title'].replace('Mme', 'Miss')

titanic['title'] = titanic['title'].replace('Ms', 'Miss')

Rare_f = ['Dona', 'Lady', 'the Countess']

Rare_m = ['Capt', 'Col', 'Don', 'Dr', 'Jonkheer',

'Major', 'Master', 'Rev', 'Sir']

for each in Rare_f:
    titanic['title'] = titanic['title'].replace(each, 'Rare_f')

for each in Rare_m:
    titanic['title'] = titanic['title'].replace(each, 'Rare_m')

titanic[['title', 'survived']].groupby(['title'], as_index=False).mean()
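The separate `replace` calls and loops can also be folded into a single dict passed to one `replace` call. A sketch on hypothetical toy rows (not the real data), keeping the author's groupings (including Mme → Miss and Master → Rare_m):

```python
import pandas as pd

# Hypothetical toy rows (not the real data)
toy = pd.DataFrame({
    'title':    ['Mr', 'Mlle', 'Mme', 'Ms', 'Lady', 'Dr', 'Mrs', 'Master'],
    'survived': [0,    1,      1,     1,    1,      0,    1,     1],
})

rare_f = ['Dona', 'Lady', 'the Countess']
rare_m = ['Capt', 'Col', 'Don', 'Dr', 'Jonkheer', 'Major', 'Master', 'Rev', 'Sir']

# One dict holds every merge, so a single replace() does the whole job
mapping = {'Mlle': 'Miss', 'Mme': 'Miss', 'Ms': 'Miss'}
mapping.update({t: 'Rare_f' for t in rare_f})
mapping.update({t: 'Rare_m' for t in rare_m})

toy['title'] = toy['title'].replace(mapping)

# Survival rate per merged title
rates = toy.groupby('title', as_index=False)['survived'].mean()
print(rates)
```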

2. Building the machine learning model

Convert the sex column to numbers with LabelEncoder
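`LabelEncoder` sorts the labels it sees and assigns each one its position in `classes_`, which is why female becomes 0 and male becomes 1 (the codes used for Rose and Jack at the end). A small sketch on a hypothetical toy column:

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical toy column (not the real data)
sex = pd.Series(['male', 'female', 'female', 'male'])

le = LabelEncoder()
codes = le.fit_transform(sex)  # fit + transform in one call

# classes_ is sorted; each label's code is its index in that array
mapping = {label: i for i, label in enumerate(le.classes_)}

print(mapping)
print(codes)
```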

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

le.fit(titanic['sex'])

titanic['gender'] = le.transform(titanic['sex'])

titanic.head(2)

le.classes_

>>>

array(['female', 'male'], dtype=object)

Drop missing values

titanic = titanic[titanic['age'].notnull()]

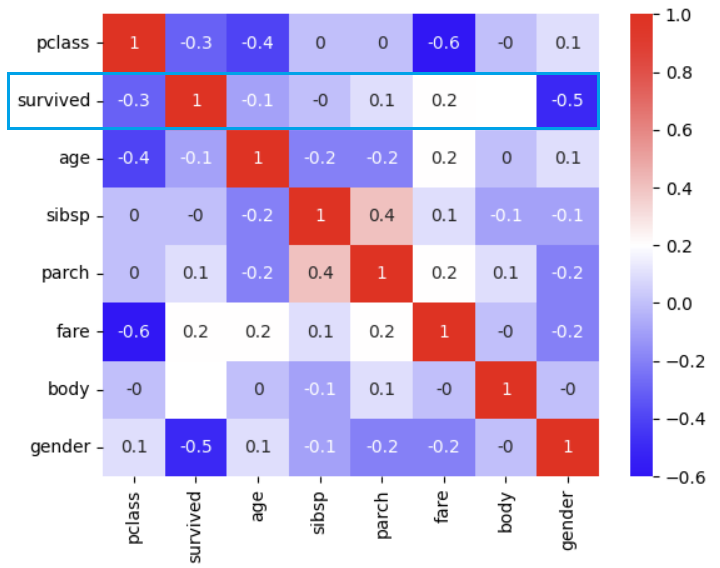

titanic = titanic[titanic['fare'].notnull()]상관관계 확인

correlation_matrix = titanic.corr().round(1)

sns.heatmap(data=correlation_matrix, annot=True, cmap='bwr');
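The dataset mixes text columns (name, sex, ...) with numbers, and `corr()` only makes sense for the numeric ones. A sketch on a hypothetical mixed-type toy frame shows that `numeric_only=True` simply leaves the text columns out of the matrix:

```python
import pandas as pd

# Hypothetical mixed-type frame: strings next to numbers, like the real dataset
toy = pd.DataFrame({
    'name':     ['A', 'B', 'C', 'D'],
    'sex':      ['male', 'female', 'female', 'male'],
    'pclass':   [3, 1, 2, 3],
    'fare':     [7.0, 80.0, 20.0, 8.0],
    'survived': [0, 1, 1, 0],
})

# Only numeric columns enter the correlation matrix
corr = toy.corr(numeric_only=True).round(1)
print(corr)
```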

Split into training and test sets

from sklearn.model_selection import train_test_split

X = titanic[['pclass', 'age', 'sibsp', 'parch', 'fare', 'gender']]

y = titanic['survived']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

Modeling and evaluation

from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import accuracy_score

dt = DecisionTreeClassifier(max_depth=4, random_state=13)

dt.fit(X_train, y_train)

pred = dt.predict(X_test)

accuracy_score(y_test, pred)

>>>

0.7655502392344498

What were Jack's and Rose's chances of survival?

# Jack

import numpy as np

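# feature order: pclass, age, sibsp, parch, fare, gender (scikit-learn may warn that a plain array has no feature names)

jack = np.array([[3, 18, 0, 0, 5, 1]])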

print('Jack :', dt.predict_proba(jack)[0, 1])

>>>

Jack : 0.16728624535315986

# Rose

rose = np.array([[1, 16, 1, 1, 100, 0]])

print('Rose :', dt.predict_proba(rose)[0, 1])

>>>

Rose : 1.0
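Since the model was fitted on a DataFrame, passing Jack and Rose as a DataFrame with the same column names keeps the feature order explicit and avoids the missing-feature-names warning. A sketch with a tiny hypothetical training set (only there to make it runnable; its probabilities say nothing about the real model):

```python
import pandas as pd
from sklearn.tree import DecisionTreeClassifier

features = ['pclass', 'age', 'sibsp', 'parch', 'fare', 'gender']

# Hypothetical toy training rows, only to make the example runnable
X_toy = pd.DataFrame([
    [3, 22, 1, 0, 7.25, 1],
    [1, 38, 1, 0, 71.3, 0],
    [3, 26, 0, 0, 7.9, 0],
    [1, 35, 1, 0, 53.1, 0],
    [3, 35, 0, 0, 8.05, 1],
    [2, 27, 0, 0, 13.0, 1],
], columns=features)
y_toy = pd.Series([0, 1, 1, 1, 0, 0])

dt = DecisionTreeClassifier(max_depth=4, random_state=13)
dt.fit(X_toy, y_toy)

# Same values as the jack / rose arrays above, but with named columns
passengers = pd.DataFrame([[3, 18, 0, 0, 5, 1],
                           [1, 16, 1, 1, 100, 0]],
                          columns=features, index=['Jack', 'Rose'])

# Column 1 of predict_proba is P(survived = 1), like [0, 1] above
proba = dt.predict_proba(passengers)[:, 1]
print(dict(zip(passengers.index, proba)))
```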