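💡 1. PyTorch

- Alongside TensorFlow, one of the most widely used frameworks for machine learning and deep learning

- It began as Torch, a framework built on the Lua language; PyTorch is the version rebuilt around Python

- Jointly developed by New York University and Facebook (now Meta), and today among the most popular machine learning and deep learning frameworks by number of users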

🐹 Importing the module

import torch

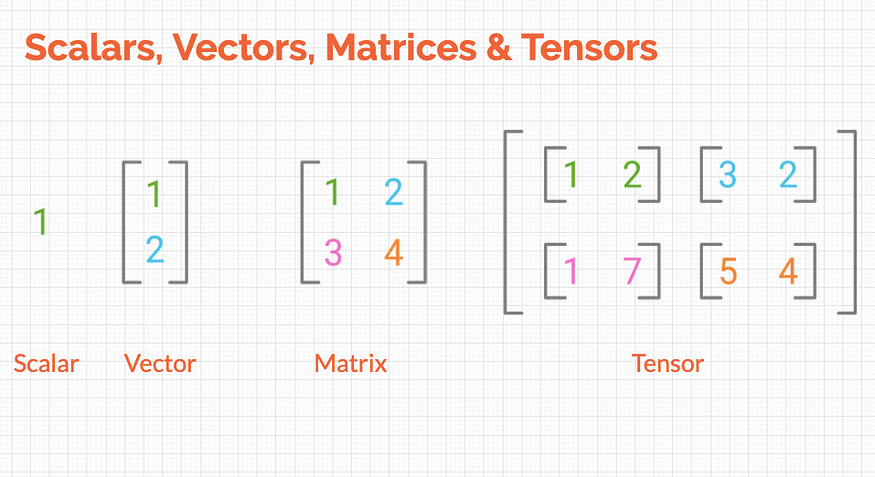

print(torch.__version__) # version

1-1. Scalar

A scalar is a single constant value.
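The examples in this section write a scalar as `torch.tensor([10])`, which is strictly a one-dimensional tensor holding a single element. A zero-dimensional tensor is created by passing the bare number instead. The sketch below contrasts the two; the names `s0`, `s1`, and `value` are illustrative and do not appear in the original notes.

```python
import torch

# A 0-dimensional tensor: the closest match to a mathematical scalar.
s0 = torch.tensor(10)
# A 1-dimensional tensor that happens to hold exactly one element.
s1 = torch.tensor([10])

print(s0.ndim, s0.shape)  # 0 torch.Size([])
print(s1.ndim, s1.shape)  # 1 torch.Size([1])

# .item() extracts the plain Python number from a one-element tensor.
value = s0.item()
print(type(value), value)  # <class 'int'> 10
```

Both forms behave the same in the arithmetic shown in this section; the difference only matters when the number of dimensions is inspected or a shape is required.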

var1 = torch.tensor([10]) # scalar

var1

> tensor([10])

type(var1)

> torch.Tensor

var2 = torch.tensor([10.5]) # other data types (here a float) also work

var2

> tensor([10.5000])

# Arithmetic on the two scalars

print(var1 + var2)

print(var1 - var2)

print(var1 * var2)

print(var1 / var2)

> tensor([20.5000])

> tensor([-0.5000])

> tensor([105.])

> tensor([0.9524])

1-2. Vector

A vector is a sequence of two or more constants.
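Element-wise arithmetic on vectors pairs entries by position, so the two shapes must either match or be broadcastable (one side has size 1 along the mismatched dimension). The following is a minimal sketch of that rule; the variable names are illustrative and not taken from the original notes.

```python
import torch

v = torch.tensor([1, 2, 3])
w = torch.tensor([10, 20, 30])
one = torch.tensor([100])    # size 1, so it broadcasts against size 3
two = torch.tensor([5, 10])  # size 2, which is incompatible with size 3

same_shape = v + w   # position-by-position sum
broadcast = v + one  # the single element is reused for every position

try:
    v + two
    mismatch_failed = False
except RuntimeError as err:
    # Sizes 3 and 2 cannot be broadcast against each other.
    mismatch_failed = True
    print("shape mismatch:", err)

print(same_shape)  # tensor([11, 22, 33])
print(broadcast)   # tensor([101, 102, 103])
```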

vec1 = torch.tensor([1, 2, 3])

vec1

> tensor([1, 2, 3])

vec2 = torch.tensor([1.4, 2.5, 3.3])

vec2

> tensor([1.4000, 2.5000, 3.3000])

# Arithmetic on the two vectors (computed element by element, position by position)

print(vec1 + vec2)

print(vec1 - vec2)

print(vec1 * vec2)

print(vec1 / vec2)

> tensor([2.4000, 4.5000, 6.3000])

> tensor([-0.4000, -0.5000, -0.3000])

> tensor([1.4000, 5.0000, 9.9000])

> tensor([0.7143, 0.8000, 0.9091])

vec3 = torch.tensor([5, 10])

vec3

> tensor([ 5, 10])

vec1 + vec3

> RuntimeError: The size of tensor a (3) must match the size of tensor b (2) at non-singleton dimension 0

1-3. Matrix

A matrix is built from two or more vectors: its data is arranged in rows and columns.
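One point that is easy to miss with matrices: the `*` operator multiplies element by element, whereas the matrix product from linear algebra is written with `@` (or `torch.matmul`). The sketch below shows the difference on two small matrices; `m1` and `m2` are hypothetical names chosen for this illustration.

```python
import torch

m1 = torch.tensor([[1, 2], [3, 4]])
m2 = torch.tensor([[7, 8], [9, 10]])

elementwise = m1 * m2  # multiplies entries that share the same position
product = m1 @ m2      # rows of m1 combined with columns of m2

print(elementwise)  # tensor([[ 7, 16], [27, 40]])
print(product)      # tensor([[25, 28], [57, 64]])

# torch.matmul is the function form of the @ operator.
print(torch.equal(product, torch.matmul(m1, m2)))  # True
```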

mat1 = torch.tensor([[1, 2], [3, 4]])

mat1

> tensor([[1, 2],

[3, 4]])

mat2 = torch.tensor([[7, 8], [9, 10]])

mat2

> tensor([[ 7, 8],

[ 9, 10]])

# Arithmetic on the two matrices

print(mat1 + mat2)

print(mat1 - mat2)

print(mat1 * mat2)

print(mat1 / mat2)

> tensor([[ 8, 10],

[12, 14]])

> tensor([[-6, -6],

[-6, -6]])

> tensor([[ 7, 16],

[27, 40]])

> tensor([[0.1429, 0.2500],

[0.3333, 0.4000]])

1-4. Tensor

- A stack of several matrices forms a tensor

- A specialized data structure very similar to arrays and matrices

- PyTorch uses tensors to handle a model's inputs, outputs, and parameters
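To make the bullets above concrete: scalars, vectors, matrices, and higher-order tensors are all `torch.Tensor` objects that differ only in their number of dimensions. The sketch below also shows that `torch.matmul` on a 3-D tensor multiplies the matching 2-D slices one pair at a time. The names `stack` and `batched` are illustrative assumptions, not part of the original notes.

```python
import torch

scalar = torch.tensor(1)
vector = torch.tensor([1, 2])
matrix = torch.tensor([[1, 2], [3, 4]])
# Stacking two 2x2 matrices gives a tensor of shape (2, 2, 2).
stack = torch.stack([matrix, matrix + 4])

dims = [t.ndim for t in (scalar, vector, matrix, stack)]
print(dims)         # [0, 1, 2, 3]
print(stack.shape)  # torch.Size([2, 2, 2])

# On 3-D inputs, matmul treats the first dimension as a batch:
# slice k of the result is the matrix product of slice k of each input.
batched = torch.matmul(stack, stack)
print(torch.equal(batched[0], stack[0] @ stack[0]))  # True
print(torch.equal(batched[1], stack[1] @ stack[1]))  # True
```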

tensor1 = torch.tensor([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])

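tensor1

> tensor([[[1, 2],

[3, 4]],

[[5, 6],

[7, 8]]])

tensor2 = torch.tensor([[[9, 10], [11, 12]], [[13, 14], [15, 16]]])

tensor2

> tensor([[[ 9, 10],

[11, 12]],

[[13, 14],

[15, 16]]])

# Arithmetic on tensors (the calls below combine tensor1 with itself)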

print(tensor1 + tensor1)

print(tensor1 - tensor1)

print(tensor1 * tensor1)

print(tensor1 / tensor1)

> tensor([[[ 2, 4],

[ 6, 8]],

[[10, 12],

[14, 16]]])

> tensor([[[0, 0],

[0, 0]],

[[0, 0],

[0, 0]]])

> tensor([[[ 1, 4],

[ 9, 16]],

[[25, 36],

[49, 64]]])

> tensor([[[1., 1.],

[1., 1.]],

[[1., 1.],

[1., 1.]]])

# Arithmetic using functions

print(torch.add(tensor1, tensor2))

print(torch.subtract(tensor1, tensor2))

print(torch.multiply(tensor1, tensor2))

print(torch.divide(tensor1, tensor2))

print(torch.matmul(tensor1, tensor2)) # matrix product

> tensor([[[10, 12],

[14, 16]],

[[18, 20],

[22, 24]]])

> tensor([[[-8, -8],

[-8, -8]],

[[-8, -8],

[-8, -8]]])

> tensor([[[ 9, 20],

[ 33, 48]],

[[ 65, 84],

[105, 128]]])

> tensor([[[0.1111, 0.2000],

[0.2727, 0.3333]],

[[0.3846, 0.4286],

[0.4667, 0.5000]]])

> tensor([[[ 31, 34],

[ 71, 78]],

[[155, 166],

[211, 226]]])

print(tensor1.add_(tensor2)) # in-place: the result is stored back into tensor1

print(tensor1.subtract_(tensor2))

> tensor([[[10, 12],

[14, 16]],

[[18, 20],

[22, 24]]])

> tensor([[[1, 2],

[3, 4]],

[[5, 6],

[7, 8]]])

💡 2. PyTorch and tensors
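This section moves data between Python lists, NumPy arrays, and tensors. One detail worth keeping in mind is that `torch.tensor` copies its input, whereas `torch.from_numpy` and `Tensor.numpy()` share memory with the array. The sketch below illustrates the difference; the names `arr`, `copied`, `shared`, and `back` are illustrative only.

```python
import numpy as np
import torch

arr = np.array([[1, 2], [3, 4]])

copied = torch.tensor(arr)      # independent copy of the data
shared = torch.from_numpy(arr)  # tensor backed by the same memory as arr

arr[0, 0] = 99  # modify the NumPy array in place

print(copied[0, 0].item())  # 1  (the copy is unaffected)
print(shared[0, 0].item())  # 99 (the shared tensor sees the change)

# Tensor.numpy() on a CPU tensor also returns an array over the same memory.
back = shared.numpy()
print(np.shares_memory(back, arr))  # True
```

When an independent array or tensor is needed, copying explicitly (as `torch.tensor` does) avoids surprises from this sharing.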

data = [[1, 2], [3, 4]]

data

> [[1, 2], [3, 4]]

data_tensor = torch.tensor(data) # list -> tensor

data_tensor

> tensor([[1, 2],

[3, 4]])

import numpy as np

ndarray = np.array(data_tensor) # tensor -> NumPy array

ndarray

> array([[1, 2],

[3, 4]])

data_tensor = torch.tensor(ndarray) # NumPy array -> tensor

data_tensor

> tensor([[1, 2],

[3, 4]])

a = torch.ones(2, 3) # 2x3 matrix with every element set to 1

a

> tensor([[1., 1., 1.],

[1., 1., 1.]])

b = torch.zeros(3, 4) # 3x4 matrix with every element set to 0

b

> tensor([[0., 0., 0., 0.],

[0., 0., 0., 0.],

[0., 0., 0., 0.]])

c = torch.full((3, 4), 7) # 3x4 matrix with every element set to 7

c

> tensor([[7, 7, 7, 7],

[7, 7, 7, 7],

[7, 7, 7, 7]])

d = torch.empty(2, 3) # 2x3 matrix of uninitialized (arbitrary) values

d

> tensor([[ 0.0000e+00, 0.0000e+00, -8.1644e+17],

[ 3.1483e-41, -1.2856e+17, 3.1483e-41]])

e = torch.eye(5) # 5x5 identity matrix

print(e)

> tensor([[1., 0., 0., 0., 0.],

[0., 1., 0., 0., 0.],

[0., 0., 1., 0., 0.],

[0., 0., 0., 1., 0.],

[0., 0., 0., 0., 1.]])

f = torch.arange(10) # integers 0 through 9

f

> tensor([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])

g = torch.rand(2, 3) # 2x3 random values drawn uniformly from [0, 1)

g

> tensor([[0.2585, 0.7093, 0.5408],

[0.2508, 0.8948, 0.3658]])

h = torch.randn(2, 3) # random samples from a normal distribution with mean 0 and standard deviation 1

h

> tensor([[ 0.4006, -0.1566, 1.3604],

[ 0.1733, 0.8811, -1.5512]])

i = torch.arange(16).reshape(2, 2, 4) # two 2x4 matrices (shape (a, b, c) means a matrices of b rows and c columns)

i, i.shape

> (tensor([[[ 0, 1, 2, 3],

[ 4, 5, 6, 7]],

[[ 8, 9, 10, 11],

[12, 13, 14, 15]]]),

torch.Size([2, 2, 4]))

# permute(): reorder the dimensions according to the given indices

# i.shape = ([2, 2, 4])

j = i.permute((2, 1, 0)) # [2, 2, 4] -> [4, 2, 2]

j, j.shape

> (tensor([[[ 0, 8],

[ 4, 12]],

[[ 1, 9],

[ 5, 13]],

[[ 2, 10],

[ 6, 14]],

[[ 3, 11],

[ 7, 15]]]),

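torch.Size([4, 2, 2]))

a = torch.arange(1, 13).reshape(3, 4)

a

> tensor([[ 1, 2, 3, 4],

[ 5, 6, 7, 8],

[ 9, 10, 11, 12]])

a[1] # second row

> tensor([5, 6, 7, 8])

a[0, -1] # first row, last column

> tensor(4)

a[1:-1] # rows from index 1 up to, but not including, the last row

> tensor([[5, 6, 7, 8]])

a[:2, 2:] # first two rows, columns from index 2 onward

> tensor([[3, 4],

[7, 8]])

💡 3. Using a GPU in Colab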

How to change the device in Colab:

Top menu -> Runtime -> Change runtime type -> set Hardware accelerator to GPU -> Save -> Restart session and run all
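After switching the runtime, it helps to confirm from code that PyTorch can see the GPU and to place tensors on it. The sketch below is one common pattern, not something from the original notes: it falls back to the CPU when no GPU is available, and the names `device`, `x`, `y`, and `z` are illustrative.

```python
import torch

# Pick the GPU when one is visible to PyTorch, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(device)

x = torch.ones(2, 3, device=device)           # create directly on the device
y = torch.arange(6).reshape(2, 3).to(device)  # create on CPU, then move

# Both operands must live on the same device for arithmetic to work.
z = x + y
print(z.device.type == device.type)  # True

# .cpu() brings the result back to host memory, e.g. before converting to NumPy.
print(z.cpu())
```

Because the device is chosen at runtime, the same cell runs unchanged on a CPU-only session.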