💡 1. 단항 선형 회귀

한 개의 입력이 들어가서 한 개의 출력이 나오는 구조

import torch

import torch.nn as nn

import torch.optim as optim

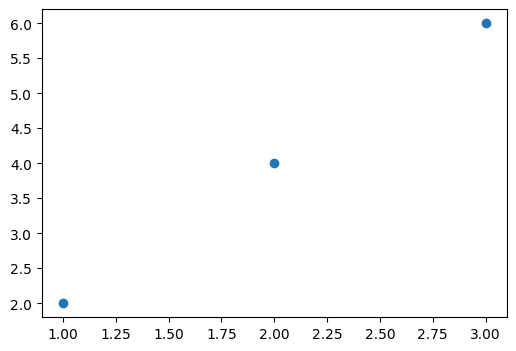

import matplotlib.pyplot as plt torch.manual_seed(2024)> <torch._C.Generator at 0x7f09a27705f0>x_train = torch.FloatTensor([[1], [2], [3]])

y_train = torch.FloatTensor([[2], [4], [6]])

print(x_train, x_train.shape)

print(y_train, y_train.shape)> tensor([[1.],

[2.],

[3.]]) torch.Size([3, 1])

> tensor([[2.],

[4.],

[6.]]) torch.Size([3, 1])plt.figure(figsize=(6, 4))

plt.scatter(x_train, y_train)

# y = Wx + b

model = nn.Linear(1, 1) # 데이터 하나 들어오면 출력 하나

model> Linear(in_features=1, out_features=1, bias=True)y_pred = model(x_train) # 아직은 학습 안돼있음

y_pred> tensor([[0.7260],

[0.7894],

[0.8528]], grad_fn=<AddmmBackward0>)list(model.parameters()) # W: 0.0634, b: 0.6625

# y = Wx + b

# x=1, 0.0634*1 + 0.6625 = 0.7259

# x=2, 0.0634*2 + 0.6625 = 0.7893> [Parameter containing:

tensor([[0.0634]], requires_grad=True),

Parameter containing:

tensor([0.6625], requires_grad=True)]((y_pred - y_train)**2).mean() # 오차 계산> tensor(12.8082, grad_fn=<MeanBackward0>)loss = nn.MSELoss()(y_pred, y_train) # 함수로 오차 계산

loss> tensor(12.8082, grad_fn=<MseLossBackward0>)mse = nn.MSELoss() # 객체 생성

mse(y_pred, y_train)> tensor(12.8082, grad_fn=<MseLossBackward0>)💡 2. 최적화(Optimization)

- 학습 모델의 손실함수(loss function)의 최소값을 찾아가는 과정

- 학습 데이터를 입력하여 파라미터를 걸쳐 예측 값을 받음 -> 예측 값과 실제 정답과의 차이를 비교하는 것이 손실함수이고, 예측값과 실젯값의 차이를 최소화하는 파라미터를 찾는 과정이 최적화

2-1. 경사하강법(Gradient Descent)

- 딥러닝 알고리즘 학습 시 사용되는 최적화 방법 중 하나

- 최적화 알고리즘을 통해 a와 b를 찾아내는 과정을 '학습'이라고 부름

2-2. 학습률(Learning rate)

- 한 번의 W를 움직이는 거리(increment step)

- 0~1 사이의 실수

- 학습률이 너무 크면 한 지점으로 수렴하는 것이 아니라 발산할 가능성이 존재

- 학습률이 너무 작으면 수렴이 늦어지고, 시작점을 어디로 잡느냐에 따라 수렴 지점이 달라짐

2-3. 경사 하강법의 한계

- 많은 연산량과 컴퓨터 자원을 소모

- 데이터(입력값) 하나가 모델을 지날 때마다 모든 가중치를 한 번씩 업데이트 함

- 가중치가 적은 모델의 경우 문제가 없으나, 모델의 가중치가 매우 많다면 모든 가중치에 대해 연산을 적용하기 때문에 많은 연산량을 요구

- Global Minimum은 목표 함수 그래프 전체를 고려했을 때 최솟값을 의미하고, Local Minimum은 그래프 내 일부만 고려했을 때 최솟값을 의미 -> 경사 하강법으로 최적의 값인 줄 알았던 값이 Local Minimum으로 결과가 나올 수 있음

# SGD(Stochastic Gradient Descent)

# 랜덤하게 데이터를 하나씩 뽑아서 loss를 만듦

# 데이터를 넣고 다시 데이터를 뽑고 반복

# 빠르게 방향을 결정

optimizer = optim.SGD(model.parameters(), lr=0.01) # 옵티마이저 객체loss = nn.MSELoss()(y_pred, y_train)# optimizer를 초기화

# loss.backward() 호출될 때 초기설정은 gradient를 더해주는 것으로 되어 있음

# 학습 loop를 돌 때 이상적으로 학습이 이루어지기 위해서 한 번의 학습이 완료되면 graidient를 항상 0으로 만들어줘야 함

optimizer.zero_grad()

# 역전파: 비용 함수를 미분하여 gradient 계산

loss.backward()

# 가중치 업데이트: 계산된 gradient를 사용하여 파라미터를 업데이트

optimizer.step()# list(model.parameters()) # W: 0.0634, b: 0.6625

list(model.parameters()) # W: 0.2177 b: 0.7267> [Parameter containing:

tensor([[0.2177]], requires_grad=True),

Parameter containing:

tensor([0.7267], requires_grad=True)]# 반복 학습을 통해 오차가 있는 W, b를 수정하면서 오차를 계속 줄여나감

# epochs: 반복 학습 횟수(에포크)

epochs = 1000

for epoch in range(epochs + 1):

y_pred = model(x_train)

loss = nn.MSELoss()(y_pred, y_train)

optimizer.zero_grad()

loss.backward()

optimizer.step()

if epoch % 100 == 0:

print(f'Epoch: {epoch}/{epochs} Loss: {loss:.6f}')> Epoch: 0/1000 Loss: 10.171454

> Epoch: 100/1000 Loss: 0.142044

> Epoch: 200/1000 Loss: 0.087774

> Epoch: 300/1000 Loss: 0.054239

> Epoch: 400/1000 Loss: 0.033517

> Epoch: 500/1000 Loss: 0.020711

> Epoch: 600/1000 Loss: 0.012798

> Epoch: 700/1000 Loss: 0.007909

> Epoch: 800/1000 Loss: 0.004887

> Epoch: 900/1000 Loss: 0.003020

> Epoch: 1000/1000 Loss: 0.001866print(list(model.parameters())) # W: 1.9499, b: 0.1138> [Parameter containing:

tensor([[1.9499]], requires_grad=True), Parameter containing:

tensor([0.1138], requires_grad=True)]x_test = torch.FloatTensor([[5]])

y_pred = model(x_test)

y_pred> tensor([[9.8635]], grad_fn=<AddmmBackward0>)💡 3. 다중 선형 회귀

- 여러 개의 입력이 들어가서 한 개의 출력이 나오는 구조

X_train = torch.FloatTensor([[73, 80, 75],

[93, 88, 93],

[89, 91, 90],

[96, 98, 100],

[73, 66, 70]])

y_train = torch.FloatTensor([[220], [270], [265], [290], [200]])print(X_train, X_train.shape)

print(y_train, y_train.shape)> tensor([[ 73., 80., 75.],

[ 93., 88., 93.],

[ 89., 91., 90.],

[ 96., 98., 100.],

[ 73., 66., 70.]]) torch.Size([5, 3])

> tensor([[220.],

[270.],

[265.],

[290.],

[200.]]) torch.Size([5, 1])# y = w1x1 + w2x2 + w3x3 + b

model = nn.Linear(3, 1) # 입력3개 출력1개

model> Linear(in_features=3, out_features=1, bias=True)optimizer = optim.SGD(model.parameters(), lr=0.00001)epochs = 20000

for epoch in range(epochs + 1):

y_pred = model(X_train)

loss = nn.MSELoss()(y_pred, y_train)

optimizer.zero_grad()

loss.backward()

optimizer.step()

if epoch % 100 == 0:

print(f'Epoch: {epoch}/{epochs} Loss: {loss:.6f}')> Epoch: 0/20000 Loss: 75967.109375

> Epoch: 100/20000 Loss: 15.825180

> Epoch: 200/20000 Loss: 15.499573

...list(model.parameters()) # W: 0.6814, 0.8616, 1.3889 b: -0.2950> [Parameter containing:

tensor([[0.6814, 0.8616, 1.3889]], requires_grad=True),

Parameter containing:

tensor([-0.2950], requires_grad=True)]x_test = torch.FloatTensor([[100, 100, 100]])

y_pred = model(x_test)

y_pred> tensor([[292.8991]], grad_fn=<AddmmBackward0>)

```~~텍스트~~