Serverless vs. Containers

We have so-called serverless approaches that are rapidly growing in popularity.

The name serverless is a bit deceiving: we still have servers, but the cloud provider manages their configuration, and we don't worry about things like which operating system we'll be using.

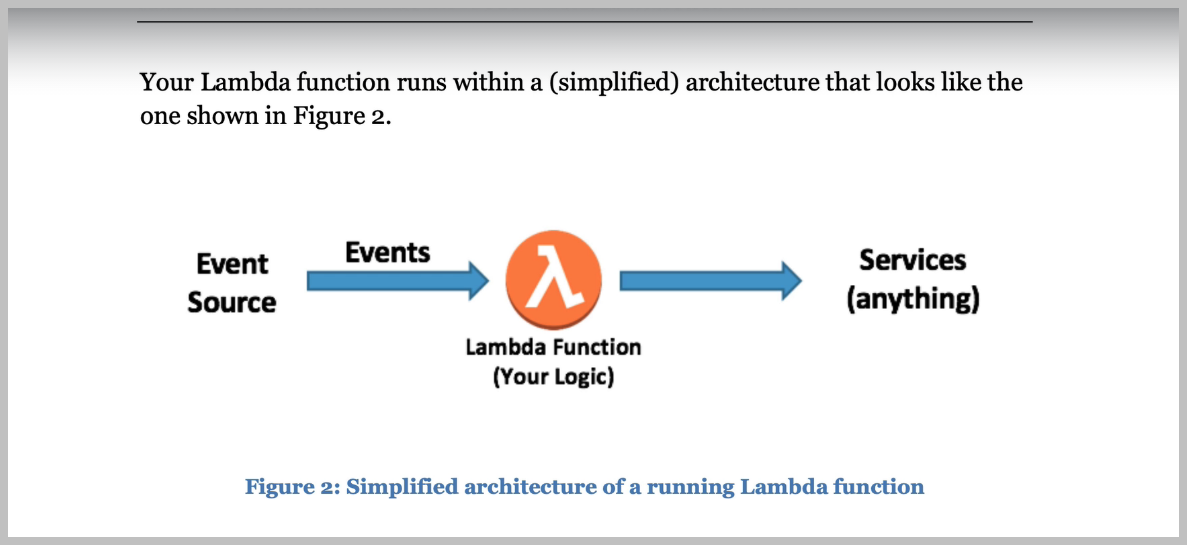

You might have heard of AWS Lambda, which is a popular serverless platform from Amazon.

Docker allows us to build containers that bundle and share our applications so they can run on any computer or server.

Docker containers are small boxes containing our applications that run anywhere on our desktop, using

However, it shares the operating system it's running on, while virtual machines have to create this

For now, just remember that containers contain your running application, while images are a collection of all the files and dependencies that a container needs to run. Docker Hub has a large collection of these images, and we can browse it at hub.docker.com.

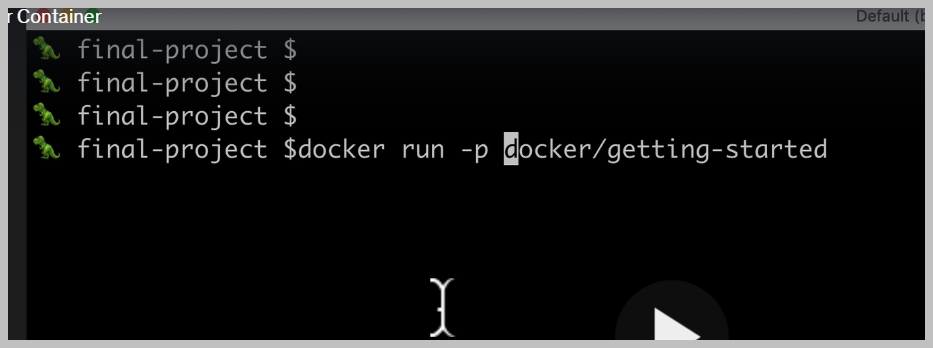

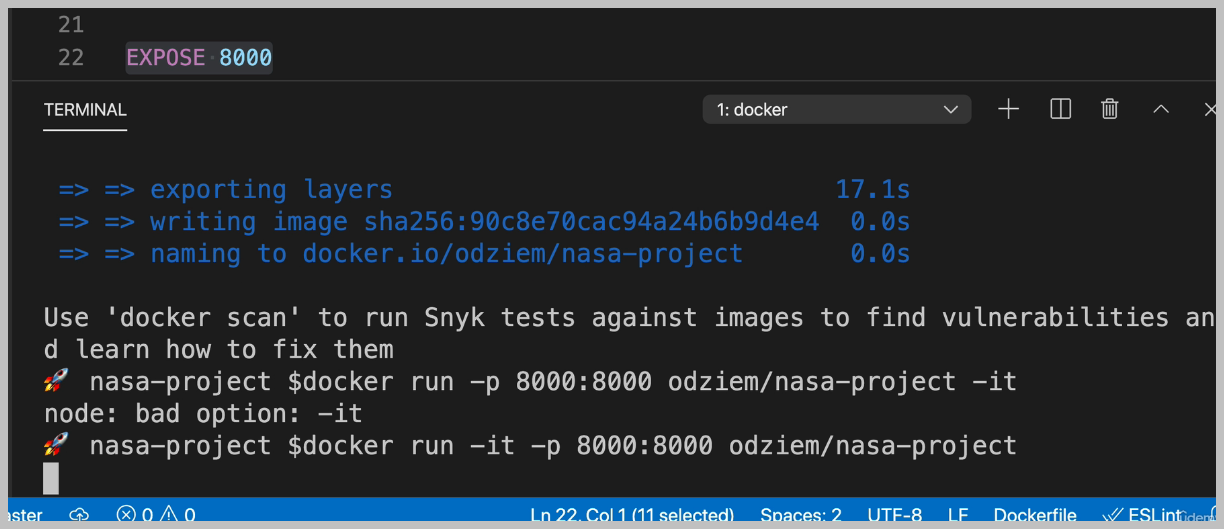

The -p flag stands for publish: it maps a port from our container to a port on my computer, which is called port forwarding. For example, port 80 on the container maps to port 80 on my computer, so we can now access the server running on port 80 in our container.

How do we use this to deploy our Node applications?
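As a rough sketch of the port mapping described above, the publish flag takes the form HOST_PORT:CONTAINER_PORT. The image name `nginx` is an assumption picked for illustration (the notes don't name the image), and the `run` helper is a hypothetical dry-run wrapper that only prints the command unless `RUN=1` is set, so pasting the snippet doesn't start anything by accident.

```shell
# Dry-run helper (hypothetical, for illustration): prints the command
# unless RUN=1 is set in the environment, in which case it executes it.
run() { if [ "${RUN:-0}" = 1 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

IMAGE="nginx"   # assumed example image; the notes don't name one

# -p HOST_PORT:CONTAINER_PORT publishes container port 80 on host port 80.
run docker run -p 80:80 "$IMAGE"
```

With a mapping such as `-p 8000:80`, the same container port would instead be reachable on port 8000 of the host.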

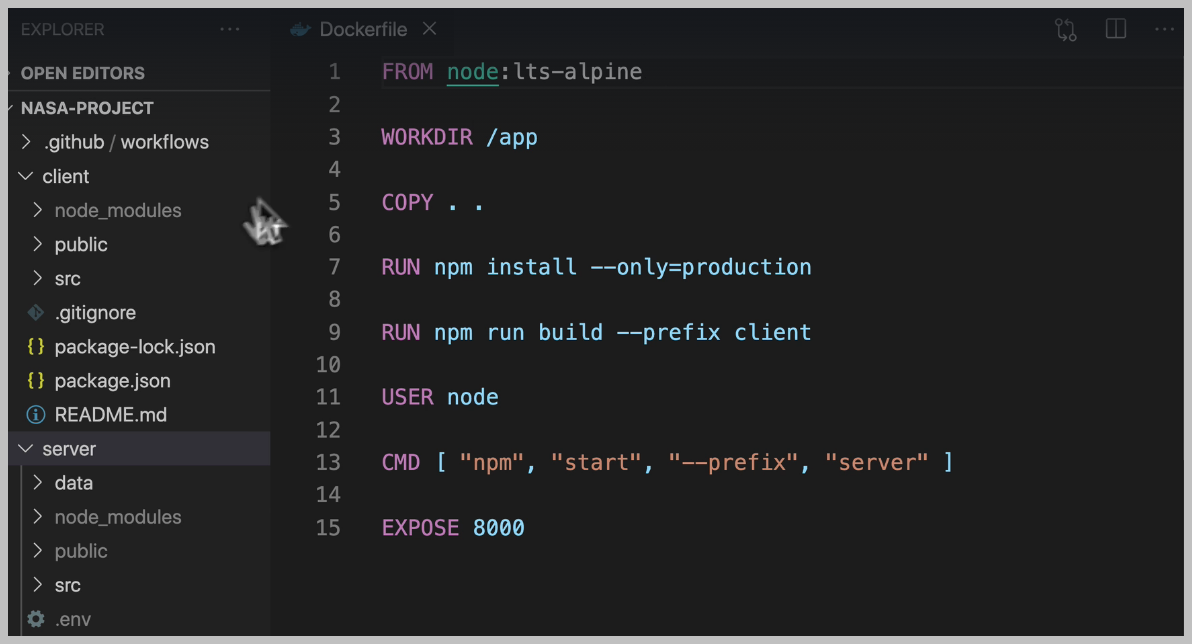

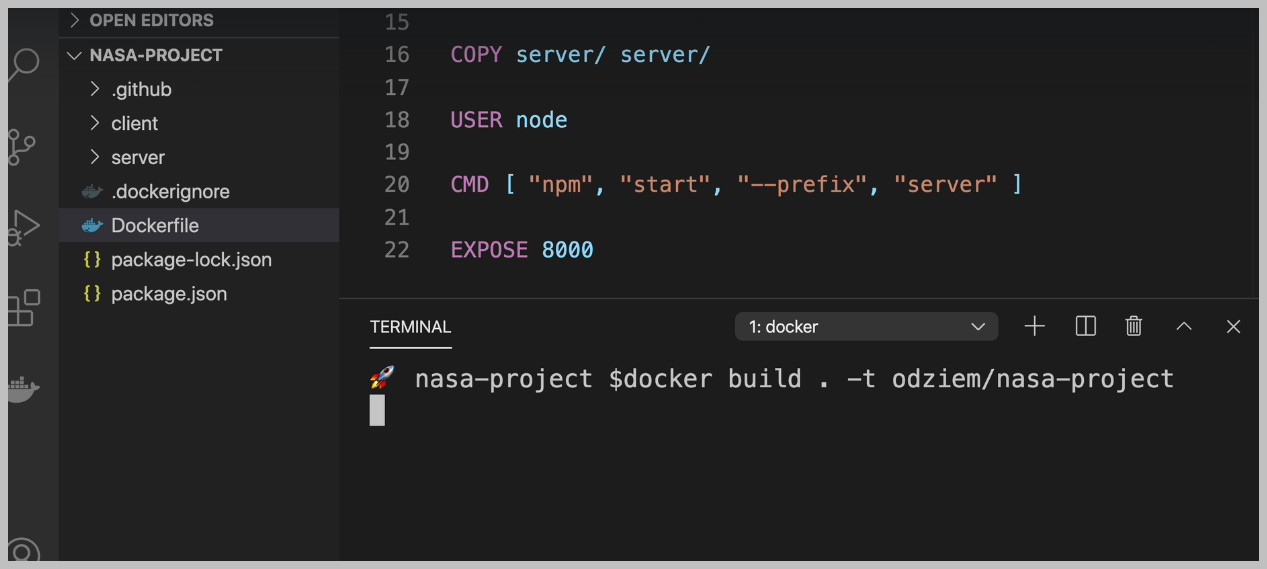

We start by building a Docker image.

For our application, this will be a Docker image that contains all of our Node files and dependencies, everything required to run our project.

Long story short, we want to make sure that we're not copying our existing node_modules, but installing them fresh when we build our Docker image, so that they're set up specifically for the operating system used in the image.

The .dockerignore file is a list of files and folders that we want to exclude from our Docker image when we use the COPY command.

Now our local dependencies, the ones installed on our machine, will not be sent to our Docker image, which makes sure that all of our packages and dependencies are installed fresh, specifically for the operating system used in our Docker image.

It's also a good idea to exclude any build artifacts from our local machine, that is, anything that can be derived from the rest of our source code.

For example, the public folder in our server is the result of running the client's npm build script. Excluding it makes sure that everything is built from scratch in Docker.
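A minimal sketch of such an ignore file follows. The layout is an assumption based on the notes (a `server/public` folder holding the client build output), and a throwaway directory stands in for the project root so the snippet can be run without touching a real project.

```shell
# A throwaway directory stands in for the project root in this sketch.
PROJECT_ROOT="$(mktemp -d)"

# Write a .dockerignore that keeps local dependencies and build output
# out of the Docker build context.
cat > "$PROJECT_ROOT/.dockerignore" <<'EOF'
# Dependencies installed on the local machine (at any depth)
**/node_modules
# Build artifact produced by the client build (assumed path)
server/public
EOF

# Show what was written.
cat "$PROJECT_ROOT/.dockerignore"
```

In a real project the file would sit next to the Dockerfile, at the root of the build context.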

AWS

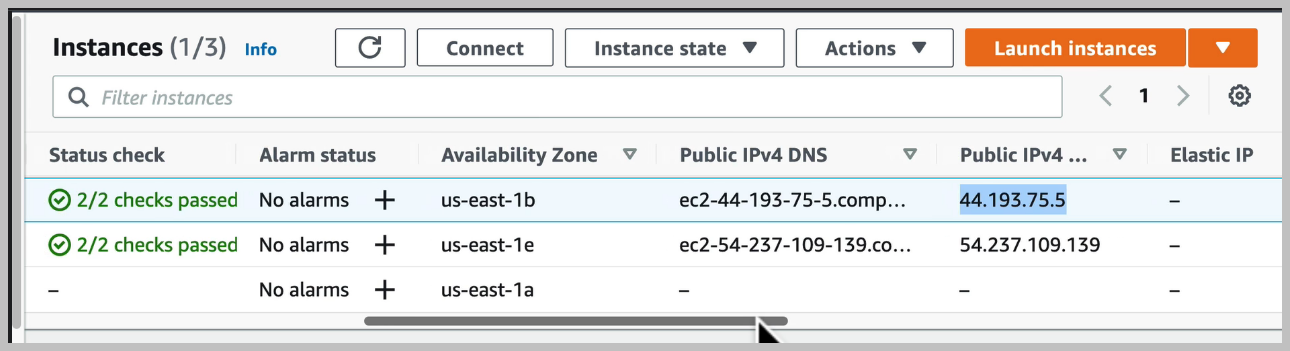

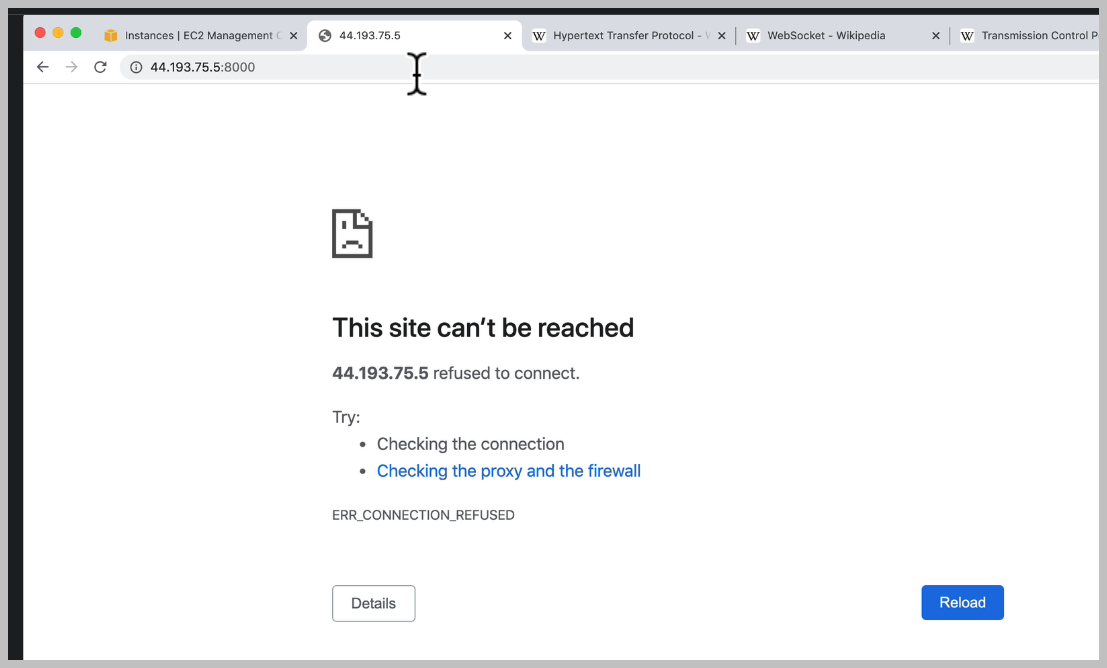

There's nothing actually running on our server, even though our port is exposed. We need to deploy our application, and we're going to do that next.

SSH

SSH is a cryptographic network protocol for operating network services securely over an unsecured network.

SSH is a comparable secure protocol for communicating between two devices: not a browser and an HTTP server, but two terminals, two shells.

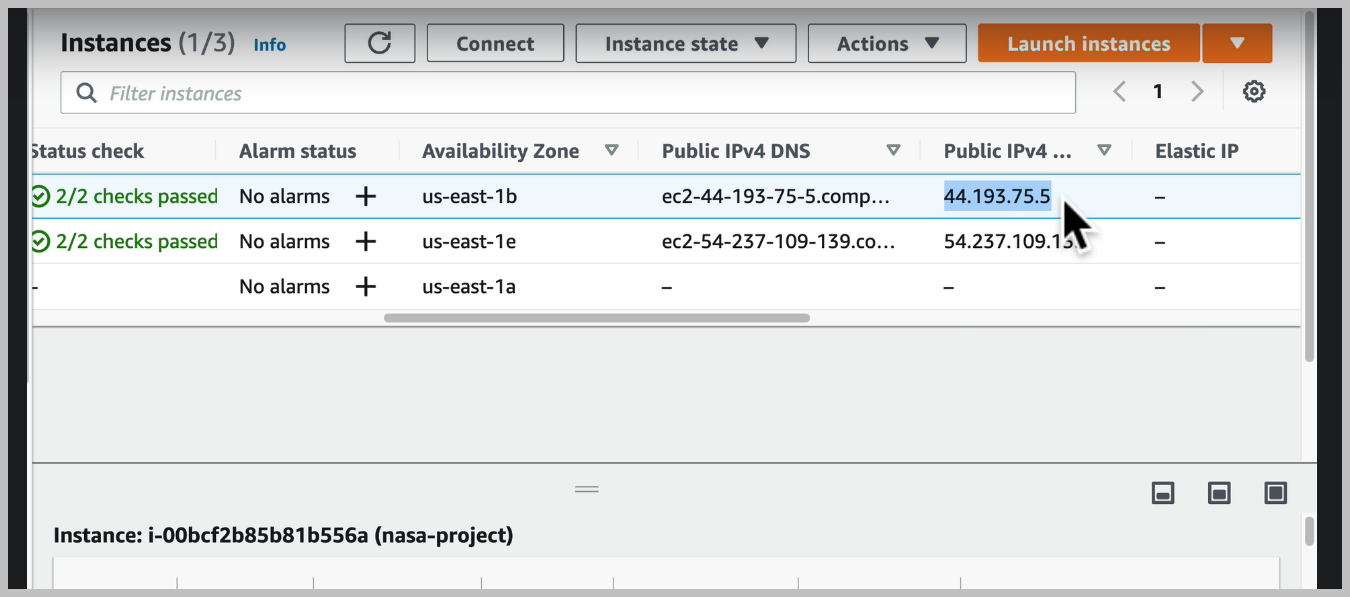

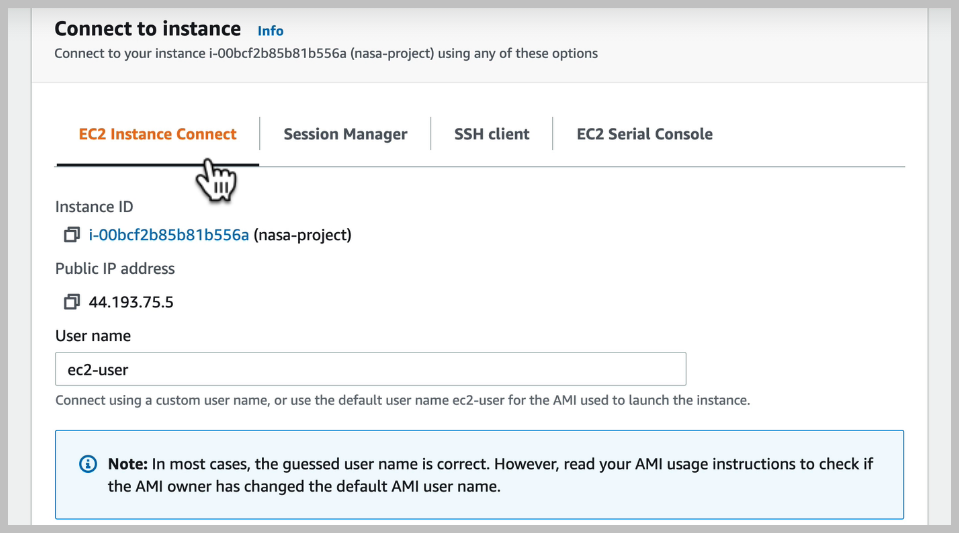

Connecting to the EC2 Instance over SSH

Click Connect to see instructions on how to access our instance using an SSH client.

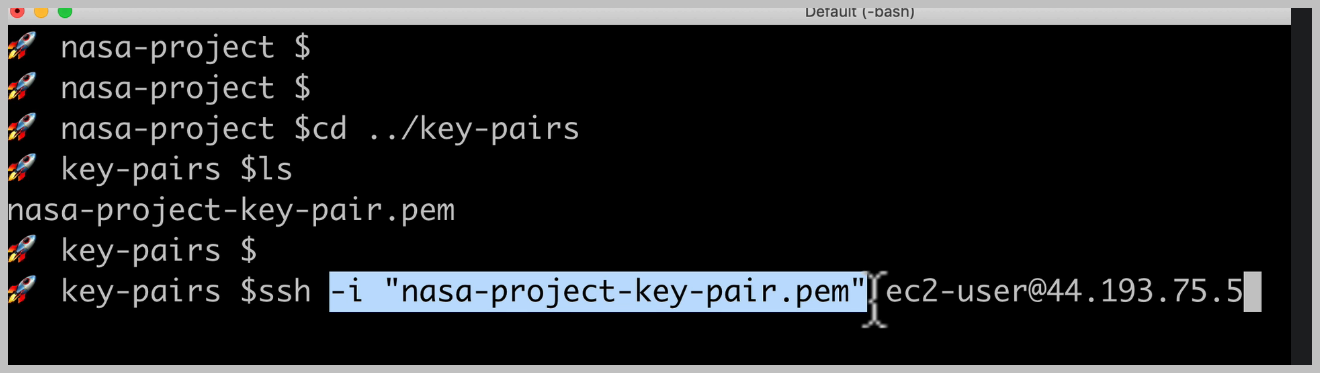

We have to locate our private key file, which we remember is the .pem file that we downloaded. This is what gives our computer access to the Amazon EC2 server that we set up.

And we can see that we're going to need to run some commands in our terminal, Amazon even gives us

So I'm going to copy that and in our terminal, I'm going to go to the folder where I have that private

It's important that we don't include this private key in our repository with our source code.

This should live in a separate location, which we never share with anyone else on the internet.

This will ensure that SSH uses this key to try to connect to our server.
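For reference, a connection command of this kind might look like the sketch below. The key path and host are placeholders rather than values from the notes; `ec2-user` is the user these notes refer to later. The hypothetical `run` helper only prints the command unless `RUN=1` is set.

```shell
# Dry-run helper (hypothetical, for illustration): prints the command
# unless RUN=1 is set in the environment, in which case it executes it.
run() { if [ "${RUN:-0}" = 1 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

KEY_FILE="$HOME/keys/my-key.pem"            # placeholder path, kept outside the repository
HOST="ec2-user@your-instance-public-dns"    # placeholder; copy the real value from the Connect page

# -i selects the identity (private key) file that ssh offers to the server.
run ssh -i "$KEY_FILE" "$HOST"
```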

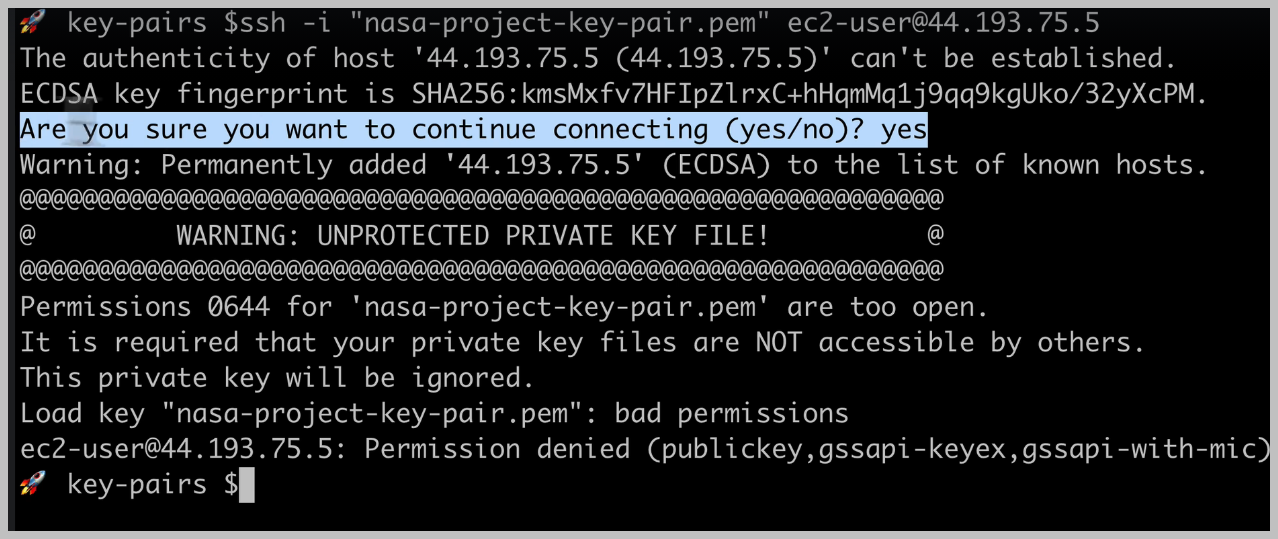

And we can see that SSH has now permanently added this server to a list of known hosts.

sending our commands to the right server. On macOS or Linux, we'll get this warning, which tells us that the permissions for our PEM file are too open, and SSH refuses the connection.

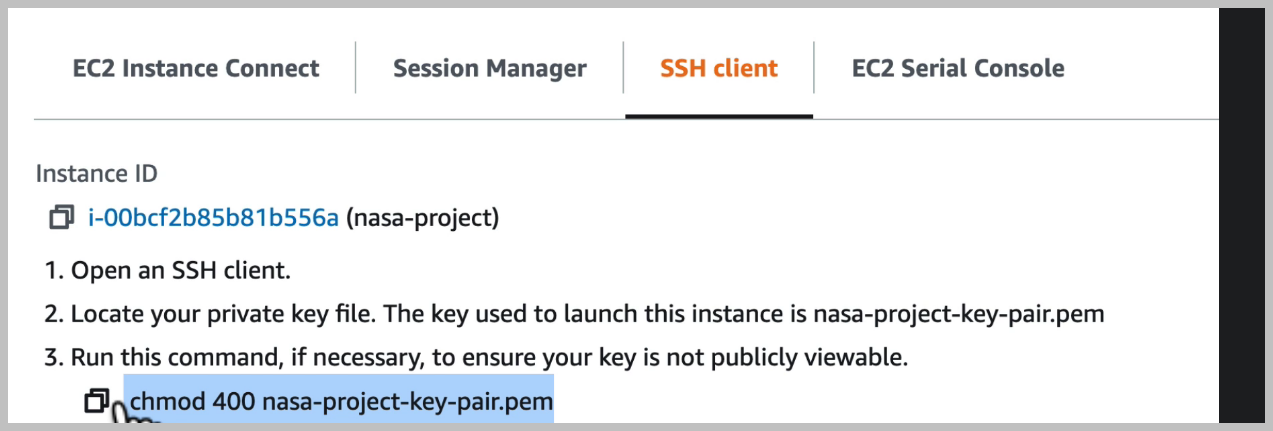

We use the chmod command to lock down the permissions for our key file.

We could talk about Linux and macOS permissions for a whole series of videos, but essentially this command makes the private key file accessible only to my user and not to other users on my computer.

It also sets the file as read-only so that it is never modified.
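The effect described above corresponds to mode 400 (read-only for the owner, nothing for anyone else). The sketch below applies it to a temporary stand-in file rather than a real key, so it can be tried safely; the file name is an assumption.

```shell
# A temporary file stands in for the downloaded .pem key in this sketch.
KEY_FILE="$(mktemp)"

# 400: the owner may read the file; nobody may write or execute it,
# and group/other users get no access at all.
chmod 400 "$KEY_FILE"

# Show the resulting permission bits.
ls -l "$KEY_FILE"
```

On the real key, the same `chmod 400` would be run against the .pem file's path.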

Now, if we go back to the EC2 dashboard, we can see that there's another option here to connect. This uses a browser-based SSH client, and we can see it by pressing Connect here.

Setting Up the EC2 Server

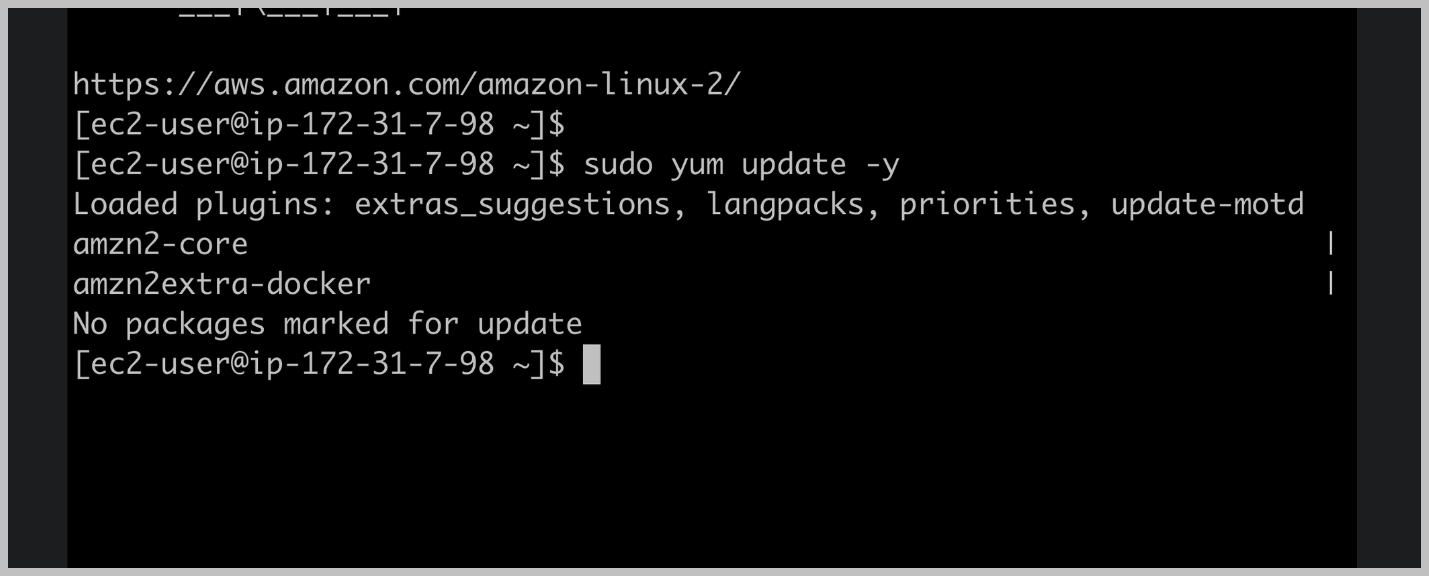

We need to install Docker so that we can run our Docker container inside of Amazon Linux here.

We have a shell which allows us to run Linux commands.

One command that we can use on Amazon Linux is the yum command. Yum is a package manager similar to npm for Node. It allows us to install and update the applications and packages that we have on Amazon Linux.

We are going to use this yum tool to install Docker.

We'll use the yum update command, passing in the -y flag, which confirms that yes, we do want to update everything that can be updated. We'll run the command as an administrative user, so we add the sudo command to the start of it.

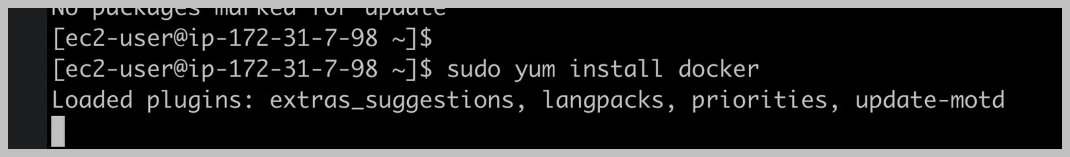

In this case, we have this docker package, and we're going to install it as an administrative user again because we're making changes to our machine.
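The update and install steps might be sketched as follows. The commands are meant for the Amazon Linux shell; the hypothetical `run` helper only prints them unless `RUN=1` is set, so the snippet is harmless on a machine without yum.

```shell
# Dry-run helper (hypothetical, for illustration): prints the command
# unless RUN=1 is set in the environment, in which case it executes it.
run() { if [ "${RUN:-0}" = 1 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

# Update every installed package; -y answers "yes" to the confirmation prompt.
run sudo yum update -y

# Install the docker package (without -y, yum asks for confirmation first).
run sudo yum install docker
```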

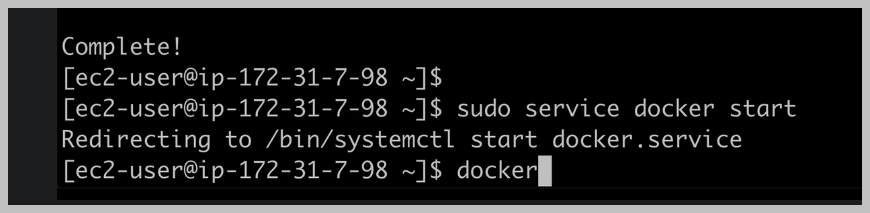

Now we need to start Docker inside our EC2 instance. Docker is a service that runs in the background of our server, so we use sudo with the service command: service docker start starts the Docker service in the background.

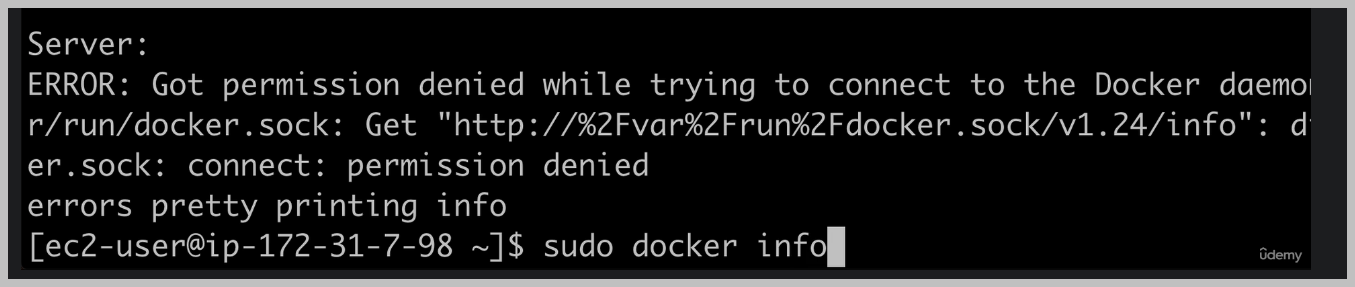

We type sudo and then the command we want to run, so we can get information about Docker with docker info when we use the sudo command.
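Starting the service and checking it could look like this sketch. As before, the `run` helper is a hypothetical dry-run wrapper that prints the commands unless `RUN=1` is set; on the instance itself they would be typed directly.

```shell
# Dry-run helper (hypothetical, for illustration): prints the command
# unless RUN=1 is set in the environment, in which case it executes it.
run() { if [ "${RUN:-0}" = 1 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

# Start the Docker daemon as a background service.
run sudo service docker start

# Ask the daemon for its status and configuration.
run sudo docker info
```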

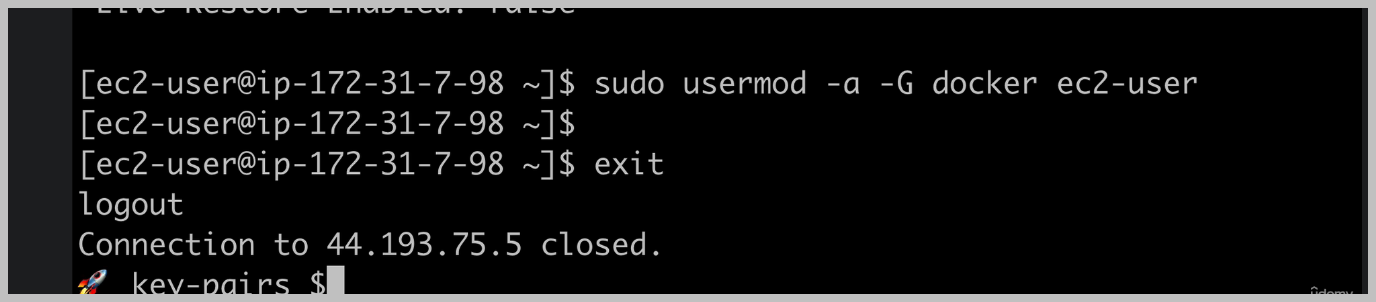

Now, if we want to run Docker commands without using sudo to run them as an administrative user with full administrative privileges on our server, we first need to run this sudo usermod command.

What we'll do is add the ec2-user to the docker group so that ec2-user has access to Docker. We add the user to the group using the -a flag.

For this change to be picked up inside the shell, we first need to exit to log out of our EC2 instance.

Let me clear the console and log right back in. Hopefully now we can run docker info without running it as the administrative user with the sudo command.
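A sketch of the group change, assuming the group is named `docker` and the user is `ec2-user` as in the notes. The `-a` (append) flag is used together with `-G`, which names the supplementary group. The hypothetical `run` helper prints the commands unless `RUN=1` is set.

```shell
# Dry-run helper (hypothetical, for illustration): prints the command
# unless RUN=1 is set in the environment, in which case it executes it.
run() { if [ "${RUN:-0}" = 1 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

# Append (-a) ec2-user to the supplementary group (-G) named docker.
run sudo usermod -a -G docker ec2-user

# After logging out and back in, Docker should work without sudo.
run docker info
```

Without `-a`, `usermod -G` would replace the user's existing supplementary groups instead of adding to them.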

We're now following the principle of least privilege when running Docker commands.

The first of the instructions is going to select the base image using the FROM statement.
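As a small illustration of that first instruction, the sketch below writes a one-line Dockerfile into a throwaway directory. The base image tag `node:lts-alpine` is an assumption; the notes only say that a base image is selected with FROM.

```shell
# A throwaway directory stands in for the project root in this sketch.
BUILD_DIR="$(mktemp -d)"

# Write a Dockerfile whose first instruction selects the base image.
cat > "$BUILD_DIR/Dockerfile" <<'EOF'
# Base image: an official Node image from Docker Hub (assumed tag).
FROM node:lts-alpine
EOF

# Show what was written.
cat "$BUILD_DIR/Dockerfile"
```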