🍦 모델 설계

실습 - 피마 인디언의 당뇨병 예측

model = Sequential()

model.add(Dense(12, input_dim=8, activation='relu', name='Dense_1'))

model.add(Dense(8, activation='relu', name='Dense_2'))

model.add(Dense(1, actvation='sigmoid', name='Dense_3'))

model.summary()

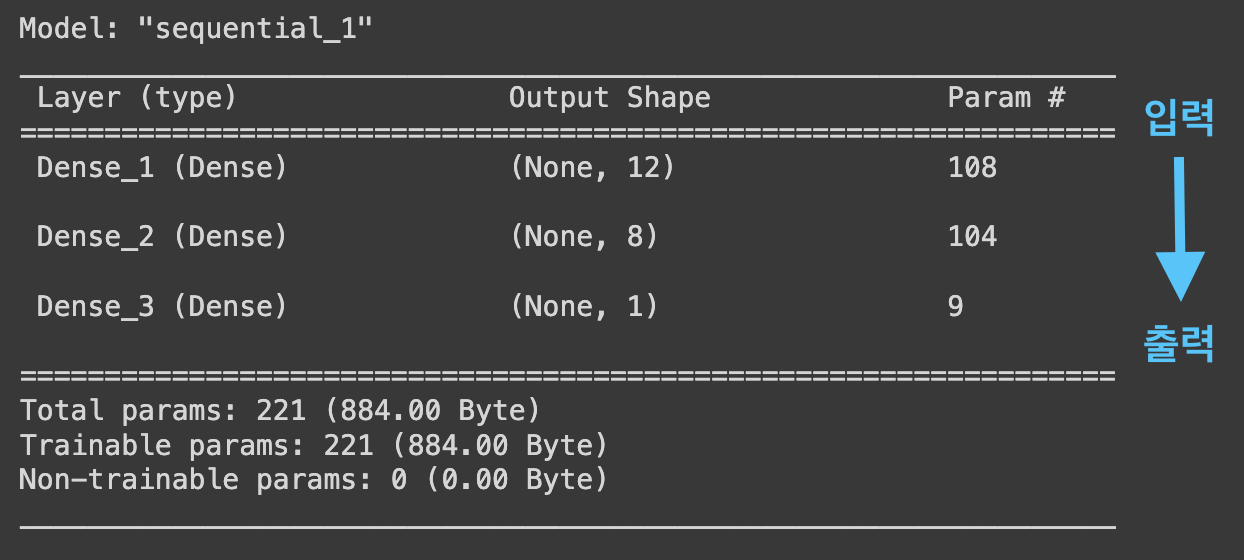

- Layer: 층의 이름, 유형

- Dense_1층: 입력층과 첫 번째 은닉층 연결

- Dense_2층: 첫 번째 은닉층과 두 번째 은닉층 연결

- Dense_3층: 두 번째 은닉층과 출력층 연결

- Output Shape: 각 층에 몇 개의 출력이 발생하는지 나타냄

(행(샘플)의 수, 열(속성)의 수)- 행의 수는 batch_size에 정한 만큼 입력되므로 모델에서 특별히 세지 않음, None으로 표시됨

- 입력값 8개 -(Dense_1)-> 12개 -(Dense_2)-> 8개 -(Dense_3)-> 1개 출력

- Params: 파라미터 수, 즉 가중치과 bias 수의 합

- 첫 번째 층: 입력 값 8개가 12개 노드로 분산

=> 가중치 = 8*12 = 96개, 각 노드에 bias 한 개씩 있음

=> 전체 파라미터 수 = 96+12 = 108개

- 첫 번째 층: 입력 값 8개가 12개 노드로 분산

- Total params: 전체 파라미터 합산한 값

- Trainable params: 학습을 진행하면서 업데이트가 된 파라미터들

- Non-trainable params: 업데이트가 되지 않은 파라미터

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

import pandas as pd

!git clone https://github.com/taehojo/data.git

df = pd.read_csv('./data/pima-indians-diabete3.csv')

X = df.iloc[:, 0:8]

y = df.iloc[:, 8]

# 모델 설정

model = Sequential()

model.add(Dense(12, input_dim=8, activation='relu', name='Dense_1'))

model.add(Dense(8, activation='relu', name='Dense_2'))

model.add(Dense(1, activation='sigmoid', name='Dense_3'))

# 모델 컴파일

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# 모델 실행

history = model.fit(X, y, epochs=100, batch_size=5)실습 - 아이리스 품종 예측

- 다항 분류 활성화 함수: softmax

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

import pandas as pd

!git clone https://github.com/taehojo/data.git

df = pd.read_csv('./data/iris3.csv')

X = df.iloc[:, 0:4]

y = df.iloc[:, ,4]

# 원-핫 인코딩

y = pd.get_dummies(y)

# 모델 설정

model = Sequential()

model.add(Dense(12, input_dim=4, activation='relu'))

model.add(Dense(8, activation='relu'))

model.add(Dense(3, actiavtion='softmax'))

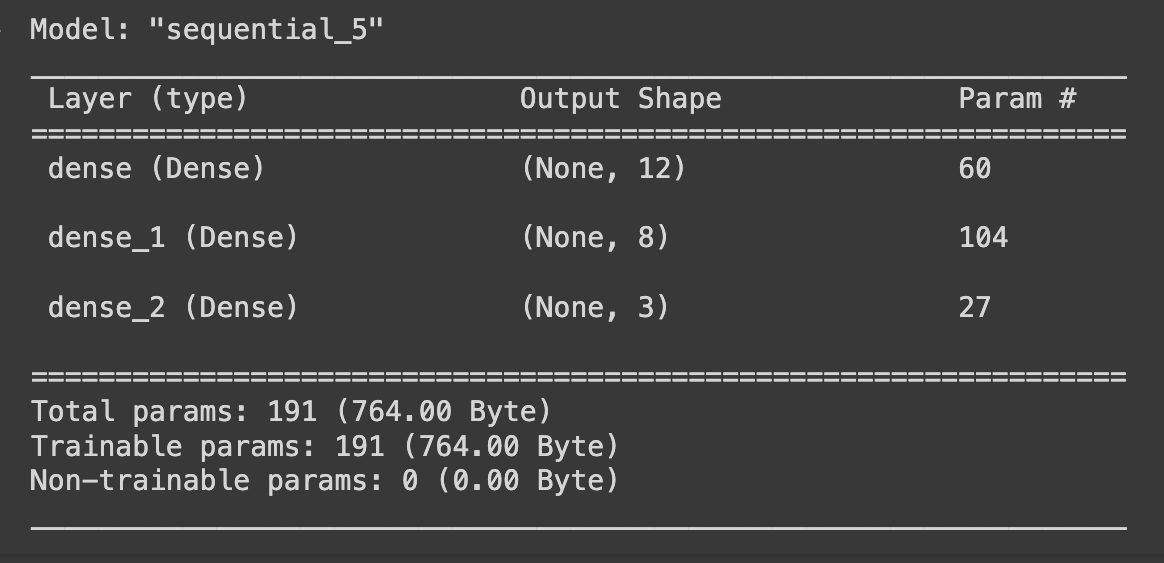

model.summary()

# 모델 컴파일

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# 모델 실행

history = model.fit(X, y, epochs=50, batch_size=5)

- 두 개의 은닉층에 각각 12개와 8개의 노드 만들어짐

- 출력은 세 개

🍦 모델 성능 검증

실습 - 초음파 광물 예측하기

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from sklearn.model_selection import train_test_split

import pandas as pd

# 데이터

!git clone https://github.com/taehojo/data.git

df = pd.read_csv('./data/sonar3.csv', header=None)

# 음파 관련 속성을 X, 광물의 종류를 y

X = df.iloc[:, 0:60]

y = df.iloc[:, 60]

# train test split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, shuffle=True)

# 모델 설정

model = Sequential()

model.add(Dense(24, input_dim=60, activation='relu'))

model.add(Dense(10, activaion='relu'))

model.add(Dense, 1, activation='sigmoid')

# 모델 컴파일

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# 모델 실행

history = model.fit(X_train, y_train, epochs=200, batch_size=10)

# 모델을 테스트셋에 적용해 정확도를 구함

score = model.evaluate(X_test, y_test)

print('Test accuracy: ', score[1])모델 저장, 재사용

# 모델 이름, 저장할 위치

model.save('./data/model/my_model.hdf5')

# 불러오기

from tensorflow.keras.models import load_model

model = load_model('./data/model/my_model.hdf5')k겹 교차 검증 (KFold)

실습 - 초음파 광물 예측하기(2)

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from sklearn.model_selection import KFold

from sklearn.metrics import accuracy_score

import pandas as pd

# 데이터

!git clone https://github.com/taehojo/data.git

df = pd.read_csv('./data/sonar3.csv', header=None)

# 음파 관련 속성을 X, 광물의 종류를 y

X = df.iloc[:, 0:60]

y = df.iloc[:, 60]

# 몇 겹으로 나눌 것인지 정함

k = 5

# KFold

kfold = KFold(n_splits=k, shuffle=True)

acc_score = []

# 모델 구조

def model_fn():

model = Sequential()

model.add(Dense(24, input_dim=60, activation='relu'))

model.add(Dense(10, activaion='relu'))

model.add(Dense, 1, activation='sigmoid')

return model

# KFold 이용하여 k번 학습 실행

# split()에 의해 k개의 학습셋, 테스트셋으로 분리

for train_index, test_index in kfold.spliit(X):

X_train, X_test = X.iloc[train_index,:], X.iloc[test_index,:]

y_train, y_test = y.iloc[train_index], y.iloc[test_index]

model = model_fn()

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(X_train, y_train, epochs=200, batch_size=10, verbose=0)

accuracy = model.evaluate(X_test, y_test)[1] # 정확도 구함

acc_score.append(accuracy)

# k번 실시된 정확도의 평균

avg_acc_score = sum(acc_score) / k

# 결과 출력

print('정확도: ', acc_score)

print('정확도 평균: ', avg_acc_score)verbose=0: 학습 과정 출력 생략

🍦 모델 성능 향상

실습 - 와인의 종류 예측하기, epoch=50

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from sklearn.model_selection import train_test_split

import pandas as pd

# 데이터

!git clone https://github.com/taehojo/data.git

df = pd.read_csv('./data/wine.csv', header=None)

# 와인의 속성을 X, 와인의 분류를 y

X = df.iloc[:, 0:12]

y = df.iloc[:, 12]

# train test split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, shuffle=True)

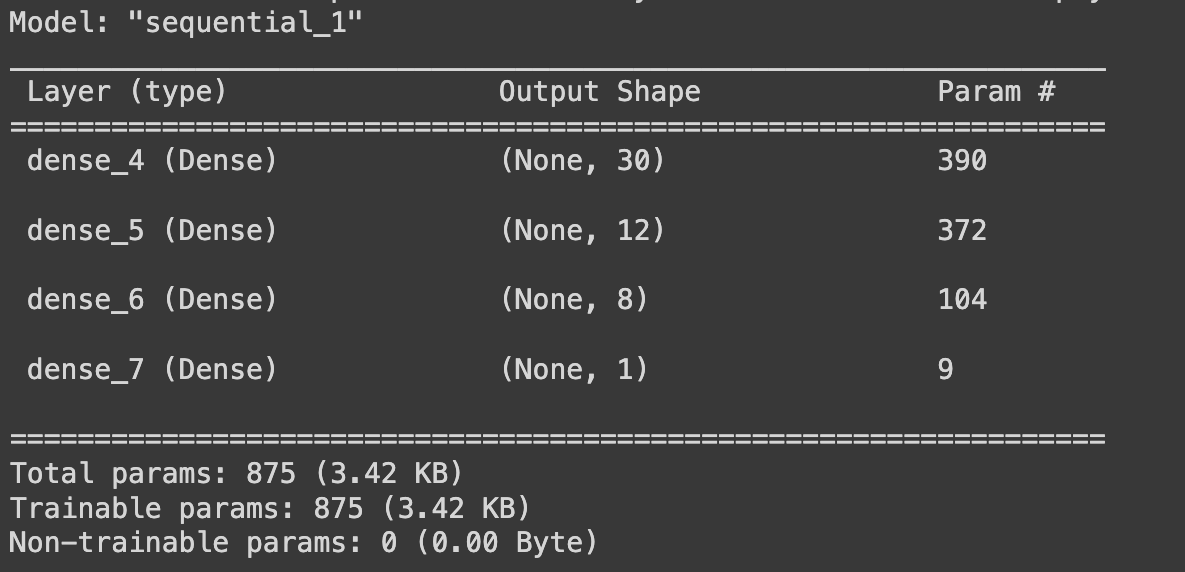

# 모델 구조

model = Sequential()

model.add(Dense(30, input_dim=12, activation='relu'))

model.add(Dense(12, activation='relu'))

model.add(Dense(8, activation='relu'))

model.add(Dense(1, activation='sigmoid'))

model.summary()

# 모델 compile

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# 모델 실행

history = model.fit(X_train, y_train, epochs=50, batch_size=500,

validation_split=0.25) # 0.8 x 0.25 = 0.2

# 테스트 결과 출력

score = model.evaluate(X_test, y_test)

print('Test accuracy: ', score[1])

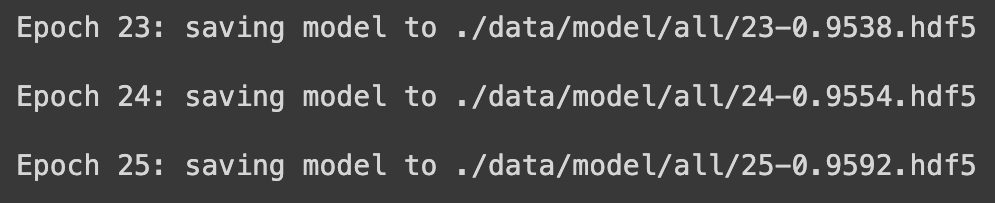

📑 학습 중인 모델 저장

실습 - 와인의 종류 예측하기

- epoch마다 모델의 정확도를 함께 기록하면서 저장

- 학습 중인 모델을 저장하는 함수: ModelCheckpoint()

from tensorflow.keras.callbacks import ModelCheckpoint

checkpointer = ModelCheckpoint(filepath=modelpath, verbose=1)

# 모델이 저장되는 조건 설정

modelpath = './data/model/all/{epoch:02d}-{val_accuracy:.4f}.hdf5'

checkpointer = ModelCheckpoint(filepath=modelpath, verbose=1)

# 모델 실행

history = model.fit(X_train, y_train, epochs=50, batch_size=500,

validation_split=0.25, verbose=0, callbacks=[checkpointer])

# 테스트 결과 출력

score = model.evaluate(X_test, y_test)

print('Test accuracy: ', score[1])

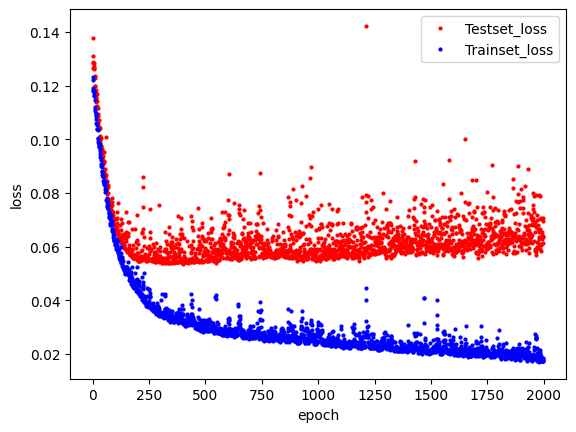

📑 history 활용

- epoch=2000

- history 활용

history: model.fit()의 결과를 가진 파이썬 객체history.params: model.fit()의 설정값history.epoch: epoch 정보history.history: loss, accuracy, val_loss, val_accuracy

# epoch = 2000으로 학습

history = model.fit(X_train, y_train, epochs=2000, batch_size=500, validation_split=0.25)

# history에 저장된 학습 결과 확인

hist_df = pd.DataFrame(history.history)

hist_df

# y_vloss에 검증셋의 오타 저장

y_vloss = hist_df['val_loss']

# y_loss에 학습셋의 오차 저장

y_loss = hist_df['loss']

# x 값을 지정하고 검증셋의 오차를 빨간색으로, 학습셋의 오차를 파란색으로 표시

x_len = np.arange(len(y_loss))

plt.plot(x_len, y_vloss, 'o', c='red', markersize=2, label='Testset_loss')

plt.plot(x_len, y_loss, 'o', c='blue', markersize=2, label='Trainset_loss')

plt.legend(loc='upper right')

plt.xlabel('epoch')

plt.ylabel('loss')

plt.show()

- 학습잉 오래 진행될수록 train loss 줄어들지만 test loss 커짐.

- 과적합

📑 자동 중단

- EarlyStopping(): 학습이 진행되어도 테스트셋 오차가 줄어들지 앟으면 학습을 자동으로 멈춤

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

# 학습이 언제 자동 중단될지 설정

early_stopping_callback = EarlyStopping(monitor='val_loss', patience=20)

# 최적화 모델이 저장될 폴더와 모델 이름

modelpath = './data/model/bestmodel.hdf5'

# 최적화 모델을 업데이트하고 저장

checkpointer = ModelCheckpoint(filepath=modelpath, monitor='val_loss', verbose=0, save_best_only=True)

# 모델 실행

history = model.fit(X_train, y_train, epochs=2000, batch_size=500,

validation_split=0.25, verbose=1, callbacks=[early_stopping_callback, checkpointer])