IrisFlower 학습

목표

-

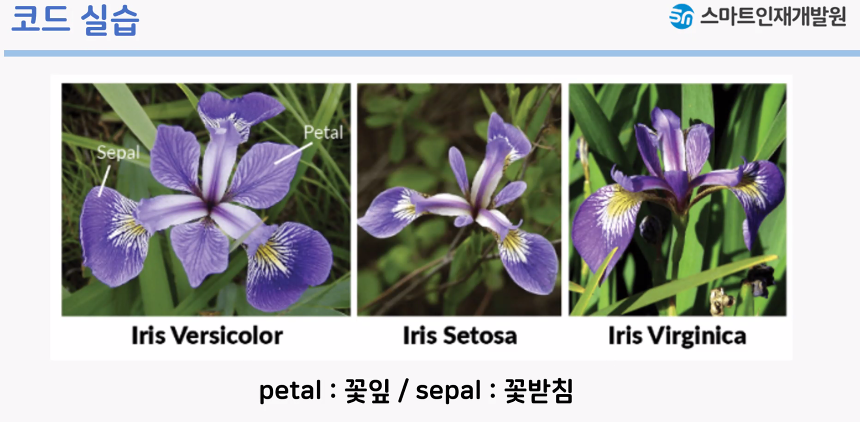

붓꽃의 꽃잎 갈이, 꽃잎 너비, 꽃받침 길이, 꽃받침 머비 특징을 활용해 3가지 품종을 분류해보자

-

KNN모델의 이웃의 숫자를 조절해보자

-

petal: 꽃잎/sepal: 꽃받침 🌸🌺

#사이킷런에서 제공하는 데이터 세트 생성하는 모듈

from sklearn.datasets import load_iris

iris_data = load_iris()

iris_data #Bunch 클래스 객체(dictionary와 유사함)# iris_dataset의 키 값

iris_data.keys()# DESCR키에는 데이타셋에 대한 설명

print(iris_data['DESCR'])# target_names: 정답/예측한고자 하는 붓꽃 품종의 이름을 문자열 배열로 가자고데이터

print(iris_data['target_names'])#feature_names: 각 특성을 설명하는 문자열

iris_data['feature_names']# 꽃잎의 길이, 푹, 꽃받침의 길이, 묵->2차원numpy배열의 형태

iris_data['data']# target값 1차원 numpy배열

#0은 setoda 1은 versocolor , 2는 virginica

iris_data['target']datasets 구성하기

- 문제와 답 데이터 분리

- 훈련세트와 평가 세트로 분리

- 훈련세트/데이터: 머신러닝 모델을 학습할 때 사용

- 평가세트/데이터: 모델이 얼마나 잘 작동하는지 특정하는데 사용

# library import

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split #데이터mixed up

# 사이킥런 library 가져오기

from sklearn.neighbors import KNeighborsClassifier

from sklearn import metrics

plt.rcParams['font.family']='Malgun Gothic'#문자데이터 2차원 데이터프레임 생성

#columns name 설장

iris_df = pd.DataFrame(iris_data['data'],columns = ['sepal length (cm)','sepal width (cm)',

'petal length (cm)',

'petal width (cm)'])

iris_df#문제와 답데이터 분리

x = iris_df.values

y = iris_data['target']#훈련세트와 평가세트로 분리

#train_test_split:데이터를 나누기 전에 유사 난수 생성기를 사용해

#데이터셋을 무작위로 섞어준다 train: 70% test 30%

x_train,x_test,y_train,y_test=train_test_split(x,y,

test_size = 0.3,random_state = 65)

# random_state 메개변수: 함수를 실행해도 결과가 똑같이 나오게 된다

#훈련용 세트 크기 확인

print(x_train.shape)

print(y_train.shape)

print(x_test.shape)

print(y_test.shape)#model생성

knn = KNeighborsClassifier(n_neighbors = 3)#훈련/학습

# 모델명.fit(훈련용 문제,훈련용 답)

knn.fit(x_train,y_train)#예측

#모델명.predict(테스트용 문제)

pre =knn.predict(x_test)

pre#평가하기

metrics.accuracy_score(pre,y_test)