What I learned

Metric

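- precision

Use when misclassifying a negative as positive (a false positive) is costly, e.g. a spam filter that sends a legitimate email to the spam folder.

-> use a high threshold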

- recall

Use when misclassifying a positive as negative (a false negative) is costly, e.g. missing a cancer diagnosis.

-> use a low threshold

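- F-beta score

$$F_\beta = (1 + \beta^2) \cdot \frac{\text{precision} \cdot \text{recall}}{\beta^2 \cdot \text{precision} + \text{recall}}$$

β > 1 weights recall more heavily, β < 1 weights precision more heavily, and β = 1 gives the F1 score.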
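To make the three metrics concrete, here is a minimal sketch that computes them from raw counts, using the standard definitions precision = TP / (TP + FP), recall = TP / (TP + FN), and F_beta = (1 + beta^2) * precision * recall / (beta^2 * precision + recall). The function name `precision_recall_fbeta` and the counts are hypothetical, chosen only for illustration.

```python
def precision_recall_fbeta(tp, fp, fn, beta=1.0):
    """Compute precision, recall and F-beta from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    b2 = beta ** 2
    denom = b2 * precision + recall
    fbeta = (1 + b2) * precision * recall / denom if denom else 0.0
    return precision, recall, fbeta


# Hypothetical counts (not from the notes): precision is higher than recall here
tp, fp, fn = 80, 20, 40

p, r, f1 = precision_recall_fbeta(tp, fp, fn)             # beta = 1
_, _, f2 = precision_recall_fbeta(tp, fp, fn, beta=2.0)   # favors recall
_, _, f05 = precision_recall_fbeta(tp, fp, fn, beta=0.5)  # favors precision

print(f"precision={p:.3f} recall={r:.3f}")
print(f"F0.5={f05:.3f} F1={f1:.3f} F2={f2:.3f}")
```

Because recall is the weaker of the two in this example, the recall-weighted F2 comes out lowest and the precision-weighted F0.5 highest.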

ROC curve

A curve that plots the TPR (True Positive Rate) against the FPR (False Positive Rate) as the decision threshold varies, showing how TPR changes as FPR changes.

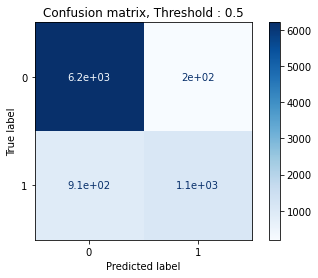
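As a rough sketch of how the points on such a curve arise, the snippet below computes (FPR, TPR) at a few thresholds in plain Python. The helper `roc_points`, the labels, and the scores are hypothetical; in practice `sklearn.metrics.roc_curve` would normally be used instead.

```python
def roc_points(y_true, y_score, thresholds):
    """Return (threshold, FPR, TPR) tuples, predicting positive when score > threshold."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = []
    for thr in thresholds:
        tp = sum(1 for t, s in zip(y_true, y_score) if t == 1 and s > thr)
        fp = sum(1 for t, s in zip(y_true, y_score) if t == 0 and s > thr)
        points.append((thr, fp / neg, tp / pos))
    return points


# Hypothetical labels and predicted probabilities, for illustration only
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_score = [0.1, 0.3, 0.45, 0.7, 0.4, 0.6, 0.8, 0.9]

points = roc_points(y_true, y_score, [0.0, 0.25, 0.5, 0.75, 1.0])
for thr, fpr, tpr in points:
    print(f"thr={thr:.2f}  FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Lowering the threshold moves the point toward (1, 1), raising it moves the point toward (0, 0); the ROC curve connects these points.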

Confusion matrix

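    import matplotlib.pyplot as plt
    from sklearn.metrics import ConfusionMatrixDisplay, classification_report, confusion_matrix

    # plot_confusion_matrix was removed in scikit-learn 1.2;
    # ConfusionMatrixDisplay.from_estimator (scikit-learn >= 1.0) replaces it.
    # pipe is the fitted pipeline; X_val / y_val are the validation set.
    pcm = ConfusionMatrixDisplay.from_estimator(
        pipe,
        X_val,
        y_val,
        cmap='Blues'
    )
    plt.title('Confusion matrix, Threshold : 0.5')
    plt.show()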
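For reading these matrices: scikit-learn puts the true class on the rows and the predicted class on the columns, so a binary matrix is laid out as [[TN, FP], [FN, TP]]. A minimal plain-Python sketch of that layout follows; the helper names and the data are hypothetical.

```python
def apply_threshold(scores, thr):
    """Predict 1 when the score exceeds the threshold, else 0."""
    return [int(s > thr) for s in scores]


def confusion_counts(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]] for binary 0/1 labels (rows: true, columns: predicted)."""
    tn = fp = fn = tp = 0
    for t, p in zip(y_true, y_pred):
        if t == 0 and p == 0:
            tn += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 1 and p == 0:
            fn += 1
        else:
            tp += 1
    return [[tn, fp], [fn, tp]]


# Hypothetical labels and predicted probabilities, for illustration only
y_true = [0, 0, 0, 1, 1, 1]
scores = [0.2, 0.4, 0.6, 0.3, 0.7, 0.9]

cm_default = confusion_counts(y_true, apply_threshold(scores, 0.5))
cm_low = confusion_counts(y_true, apply_threshold(scores, 0.25))
print(cm_default)
print(cm_low)
```

With the lower threshold the false negatives disappear but the false positives grow, which is the trade-off explored next.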

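    # Confusion matrix and model evaluation at each threshold
    threshold = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]

    # Predicted probability of the positive class (computed once; it does not depend on thr)
    y_pred_proba = pipe.predict_proba(X_val)[:, 1]

    for thr in threshold:
        print('threshold : ', thr)
        # Predict positive when the probability exceeds the threshold
        y_pred = (y_pred_proba > thr).astype(int)
        con = confusion_matrix(y_val, y_pred)
        print('confusion matrix : \n', con)
        print()
        print(classification_report(y_val, y_pred))
        print()

Output:

    threshold :  0.1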

    confusion matrix :
     [[3225 3182]
     [ 137 1873]]

                  precision    recall  f1-score   support

               0       0.96      0.50      0.66      6407
               1       0.37      0.93      0.53      2010

        accuracy                           0.61      8417
       macro avg       0.66      0.72      0.60      8417
    weighted avg       0.82      0.61      0.63      8417

    threshold :  0.2
    confusion matrix :
     [[4880 1527]
     [ 411 1599]]

                  precision    recall  f1-score   support

               0       0.92      0.76      0.83      6407
               1       0.51      0.80      0.62      2010

        accuracy                           0.77      8417
       macro avg       0.72      0.78      0.73      8417
    weighted avg       0.82      0.77      0.78      8417

    threshold :  0.3
    confusion matrix :
     [[5606  801]
     [ 604 1406]]

                  precision    recall  f1-score   support

               0       0.90      0.87      0.89      6407
               1       0.64      0.70      0.67      2010

        accuracy                           0.83      8417
       macro avg       0.77      0.79      0.78      8417
    weighted avg       0.84      0.83      0.84      8417

    threshold :  0.4
    confusion matrix :
     [[5955  452]
     [ 725 1285]]

                  precision    recall  f1-score   support

               0       0.89      0.93      0.91      6407
               1       0.74      0.64      0.69      2010

        accuracy                           0.86      8417
       macro avg       0.82      0.78      0.80      8417
    weighted avg       0.86      0.86      0.86      8417

    threshold :  0.5
    confusion matrix :
     [[6207  200]
     [ 913 1097]]

                  precision    recall  f1-score   support

               0       0.87      0.97      0.92      6407
               1       0.85      0.55      0.66      2010

        accuracy                           0.87      8417
       macro avg       0.86      0.76      0.79      8417
    weighted avg       0.87      0.87      0.86      8417

    threshold :  0.6
    confusion matrix :
     [[6312   95]
     [1128  882]]

                  precision    recall  f1-score   support

               0       0.85      0.99      0.91      6407
               1       0.90      0.44      0.59      2010

        accuracy                           0.85      8417
       macro avg       0.88      0.71      0.75      8417
    weighted avg       0.86      0.85      0.83      8417

    threshold :  0.7
    confusion matrix :
     [[6363   44]
     [1309  701]]

                  precision    recall  f1-score   support

               0       0.83      0.99      0.90      6407
               1       0.94      0.35      0.51      2010

        accuracy                           0.84      8417
       macro avg       0.89      0.67      0.71      8417
    weighted avg       0.86      0.84      0.81      8417

    threshold :  0.8
    confusion matrix :
     [[6399    8]
     [1556  454]]

                  precision    recall  f1-score   support

               0       0.80      1.00      0.89      6407
               1       0.98      0.23      0.37      2010

        accuracy                           0.81      8417
       macro avg       0.89      0.61      0.63      8417
    weighted avg       0.85      0.81      0.77      8417

    threshold :  0.9
    confusion matrix :
     [[6407    0]
     [1877  133]]

                  precision    recall  f1-score   support

               0       0.77      1.00      0.87      6407
               1       1.00      0.07      0.12      2010

        accuracy                           0.78      8417
       macro avg       0.89      0.53      0.50      8417
    weighted avg       0.83      0.78      0.69      8417
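Across these runs, class-1 precision rises from 0.37 to 1.00 as the threshold goes from 0.1 to 0.9, while class-1 recall falls from 0.93 to 0.07. As a quick check, the sketch below recomputes the class-1 scores from the confusion matrices printed above and looks for the threshold with the highest F1. The counts are copied from that output; the variable and function names are my own, and F1 is only one possible selection criterion.

```python
# (TN, FP, FN, TP) copied from the confusion matrices printed above
reported = {
    0.1: (3225, 3182, 137, 1873),
    0.2: (4880, 1527, 411, 1599),
    0.3: (5606, 801, 604, 1406),
    0.4: (5955, 452, 725, 1285),
    0.5: (6207, 200, 913, 1097),
    0.6: (6312, 95, 1128, 882),
    0.7: (6363, 44, 1309, 701),
    0.8: (6399, 8, 1556, 454),
    0.9: (6407, 0, 1877, 133),
}


def class1_scores(tn, fp, fn, tp):
    """Precision, recall and F1 for the positive class (label 1)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


scores = {thr: class1_scores(*counts) for thr, counts in reported.items()}
for thr, (p, r, f1) in scores.items():
    print(f"thr={thr:.1f}  precision={p:.2f}  recall={r:.2f}  f1={f1:.2f}")

# Threshold whose class-1 F1 is highest among the nine tried
best_thr = max(scores, key=lambda thr: scores[thr][2])
print("highest class-1 F1 at threshold", best_thr)
```

If false negatives are the costlier error (the recall case), a lower threshold than the F1-optimal one may still be preferable, and the reverse holds for the precision case.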