encoder and scaler

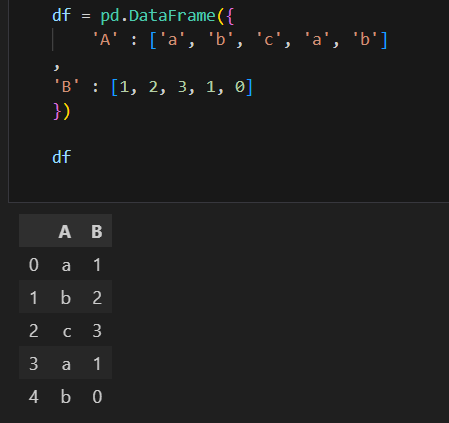

- DataFrame

1. LabelEncoder

LabelEncoder fit

- Converts string labels to integers

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

le.fit(df['A'])

le.classes_

array(['a', 'b', 'c'], dtype=object)

le.transform(df['A'])

array([0, 1, 2, 0, 1])

fit_transform

- Performs fit and transform in a single step

le.fit_transform(df['A'])

array([0, 1, 2, 0, 1])

inverse_transform

- Inverse transform (integer codes back to the original labels)

df['le_A'] = le.transform(df['A'])  # store the encoded column

le.inverse_transform(df['le_A'])

array(['a', 'b', 'c', 'a', 'b'], dtype=object)
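LabelEncoder assigns codes in the sorted order of the distinct labels, so `classes_` is `['a', 'b', 'c']` and 'a' becomes 0. The minimal sketch below reproduces that encoding with `np.unique` and compares it with LabelEncoder. It reuses the values of `df['A']` inferred from the outputs above. The manual version is only an illustration, not the author's code.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

# Same values as df['A'] (inferred from the transform/inverse_transform outputs)
values = np.array(['a', 'b', 'c', 'a', 'b'], dtype=object)

# np.unique returns the sorted distinct labels and, with return_inverse=True,
# the index of each value in that sorted array, i.e. its integer code
classes, codes = np.unique(values, return_inverse=True)

le = LabelEncoder()
le_codes = le.fit_transform(values)

print(classes)                      # sorted distinct labels, like le.classes_
print(codes)                        # integer code of each value
print(le.inverse_transform(codes))  # back to the original strings
```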

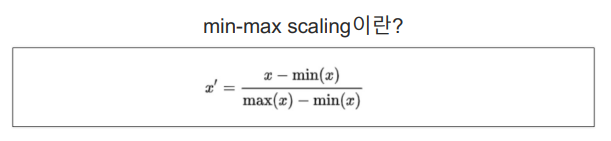

min-max scaling

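- Rescales each column so that its minimum becomes 0 and its maximum becomes 1: x' = (x - min) / (max - min). The numerator is the distance from the column minimum, and the denominator is the column's full range.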

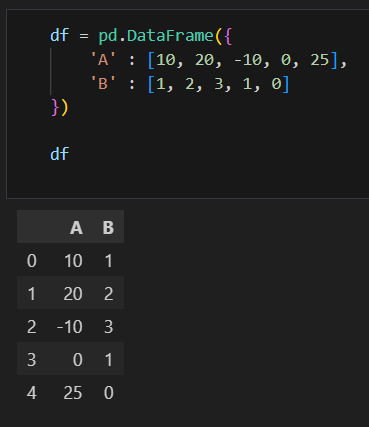

- DataFrame

MinMaxScaler fit

from sklearn.preprocessing import MinMaxScaler

mms = MinMaxScaler()

mms.fit(df)

# data_range_ = data_max_ - data_min_ : acts as the denominator (the full range of each column)

mms.data_max_, mms.data_min_, mms.data_range_

(array([25., 3.]), array([-10., 0.]), array([35., 3.]))
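The same result can be computed by hand from `data_min_` and `data_range_`. This minimal sketch uses the values of `df` taken from the `inverse_transform` output later in this section. It checks that (x - min) / (max - min), applied column by column, matches MinMaxScaler.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Values of df, taken from the inverse_transform output (two columns)
X = np.array([[10., 1.], [20., 2.], [-10., 3.], [0., 1.], [25., 0.]])

data_min = X.min(axis=0)             # per-column minimum
data_max = X.max(axis=0)             # per-column maximum
data_range = data_max - data_min     # denominator: full range of each column

# x' = (x - min) / (max - min), broadcast over the rows
X_manual = (X - data_min) / data_range

X_sklearn = MinMaxScaler().fit_transform(X)
print(np.allclose(X_manual, X_sklearn))  # True
```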

transform

df_mms = mms.transform(df)

df_mms

array([[0.57142857, 0.33333333],
       [0.85714286, 0.66666667],
       [0.        , 1.        ],
       [0.28571429, 0.33333333],
       [1.        , 0.        ]])

Inverse transform

mms.inverse_transform(df_mms)

array([[ 10.,   1.],
       [ 20.,   2.],
       [-10.,   3.],
       [  0.,   1.],
       [ 25.,   0.]])

fit_transform in one step

mms.fit_transform(df)

array([[0.57142857, 0.33333333],
       [0.85714286, 0.66666667],
       [0.        , 1.        ],
       [0.28571429, 0.33333333],
       [1.        , 0.        ]])

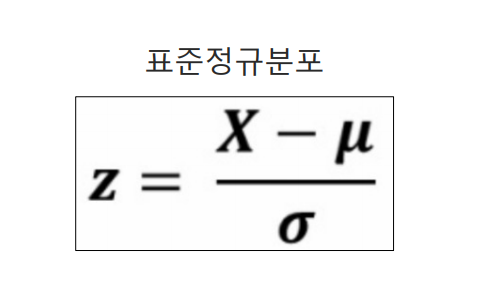

StandardScaler

- Standardization: rescales each column to mean 0 and standard deviation 1, x' = (x - mean) / std

StandardScaler fit

from sklearn.preprocessing import StandardScaler

ss = StandardScaler()

ss.fit(df)

Mean and standard deviation

# mean_ : per-column mean, scale_ : per-column standard deviation (population std, ddof=0)

ss.mean_, ss.scale_

(array([9. , 1.4]), array([12.80624847, 1.0198039 ]))
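`scale_` is the population standard deviation (dividing by n, not n - 1). The minimal sketch below checks this by standardizing the same `df` values by hand, assuming those are the values recovered by `inverse_transform` in this section.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Values of df, taken from the inverse_transform output (two columns)
X = np.array([[10., 1.], [20., 2.], [-10., 3.], [0., 1.], [25., 0.]])

mean = X.mean(axis=0)   # per-column mean
std = X.std(axis=0)     # numpy's default ddof=0, i.e. population std
X_manual = (X - mean) / std

ss = StandardScaler()
X_sklearn = ss.fit_transform(X)
print(np.allclose(X_manual, X_sklearn))  # True
print(np.allclose(std, ss.scale_))       # True

# The sample std (ddof=1) differs, so it would not match scale_
print(X.std(axis=0, ddof=1))
```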

transform

df_ss = ss.transform(df)

df_ss

array([[ 0.07808688, -0.39223227],
       [ 0.85895569,  0.58834841],
       [-1.48365074,  1.56892908],
       [-0.70278193, -0.39223227],
       [ 1.2493901 , -1.37281295]])

fit_transform

ss.fit_transform(df)

array([[ 0.07808688, -0.39223227],
       [ 0.85895569,  0.58834841],
       [-1.48365074,  1.56892908],
       [-0.70278193, -0.39223227],
       [ 1.2493901 , -1.37281295]])

Inverse transform

ss.inverse_transform(df_ss)

array([[ 10.,   1.],
       [ 20.,   2.],
       [-10.,   3.],
       [  0.,   1.],
       [ 25.,   0.]])

RobustScaler

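- Treats the median (Q2) as 0 and the interquartile range Q3 - Q1 (the span of the middle 50% of the data) as 1: x' = (x - Q2) / (Q3 - Q1). Because the median and quartiles are barely affected by extreme values, this scaler is less sensitive to outliers than min-max or standard scaling.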

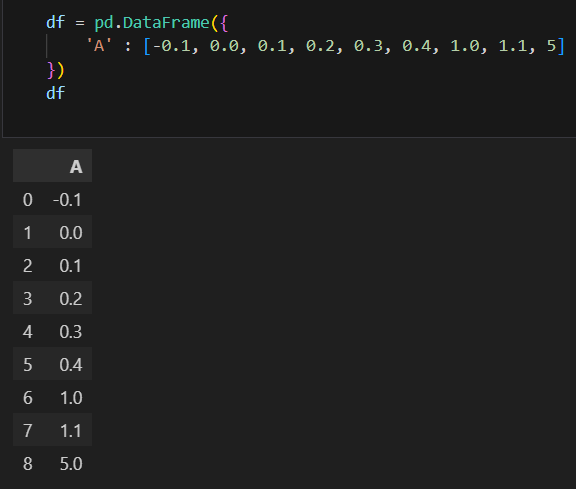
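The RobustScaler formula can be checked by hand with `np.percentile`. This is a minimal sketch on a hypothetical single column with one outlier (100). It is not the data used in this post, which appears only as an image. RobustScaler's defaults center on the median and scale by the 25th to 75th percentile range.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler

# Hypothetical single-column data with one outlier (100)
X = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 100], dtype=float).reshape(-1, 1)

# Q1, median (Q2) and Q3 per column
q1, q2, q3 = np.percentile(X, [25, 50, 75], axis=0)
X_manual = (X - q2) / (q3 - q1)

rs = RobustScaler()  # defaults: with_centering=True, with_scaling=True, quantile_range=(25.0, 75.0)
X_sklearn = rs.fit_transform(X)
print(np.allclose(X_manual, X_sklearn))  # True
print(rs.center_, rs.scale_)             # [5.5] [4.5]
```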

- Creating the data

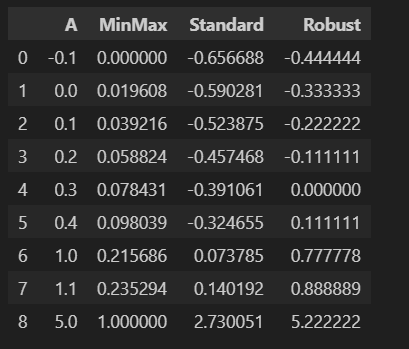

Running MinMaxScaler, StandardScaler, and RobustScaler

from sklearn.preprocessing import MinMaxScaler, StandardScaler, RobustScaler

mm = MinMaxScaler()

ss = StandardScaler()

rs = RobustScaler()

df_scaler = df.copy()

df_scaler['MinMax'] = mm.fit_transform(df)

df_scaler['Standard'] = ss.fit_transform(df)

df_scaler['Robust'] = rs.fit_transform(df)

df_scaler

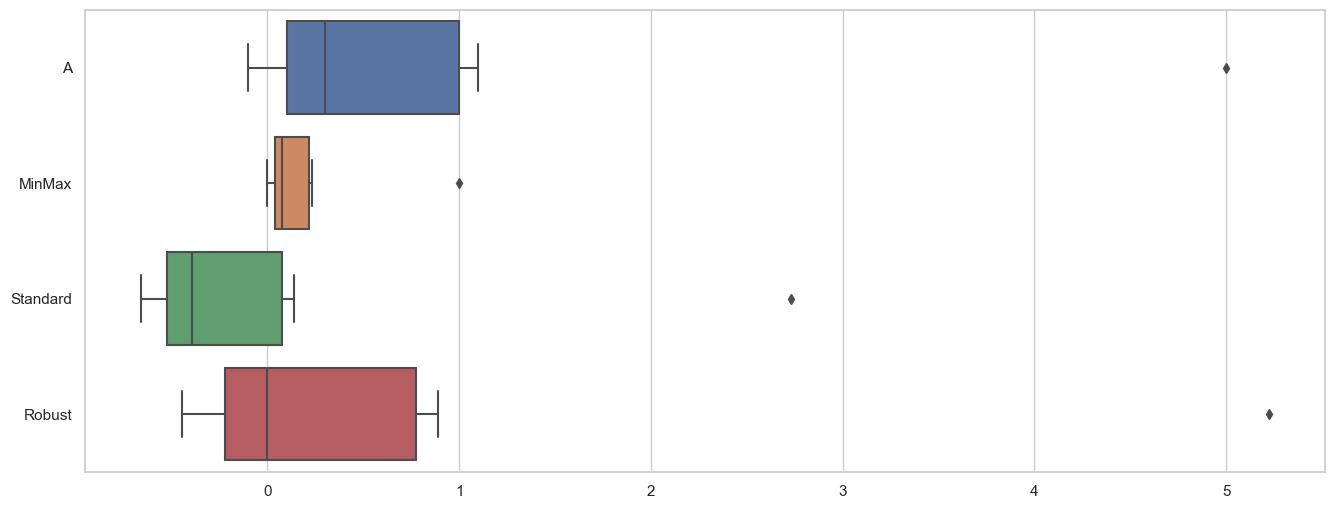

Drawing box plots

import seaborn as sns

import matplotlib.pyplot as plt

sns.set_theme(style='whitegrid')

plt.figure(figsize=(16,6))

sns.boxplot(data=df_scaler, orient='h');

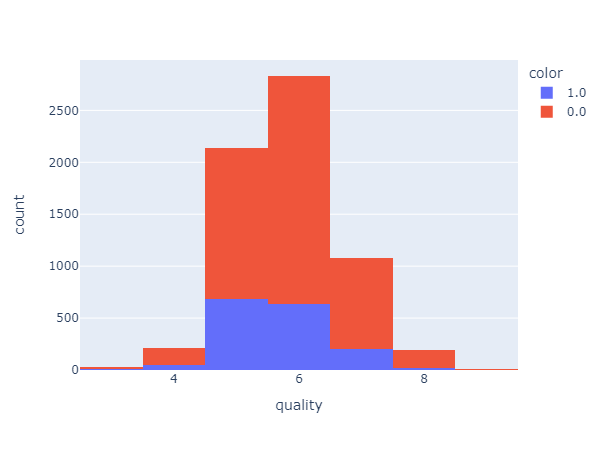
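The box plot comparison can also be checked numerically. This minimal sketch uses a hypothetical column of values 1 to 9 plus an outlier of 100 (not the post's data). It measures how widely each scaler spreads the non-outlier values. One extreme value squeezes the inliers under min-max scaling, while RobustScaler keeps them spread out.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler, RobustScaler

# Hypothetical data: inliers 1..9 and one outlier (100) in the last row
X = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 100], dtype=float).reshape(-1, 1)

spreads = {}
for scaler in (MinMaxScaler(), StandardScaler(), RobustScaler()):
    scaled = scaler.fit_transform(X).ravel()
    inliers = scaled[:-1]  # everything except the outlier
    name = type(scaler).__name__
    spreads[name] = inliers.max() - inliers.min()
    print(f"{name:15s} inlier spread = {spreads[name]:.3f}, outlier -> {scaled[-1]:.3f}")
```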

wine using Decision Tree

Checking the data

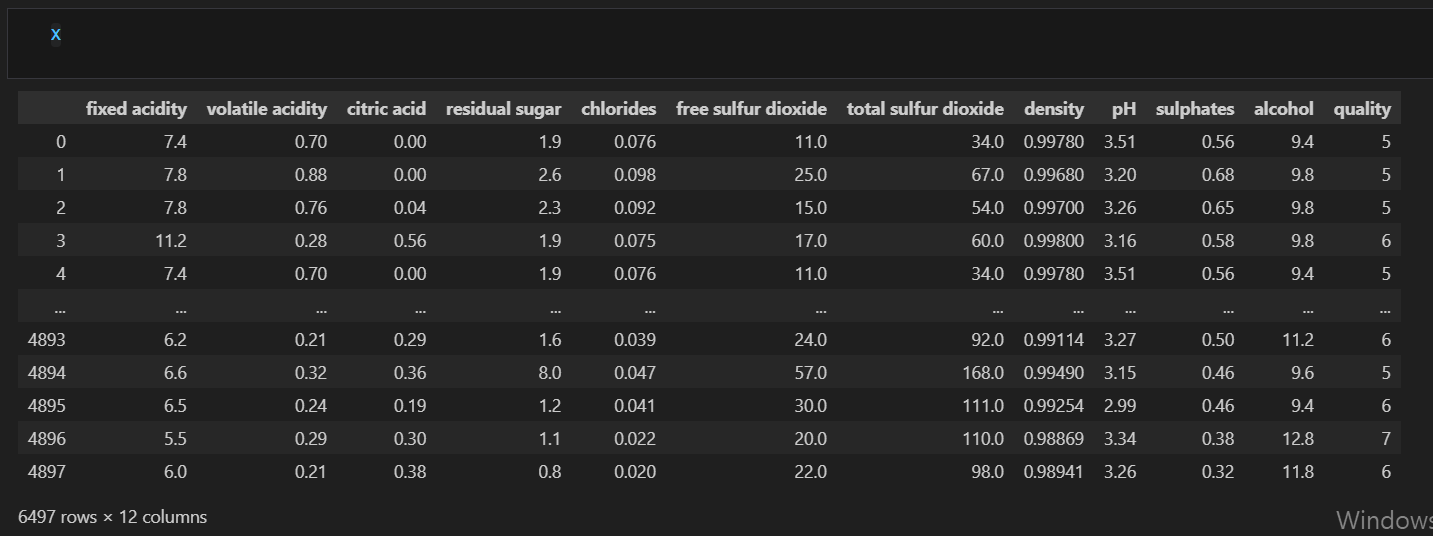

Reading the data

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url ='https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')

red_wine and white_wine have the same structure and the same columns.

Combining the two datasets

# Add a color column so the red and white rows can be told apart

red_wine['color'] = 1.

white_wine['color'] = 0.

wine = pd.concat([red_wine, white_wine])
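By default `pd.concat` keeps each frame's original row labels, so `wine` ends up with duplicate index labels (both frames start at 0). The positional code in this post is unaffected. Label-based lookups such as `wine.loc[0]`, however, return one row from each frame. This minimal sketch uses two hypothetical tiny frames standing in for `red_wine` and `white_wine`. It shows the effect and the `ignore_index=True` option that renumbers the rows.

```python
import pandas as pd

# Hypothetical stand-ins for red_wine and white_wine
red = pd.DataFrame({'quality': [5, 6], 'color': [1., 1.]})
white = pd.DataFrame({'quality': [6, 7, 5], 'color': [0., 0., 0.]})

wine_dup = pd.concat([red, white])                     # index: 0, 1, 0, 1, 2
wine_new = pd.concat([red, white], ignore_index=True)  # index: 0, 1, 2, 3, 4

print(wine_dup.index.is_unique)  # False
print(wine_new.index.is_unique)  # True
print(len(wine_dup.loc[0]))      # 2 rows share the label 0
```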

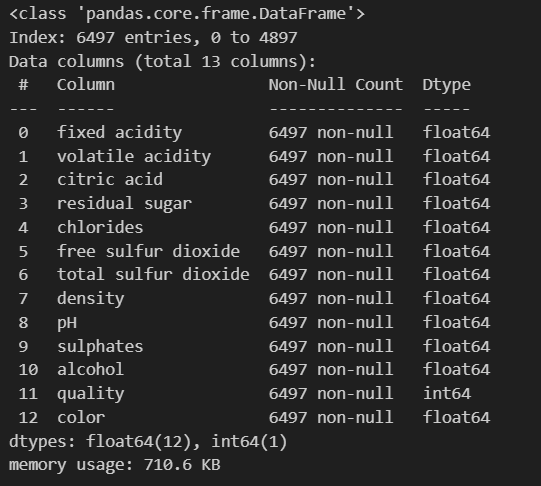

wine.info()

wine.head()

Checking the quality grades

wine['quality'].unique()

array([5, 6, 7, 4, 8, 3, 9], dtype=int64)

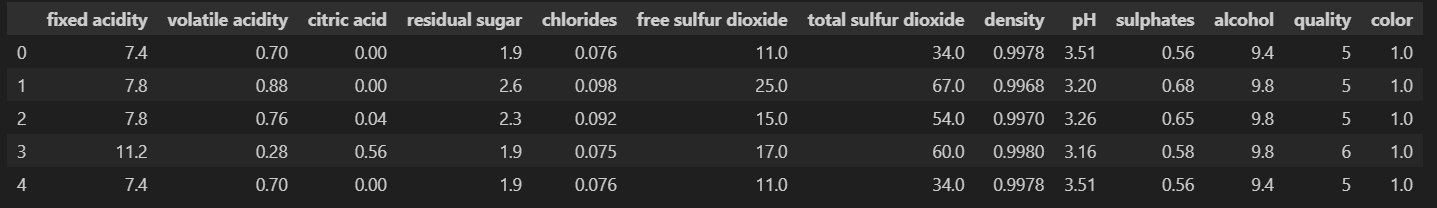

Histogram of quality

import plotly.express as px

fig = px.histogram(wine, x='quality')

fig.show()

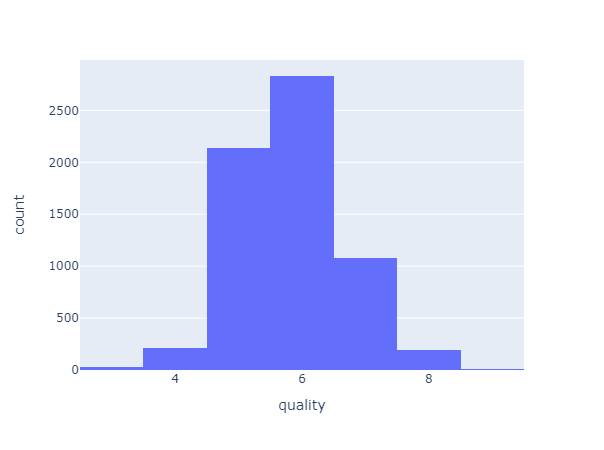

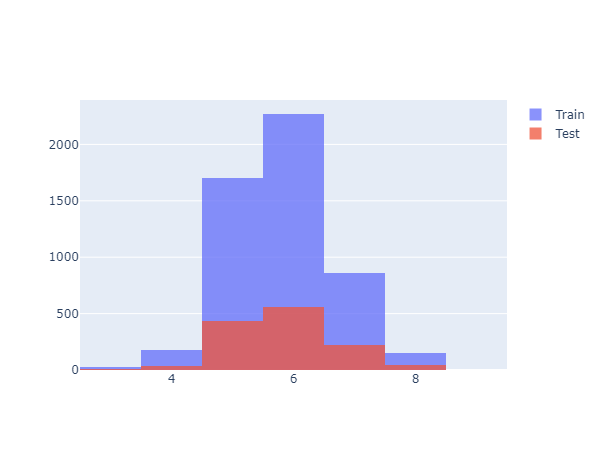

Quality histogram by wine color (red vs. white)

# 1 : red / 0 : white

fig = px.histogram(wine, x='quality', color='color')

fig.show()

Red vs. white wine classifier

Separating the features and the label

X = wine.drop(['color'], axis=1)

y = wine['color']

Splitting the data into training and test sets

from sklearn.model_selection import train_test_split

import numpy as np

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

# Check the class counts in y_train

np.unique(y_train, return_counts=True)

(array([0., 1.]), array([3913, 1284], dtype=int64))
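The classes are imbalanced (roughly three white wines for every red). `train_test_split` accepts a `stratify` argument that keeps the class proportions identical in both splits. This is a minimal sketch with hypothetical 75:25 labels and a dummy feature, not the wine data. The post itself splits without stratification.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical labels with a 75:25 imbalance and a dummy feature
y = np.array([0] * 75 + [1] * 25)
X = np.arange(len(y)).reshape(-1, 1)

X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, test_size=0.2, random_state=13, stratify=y)

print(np.bincount(y_tr))  # 60 zeros, 20 ones
print(np.bincount(y_te))  # 15 zeros, 5 ones
```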

Check whether the training and test sets ended up with similar distributions (compared here on the quality column)

import plotly.graph_objects as go

fig = go.Figure()

fig.add_trace(go.Histogram(x=X_train['quality'], name='Train'))

fig.add_trace(go.Histogram(x=X_test['quality'], name='Test'))

fig.update_layout(barmode='overlay')

fig.update_traces(opacity=0.75)

fig.show()

Training the decision tree

fit

from sklearn.tree import DecisionTreeClassifier

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)

Checking performance

from sklearn.metrics import accuracy_score

y_pred_tr = wine_tree.predict(X_train) # train accuracy

y_pred_test = wine_tree.predict(X_test)

accuracy_score(y_train, y_pred_tr), accuracy_score(y_test, y_pred_test)

(0.9553588608812776, 0.9569230769230769)

print('Train ACC : ', accuracy_score(y_train, y_pred_tr))

print('Test ACC : ', accuracy_score(y_test, y_pred_test))

Train ACC : 0.9553588608812776

Test ACC : 0.9569230769230769

Data preprocessing

- MinMaxScaler와 StandardScaler

X.columns

Index(['fixed acidity', 'volatile acidity', 'citric acid', 'residual sugar',
       'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density',
       'pH', 'sulphates', 'alcohol', 'quality'],
      dtype='object')

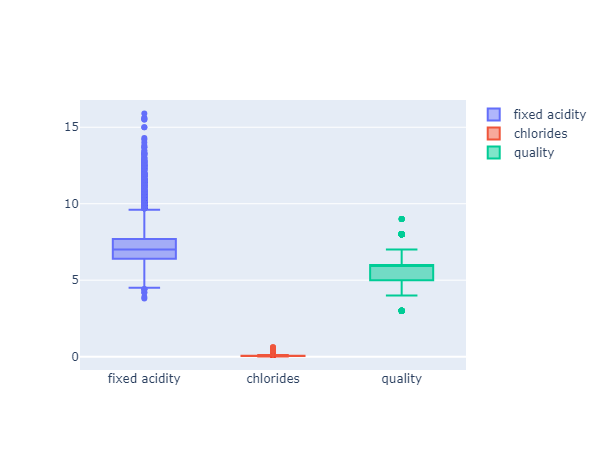

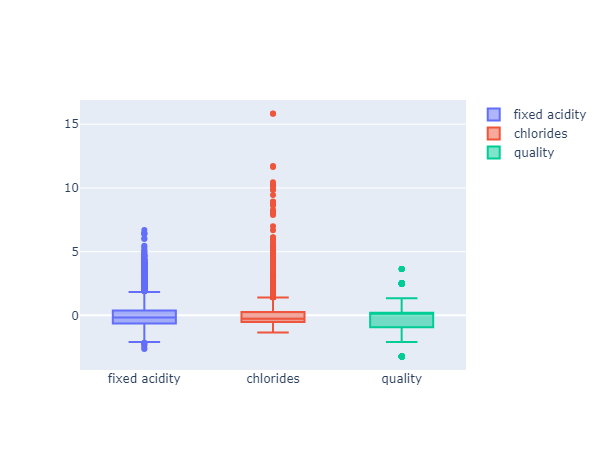

Box plots of a few wine features

fig = go.Figure()

fig.add_traces(go.Box(y=X['fixed acidity'], name='fixed acidity'))

fig.add_traces(go.Box(y=X['chlorides'], name='chlorides'))

fig.add_traces(go.Box(y=X['quality'], name='quality'))

fig.show()

- The columns have different min/max ranges, and their means and variances also differ.

- This imbalance between features can get in the way of finding the best model.

Applying MinMaxScaler and StandardScaler

from sklearn.preprocessing import MinMaxScaler, StandardScaler

mms = MinMaxScaler()

ss = StandardScaler()

ss.fit(X)

mms.fit(X)

X_ss = ss.transform(X)

X_mms = mms.transform(X)

X_ss_pd = pd.DataFrame(X_ss, columns=X.columns)

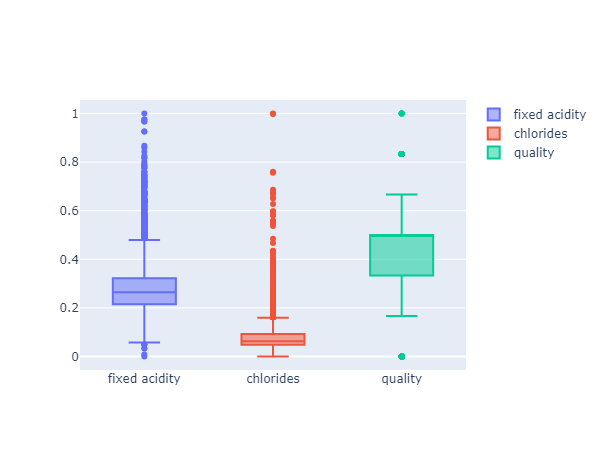

X_mms_pd = pd.DataFrame(X_mms, columns=X.columns)

Plotting the MinMaxScaler result

# Forces each column's minimum to 0 and maximum to 1

fig = go.Figure()

fig.add_traces(go.Box(y=X_mms_pd['fixed acidity'], name='fixed acidity'))

fig.add_traces(go.Box(y=X_mms_pd['chlorides'], name='chlorides'))

fig.add_traces(go.Box(y=X_mms_pd['quality'], name='quality'))

fig.show()

Plotting the StandardScaler result

# Shifts each column to mean 0 and standard deviation 1

fig = go.Figure()

fig.add_traces(go.Box(y=X_ss_pd['fixed acidity'], name='fixed acidity'))

fig.add_traces(go.Box(y=X_ss_pd['chlorides'], name='chlorides'))

fig.add_traces(go.Box(y=X_ss_pd['quality'], name='quality'))

fig.show()

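- For decision trees, this kind of preprocessing is essentially meaningless. A tree splits each feature at a threshold, and min-max or standard scaling is an increasing linear transformation that preserves the order of the values, so the same splits are found.

- Scaling is mainly useful when a model is trained by optimizing a cost function (for example, with gradient descent).

- Whether MinMaxScaler or StandardScaler works better can only be determined by trying both.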
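The claim that scaling does not change a decision tree can be checked directly. This is a minimal sketch on synthetic data: `make_classification` stands in for the wine features, and the per-column scale factors are arbitrary assumptions. It trains the same tree on raw and standardized features and compares the predictions, the split features, and the importances.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine features, with very different column scales
X, y = make_classification(n_samples=300, n_features=6, random_state=13)
X = X * np.array([1, 10, 100, 1000, 0.5, 5])

X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=13)

ss = StandardScaler().fit(X_tr)
raw_tree = DecisionTreeClassifier(max_depth=3, random_state=13).fit(X_tr, y_tr)
scaled_tree = DecisionTreeClassifier(max_depth=3, random_state=13).fit(ss.transform(X_tr), y_tr)

# Same order of values per column -> same splits -> same predictions
same_pred = np.array_equal(raw_tree.predict(X_te), scaled_tree.predict(ss.transform(X_te)))
print(same_pred)
print(raw_tree.tree_.feature[:3], scaled_tree.tree_.feature[:3])  # same split features
```

Only the thresholds differ between the two trees, because they are expressed in different units.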

Retraining with MinMaxScaler applied

X_train, X_test, y_train, y_test = train_test_split(X_mms_pd, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train ACC : ', accuracy_score(y_train, y_pred_tr))

print('Test ACC : ', accuracy_score(y_test, y_pred_test))

Train ACC : 0.9553588608812776

Test ACC : 0.9569230769230769

The accuracy is exactly the same as without scaling: for a decision tree, this preprocessing has almost no effect.
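In the code above, the scalers are fit on all of `X` before `train_test_split`, so the test rows also influence the min/max (or mean/std). This makes no difference for a tree. For scale-sensitive models, however, it is common practice to fit the scaler on the training split only. This is a minimal sketch of that pattern with scikit-learn's `make_pipeline` on synthetic data (`make_classification` is an assumption standing in for the wine features).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=300, n_features=5, random_state=13)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=13)

# The scaler inside the pipeline is fit on X_tr only; X_te is only transformed
model = make_pipeline(MinMaxScaler(), DecisionTreeClassifier(max_depth=2, random_state=13))
model.fit(X_tr, y_tr)

scaler = model.named_steps['minmaxscaler']
print(np.allclose(scaler.data_min_, X_tr.min(axis=0)))  # statistics come from the training split
print('Test ACC :', model.score(X_te, y_te))            # accuracy of the final classifier
```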

Retraining with StandardScaler applied

X_train, X_test, y_train, y_test = train_test_split(X_ss_pd, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train ACC : ', accuracy_score(y_train, y_pred_tr))

print('Test ACC : ', accuracy_score(y_test, y_pred_test))

Train ACC : 0.9553588608812776

Test ACC : 0.9569230769230769

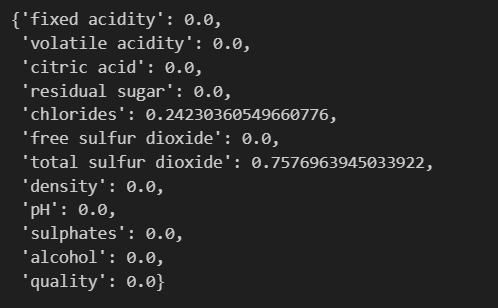

Features that matter for separating red and white wines

dict(zip(X_train.columns, wine_tree.feature_importances_))

- Increasing max_depth also changes these importance values.

- The most important column for separating red from white: 'total sulfur dioxide' (importance 0.7576963945033922)
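`feature_importances_` is normalized to sum to 1. Putting it in a sorted pandas Series is often easier to read than a dict. This is a minimal sketch on synthetic data with hypothetical feature names (for the wine tree you would pass `X_train.columns` instead). Looping over two `max_depth` values illustrates that the importances shift with depth.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic data; the column names are hypothetical
X, y = make_classification(n_samples=300, n_features=4, random_state=13)
cols = ['feature_0', 'feature_1', 'feature_2', 'feature_3']

for depth in (2, 4):
    tree_clf = DecisionTreeClassifier(max_depth=depth, random_state=13).fit(X, y)
    # Importances as a Series, largest first
    imp = pd.Series(tree_clf.feature_importances_, index=cols).sort_values(ascending=False)
    print(f'max_depth={depth}')
    print(imp)
```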

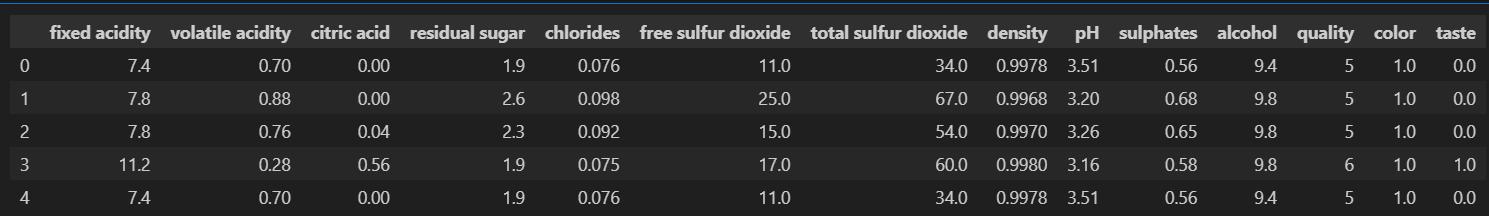

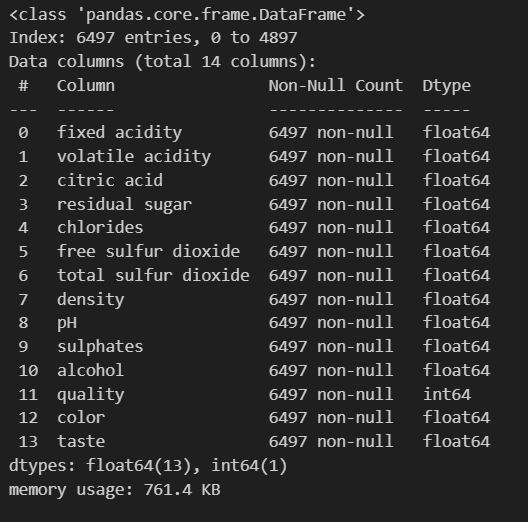

Classifying wine taste: binary classification

Binarizing the quality column

wine['taste'] = [1. if grade>5 else 0. for grade in wine['quality']]

wine.head()

wine.info()
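The list comprehension that builds `taste` can also be written as a vectorized comparison. This minimal sketch uses a hypothetical Series of grades and checks that both forms give the same 0/1 values (quality above 5 counts as tasty).

```python
import pandas as pd

# Hypothetical quality grades (the real ones range from 3 to 9)
quality = pd.Series([5, 6, 7, 4, 8, 3, 9, 5])

taste_loop = [1. if grade > 5 else 0. for grade in quality]  # as in the post
taste_vec = (quality > 5).astype(float)                      # vectorized equivalent

print(taste_vec.tolist())  # [0.0, 1.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
```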

Repeating the same steps as for the red/white classifier

X = wine.drop(['taste'], axis=1)

y = wine['taste']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train ACC : ', accuracy_score(y_train, y_pred_tr))

print('Test ACC : ', accuracy_score(y_test, y_pred_test))

Train ACC : 1.0

Test ACC : 1.0

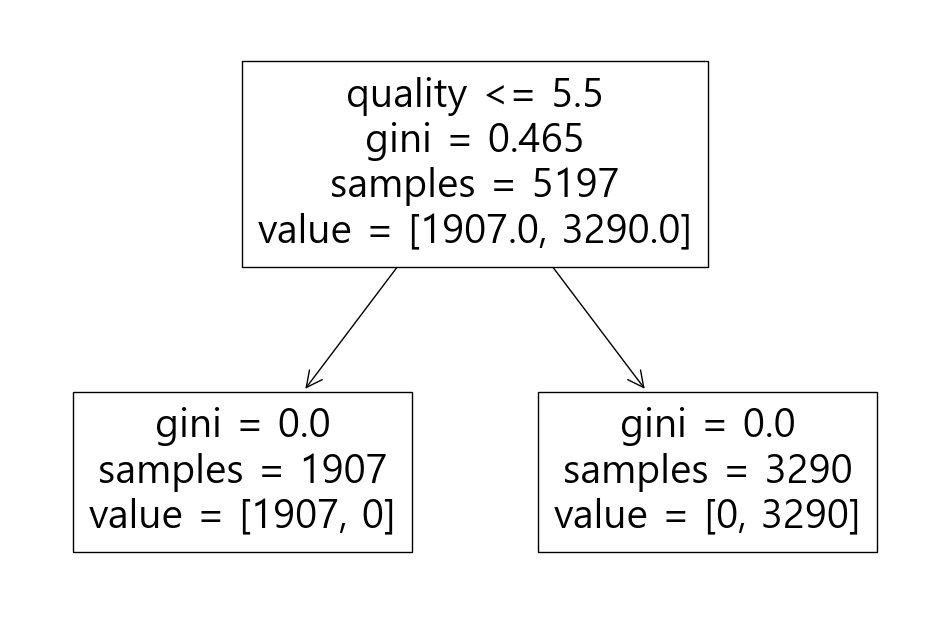

Why the accuracy is 100%

import sklearn.tree as tree

plt.figure(figsize=(12,8))

tree.plot_tree(wine_tree, feature_names=X.columns);

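Because the taste column was created from the quality column, quality has to be dropped from the features along with taste. Otherwise the tree simply relearns the rule that defined the label (quality > 5), which is why both train and test accuracy reach 100%.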
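This kind of target leakage is easy to reproduce. This is a minimal sketch on synthetic data: three random noise features plus hypothetical integer grades from 3 to 9, with the label defined as grade > 5. A depth-1 tree finds the single rule "quality <= 5.5" and scores perfectly.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(13)
n = 500
quality = rng.integers(3, 10, size=n)   # hypothetical grades 3..9
noise = rng.normal(size=(n, 3))         # features unrelated to the label
X = np.column_stack([noise, quality])   # quality is the last column (index 3)
y = (quality > 5).astype(float)         # taste derived from quality

clf = DecisionTreeClassifier(max_depth=1, random_state=13).fit(X, y)
print(clf.score(X, y))         # 1.0
print(clf.tree_.feature[0])    # 3: the root split uses quality
print(clf.tree_.threshold[0])  # 5.5: the rule "quality <= 5.5"
```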

Dropping quality and checking again

X = wine.drop(['taste', 'quality'], axis=1)

y = wine['taste']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train ACC : ', accuracy_score(y_train, y_pred_tr))

print('Test ACC : ', accuracy_score(y_test, y_pred_test))

Train ACC : 0.7294593034442948

Test ACC : 0.7161538461538461

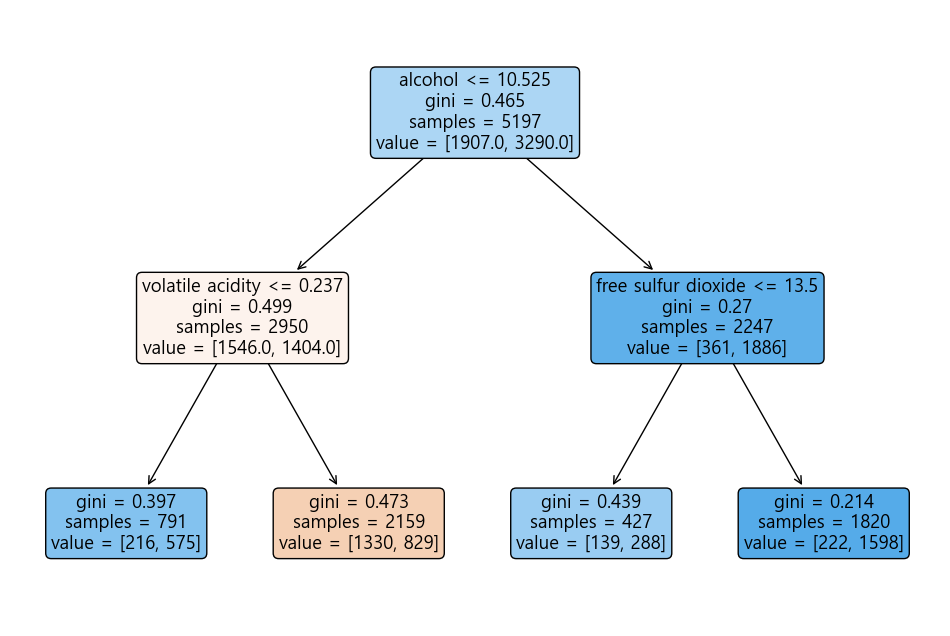

Which wines can be called tasty?

plt.figure(figsize=(12,8))

tree.plot_tree(wine_tree, feature_names=X.columns, rounded=True, filled=True);

plt.show()
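Besides `plot_tree`, scikit-learn can print the same tree as indented text rules with `sklearn.tree.export_text`, which is handy for reading off conditions such as "which wines count as tasty". This is a minimal sketch on synthetic data with hypothetical feature names. For the post's model you would pass `wine_tree` and `feature_names=list(X.columns)`.

```python
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier, export_text

# Synthetic stand-in for the wine features; names are hypothetical
X, y = make_classification(n_samples=300, n_features=4, random_state=13)
names = ['feature_0', 'feature_1', 'feature_2', 'feature_3']

clf = DecisionTreeClassifier(max_depth=2, random_state=13).fit(X, y)
rules = export_text(clf, feature_names=names)
print(rules)  # one line per split ("feature <= threshold") and per leaf ("class: ...")
```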

"This post was written using excerpts from the lecture materials of Zerobase Data Job School."