This week-3 assignment covers storage on EKS: how to use each type of storage available on EKS, and how to create and restore snapshots.

1. Setting up the test environment for the Storage assignment

1.1 Basic setup

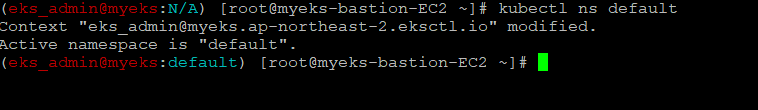

1.1.1 Apply the default namespace

$> kubectl ns default

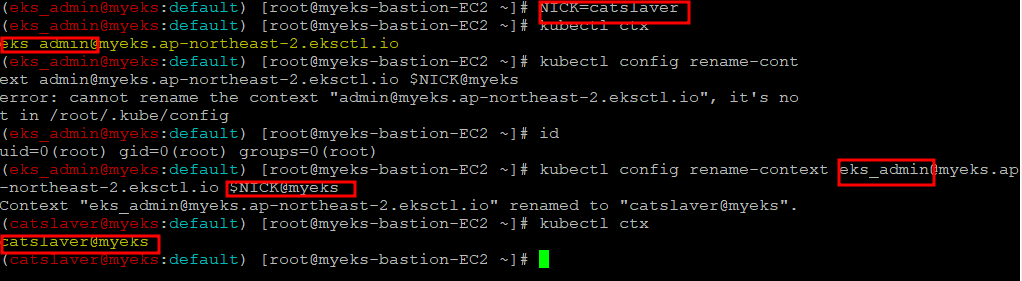

1.1.2 Change the context

$> NICK=catslaver

$> kubectl ctx

$> kubectl config rename-context admin@myeks.ap-northeast-2.eksctl.io $NICK@myeks

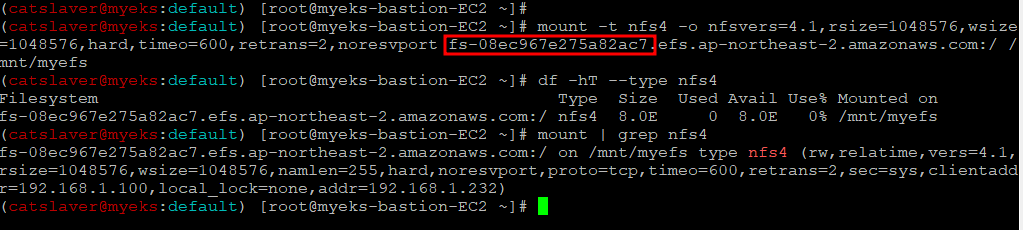

1.1.3 EFS Mount

$> mount -t nfs4 -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport fs-08ec967e275a82ac7.efs.ap-northeast-2.amazonaws.com:/ /mnt/myefs

$> df -hT --type nfs4

$> mount | grep nfs4

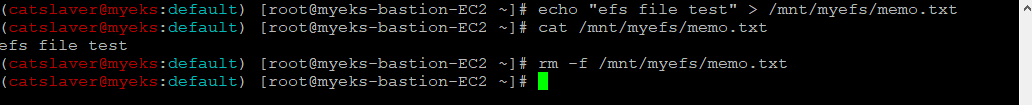

$> echo "efs file test" > /mnt/myefs/memo.txt

$> cat /mnt/myefs/memo.txt

$> rm -f /mnt/myefs/memo.txt

1.2 Verify the setup

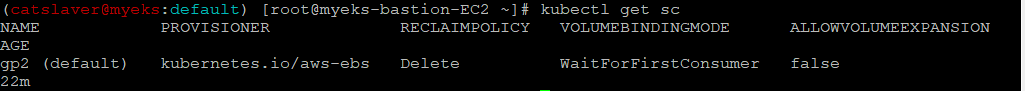

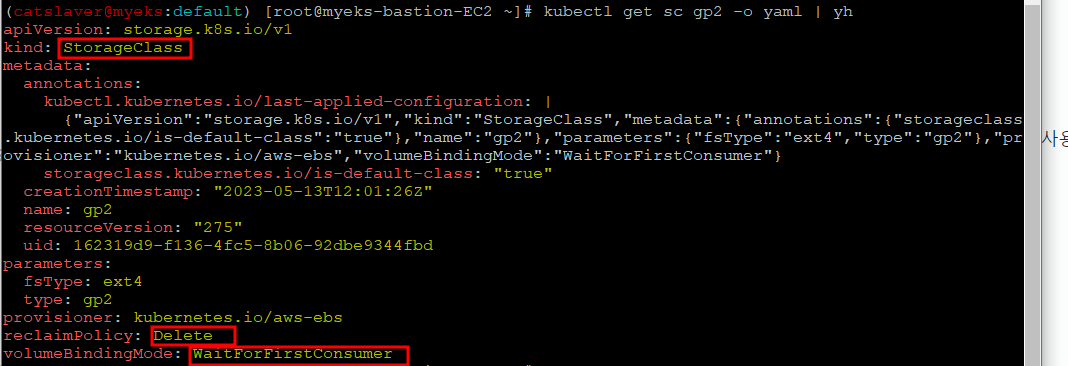

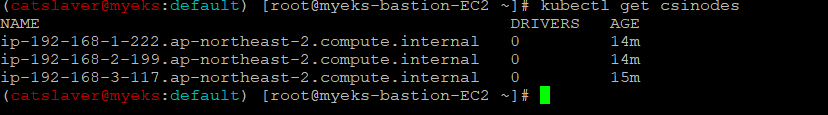

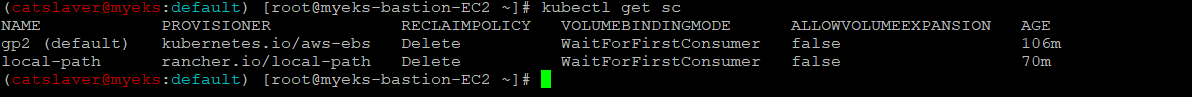

1.2.1 Check the StorageClass and CSI nodes

$> kubectl get sc

$> kubectl get sc gp2 -o yaml | yh

$> kubectl get csinodes

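1.2.2 Other checks

Checking node information, checking node IPs and assigning them to PrivateIP variables, checking the node security group ID, connecting to the worker nodes over SSH, and installing tools on the nodes are all the same as in the week-2 assignment.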

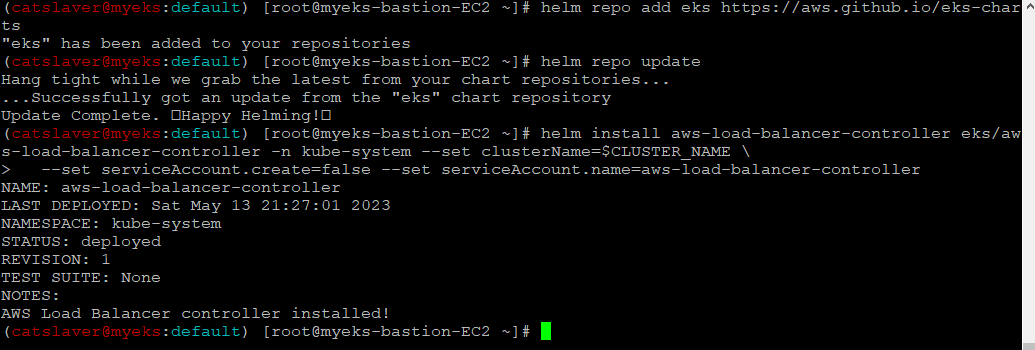

1.3 Install the AWS LB Controller/ExternalDNS and kube-ops-view

1.3.1 AWS LB Controller

$> helm repo add eks https://aws.github.io/eks-charts

$> helm repo update

$> helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
  --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

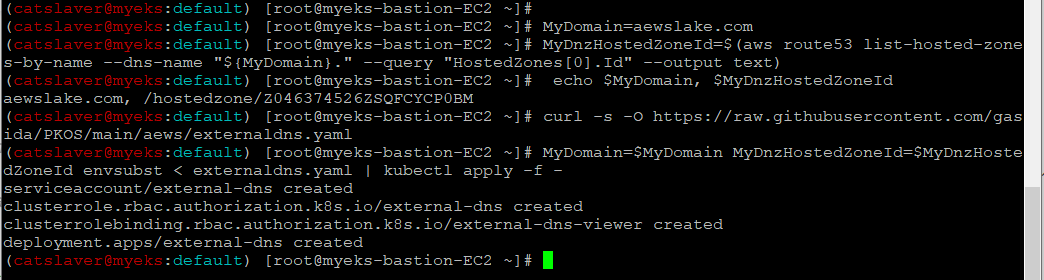

1.3.2 ExternalDNS

$> MyDomain=aewslake.com

$> MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

$> echo $MyDomain, $MyDnzHostedZoneId

$> curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

$> MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

1.4 kube-ops-view

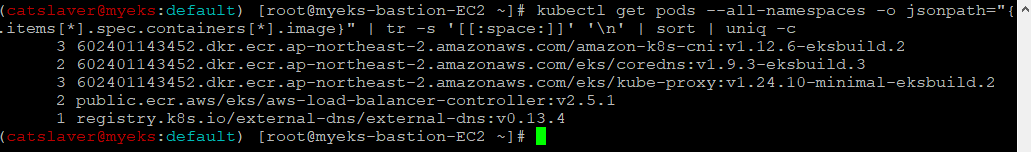

1.4.1 Check image information

$> kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

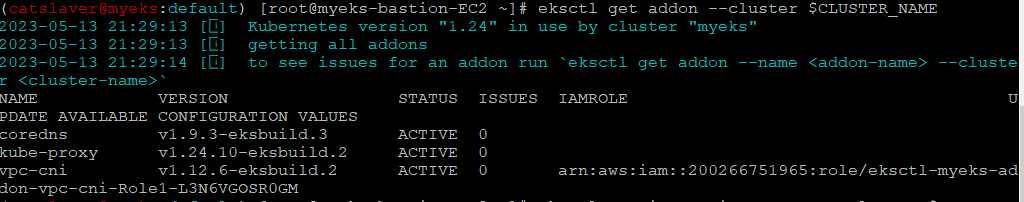

1.4.2 Check the add-ons installed/updated with eksctl

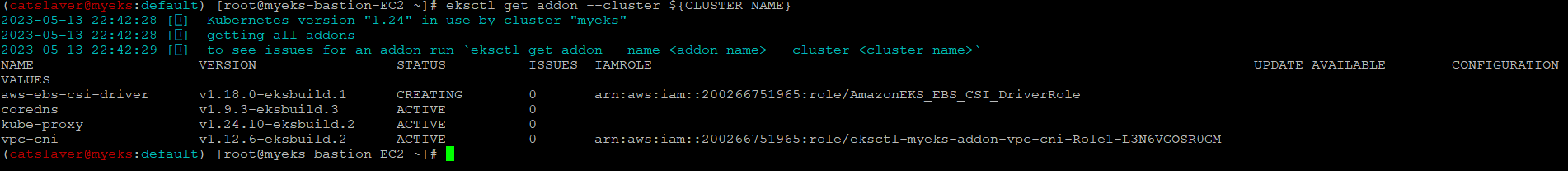

$> eksctl get addon --cluster $CLUSTER_NAME

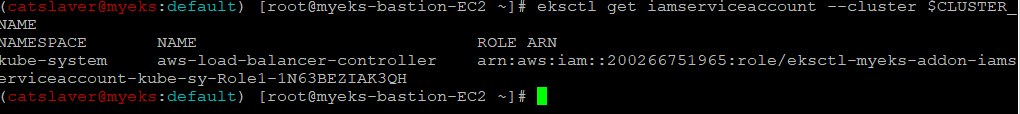

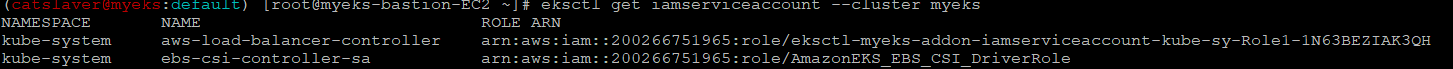

1.4.3 Check IRSA

$> eksctl get iamserviceaccount --cluster $CLUSTER_NAME

2. Ephemeral file system (ephemeral storage)

This is the case where files created by a Pod are no longer available once the Pod terminates.

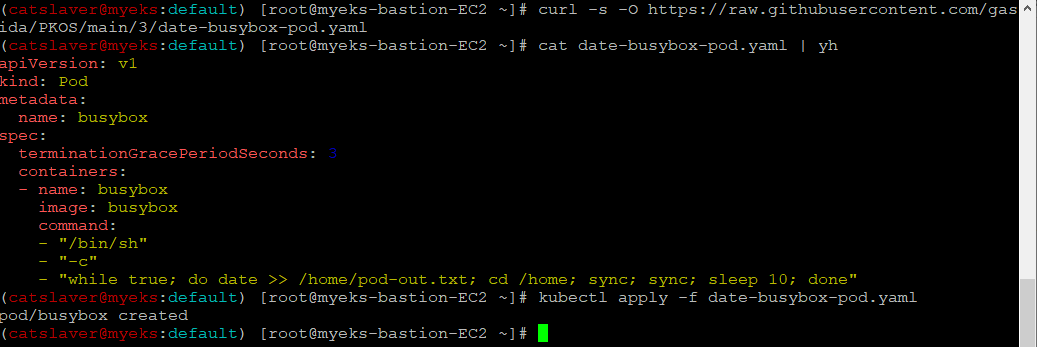

The manifest below is a simple example of an ephemeral file system: after the Pod terminates, the files it created while running can no longer be accessed.

The test Pod appends the current date to /home/pod-out.txt every 10 seconds.

apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: busybox
    image: busybox
    command:
    - "/bin/sh"
    - "-c"
    - "while true; do date >> /home/pod-out.txt; cd /home; sync; sync; sleep 10; done"

2.1 Deploy the Pod

$> curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/date-busybox-pod.yaml

$> cat date-busybox-pod.yaml | yh

$> kubectl apply -f date-busybox-pod.yaml

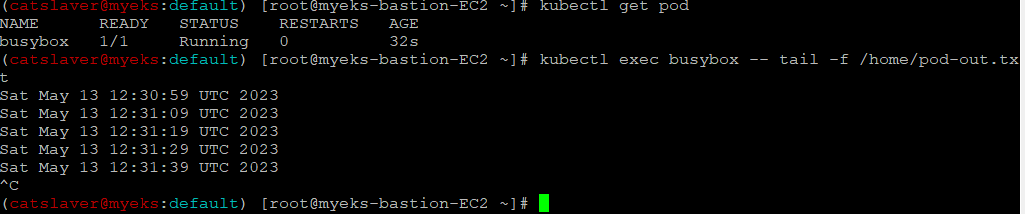

2.2 Check the date data in /home/pod-out.txt

$> kubectl get pod

$> kubectl exec busybox -- tail -f /home/pod-out.txt

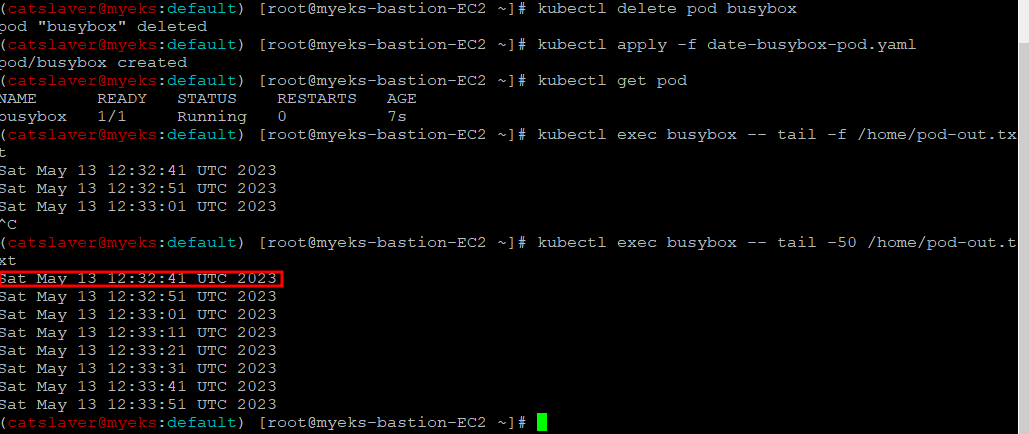
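The comparison carried out by hand in steps 2.2 through 2.5 can also be scripted. The following is only a minimal sketch: `pod_first_line` and `check_persistence` are hypothetical helper names introduced here for illustration, and the commented usage assumes the `busybox` Pod and `date-busybox-pod.yaml` from this section.

```shell
# Hypothetical helper: print the first line of a file inside a Pod.
# $1 = Pod name, $2 = file path inside the container
pod_first_line() {
  kubectl exec "$1" -- head -n 1 "$2"
}

# Hypothetical helper: compare the first line captured before the Pod was
# deleted ($1) with the first line read from the re-created Pod ($2).
# Prints "kept" when they match and "lost" when they differ.
check_persistence() {
  if [ "$1" = "$2" ]; then
    echo "kept"
  else
    echo "lost"
  fi
}

# Usage (run against the cluster):
#   before=$(pod_first_line busybox /home/pod-out.txt)
#   kubectl delete pod busybox && kubectl apply -f date-busybox-pod.yaml
#   kubectl wait --for=condition=Ready pod/busybox --timeout=60s
#   after=$(pod_first_line busybox /home/pod-out.txt)
#   check_persistence "$before" "$after"
```

With ephemeral storage the expected result is "lost", because the re-created Pod starts with a fresh file.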

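2.3 Delete the Pod

$> kubectl delete pod busybox

2.4 Deploy the same Pod again

$> kubectl apply -f date-busybox-pod.yaml

2.5 Check whether the content written by the previous Pod still exists

$> kubectl exec busybox -- tail -f /home/pod-out.txt

The first line of pod-out.txt does not match anything in the pod-out.txt contents seen just before the Pod was deleted.

2.6 Delete the Pod

$> kubectl delete pod busybox

3. Creating a persistent volume instead of an ephemeral file system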

PV/PVC backed by a host path: deploy the local-path-provisioner StorageClass

Resources created by the manifest:

Namespace : local-path-storage

ServiceAccount, ClusterRole, ClusterRoleBinding : permissions granted to local-path-provisioner

Deployment : deploys the local-path-provisioner Pod and mounts /etc/config/

StorageClass : defines rancher.io/local-path

ConfigMap : configuration for rancher.io/local-path (config.json with the node path map, the setup and teardown scripts, and the helper Pod template)

apiVersion: v1
kind: Namespace
metadata:
  name: local-path-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-path-provisioner-service-account
  namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-path-provisioner-role
rules:
  - apiGroups: [ "" ]
    resources: [ "nodes", "persistentvolumeclaims", "configmaps" ]
    verbs: [ "get", "list", "watch" ]
  - apiGroups: [ "" ]
    resources: [ "endpoints", "persistentvolumes", "pods" ]
    verbs: [ "*" ]
  - apiGroups: [ "" ]
    resources: [ "events" ]
    verbs: [ "create", "patch" ]
  - apiGroups: [ "storage.k8s.io" ]
    resources: [ "storageclasses" ]
    verbs: [ "get", "list", "watch" ]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-path-provisioner-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: local-path-provisioner-role
subjects:
  - kind: ServiceAccount
    name: local-path-provisioner-service-account
    namespace: local-path-storage
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      serviceAccountName: local-path-provisioner-service-account
      containers:
        - name: local-path-provisioner
          image: rancher/local-path-provisioner:master-head
          imagePullPolicy: IfNotPresent
          command:
            - local-path-provisioner
            - --debug
            - start
            - --config
            - /etc/config/config.json
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config/
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      volumes:
        - name: config-volume
          configMap:
            name: local-path-config
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/opt/local-path-provisioner"]
        }
      ]
    }
  setup: |-
    #!/bin/sh
    set -eu
    mkdir -m 0777 -p "$VOL_DIR"
  teardown: |-
    #!/bin/sh
    set -eu
    rm -rf "$VOL_DIR"
  helperPod.yaml: |-
    apiVersion: v1
    kind: Pod
    metadata:
      name: helper-pod
    spec:
      containers:
      - name: helper-pod
        image: busybox
        imagePullPolicy: IfNotPresent

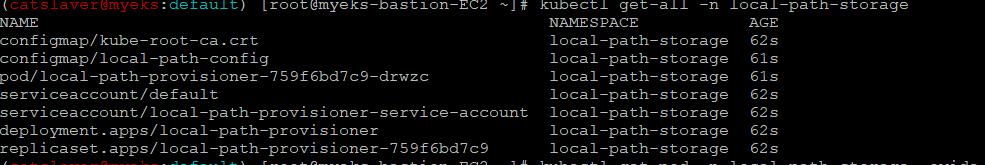

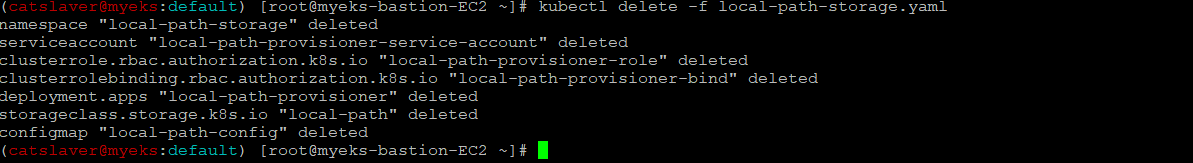

3.1 Deploy the local-path provisioner

$> curl -s -O https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

$> kubectl apply -f local-path-storage.yaml

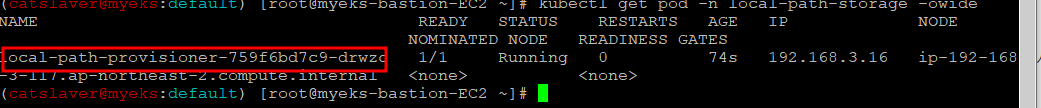

3.2 Verify the deployment

$> kubectl get-all -n local-path-storage

$> kubectl get pod -n local-path-storage -owide

$> kubectl describe cm -n local-path-storage local-path-config

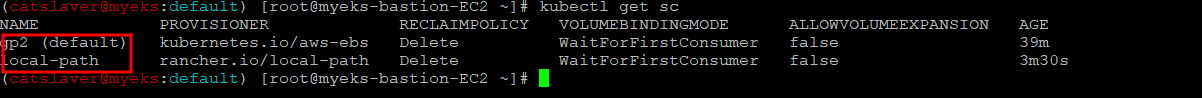

$> kubectl get sc

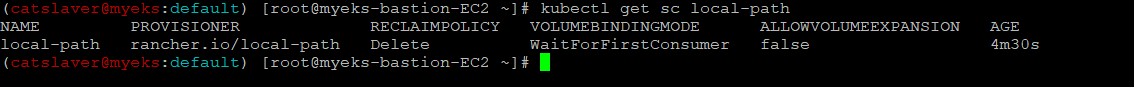

$> kubectl get sc local-path

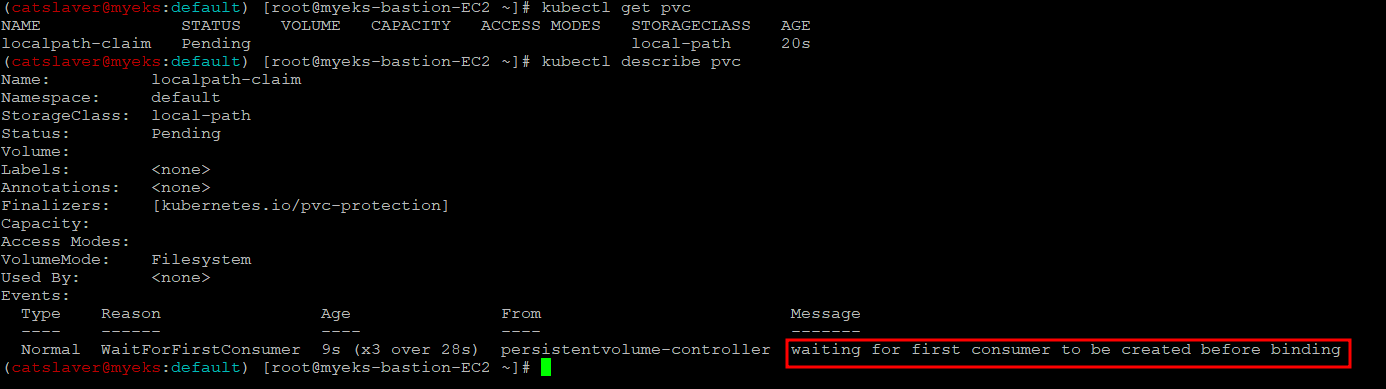

3.3 PVC 생성

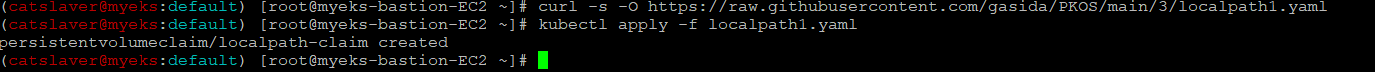

Storage Class가 local-path 인 PVC 생성

$> curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/localpath1.yaml

$> kubectl apply -f localpath1.yaml
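The contents of localpath1.yaml are not shown in this post. As a rough sketch of what such a claim could look like, the block below writes a comparable manifest to a separate, hypothetical file name (`localpath1-sketch.yaml`) so the downloaded file is not overwritten. The claim name and StorageClass come from this section; the 1Gi size is an assumption.

```shell
# Write an illustrative PVC manifest; this is not the downloaded localpath1.yaml.
cat <<EOT > localpath1-sketch.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: localpath-claim          # name used when the PVC is deleted in 3.11
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi               # assumption: the size is not shown in this post
  storageClassName: local-path   # the StorageClass deployed in 3.1
EOT
```

Because the local-path StorageClass uses `volumeBindingMode: WaitForFirstConsumer`, a claim like this would be expected to stay Pending until a Pod that mounts it is scheduled.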

3.4 Verify the PVC

$> kubectl get pvc

$> kubectl describe pvc

3.5 Deploy a Pod that uses the PV/PVC

Run a Pod that appends the date to /data/out.txt every 5 seconds.

$> curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/localpath2.yaml

$> kubectl apply -f localpath2.yaml

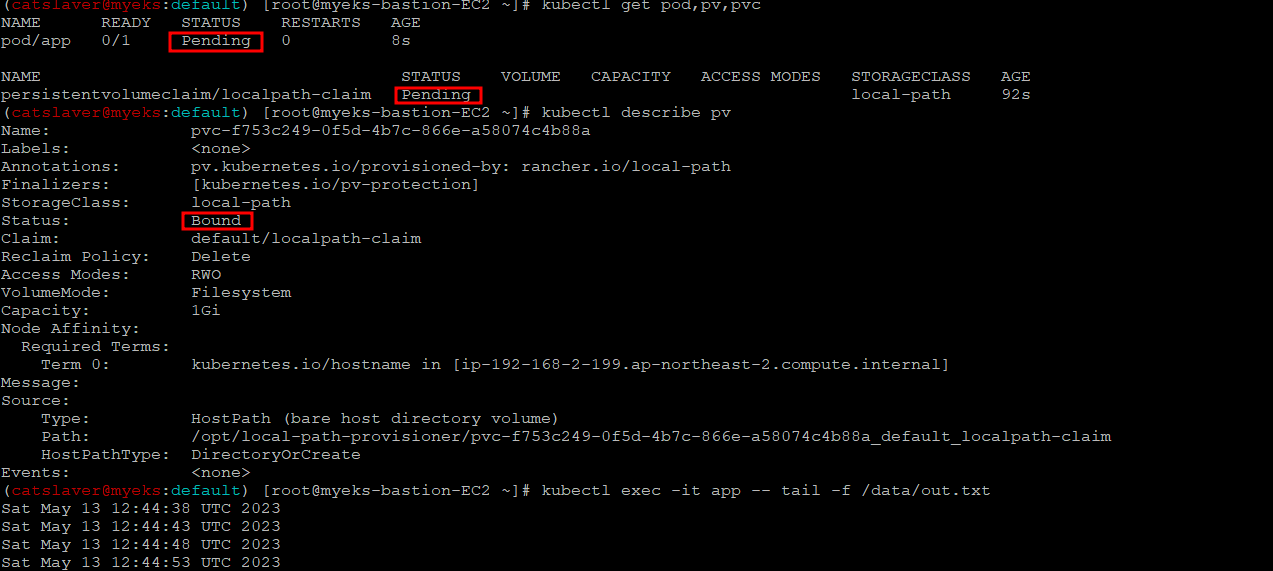

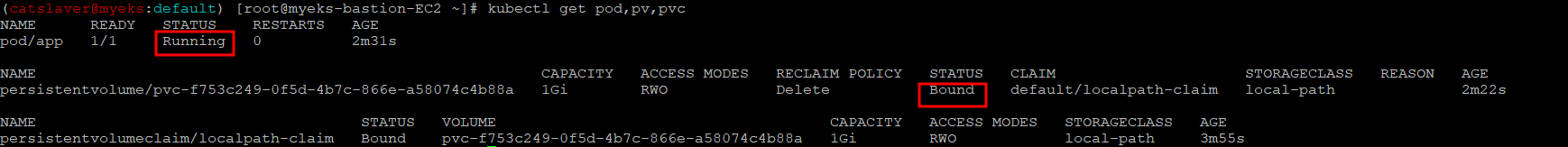

3.6 Check the Pod and the data written to /data/out.txt

$> kubectl get pod,pv,pvc

$> kubectl describe pv # check Node Affinity

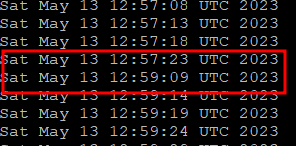

$> kubectl exec -it app -- tail -f /data/out.txt

Pod 생성 후에 Status 변화에서 약간의 시간이 필요한것 같다

3.7 직접 Node에 접속하여 확인

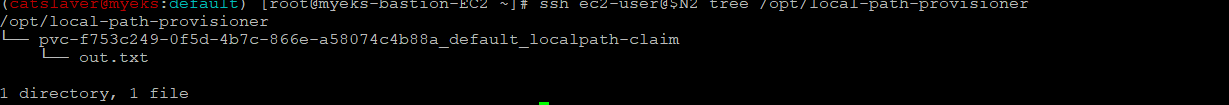

$> ssh ec2-user@$N2 tree /opt/local-path-provisionerPod가 생성된 Node에 접속하여 확인

3.8 파드 삭제 후 데이터 유지 확인

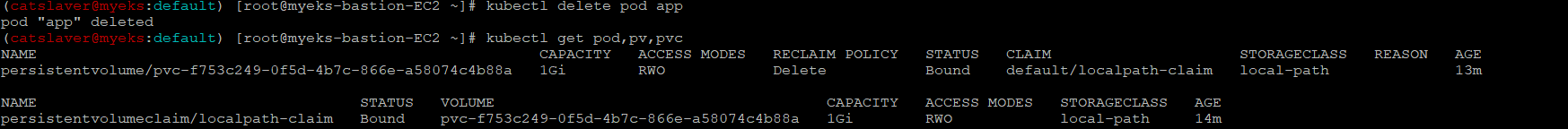

$>kubectl delete pod app

$>kubectl get pod,pv,pvcPod만 삭제

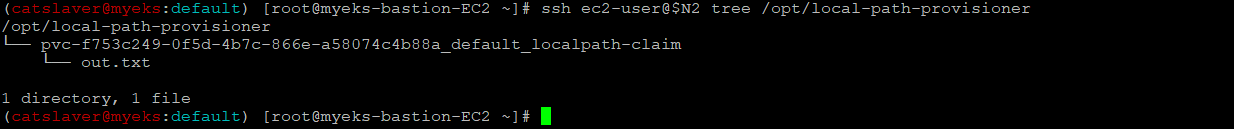

$>ssh ec2-user@$N2 tree /opt/local-path-provisioner파일은 그대로 유지

3.9 파드 재 실행

$> kubectl apply -f localpath2.yaml3.10 데이터 확인

$> kubectl exec -it app -- head /data/out.txt

$> kubectl exec -it app -- tail -f /data/out.txtPod가 종료후에도 파일은 그대로 유지가 되었으며 , Pod가 재 실행시 유지되어 있던 파일에 추가로 데이터 생성

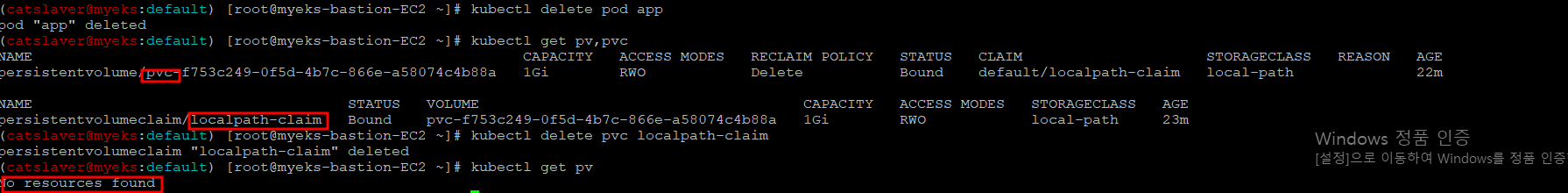

3.11 파드와 PVC 삭제

$> kubectl delete pod app

$> kubectl get pv,pvc

$> kubectl delete pvc localpath-claim

$>kubectl get pv

Pod 삭제 후에도 Pv와 Pvc는 유지가 되고, PVC 삭제시 자동으로 Pv도 삭제

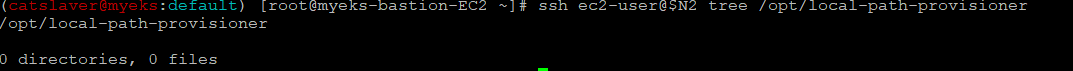

$>ssh ec2-user@$N2 tree /opt/local-path-provisionerPod가 참조했던 파일은 삭제

4. AWS EBS Controller with CSI (Container Storage Interface)

So far the exercises used storage on the file system of the EKS worker nodes. From this part on, they cover integration with the storage services that AWS provides.

The first one is accessing AWS EBS from EKS.

4.1 Configure IRSA using the AWS managed policy AmazonEBSCSIDriverPolicy

$> eksctl create iamserviceaccount \
  --name ebs-csi-controller-sa \
  --namespace kube-system \
  --cluster ${CLUSTER_NAME} \
  --attach-policy-arn arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy \
  --approve \
  --role-only \
  --role-name AmazonEKS_EBS_CSI_DriverRole

4.2 Check IRSA

$> kubectl get sa -n kube-system ebs-csi-controller-sa -o yaml | head -5

$> eksctl get iamserviceaccount --cluster myeks

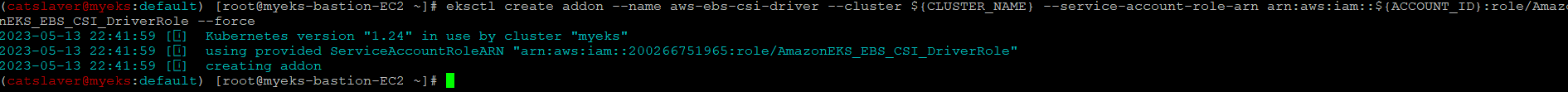

4.3 Add the Amazon EBS CSI driver add-on

$> eksctl create addon --name aws-ebs-csi-driver --cluster ${CLUSTER_NAME} --service-account-role-arn arn:aws:iam::${ACCOUNT_ID}:role/AmazonEKS_EBS_CSI_DriverRole --force

4.4 Verify the EBS CSI driver

$> eksctl get addon --cluster ${CLUSTER_NAME}

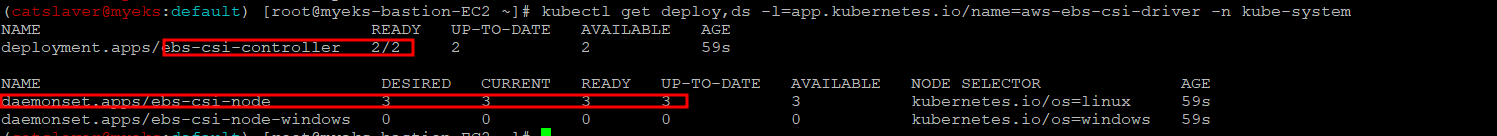

$> kubectl get deploy,ds -l=app.kubernetes.io/name=aws-ebs-csi-driver -n kube-system

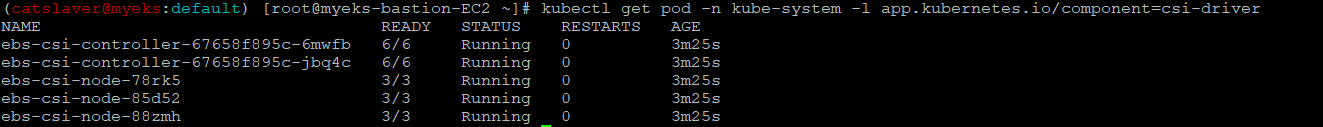

$> kubectl get pod -n kube-system -l 'app in (ebs-csi-controller,ebs-csi-node)'

$> kubectl get pod -n kube-system -l app.kubernetes.io/component=csi-driver

# Check the 6 containers in the ebs-csi-controller Pod

$> kubectl get pod -n kube-system -l app=ebs-csi-controller -o jsonpath='{.items[0].spec.containers[*].name}' ; echo

# Output: ebs-plugin csi-provisioner csi-attacher csi-snapshotter csi-resizer liveness-probe

# Check csinodes

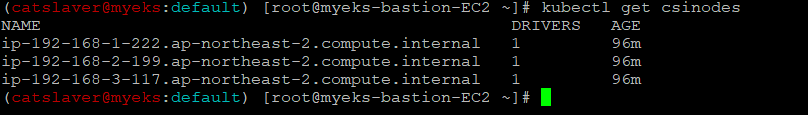

$> kubectl get csinodes

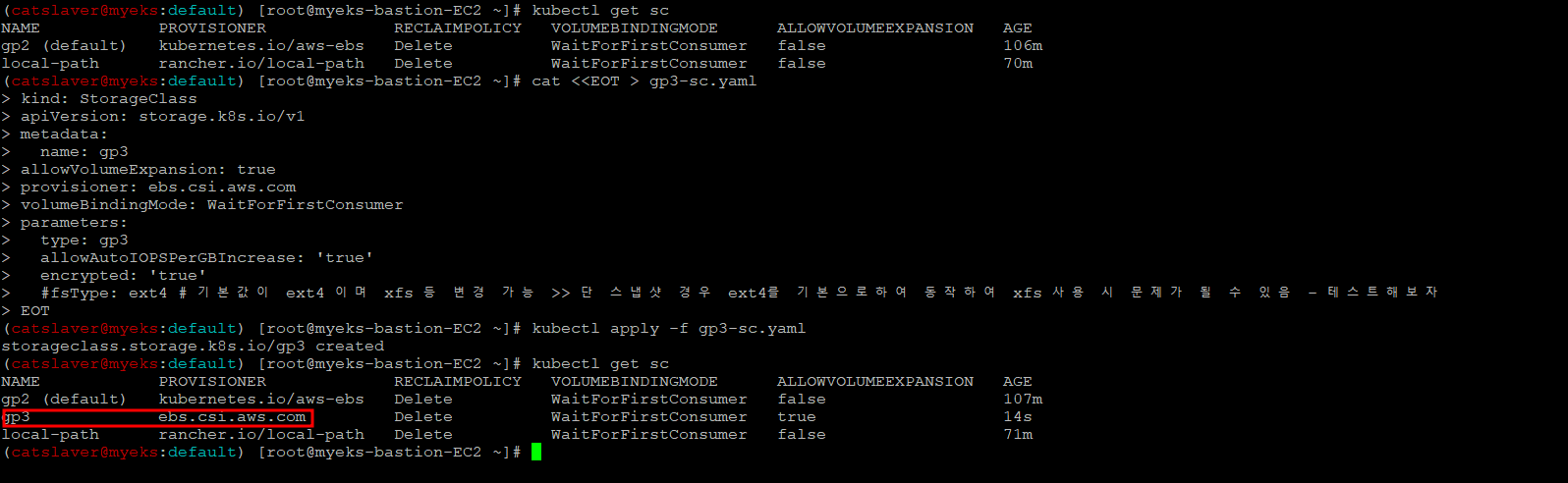

4.5 Create the gp3 StorageClass

$> kubectl get sc

$> cat <<EOT > gp3-sc.yaml

kind: StorageClass

apiVersion: storage.k8s.io/v1

metadata:

name: gp3

allowVolumeExpansion: true

provisioner: ebs.csi.aws.com

volumeBindingMode: WaitForFirstConsumer

parameters:

type: gp3

allowAutoIOPSPerGBIncrease: 'true'

encrypted: 'true'

#fsType: ext4 # 기본값이 ext4 이며 xfs 등 변경 가능 >> 단 스냅샷 경우 ext4를 기본으로하여 동작하여 xfs 사용 시 문제가 될 수 있음 - 테스트해보자

EOT

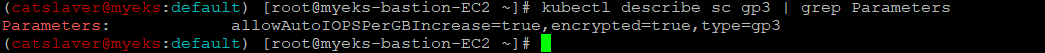

$> kubectl apply -f gp3-sc.yaml4.6 gp3 스토리지 클래스 생성 확인

$> kubectl get sc

$> kubectl describe sc gp3 | grep Parameters

4.7 Test access to EBS storage through the StorageClass and CSI driver created above

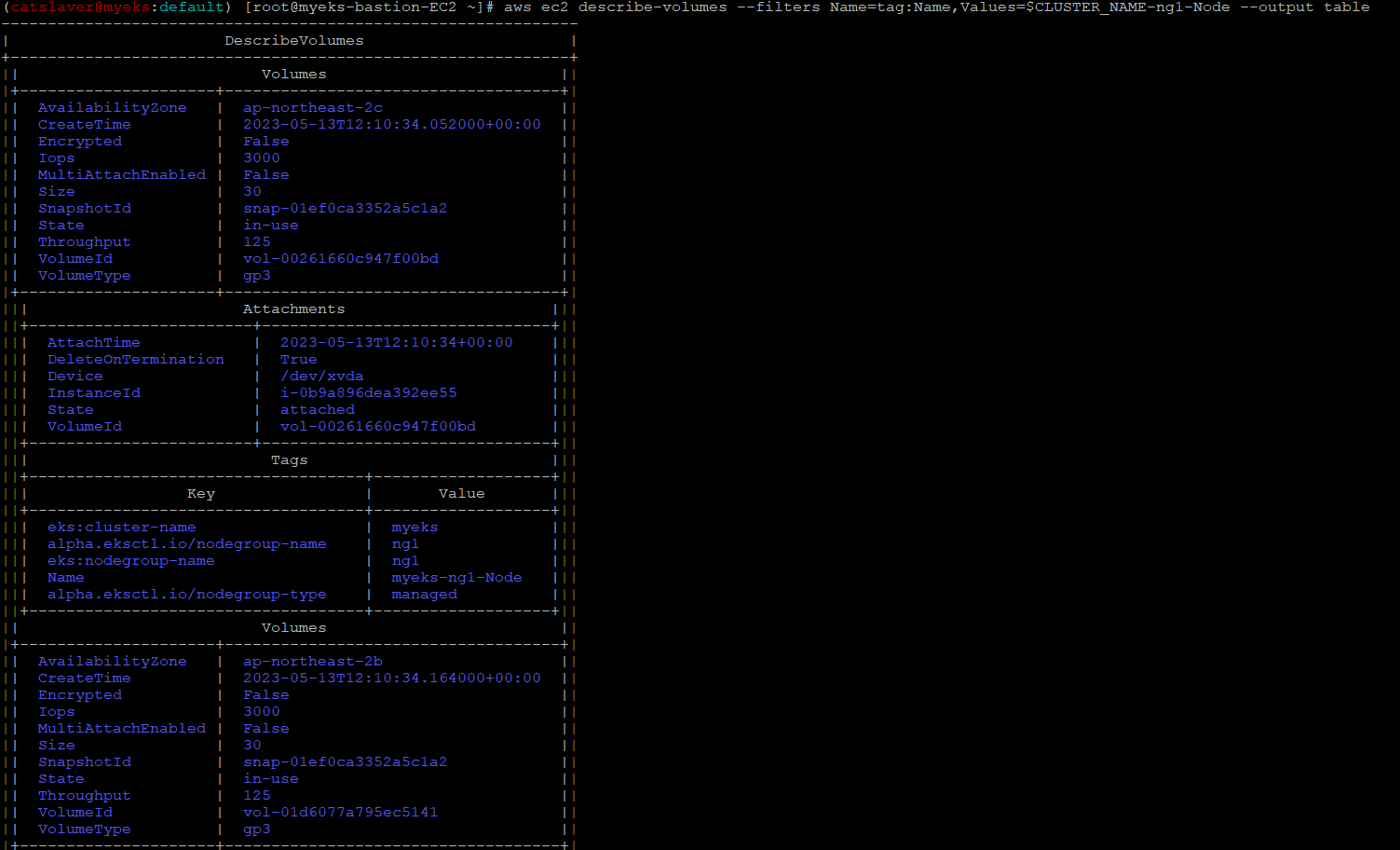

4.7.1 Check the EBS volumes currently attached to the worker nodes

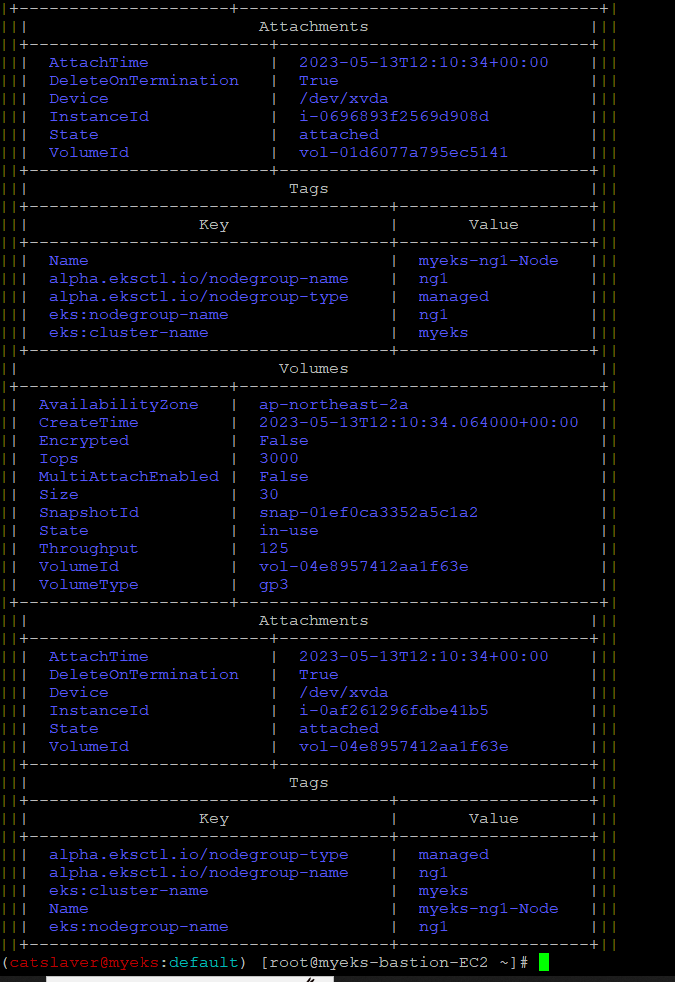

$> aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --output table

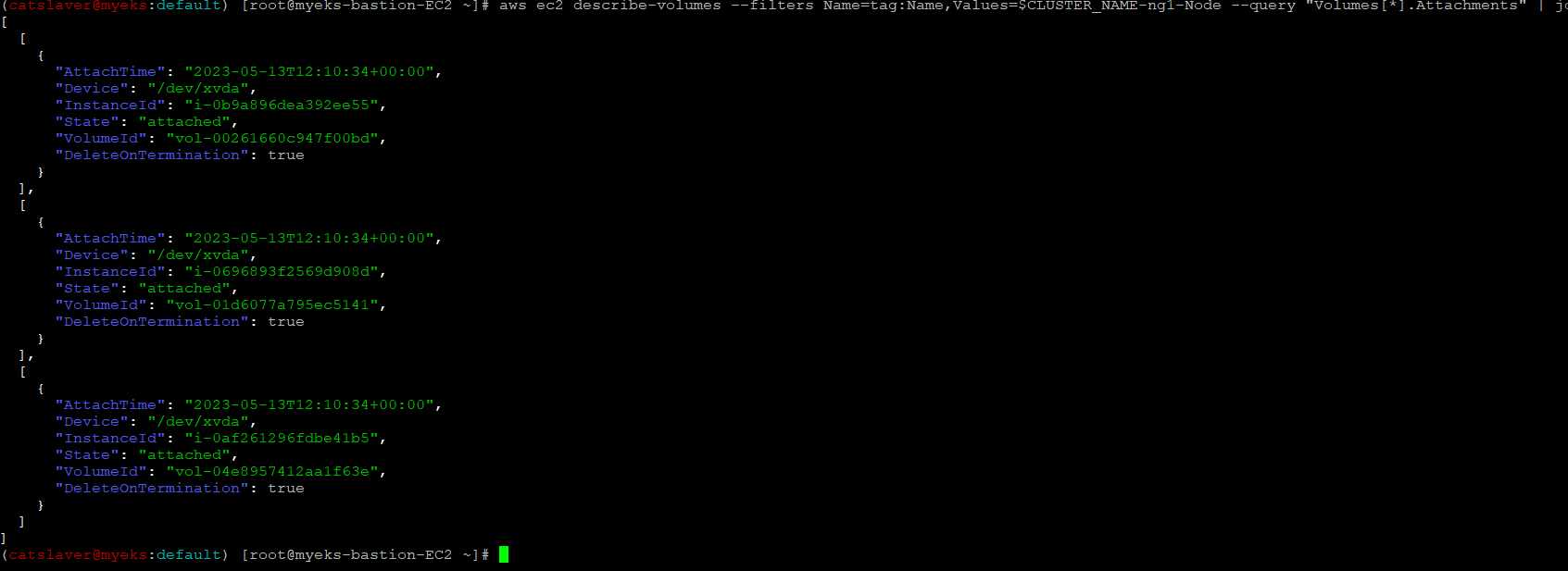

$> aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[*].Attachments" | jq

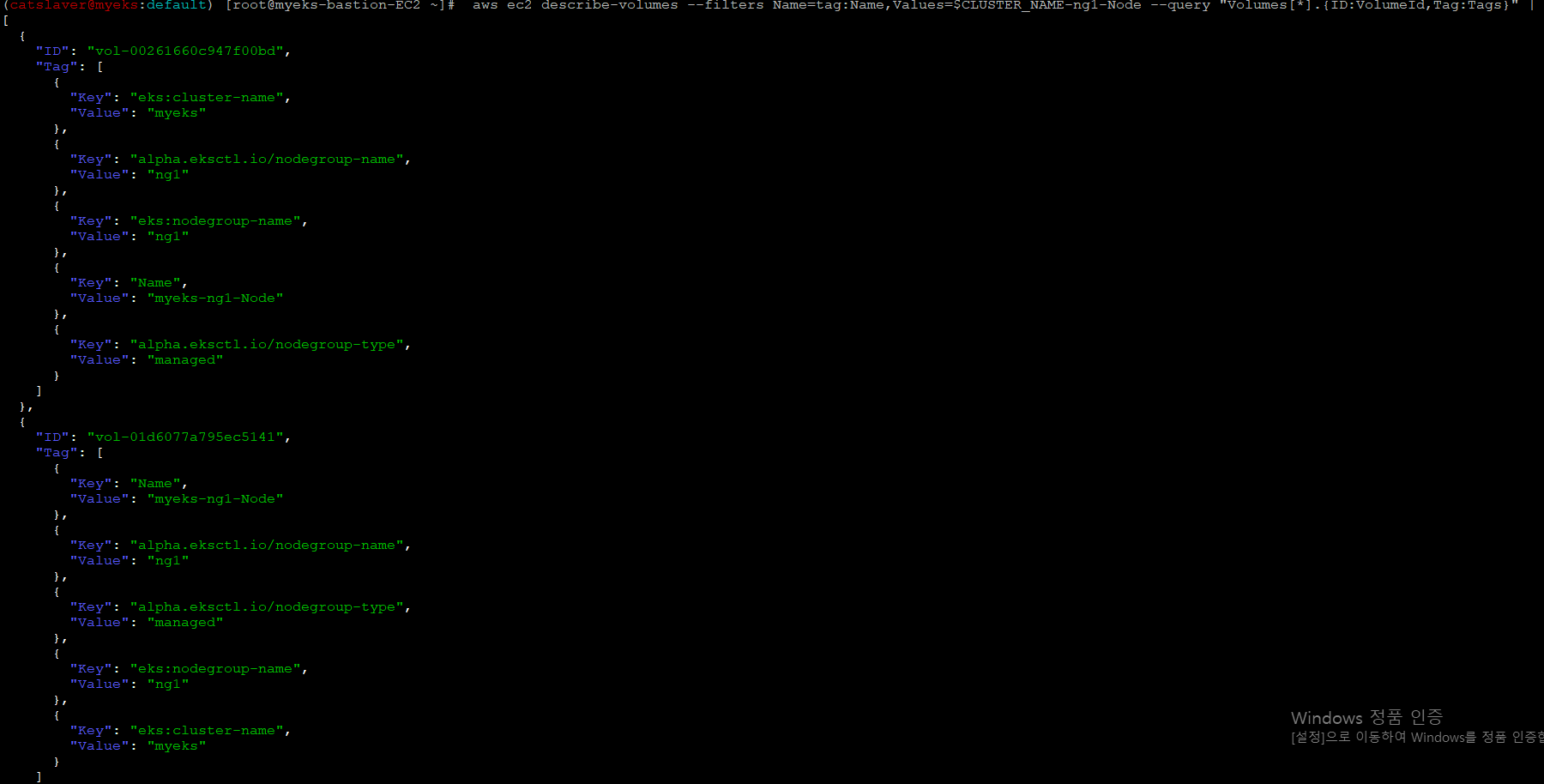

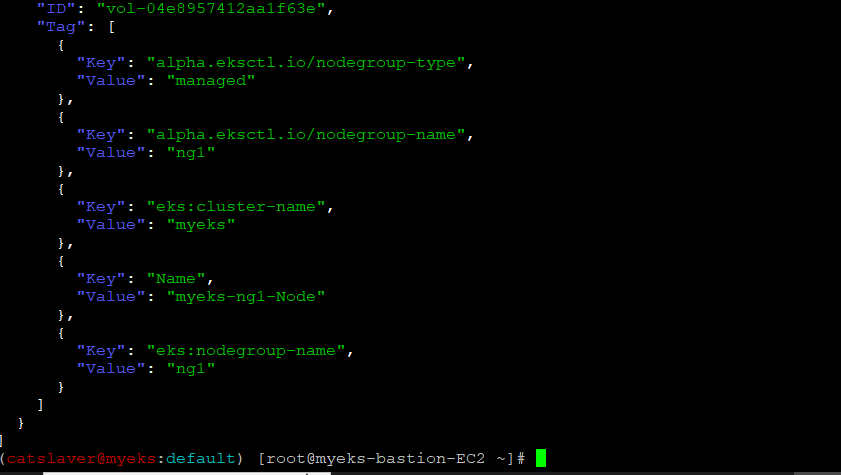

$> aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[*].{ID:VolumeId,Tag:Tags}" | jq

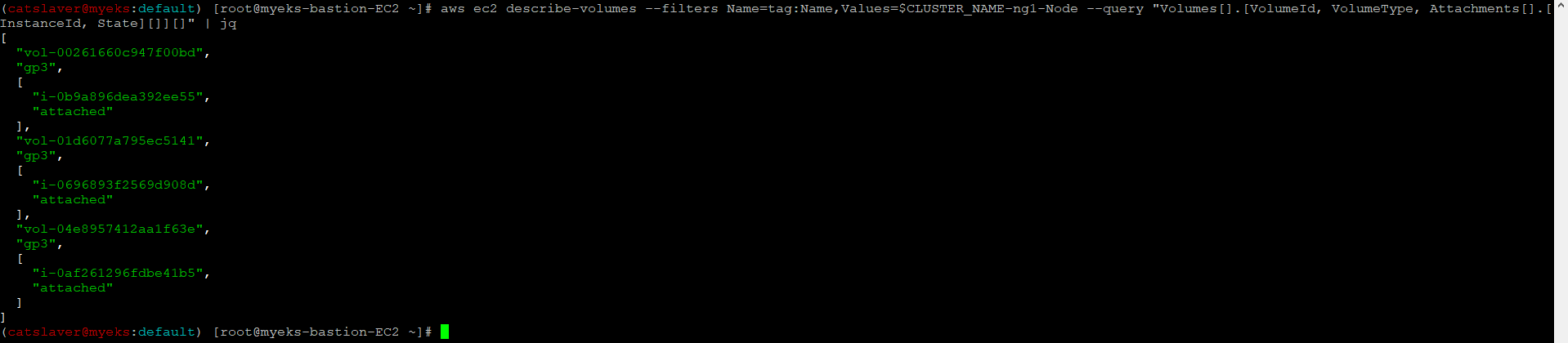

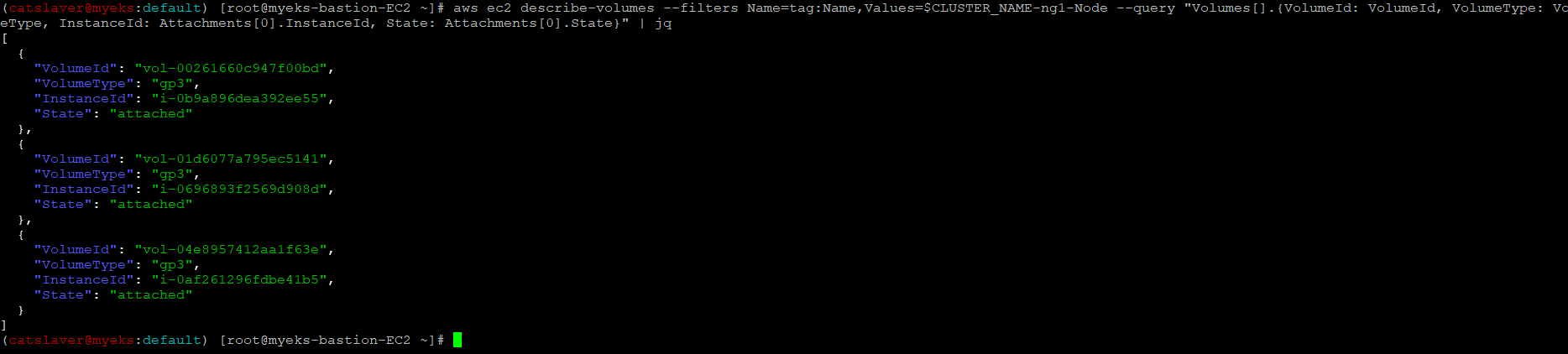

$> aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[].[VolumeId, VolumeType, Attachments[].[InstanceId, State][]][]" | jq

$> aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

4.7.2 Check the EBS volumes added to Pods on the worker nodes

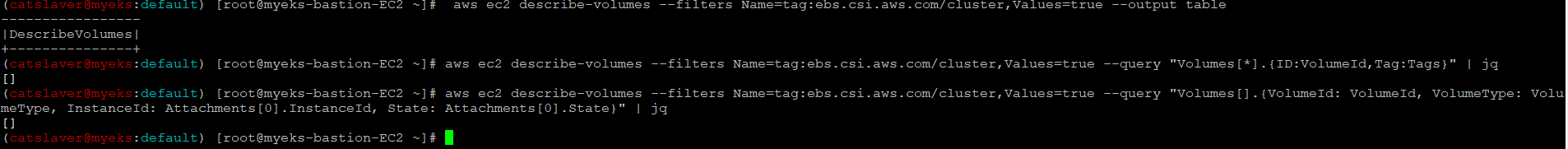

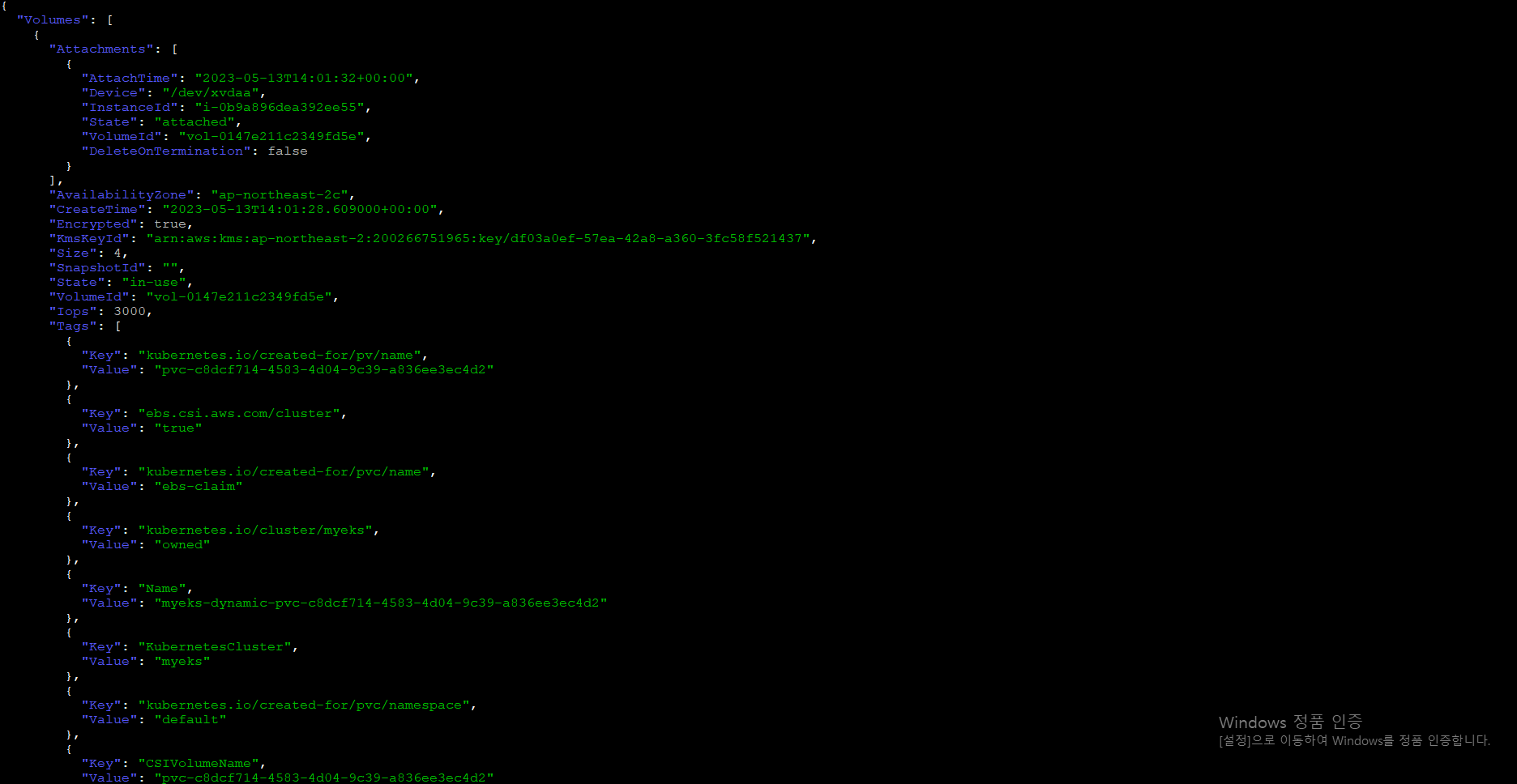

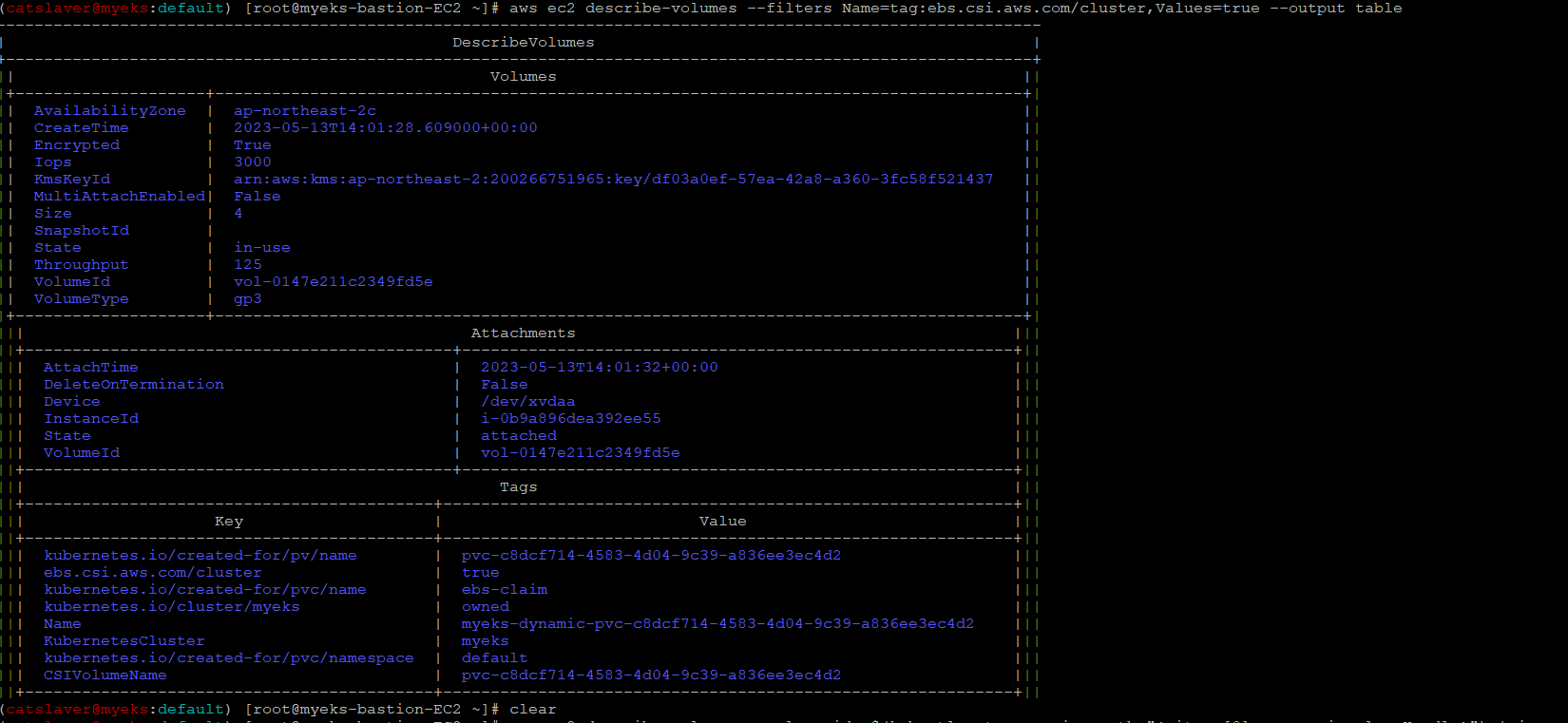

$> aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --output table

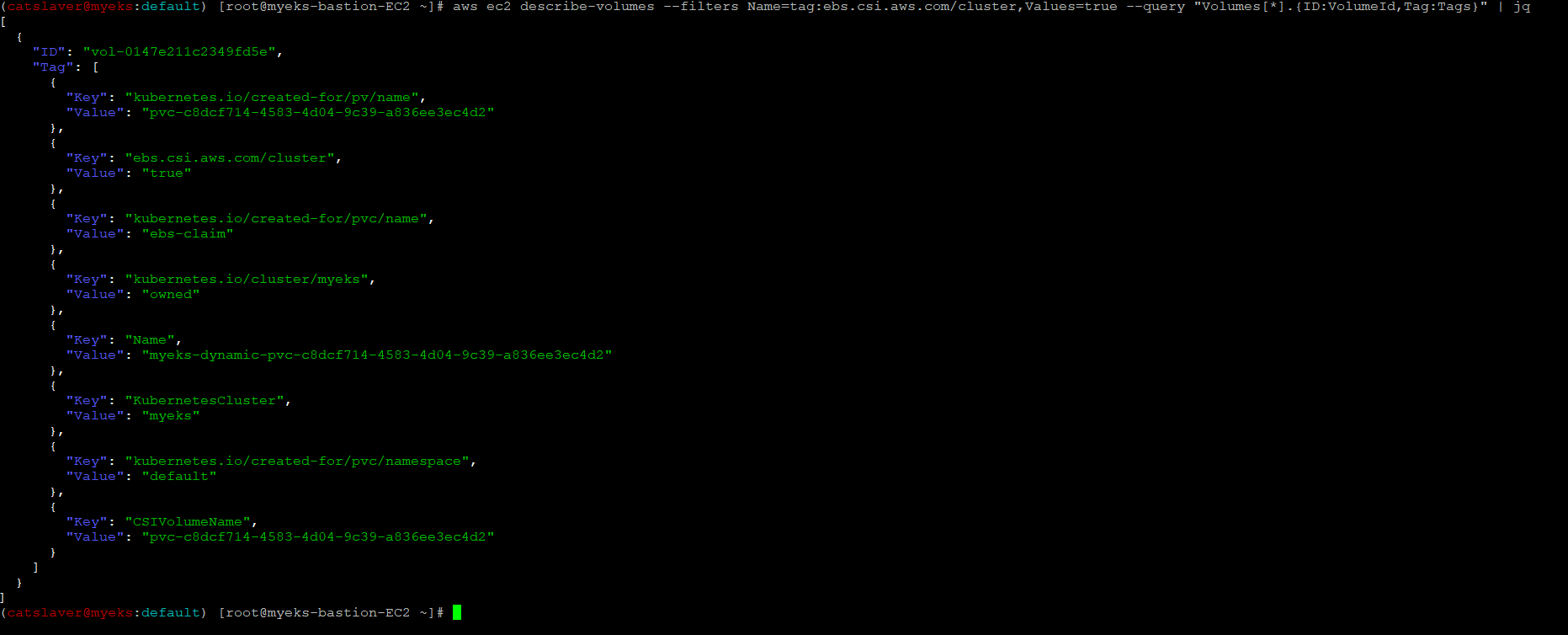

$> aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[*].{ID:VolumeId,Tag:Tags}" | jq

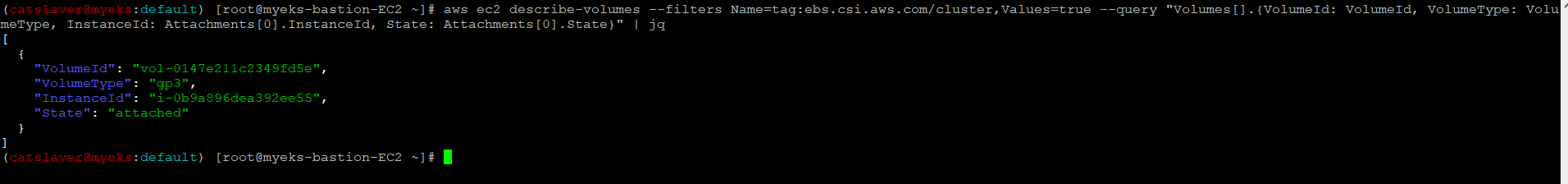

$> aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

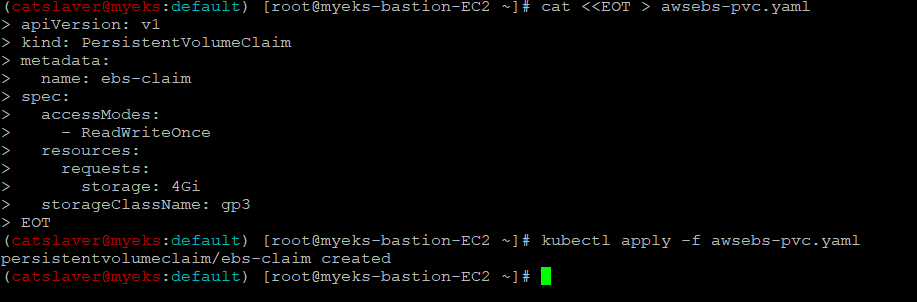

4.7.3 Create a PVC (gp3, 4Gi)

$> cat <<EOT > awsebs-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
  storageClassName: gp3
EOT

$> kubectl apply -f awsebs-pvc.yaml

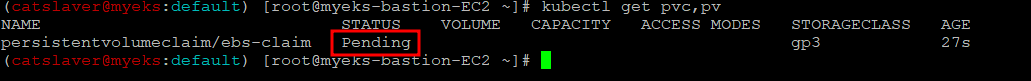

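4.7.4 Verify the PVC

$> kubectl get pvc,pv

The PVC has not been bound yet, so no PV has been created. This is expected with volumeBindingMode: WaitForFirstConsumer, which delays provisioning until a Pod uses the claim.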

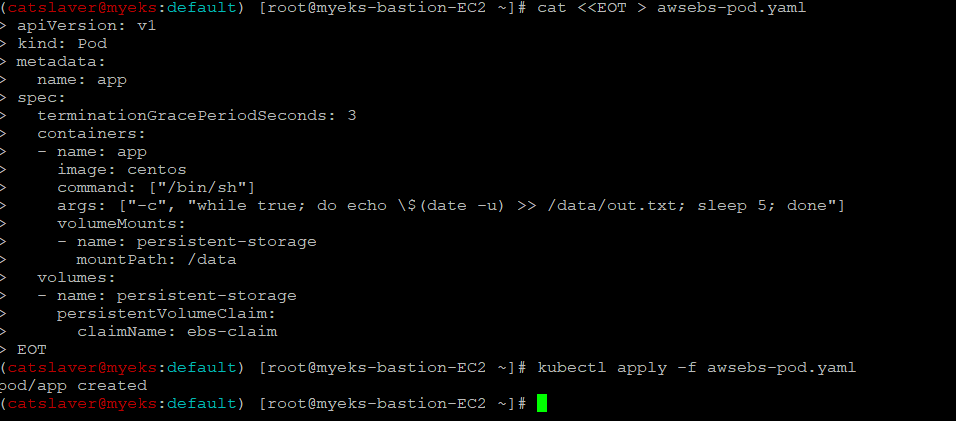
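Rather than re-running `kubectl get pvc` by hand, the phase of the claim can be polled. The sketch below is illustrative only: `pvc_phase` and `wait_for_pvc_phase` are hypothetical helper names, and the attempt count and interval are arbitrary values chosen by the caller.

```shell
# Hypothetical helper: print the current phase of a PVC (for example Pending or Bound).
# $1 = PVC name
pvc_phase() {
  kubectl get pvc "$1" -o jsonpath='{.status.phase}'
}

# Hypothetical helper: poll until the PVC reaches the wanted phase.
# $1 = PVC name, $2 = wanted phase, $3 = max attempts, $4 = seconds between attempts
# Returns 0 when the phase is reached, 1 when the attempts run out.
wait_for_pvc_phase() {
  attempt=0
  while [ "$attempt" -lt "$3" ]; do
    if [ "$(pvc_phase "$1")" = "$2" ]; then
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$4"
  done
  return 1
}
```

For example, once a Pod that mounts the claim has been deployed, `wait_for_pvc_phase ebs-claim Bound 30 2` would wait up to about a minute for the binding to complete.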

4.7.5 Deploy a Pod that accesses the EBS volume created through the PVC

$> cat <<EOT > awsebs-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: app
    image: centos
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo \$(date -u) >> /data/out.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: ebs-claim
EOT

$> kubectl apply -f awsebs-pod.yaml

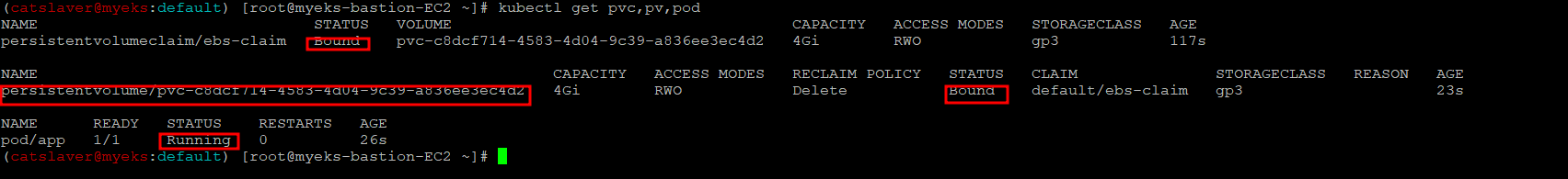

4.7.6 Check the PVC and the Pod

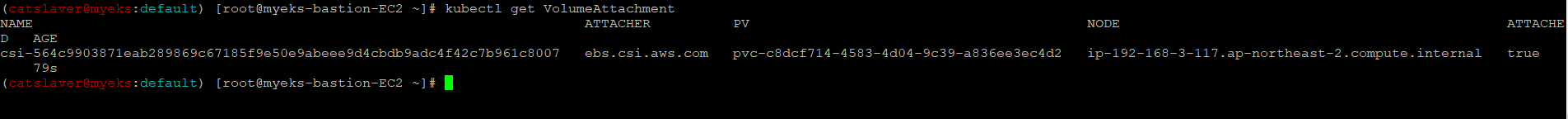

$> kubectl get pvc,pv,pod

$> kubectl get VolumeAttachment

4.7.7 Check the details of the added EBS volume

$> aws ec2 describe-volumes --volume-ids $(kubectl get pv -o jsonpath="{.items[0].spec.csi.volumeHandle}") | jq

$> aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --output table

$> aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[*].{ID:VolumeId,Tag:Tags}" | jq

$> aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

4.7.8 Check the PV details (node affinity)

$> kubectl get pv -o yaml | yh

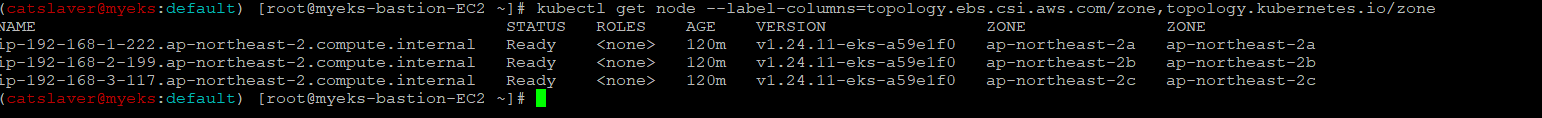

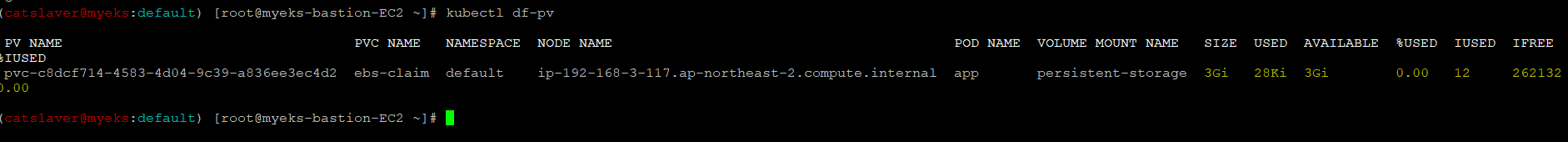

$> kubectl get node --label-columns=topology.ebs.csi.aws.com/zone,topology.kubernetes.io/zone

$> kubectl describe node | more

4.7.9 Check the file contents on the volume created through the PVC

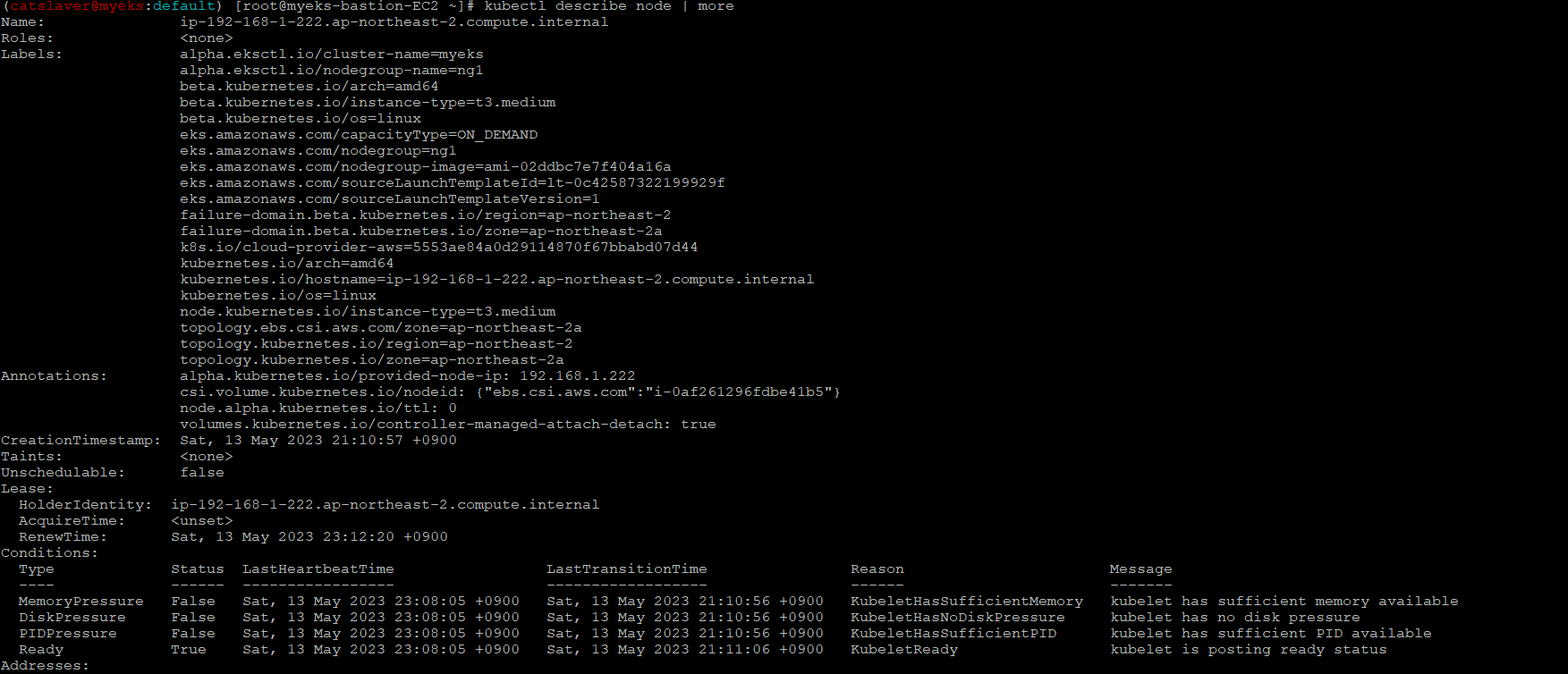

$> kubectl exec app -- tail -f /data/out.txt

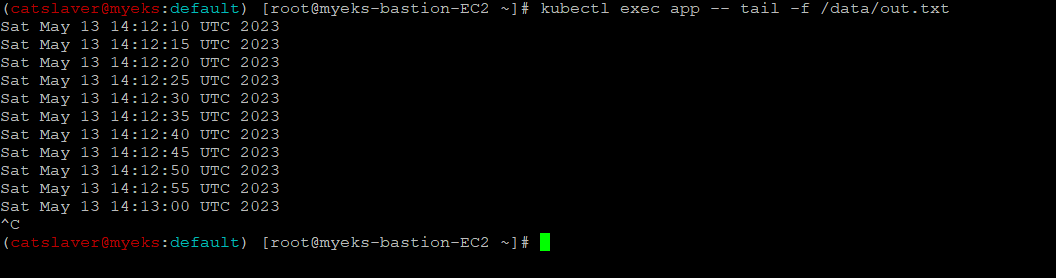

4.7.10 Check file system usage per PV

$> kubectl df-pv

4.7.11 Check volume information inside the Pod

$> kubectl exec -it app -- sh -c 'df -hT --type=overlay'

$> kubectl exec -it app -- sh -c 'df -hT --type=ext4'

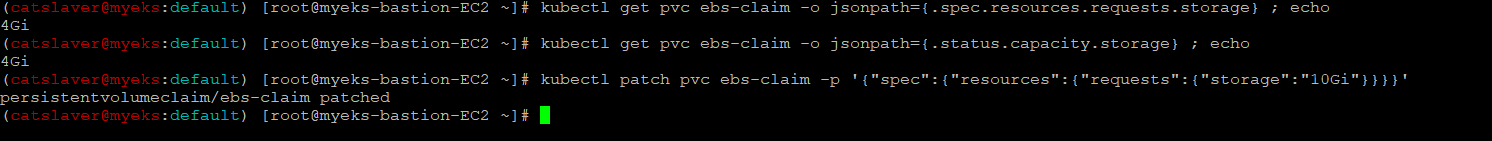

4.7.12 Expand the volume from 4Gi to 10Gi

$> kubectl get pvc ebs-claim -o jsonpath={.spec.resources.requests.storage} ; echo

$> kubectl get pvc ebs-claim -o jsonpath={.status.capacity.storage} ; echo

$> kubectl patch pvc ebs-claim -p '{"spec":{"resources":{"requests":{"storage":"10Gi"}}}}'

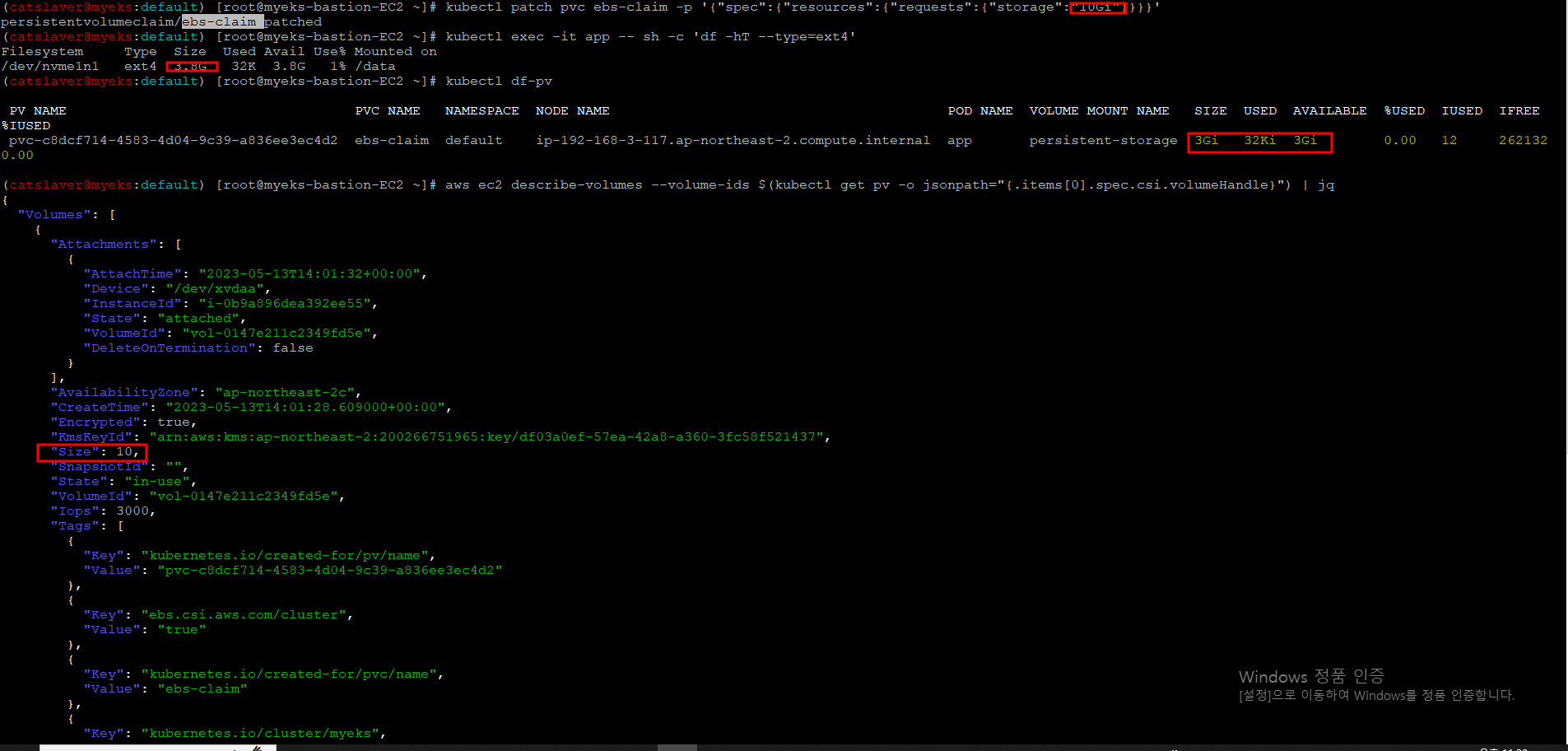
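The resize requested by the patch above does not complete instantly, so the requested size and the reported capacity can differ for a while. The sketch below compares the two jsonpath values already used in this step; `pvc_requested`, `pvc_capacity`, and `pvc_resize_done` are hypothetical helper names introduced only for illustration.

```shell
# Hypothetical helper: size requested in the PVC spec.
# $1 = PVC name
pvc_requested() {
  kubectl get pvc "$1" -o jsonpath='{.spec.resources.requests.storage}'
}

# Hypothetical helper: size currently reported in the PVC status.
# $1 = PVC name
pvc_capacity() {
  kubectl get pvc "$1" -o jsonpath='{.status.capacity.storage}'
}

# Hypothetical helper: succeeds once the reported capacity matches the request.
# $1 = PVC name
pvc_resize_done() {
  [ "$(pvc_requested "$1")" = "$(pvc_capacity "$1")" ]
}

# Usage (run against the cluster):
#   until pvc_resize_done ebs-claim; do sleep 5; done
```

Comparing spec against status avoids hard-coding the target size, so the same check works for any later expansion of the claim.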

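4.7.13 Verify the increased capacity

$> kubectl exec -it app -- sh -c 'df -hT --type=ext4'

$> kubectl df-pv

$> aws ec2 describe-volumes --volume-ids $(kubectl get pv -o jsonpath="{.items[0].spec.csi.volumeHandle}") | jq

The change does not appear on the PV side right away, while describe-volumes already shows 10 GiB. A likely explanation is that the EBS volume is modified first and the file system on the node is grown afterward, so the Kubernetes-side figures lag behind for a while.

4.7.14 Delete the Pod and the PVC

$> kubectl delete pod app && kubectl delete pvc ebs-claim

5. Volume Snapshots Controller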

Snapshots, the backup images that protect against storage failures, can also be provided through a controller.

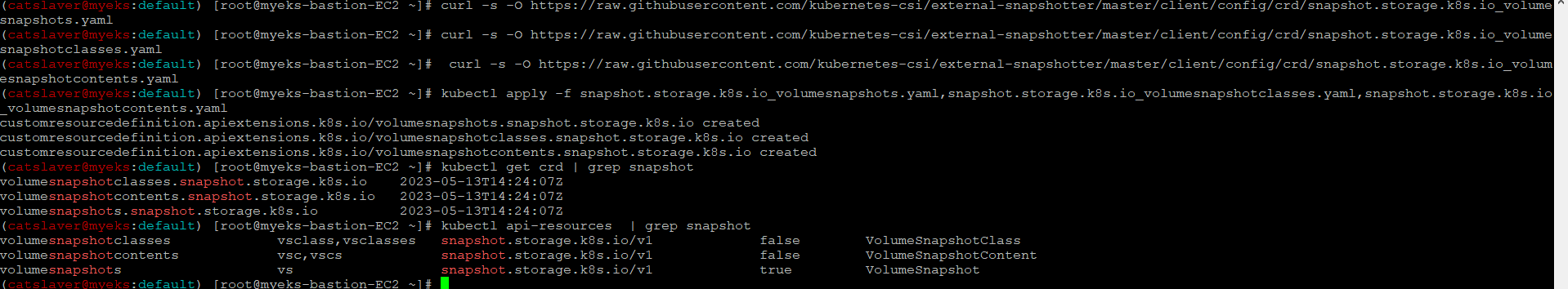

5.1 Install the Volume Snapshots controller

5.1.1 Install Snapshot CRDs

$> curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml

$> curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml

$> curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml

$> kubectl apply -f snapshot.storage.k8s.io_volumesnapshots.yaml,snapshot.storage.k8s.io_volumesnapshotclasses.yaml,snapshot.storage.k8s.io_volumesnapshotcontents.yaml

$> kubectl get crd | grep snapshot

$> kubectl api-resources | grep snapshot

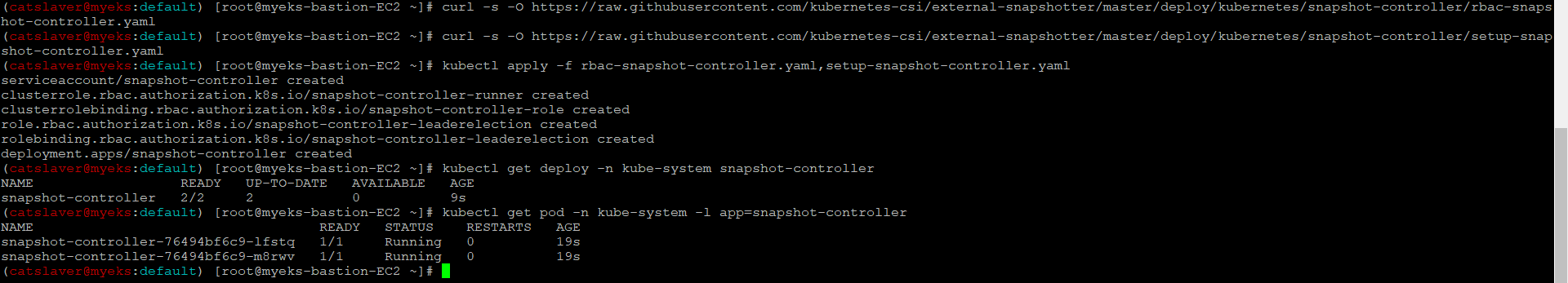

5.1.2 Install Common Snapshot Controller

$> curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml

$> curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml

$> kubectl apply -f rbac-snapshot-controller.yaml,setup-snapshot-controller.yaml

# Verify the deployment

$> kubectl get deploy -n kube-system snapshot-controller

$> kubectl get pod -n kube-system -l app=snapshot-controller

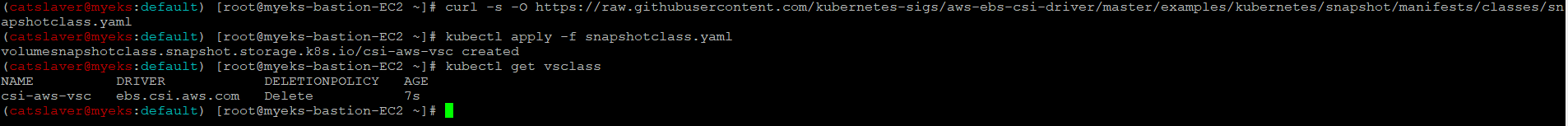

5.1.3 Install Snapshotclass

$> curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-ebs-csi-driver/master/examples/kubernetes/snapshot/manifests/classes/snapshotclass.yaml

$> kubectl apply -f snapshotclass.yaml

# Verify the installation

$> kubectl get vsclass # or volumesnapshotclasses

5.2 Create a test PVC/Pod

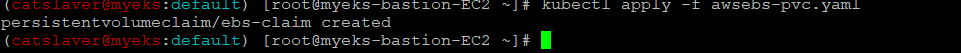

5.2.1 Create the PVC

$> kubectl apply -f awsebs-pvc.yaml

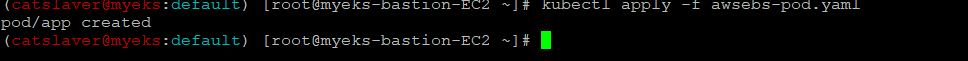

5.2.2 Create the Pod

$> kubectl apply -f awsebs-pod.yaml

5.2.3 Check that the file is being written on the volume

$> kubectl exec app -- tail -f /data/out.txt

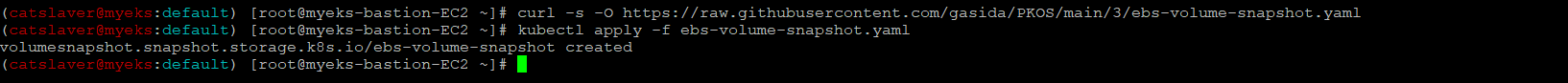

5.2.4 Create a VolumeSnapshot

$> curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ebs-volume-snapshot.yaml

$> kubectl apply -f ebs-volume-snapshot.yaml

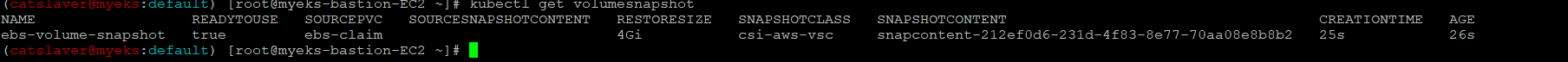
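The downloaded ebs-volume-snapshot.yaml is not reproduced in this post. As a rough sketch of what a VolumeSnapshot manifest for this exercise could look like, the block below writes one to a separate, hypothetical file name (`ebs-volume-snapshot-sketch.yaml`). The snapshot name and the source PVC come from this section; the class name `csi-aws-vsc` is an assumption about what snapshotclass.yaml defines and should be checked with `kubectl get vsclass`.

```shell
# Write an illustrative VolumeSnapshot manifest; this is not the downloaded file.
cat <<EOT > ebs-volume-snapshot-sketch.yaml
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: ebs-volume-snapshot               # name referenced by dataSource in 5.3.1
spec:
  volumeSnapshotClassName: csi-aws-vsc    # assumption: verify with kubectl get vsclass
  source:
    persistentVolumeClaimName: ebs-claim  # PVC created in 5.2.1
EOT
```

The `source.persistentVolumeClaimName` field is what ties the snapshot to the running volume, which is why the PVC and Pod are created before this step.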

5.2.5 Check the VolumeSnapshot

$> kubectl get volumesnapshot

$> kubectl get volumesnapshot ebs-volume-snapshot -o jsonpath={.status.boundVolumeSnapshotContentName} ; echo

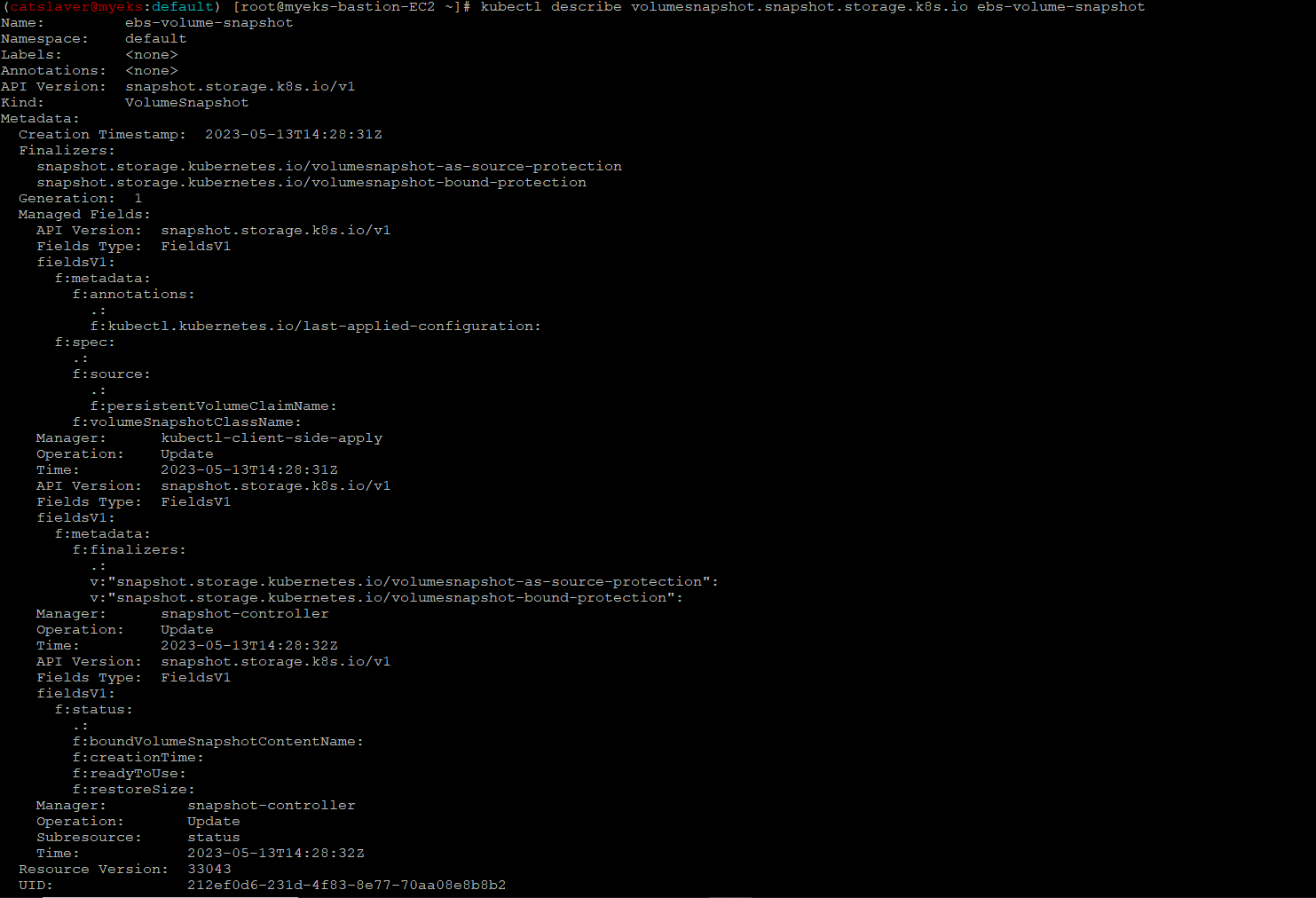

$> kubectl describe volumesnapshot.snapshot.storage.k8s.io ebs-volume-snapshot

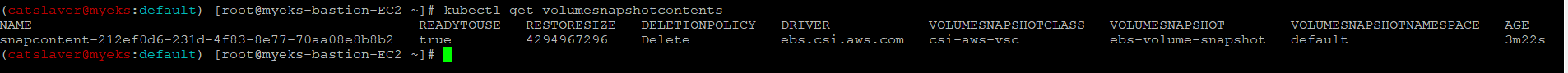

$> kubectl get volumesnapshotcontents

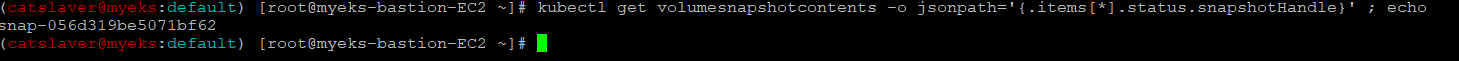

# Check the volume snapshot ID

$> kubectl get volumesnapshotcontents -o jsonpath='{.items[*].status.snapshotHandle}' ; echo

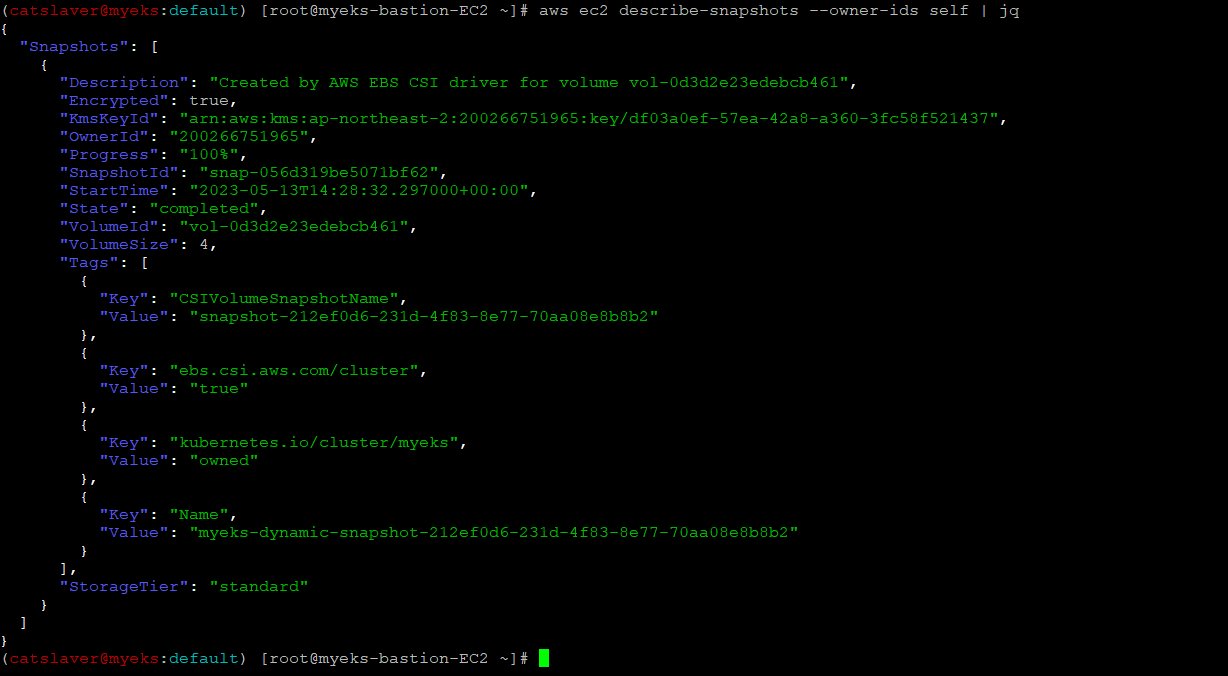

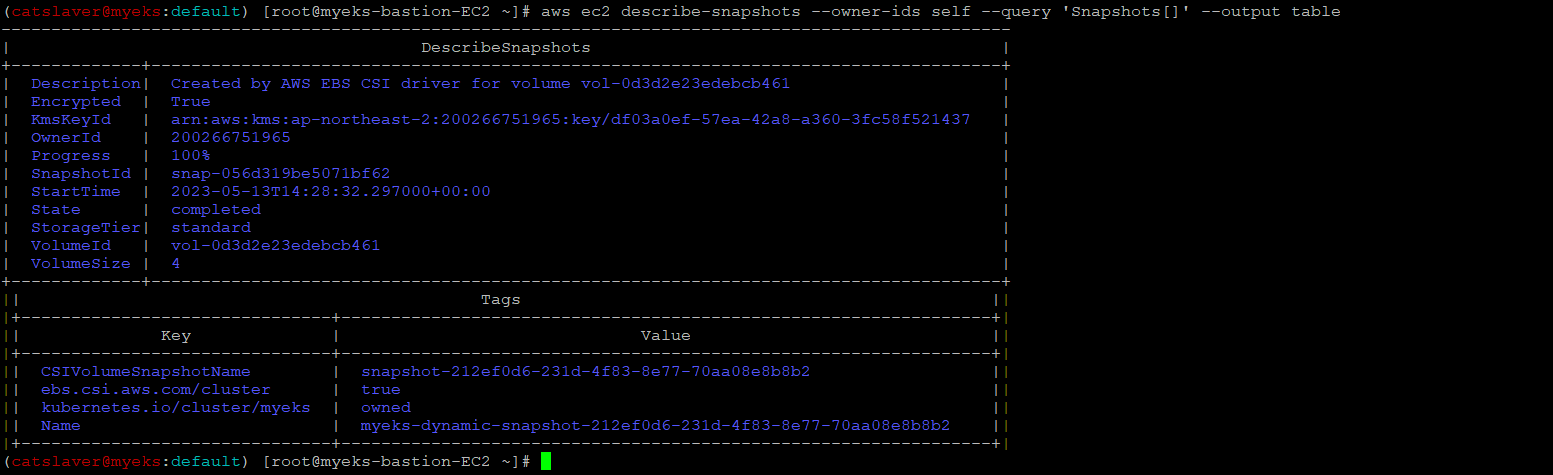

5.2.6 Check the AWS EBS snapshot

$> aws ec2 describe-snapshots --owner-ids self | jq

$> aws ec2 describe-snapshots --owner-ids self --query 'Snapshots[]' --output table
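Before the source Pod and PVC are removed for the restore test, it is worth confirming that the snapshot has finished. A minimal sketch follows, assuming the VolumeSnapshot reports completion through `.status.readyToUse`; `snapshot_ready` and `run_if_snapshot_ready` are hypothetical helper names.

```shell
# Hypothetical helper: print the readyToUse flag of a VolumeSnapshot ("true" when usable).
# $1 = VolumeSnapshot name
snapshot_ready() {
  kubectl get volumesnapshot "$1" -o jsonpath='{.status.readyToUse}'
}

# Hypothetical helper: run a command only when the snapshot is ready.
# $1 = VolumeSnapshot name, remaining arguments = command to run
run_if_snapshot_ready() {
  name=$1
  shift
  if [ "$(snapshot_ready "$name")" = "true" ]; then
    "$@"
  else
    echo "snapshot $name is not ready yet" >&2
    return 1
  fi
}

# Usage (run against the cluster):
#   run_if_snapshot_ready ebs-volume-snapshot kubectl delete pvc ebs-claim
```

Guarding the delete this way keeps the only copy of the data from being removed while the snapshot is still in progress.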

5.2.7 복원 시험을 위한 강제 Pod & PVC 종료

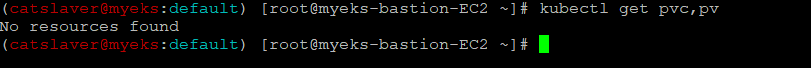

$> kubectl delete pod app && kubectl delete pvc ebs-claim5.3 Snapshot으로 복원

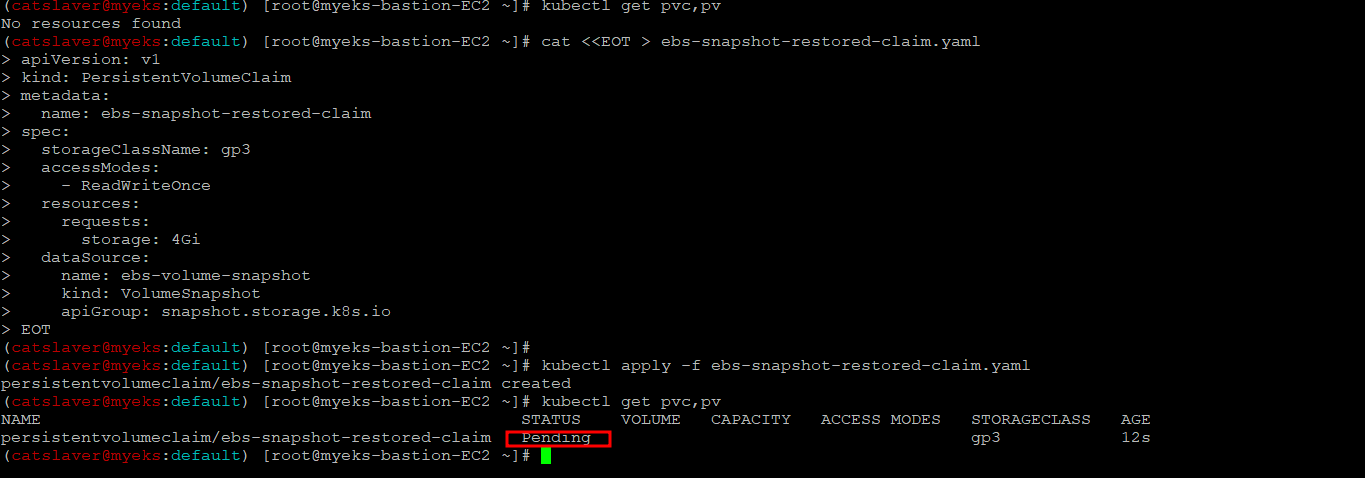

5.3.1 Create a PVC from the snapshot

$> kubectl get pvc,pv

$> cat <<EOT > ebs-snapshot-restored-claim.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: ebs-snapshot-restored-claim

spec:

storageClassName: gp3

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 4Gi

dataSource:

name: ebs-volume-snapshot

kind: VolumeSnapshot

apiGroup: snapshot.storage.k8s.io

EOT

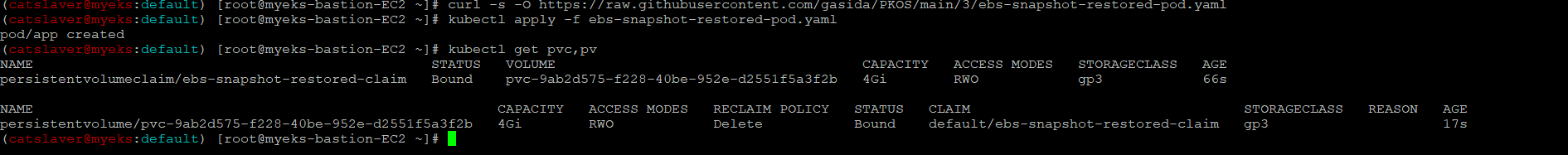

$> kubectl apply -f ebs-snapshot-restored-claim.yaml5.3.2 PVC, PV 확인

$> kubectl get pvc,pv

5.3.3 시험용 파드 생성_ /data/out.txt 파일에 5초 단위로 날짜 정보 추가

$> curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ebs-snapshot-restored-pod.yaml

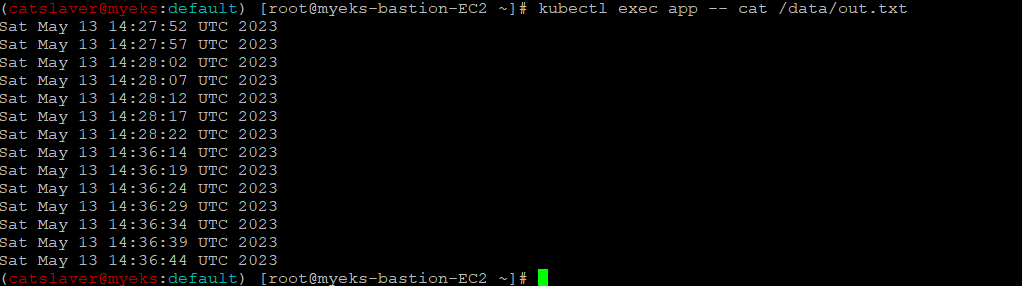

$> kubectl apply -f ebs-snapshot-restored-pod.yaml5.3.4 복원한 내용과 현재 생성되는 데이터를 확인

$> kubectl get pvc,pv

$> kubectl exec app -- cat /data/out.txt

5.3.5 Delete the Pod, PVC, and VolumeSnapshot

$> kubectl delete pod app && kubectl delete pvc ebs-snapshot-restored-claim && kubectl delete volumesnapshots ebs-volume-snapshot

6. AWS EFS Controller

6.1 Install the EFS Controller

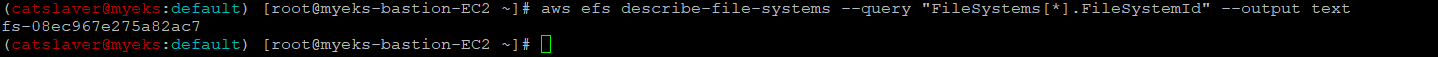

6.1.1 Check the current EFS file system ID

$> aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text

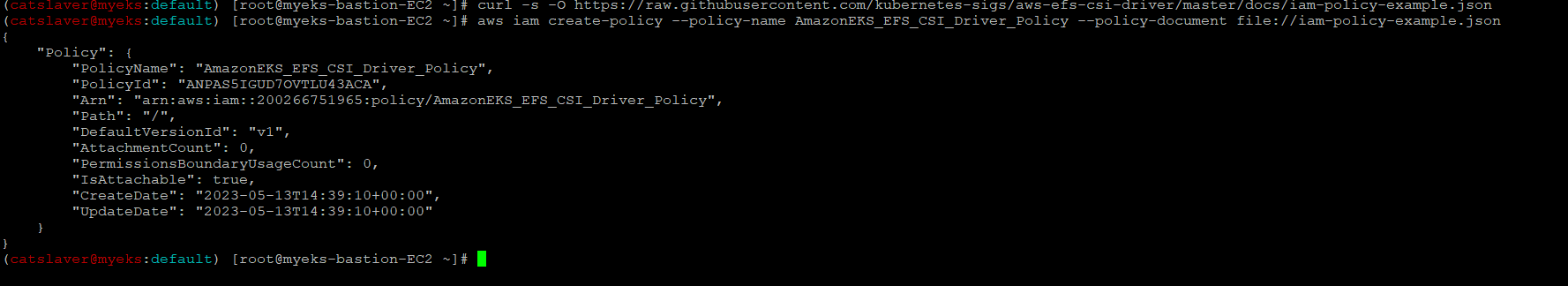

6.1.2 Create the IAM policy (created manually here because, unlike EBS, no AWS managed policy for EFS was available at the time)

$> curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/docs/iam-policy-example.json

$> aws iam create-policy --policy-name AmazonEKS_EFS_CSI_Driver_Policy --policy-document file://iam-policy-example.json

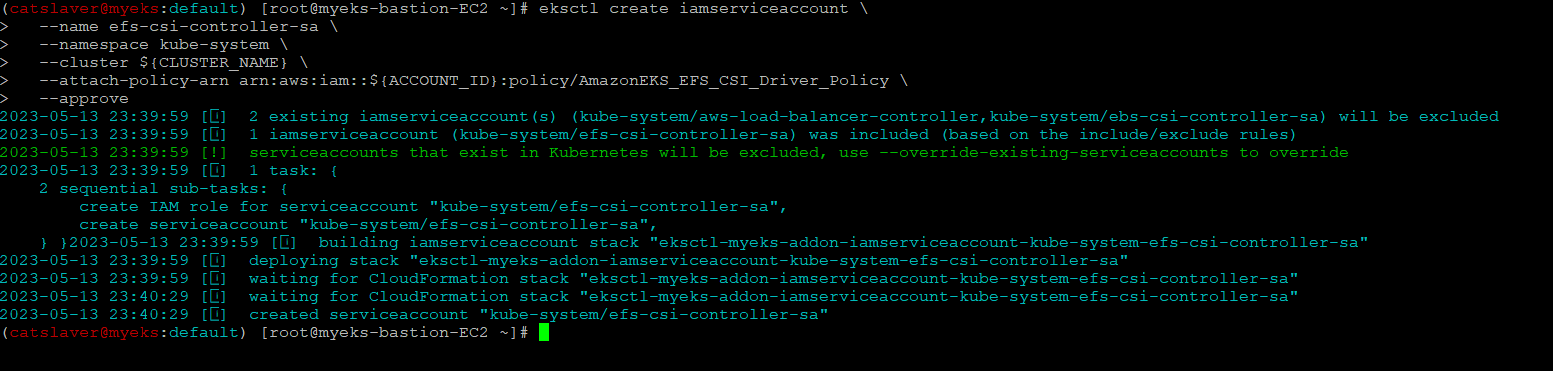

6.1.3 Configure IRSA (same as for EBS)

$> eksctl create iamserviceaccount \
  --name efs-csi-controller-sa \
  --namespace kube-system \
  --cluster ${CLUSTER_NAME} \
  --attach-policy-arn arn:aws:iam::${ACCOUNT_ID}:policy/AmazonEKS_EFS_CSI_Driver_Policy \
  --approve

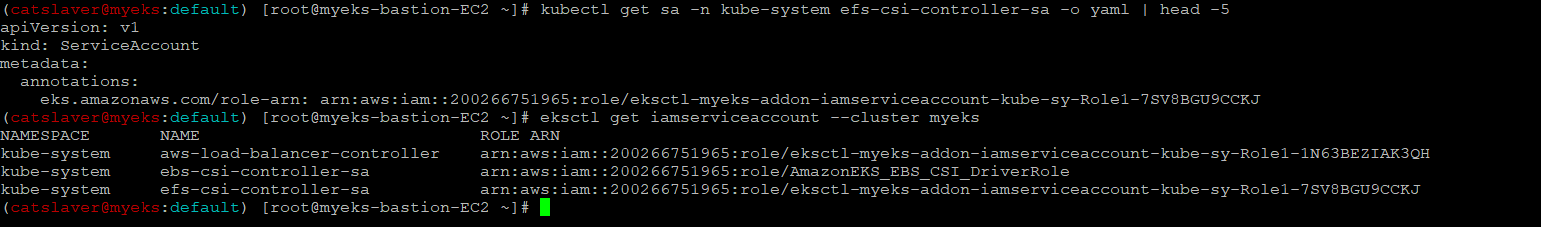

6.1.4 Check IRSA

$> kubectl get sa -n kube-system efs-csi-controller-sa -o yaml | head -5

$> eksctl get iamserviceaccount --cluster myeks

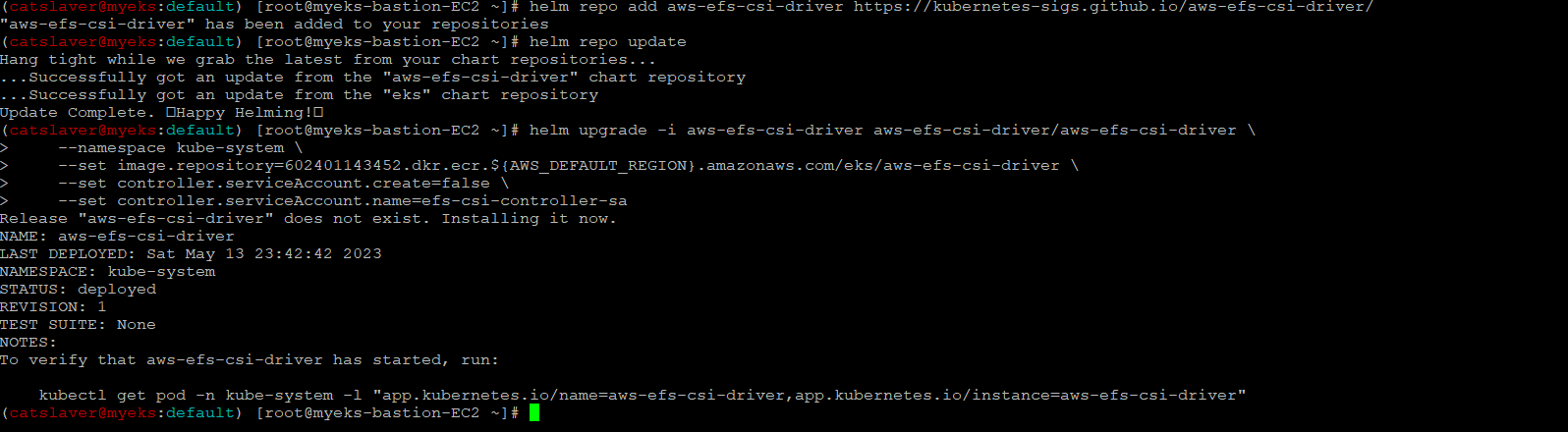

6.1.5 Install the EFS Controller

$> helm repo add aws-efs-csi-driver https://kubernetes-sigs.github.io/aws-efs-csi-driver/

$> helm repo update

$> helm upgrade -i aws-efs-csi-driver aws-efs-csi-driver/aws-efs-csi-driver \
  --namespace kube-system \
  --set image.repository=602401143452.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/eks/aws-efs-csi-driver \
  --set controller.serviceAccount.create=false \
  --set controller.serviceAccount.name=efs-csi-controller-sa

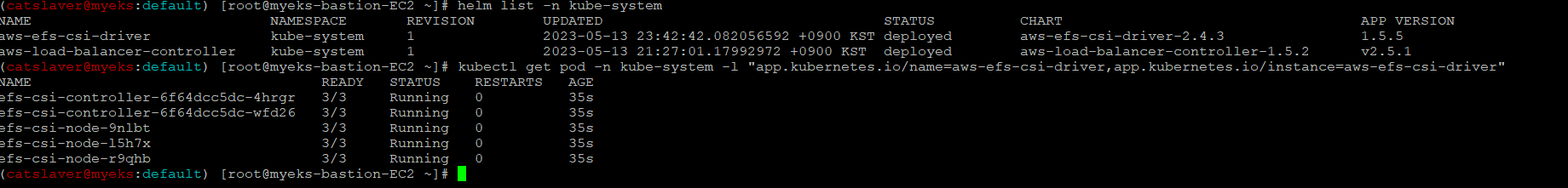

6.1.6 Verify the EFS Controller installation

$> helm list -n kube-system

$> kubectl get pod -n kube-system -l "app.kubernetes.io/name=aws-efs-csi-driver,app.kubernetes.io/instance=aws-efs-csi-driver"

6.2 Sharing an EFS file system among multiple Pods: static provisioning (empty StorageClass from the static example)

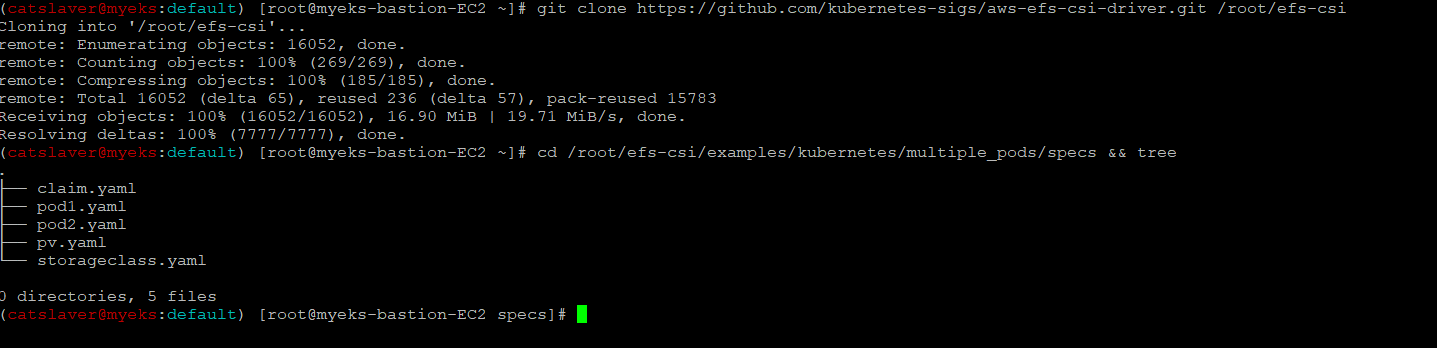

6.2.1 Clone the example code

$> git clone https://github.com/kubernetes-sigs/aws-efs-csi-driver.git /root/efs-csi

$> cd /root/efs-csi/examples/kubernetes/multiple_pods/specs && tree

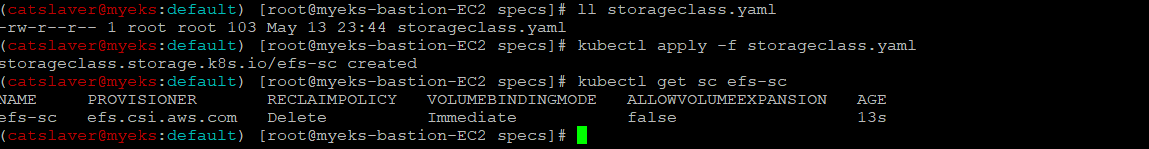

6.2.2 Create and check the EFS StorageClass

$> kubectl apply -f storageclass.yaml

$> kubectl get sc efs-sc

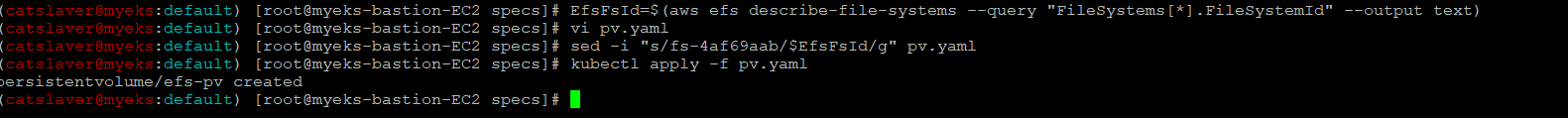

6.2.3 Create the PV

$> EfsFsId=$(aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text)

$> sed -i "s/fs-4af69aab/$EfsFsId/g" pv.yaml

$> kubectl apply -f pv.yaml

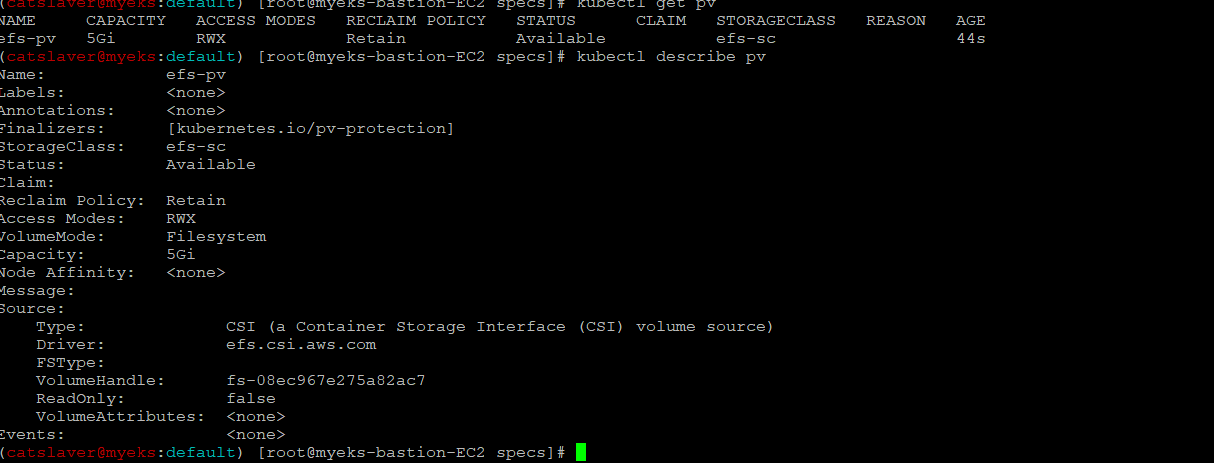
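The `EfsFsId` lookup above uses `FileSystems[*]`, so an account with several EFS file systems would get several IDs back on one line, and the `sed` substitution would then write all of them into pv.yaml. A small guard can catch that case early. This is a sketch only; `is_single_id` is a hypothetical helper name.

```shell
# Hypothetical helper: succeeds only when the argument holds exactly one
# whitespace-separated word (one file system ID).
# $1 = value returned by the describe-file-systems query
is_single_id() {
  # Deliberately unquoted so the shell splits the value into words.
  set -- $1
  [ "$#" -eq 1 ]
}

# Usage:
#   is_single_id "$EfsFsId" || echo "expected exactly one EFS file system ID" >&2
```

When more than one ID is returned, the query can be narrowed by hand to the file system that belongs to this cluster before running `sed`.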

6.2.4 Verify the PV

$> kubectl get pv

$> kubectl describe pv

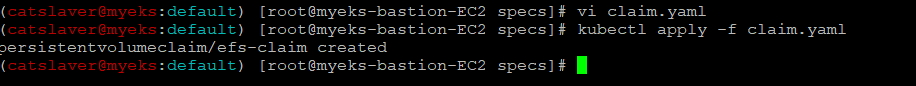

6.2.5 Create the PVC

$> kubectl apply -f claim.yaml

6.2.6 Verify the PVC

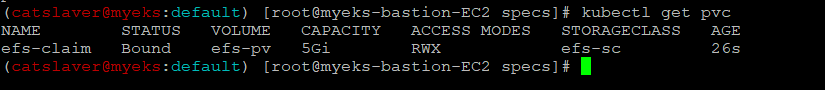

$> kubectl get pvc

6.2.7 Create the Pods: /data inside each Pod is backed by EFS

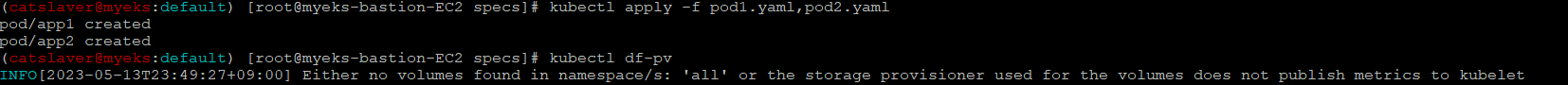

$> kubectl apply -f pod1.yaml,pod2.yaml

$> kubectl df-pv

6.2.8 Check the Pods

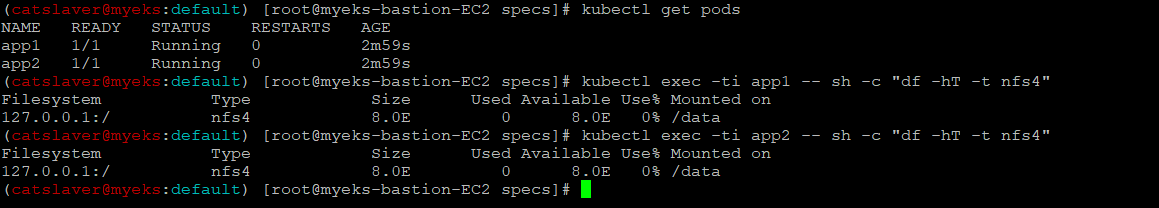

$> kubectl get pods

$> kubectl exec -ti app1 -- sh -c "df -hT -t nfs4"

$> kubectl exec -ti app2 -- sh -c "df -hT -t nfs4"

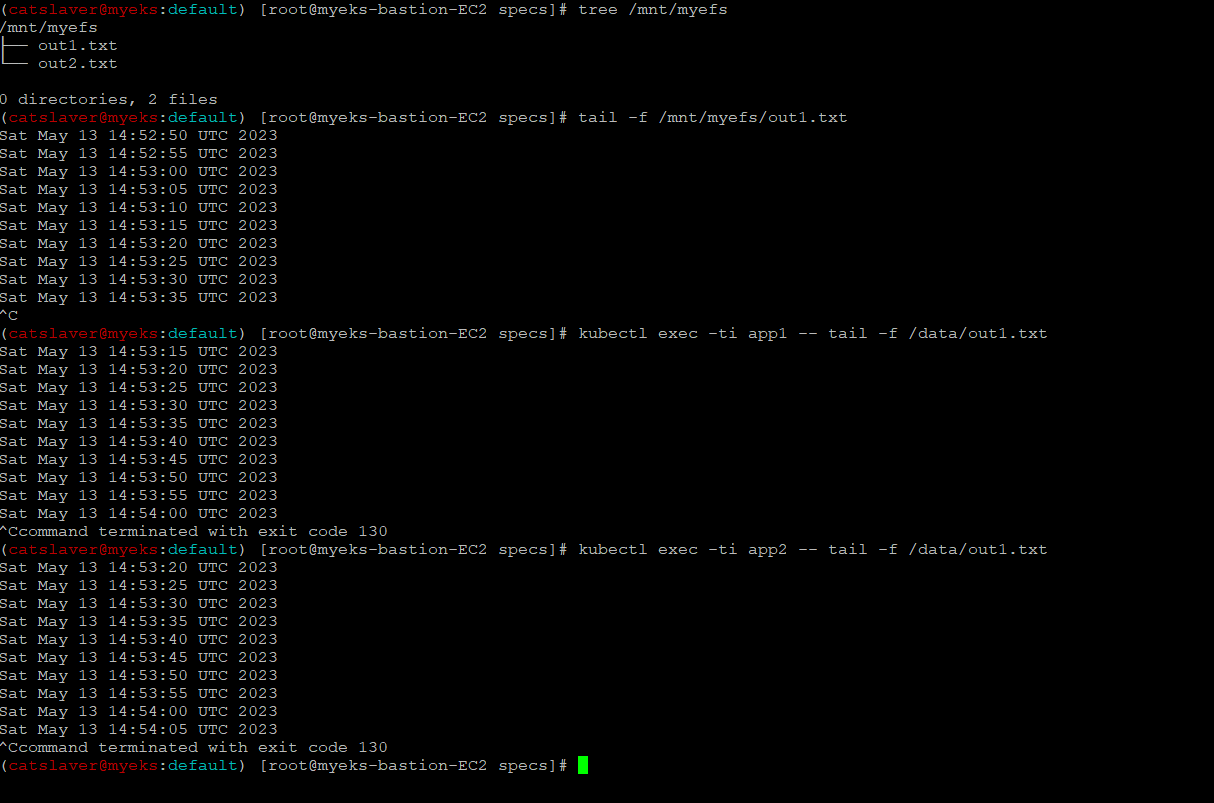

6.2.9 Access EFS from the bastion host and check

$> tree /mnt/myefs # run on the working EC2 instance

$> tail -f /mnt/myefs/out1.txt # run on the working EC2 instance

Connect to each Pod and check:

$> kubectl exec -ti app1 -- tail -f /data/out1.txt

$> kubectl exec -ti app2 -- tail -f /data/out2.txt

6.2.10 Delete the resources: Pods, PVC, PV, SC

$> kubectl delete pod app1 app2

$> kubectl delete pvc efs-claim && kubectl delete pv efs-pv && kubectl delete sc efs-sc

6.3 Sharing an EFS file system among multiple Pods: dynamic provisioning

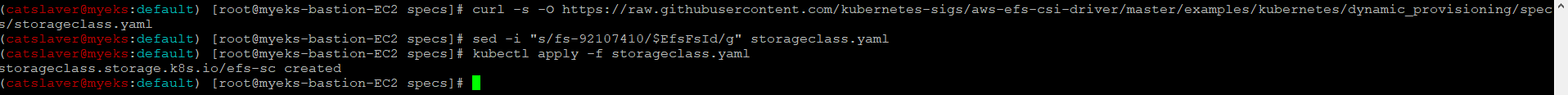

6.3.1 Create the EFS StorageClass

$> curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/examples/kubernetes/dynamic_provisioning/specs/storageclass.yaml

$> sed -i "s/fs-92107410/$EfsFsId/g" storageclass.yaml

$> kubectl apply -f storageclass.yaml

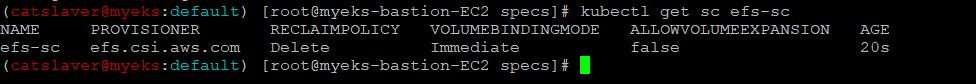

6.3.2 Verify the StorageClass

$> kubectl get sc efs-sc

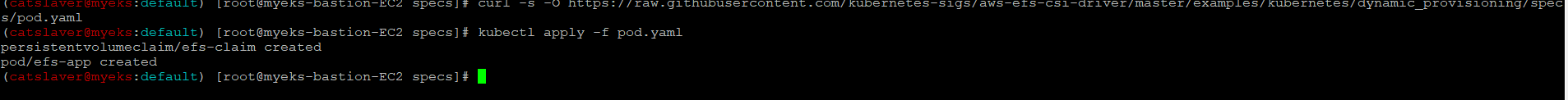

6.3.3 Create the PVC and Pod

$> curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/examples/kubernetes/dynamic_provisioning/specs/pod.yaml

$> kubectl apply -f pod.yaml

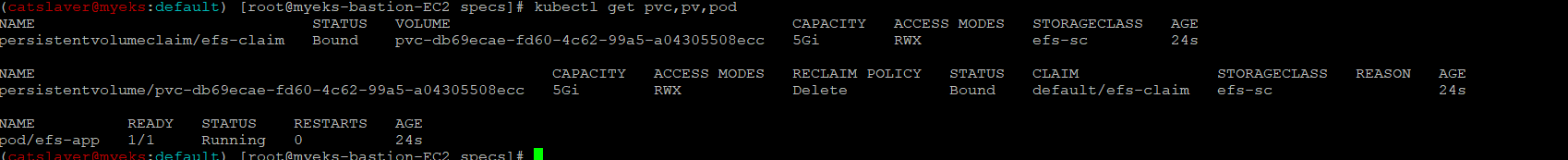

6.3.4 Verify the PV, PVC, and Pod

$> kubectl get pvc,pv,pod

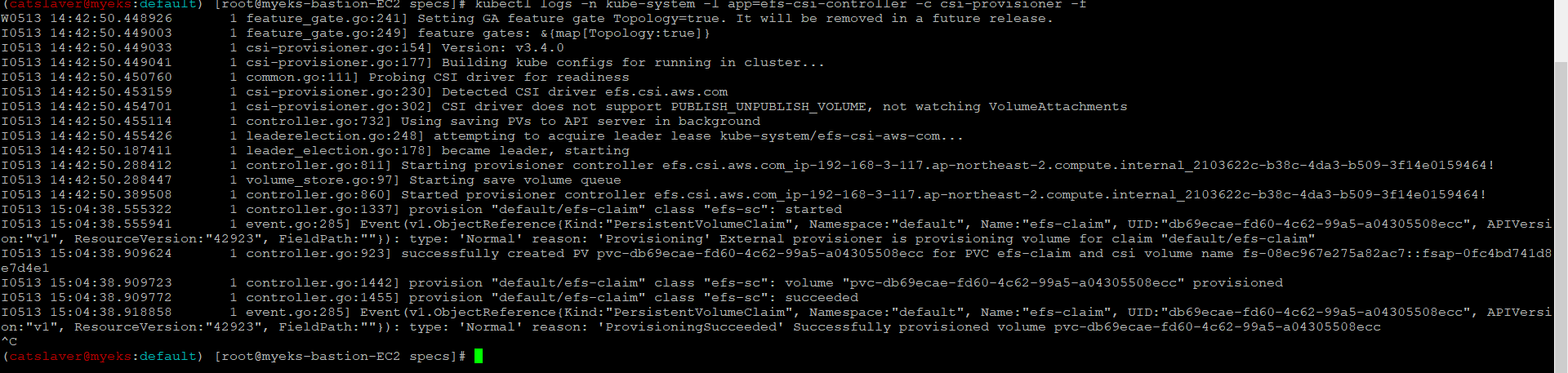

6.3.5 Check the PVC/PV provisioning logs

$> kubectl logs -n kube-system -l app=efs-csi-controller -c csi-provisioner -f

6.3.6 Check the EFS file system

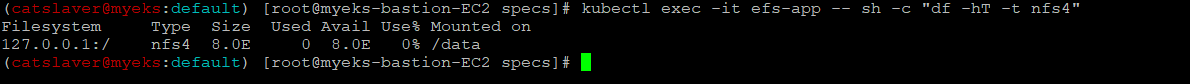

$> kubectl exec -it efs-app -- sh -c "df -hT -t nfs4"

6.3.7 Bastion Host에서 EFS 에 접근하여 확인

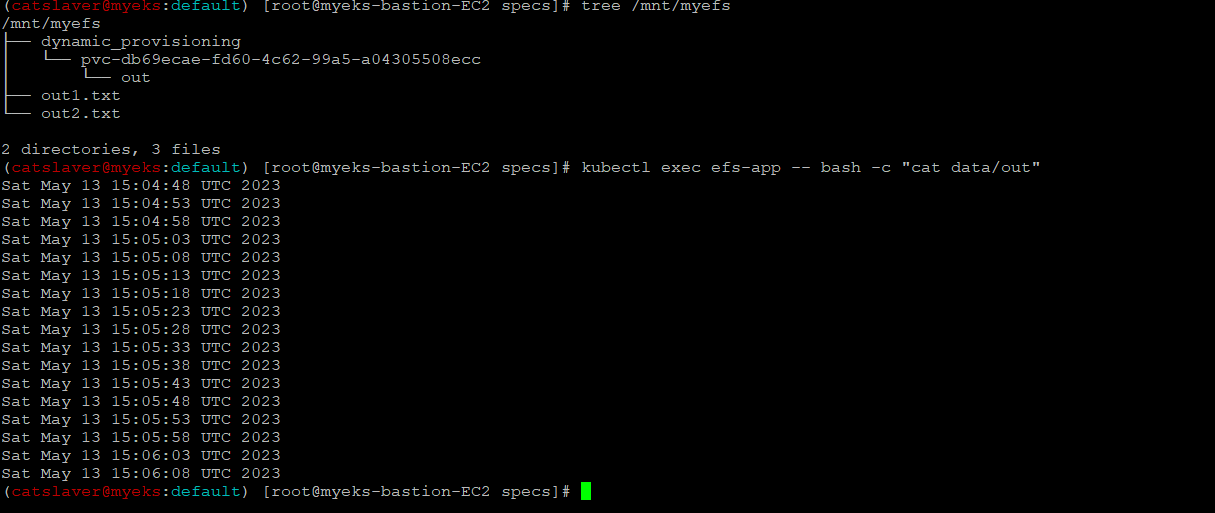

$> tree /mnt/myefs # 작업용EC2에서 확인

$> kubectl exec efs-app -- bash -c "cat data/out" # 작업용EC2에서 확인

6.3.8 Delete the Pod, PVC, and SC

$> kubectl delete -f pod.yaml

$> kubectl delete -f storageclass.yaml

7. Two node groups, one of which uses c5d.large EC2 worker nodes with an NVMe instance store

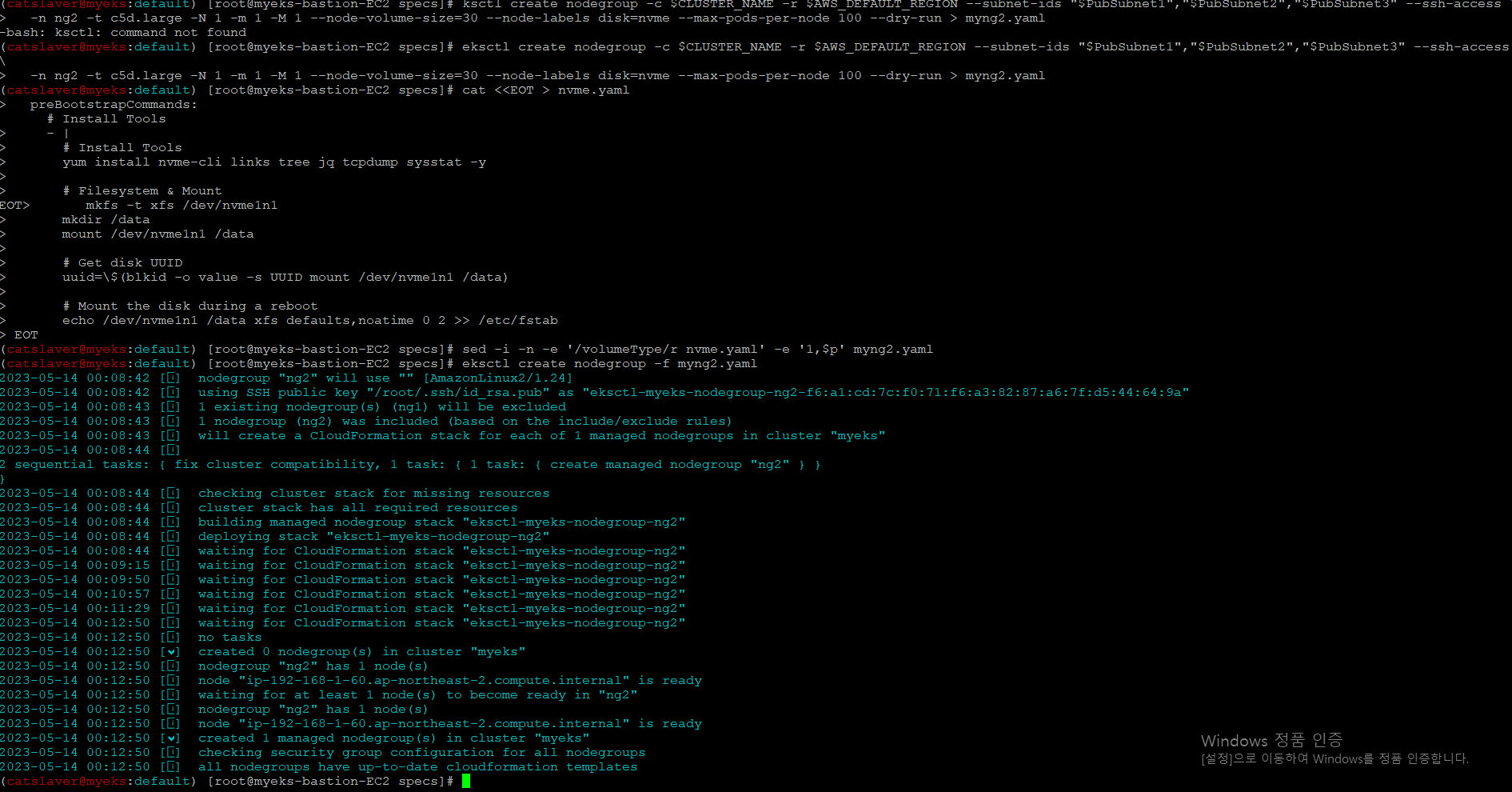

7.1 Create the new node group

$> eksctl create nodegroup -c $CLUSTER_NAME -r $AWS_DEFAULT_REGION --subnet-ids "$PubSubnet1","$PubSubnet2","$PubSubnet3" --ssh-access \
  -n ng2 -t c5d.large -N 1 -m 1 -M 1 --node-volume-size=30 --node-labels disk=nvme --max-pods-per-node 100 --dry-run > myng2.yaml

$> cat <<EOT > nvme.yaml
  preBootstrapCommands:
    - |
      # Install Tools
      yum install nvme-cli links tree jq tcpdump sysstat -y
      # Filesystem & Mount
      mkfs -t xfs /dev/nvme1n1
      mkdir /data
      mount /dev/nvme1n1 /data
      # Get disk UUID
      uuid=\$(blkid -o value -s UUID /dev/nvme1n1)
      # Mount the disk during a reboot
      echo /dev/nvme1n1 /data xfs defaults,noatime 0 2 >> /etc/fstab
EOT

$> sed -i -n -e '/volumeType/r nvme.yaml' -e '1,$p' myng2.yaml

$> eksctl create nodegroup -f myng2.yaml

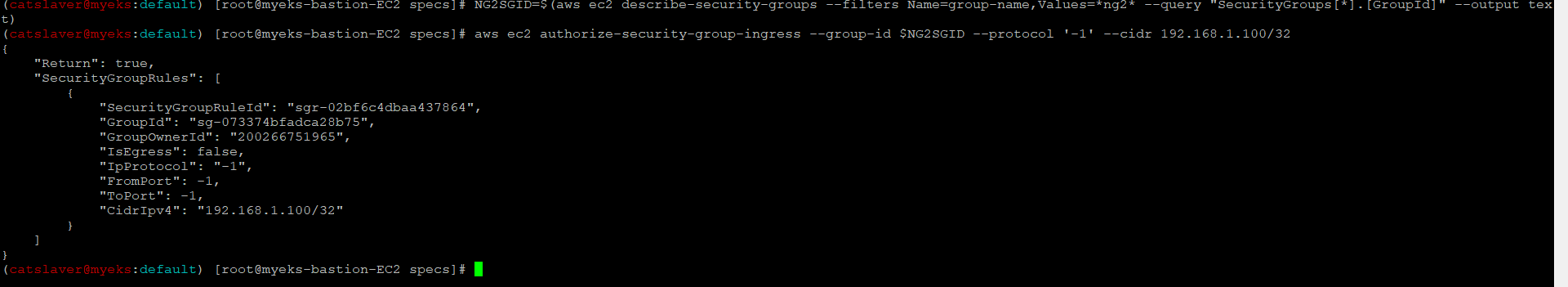
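The bootstrap commands in nvme.yaml read the disk UUID but then write the device name into /etc/fstab. Since NVMe device names are not guaranteed to stay the same across reboots, an entry keyed by UUID may be more robust. The sketch below only builds such a line; `fstab_entry_by_uuid` is a hypothetical helper name, and the mount options are copied from the entry used above.

```shell
# Hypothetical helper: build an /etc/fstab entry that refers to the file
# system by UUID instead of by device name.
# $1 = UUID, $2 = mount point, $3 = file system type
fstab_entry_by_uuid() {
  printf 'UUID=%s %s %s defaults,noatime 0 2\n' "$1" "$2" "$3"
}

# Usage (on the node, as root):
#   uuid=$(blkid -o value -s UUID /dev/nvme1n1)
#   fstab_entry_by_uuid "$uuid" /data xfs >> /etc/fstab
```

Keeping the line construction in a function makes it easy to check the resulting entry before anything is appended to /etc/fstab.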

7.2 Check the node security group ID

$> NG2SGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=*ng2* --query "SecurityGroups[*].[GroupId]" --output text)

$> aws ec2 authorize-security-group-ingress --group-id $NG2SGID --protocol '-1' --cidr 192.168.1.100/32

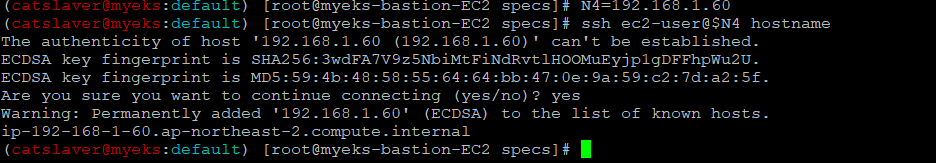

7.3 Connect to the worker node over SSH

$> N4=192.168.1.60

$> ssh ec2-user@$N4 hostname

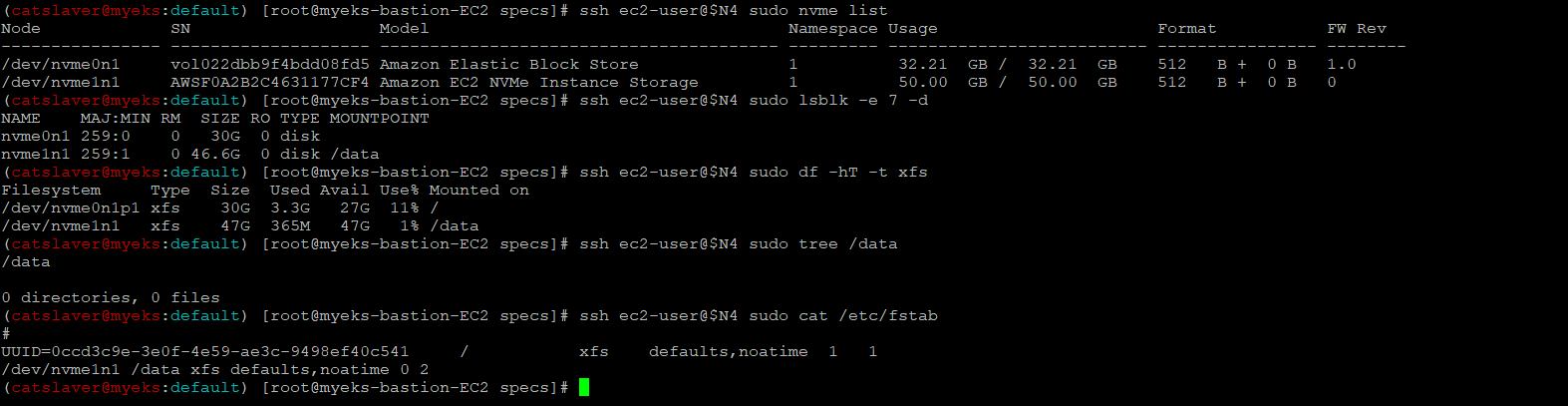

7.4 Check the instance store

$> ssh ec2-user@$N4 sudo nvme list

$> ssh ec2-user@$N4 sudo lsblk -e 7 -d

$> ssh ec2-user@$N4 sudo df -hT -t xfs

$> ssh ec2-user@$N4 sudo tree /data

$> ssh ec2-user@$N4 sudo cat /etc/fstab

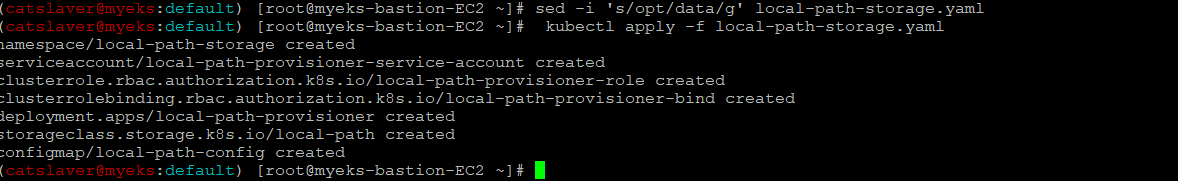

7.5 Change the StorageClass

7.5.1 Delete the existing StorageClass

$> kubectl delete -f local-path-storage.yaml

7.5.2 Change the path from /opt to /data (worth re-checking in detail)

$> sed -i 's/opt/data/g' local-path-storage.yaml

$> kubectl apply -f local-path-storage.yaml

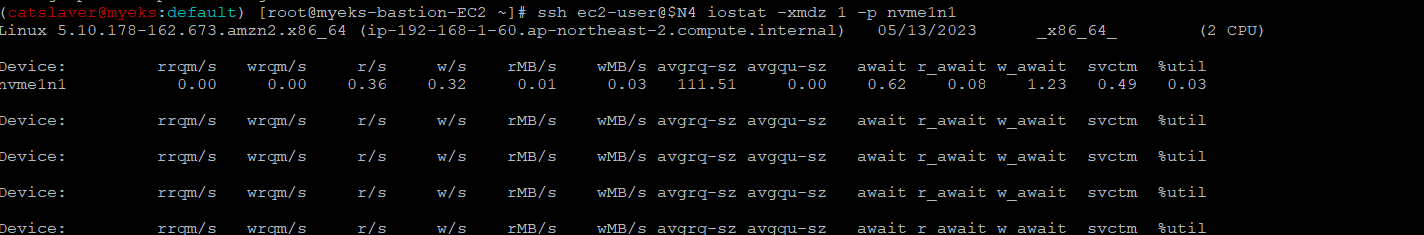

7.5.3 Monitoring

$> watch 'kubectl get pod -owide;echo;kubectl get pv,pvc'

$> ssh ec2-user@$N4 iostat -xmdz 1 -p nvme1n1

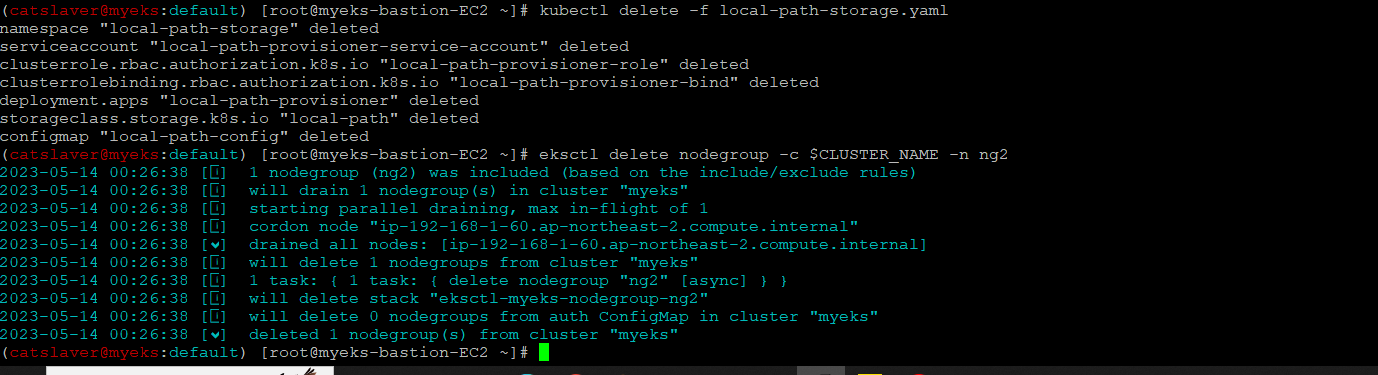

7.5.4 Delete the resources

$> kubectl delete -f local-path-storage.yaml

$> eksctl delete nodegroup -c $CLUSTER_NAME -n ng2

8. Delete all lab resources

8.1 Delete IRSA

$> helm uninstall -n kube-system kube-ops-view

$> aws cloudformation delete-stack --stack-name eksctl-$CLUSTER_NAME-addon-iamserviceaccount-kube-system-efs-csi-controller-sa

$> aws cloudformation delete-stack --stack-name eksctl-$CLUSTER_NAME-addon-iamserviceaccount-kube-system-ebs-csi-controller-sa

$> aws cloudformation delete-stack --stack-name eksctl-$CLUSTER_NAME-addon-iamserviceaccount-kube-system-aws-load-balancer-controller

8.2 Delete the cluster and the remaining AWS resources

$> eksctl delete cluster --name $CLUSTER_NAME && aws cloudformation delete-stack --stack-name $CLUSTER_NAME