1. EKS Console

1.1 Workload

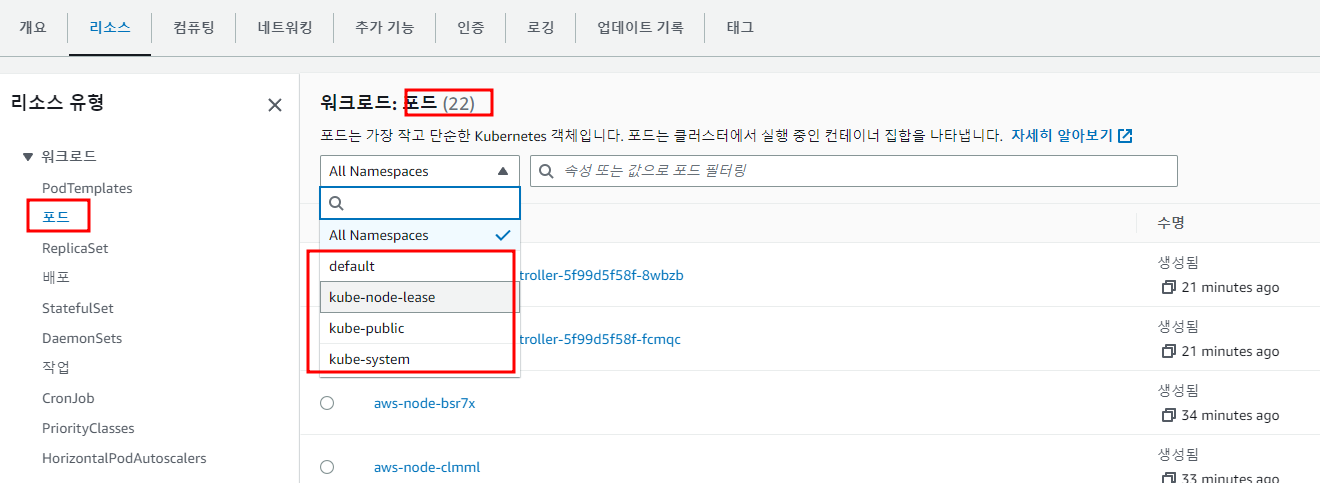

1.1.1 pod

Namespace별, Pod Name별로 검색이 가능,전체 Pod 수도 표시

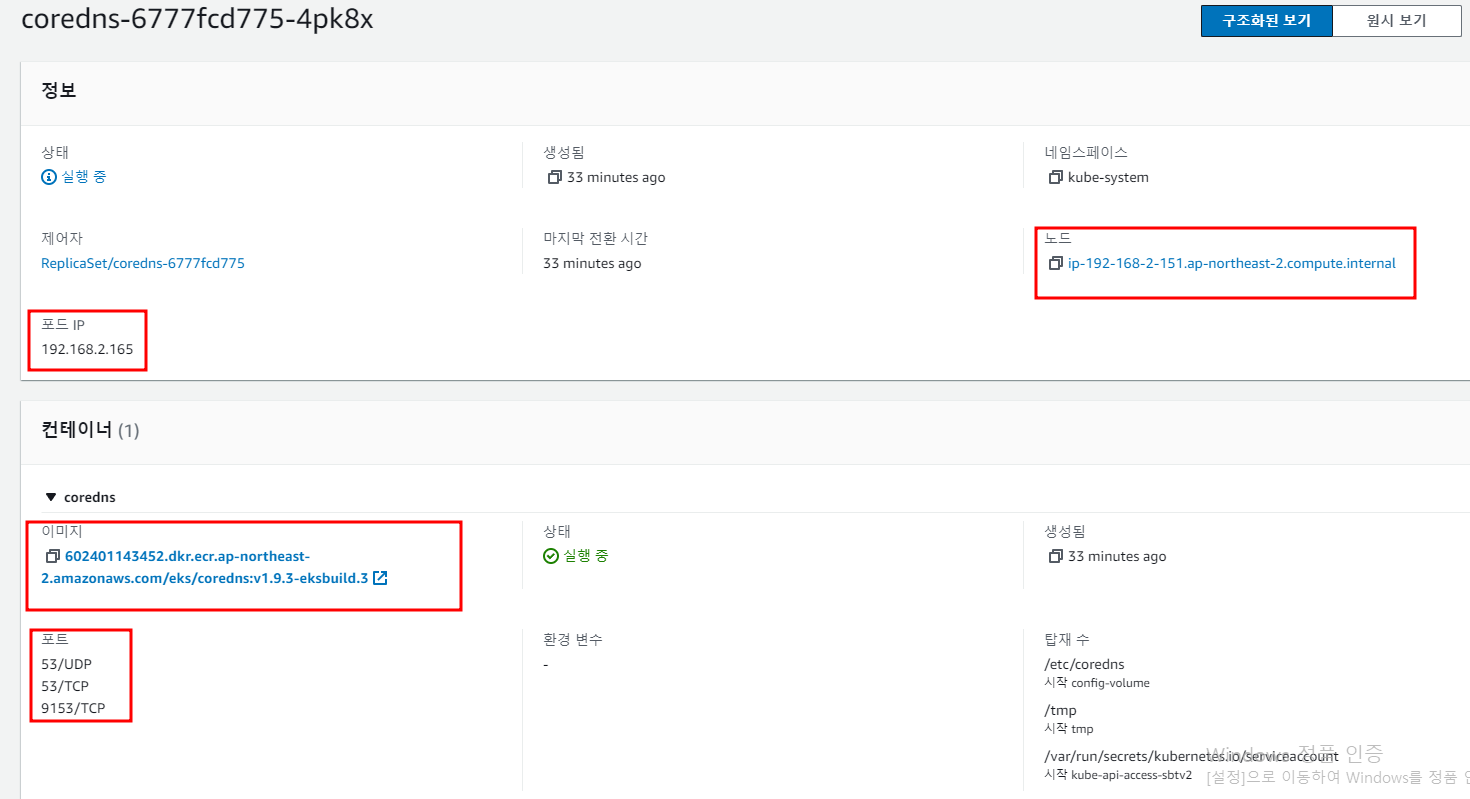

특정 Pod를 Click하면 해당 Pod의 기본적인 정보를 표시

대표적으로 Image, Container, Node IP, Pod IP

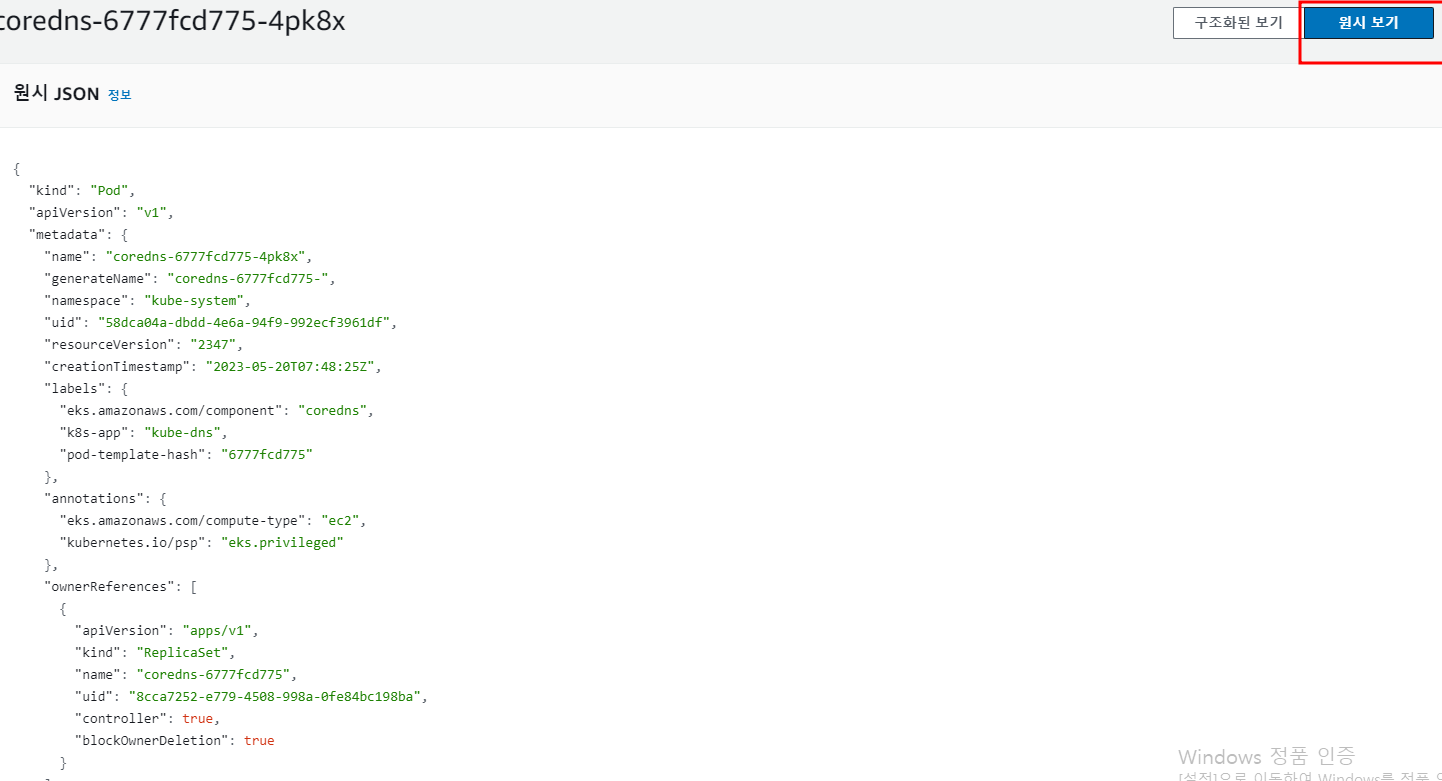

원시보기를 선택하면 JSON Format으로 구성 정보를 표시

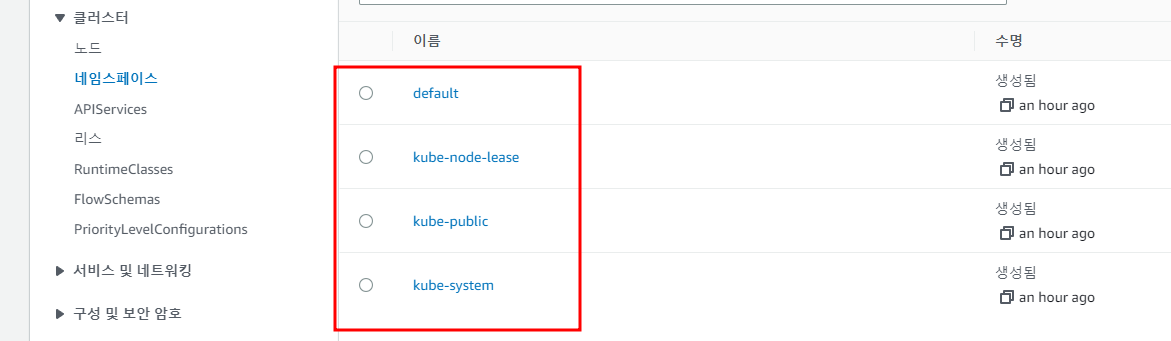

1.2 Cluster

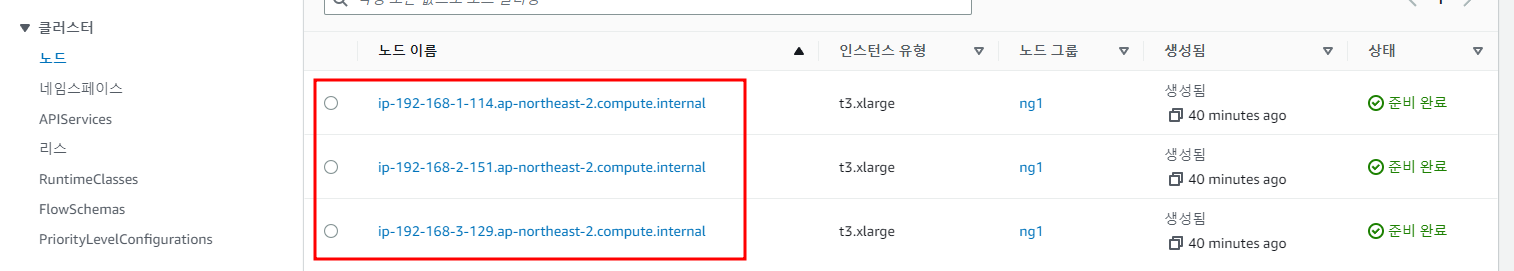

Cluster 주요 구성 요소를 Node별, Namespace별, API Service별로 분류하여 표시

Namespace를 표시

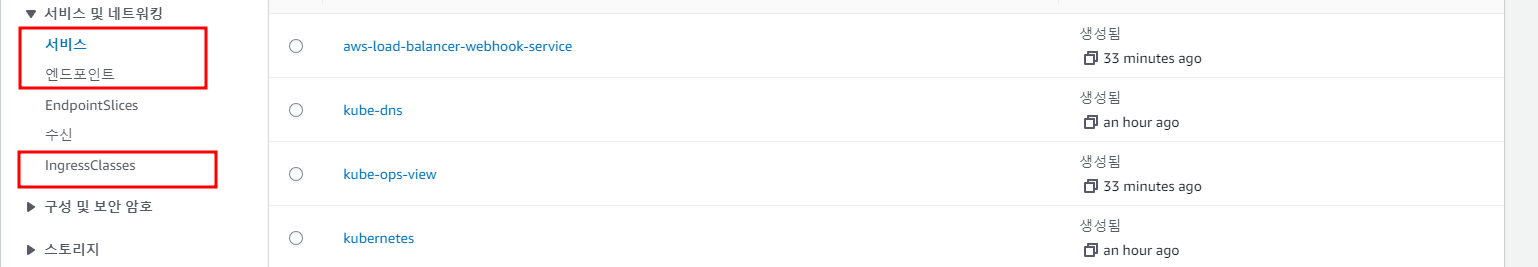

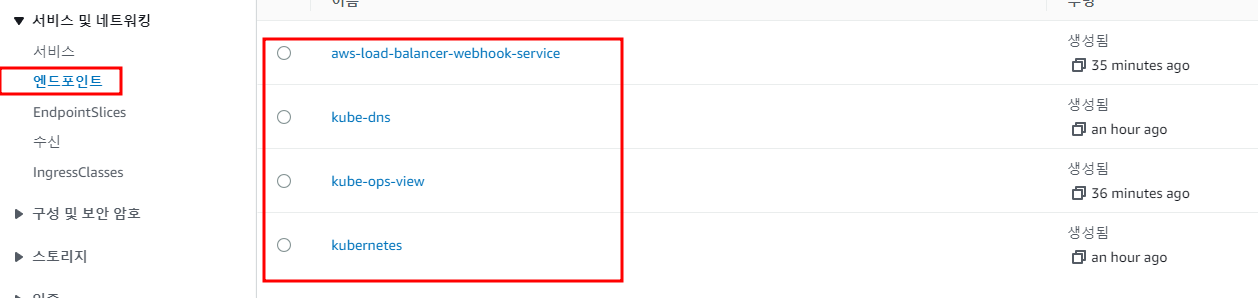

1.3 서비스 및 네트워킹

외부와의 접점인 요소들을 표시

서비스와 엔트 포인트의 같이 동일, 연결 지점이라는 의미에서 동일한 것일까?

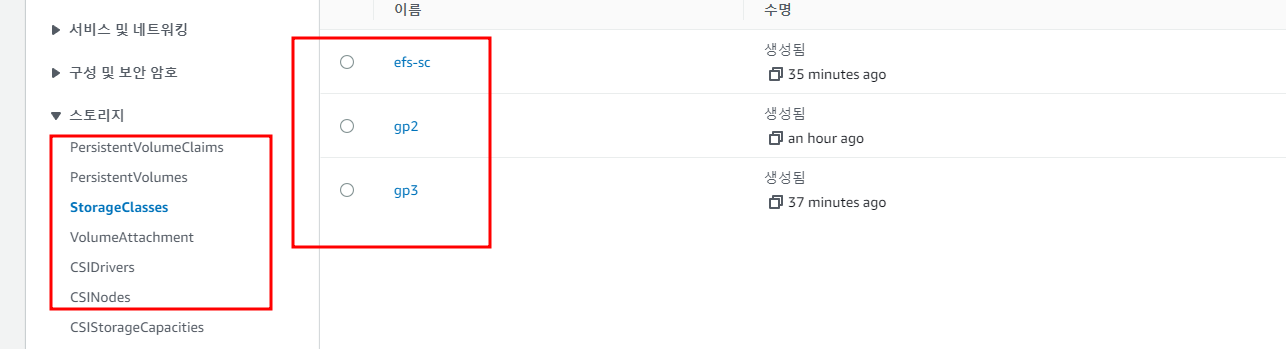

1.4 스토리지

스토리지 관련 주요 서비스인 StorageClass, CSIDriver, CSINodes, PVC, PV 정보를 표시

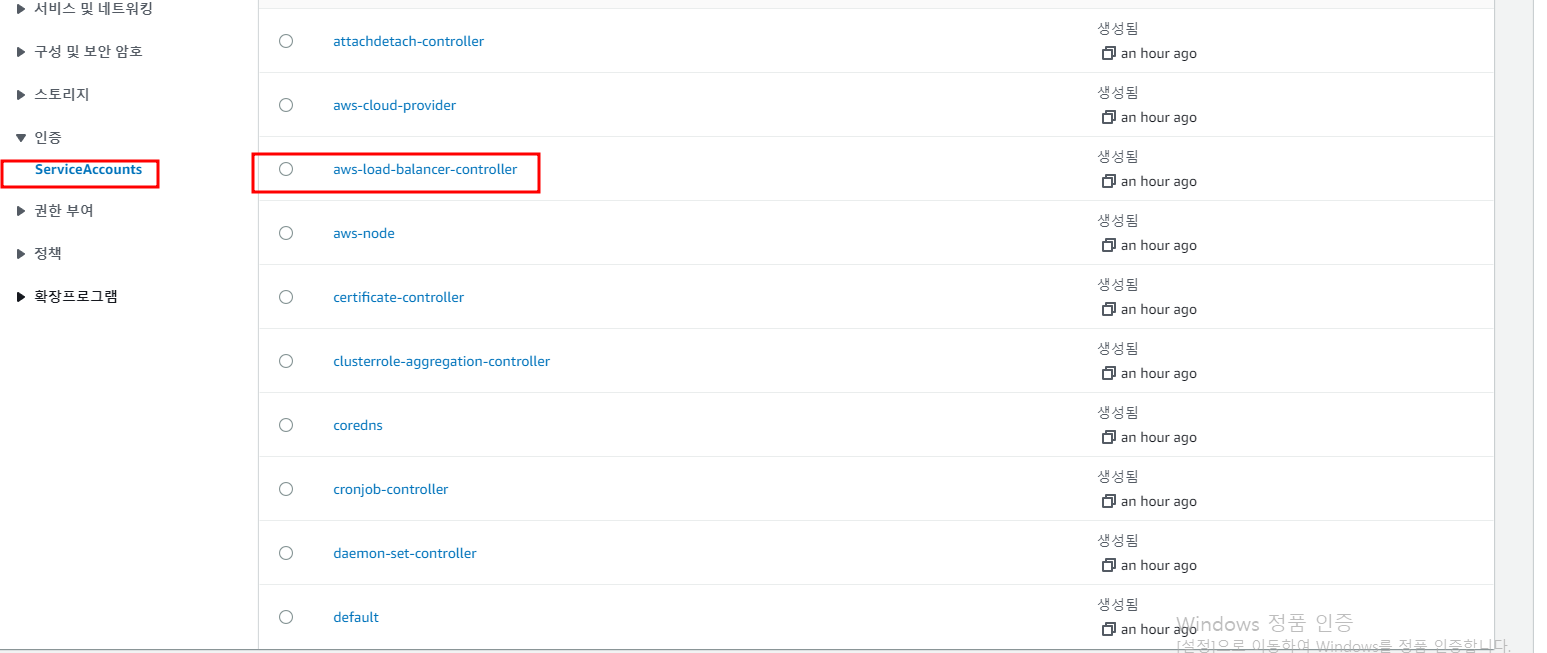

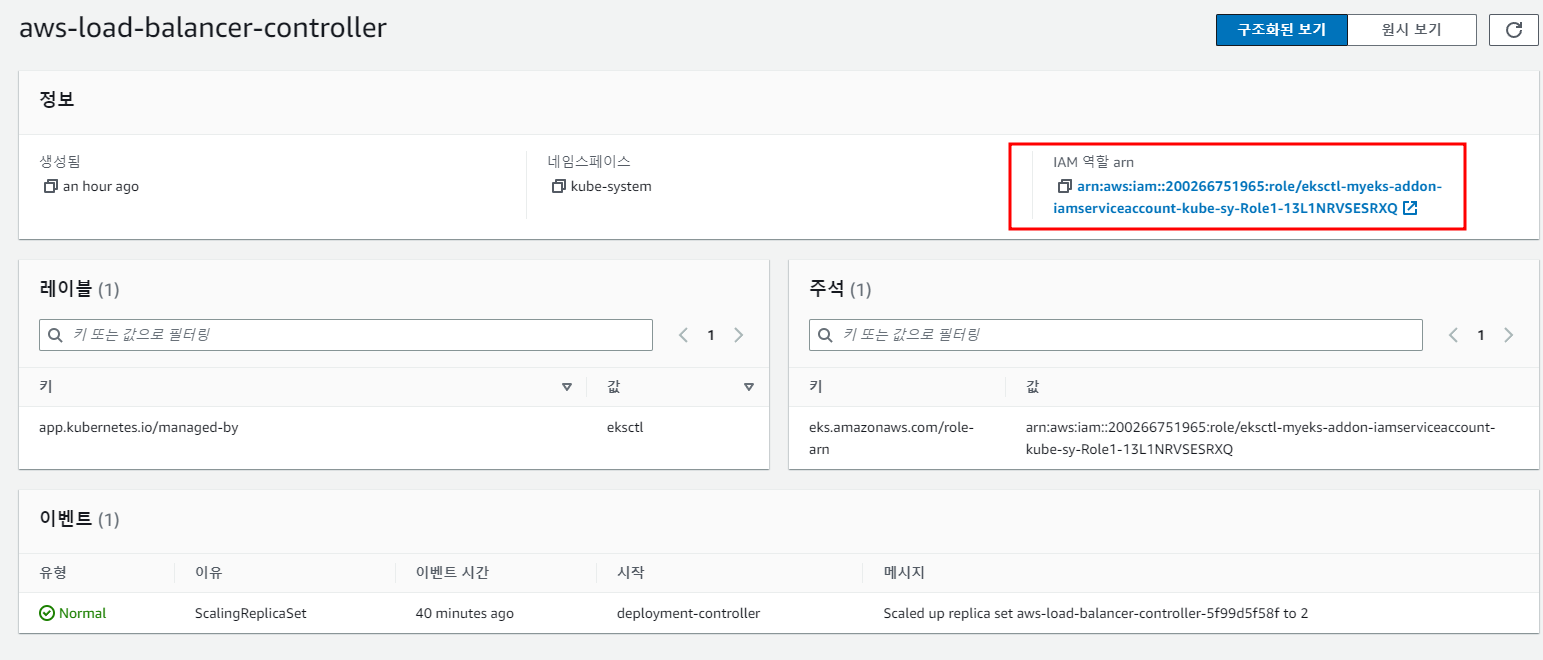

1.5 인증

EKS의 Service Account List를 표시

service Account와 연동하는 IAM ARN 정보를 포함

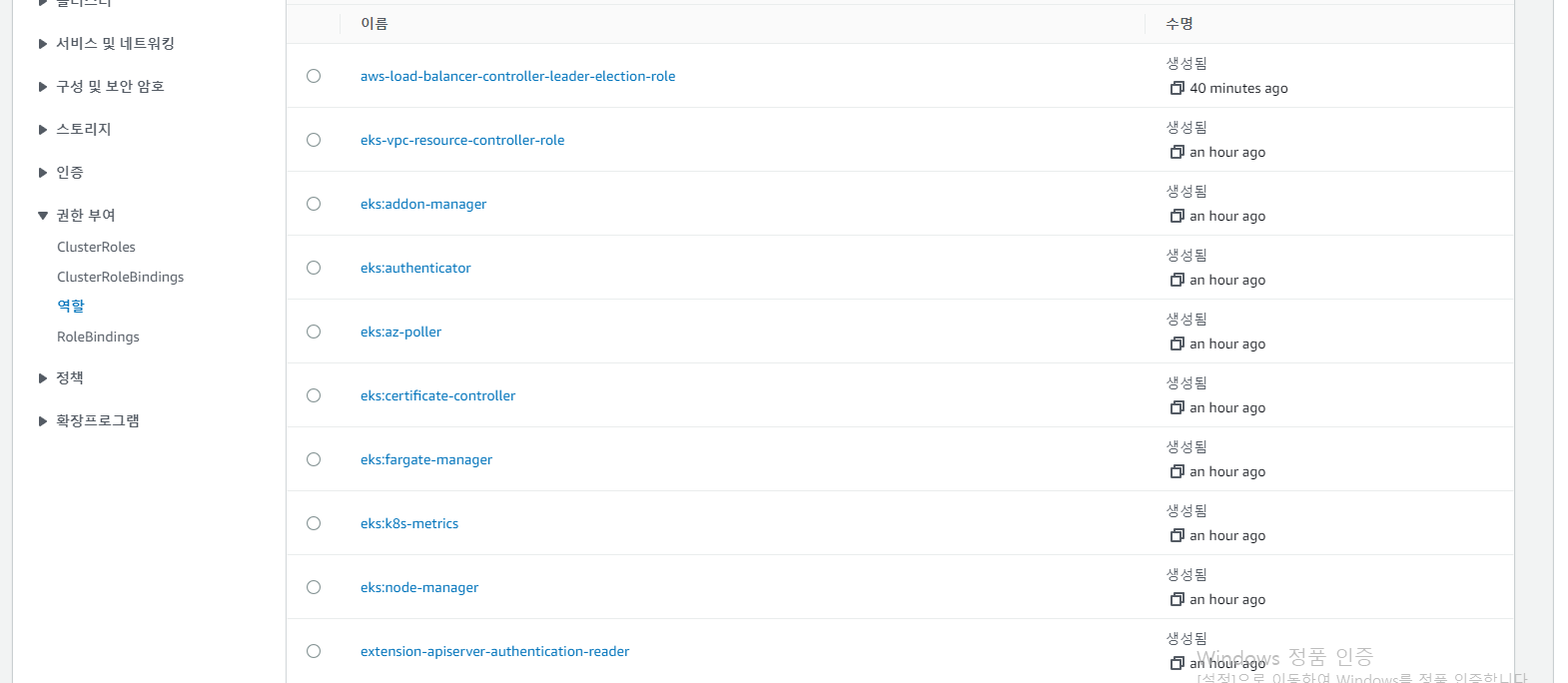

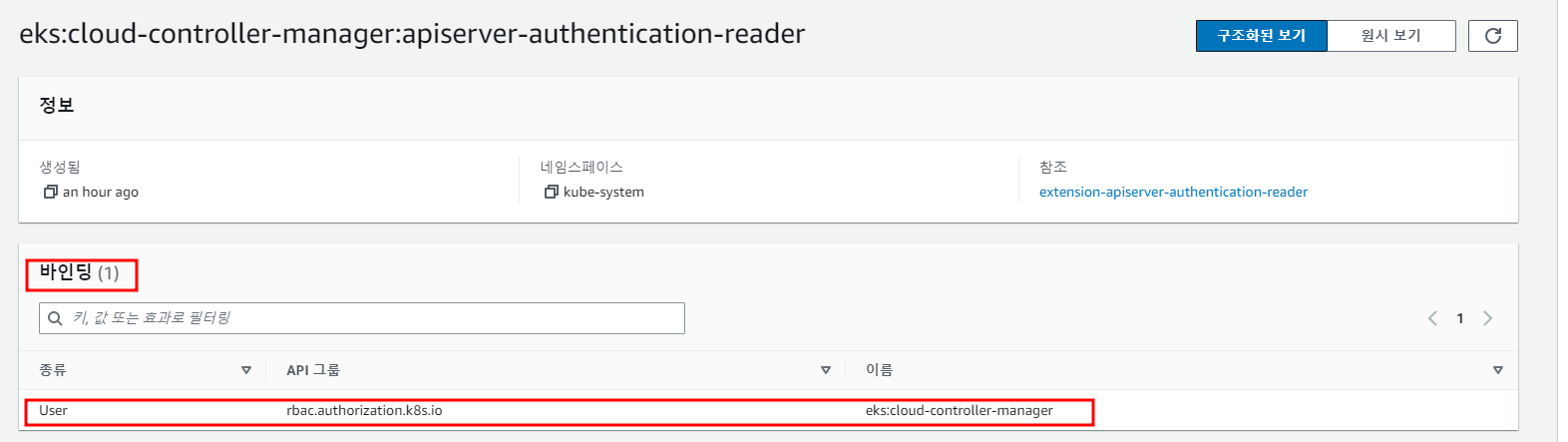

1.6 권한 부여

권한 관련 Role과 RoleBindings를 표시

RoleBindings에서는 Binded User 정보를 표시

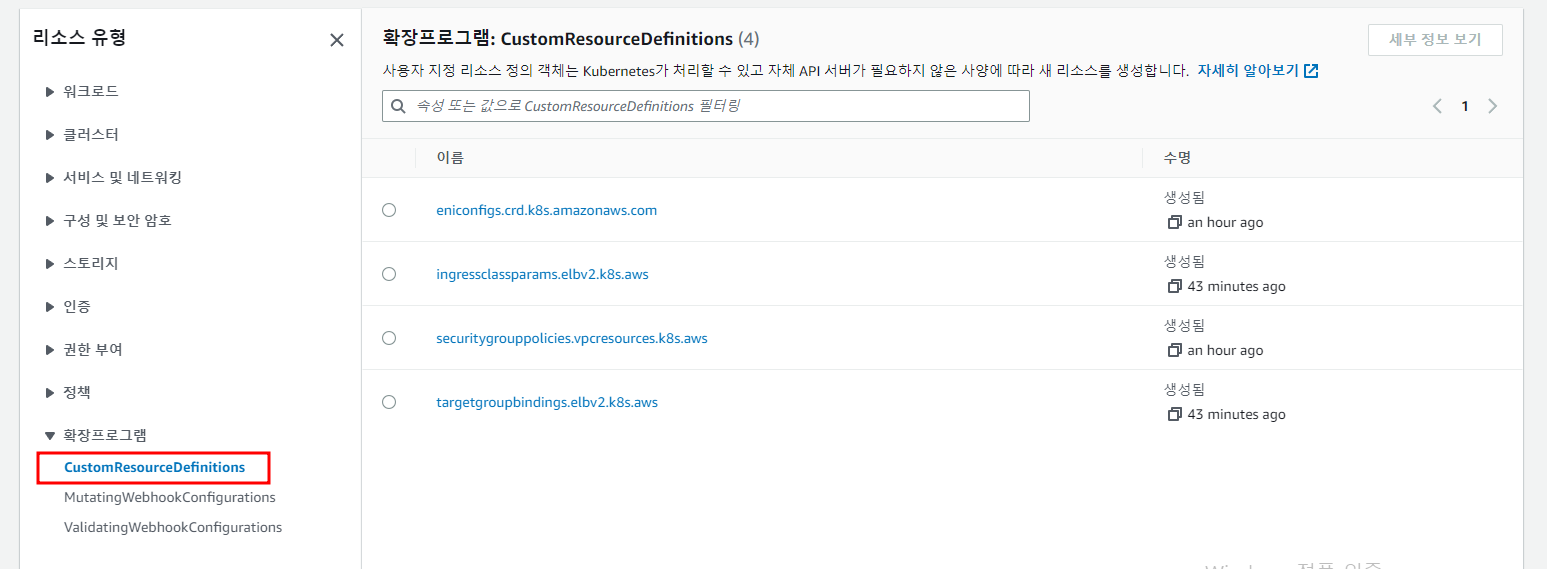

1.7 확장된 프로그램

CRD 정보를 표시

2. Logging in EKS

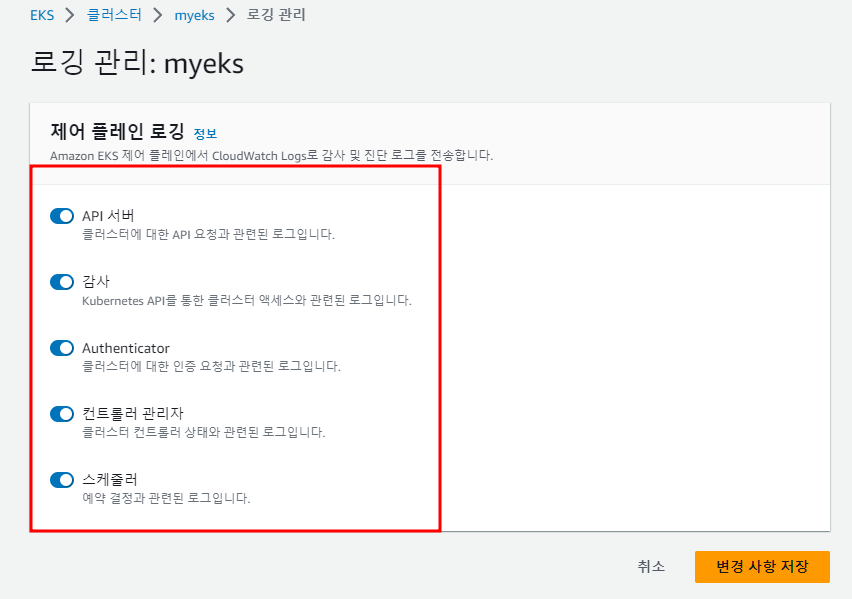

2.1 Control plane logging

2.1.1 AWS WEB Console를 통한 설정

2.1.1.1 설정 변경

기본 설정으로 Control Plane Log는 Off이며, 활성화 하고자 할 경우 AWS WEB Console의 EKS Dashboard에서 Logging Tab의 Manage logging을 통해서 5개 항목 별로 on/off가 가능하다.

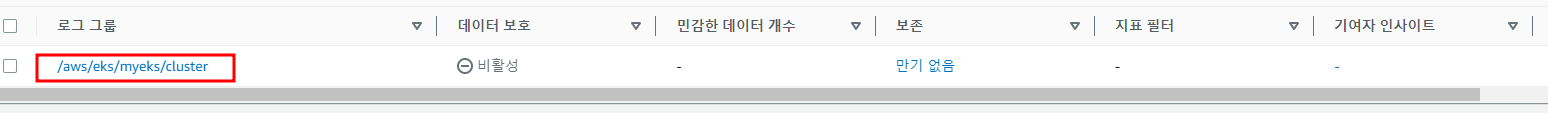

2.1.1.2 확인_CloudWatch에서 확인 가능

2.1.2 AWS CLI를 통한 설정

2.1.2.1 모든 Logging 활성화

$> aws eks update-cluster-config --region $AWS_DEFAULT_REGION --name $CLUSTER_NAME \

--logging '{"clusterLogging":[{"types":["api","audit","authenticator","controllerManager","scheduler"],"enabled":true}]}'

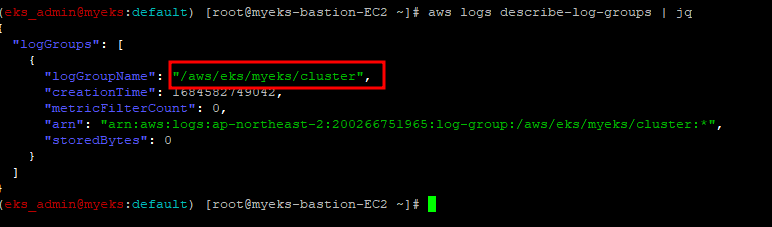

2.1.2.2 로그 그룹 확인

$> aws logs describe-log-groups | jq

참고 : aws logs tail은 지난 10분동안 관련된 Cloudwatch streams의 log들을 보여준다.

보다 자세한 사항은 URL 참조 : https://awscli.amazonaws.com/v2/documentation/api/latest/reference/logs/tail.html

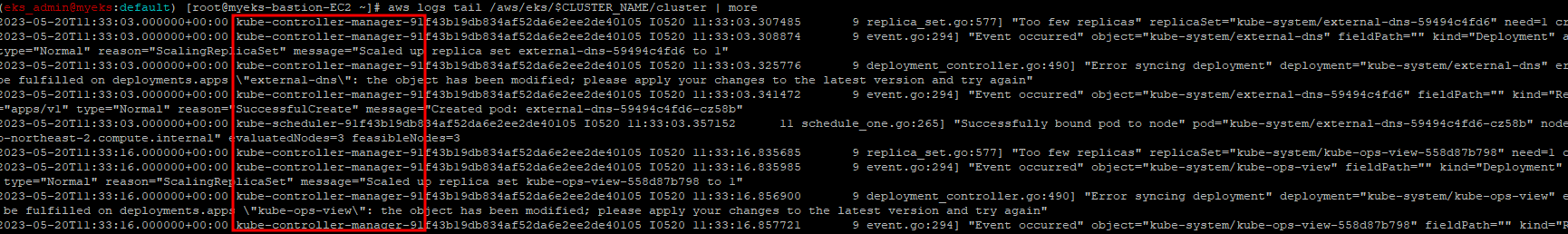

2.1.2.3 로그 Tail 확인

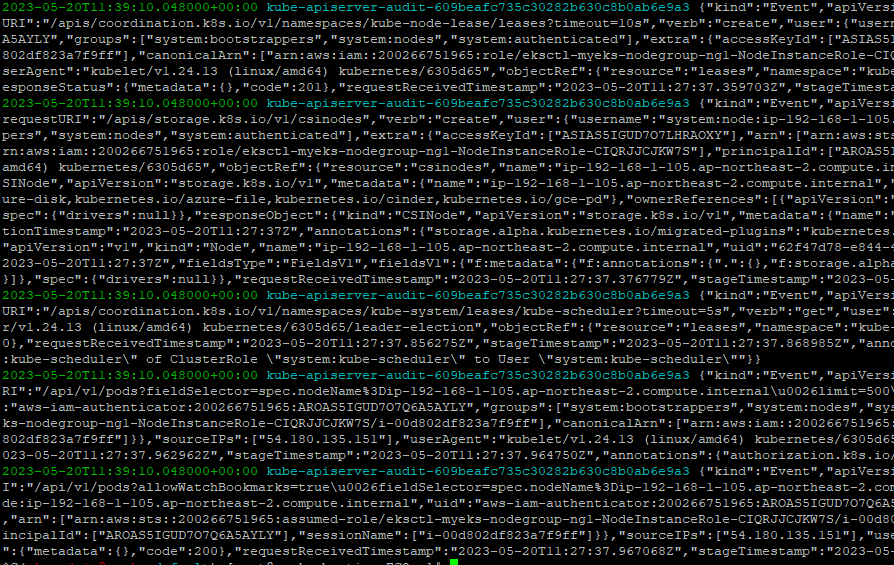

$> aws logs tail /aws/eks/$CLUSTER_NAME/cluster | more

2.1.2.4 신규 로그를 바로 출력

$> aws logs tail /aws/eks/$CLUSTER_NAME/cluster --follow

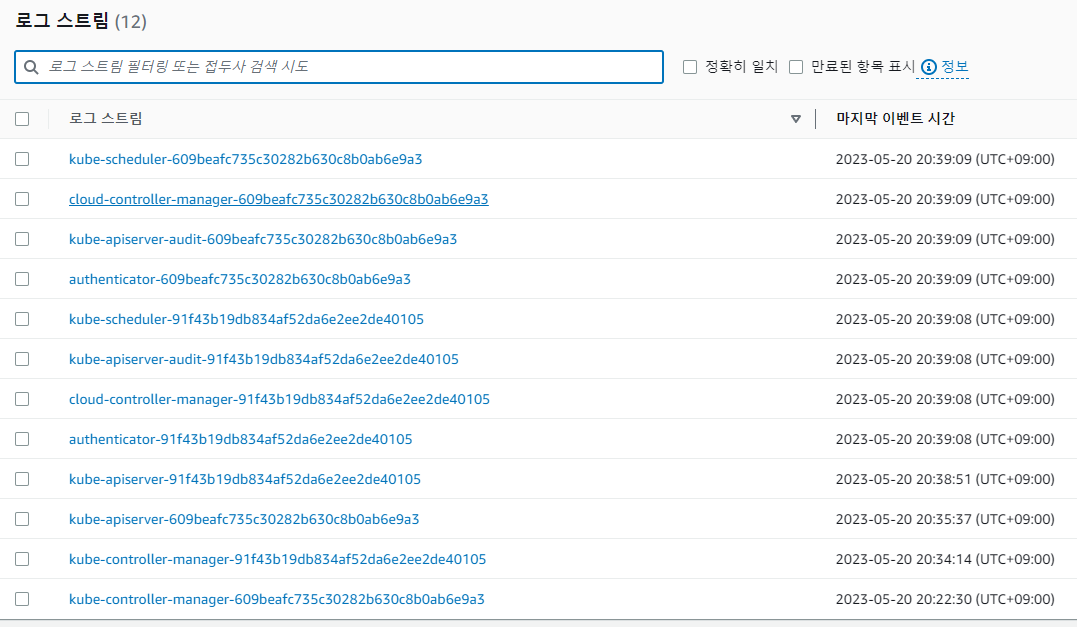

2.1.2.5 Log Stream 이름

# 로그 Stream은 아마도 AWS WEB Console을 참조 해야 할듯

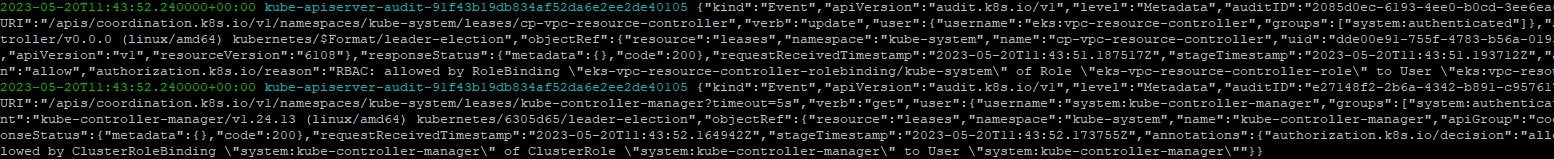

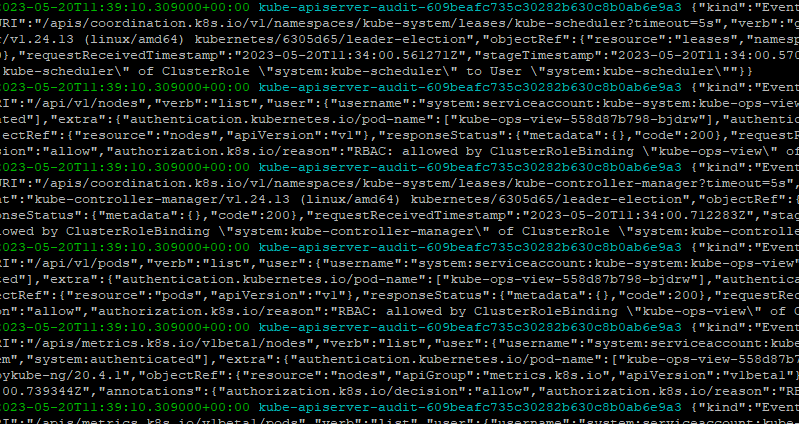

$> aws logs tail /aws/eks/$CLUSTER_NAME/cluster --log-stream-name-prefix <로그 스트림 prefix> --followkube로 시작하는 log stream만 표시

$> aws logs tail /aws/eks/$CLUSTER_NAME/cluster --log-stream-name-prefix kube-apiserver-audit --followkube-apiserver-audit log stream 만 표시

#아래 두 명령어는 여기에서 무슨 의미가 뭐지?

$> kubectl scale deployment -n kube-system coredns --replicas=1

$> kubectl scale deployment -n kube-system coredns --replicas=22.1.2.7 로그 시간 지정

1시간 30분 이전 부터 로그 표시

$> aws logs tail /aws/eks/$CLUSTER_NAME/cluster --since 1h30m

2.1.2.8 short format

default는 detailed type

$> aws logs tail /aws/eks/$CLUSTER_NAME/cluster --since 1h30m --format short

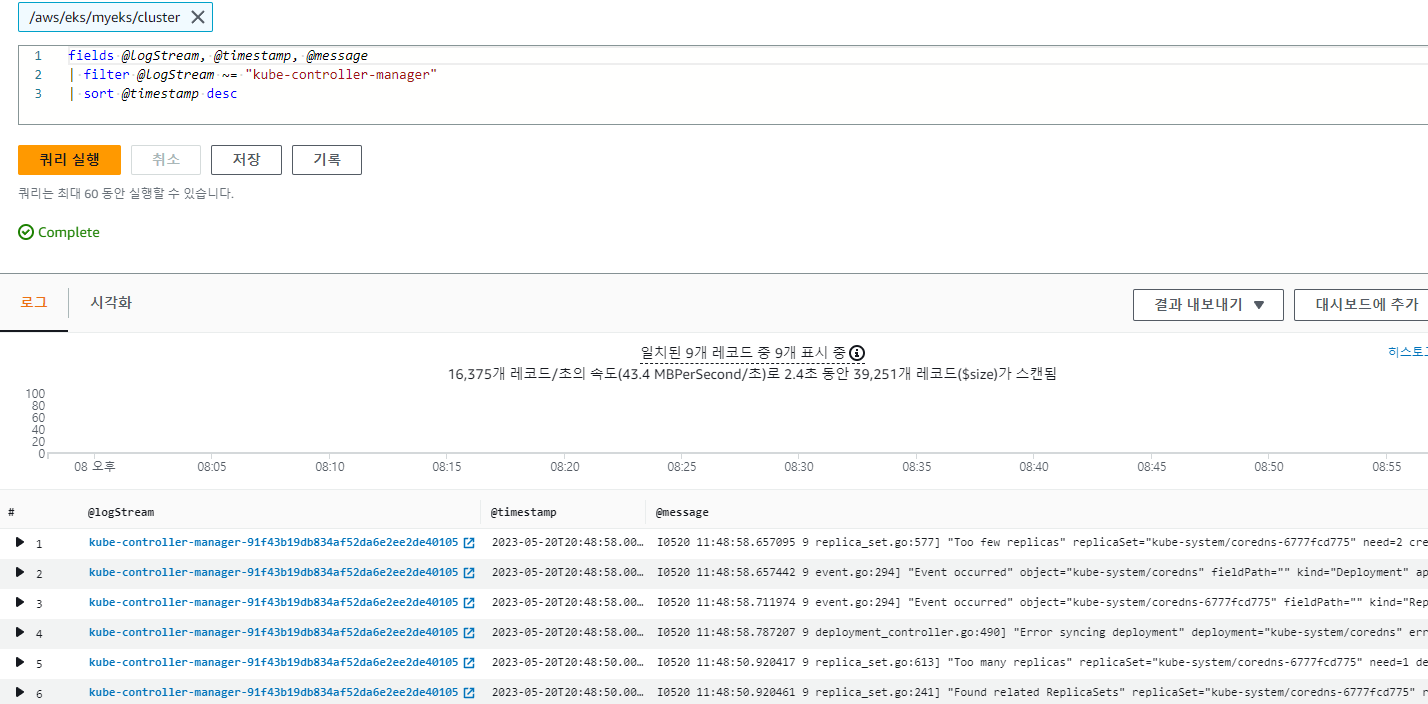

2.1.3 AWS WEB Console의 CloudWath Insights에서 Log 검색

단순 로그 나열이 아닌 원하는 데이타를 찾기 위한 검색 명령어를 CloudWatch Insights에서 실행하여 원하는 결과를 확인

보다 자세한 내용은 URL 참조 : https://docs.aws.amazon.com/ko_kr/AmazonCloudWatch/latest/logs/CWL_QuerySyntax.html

2.1.2.3.1 EC2 Instance가 NodeNotReady 상태인 로그 검색

fields @timestamp, @message

| filter @message like /NodeNotReady/

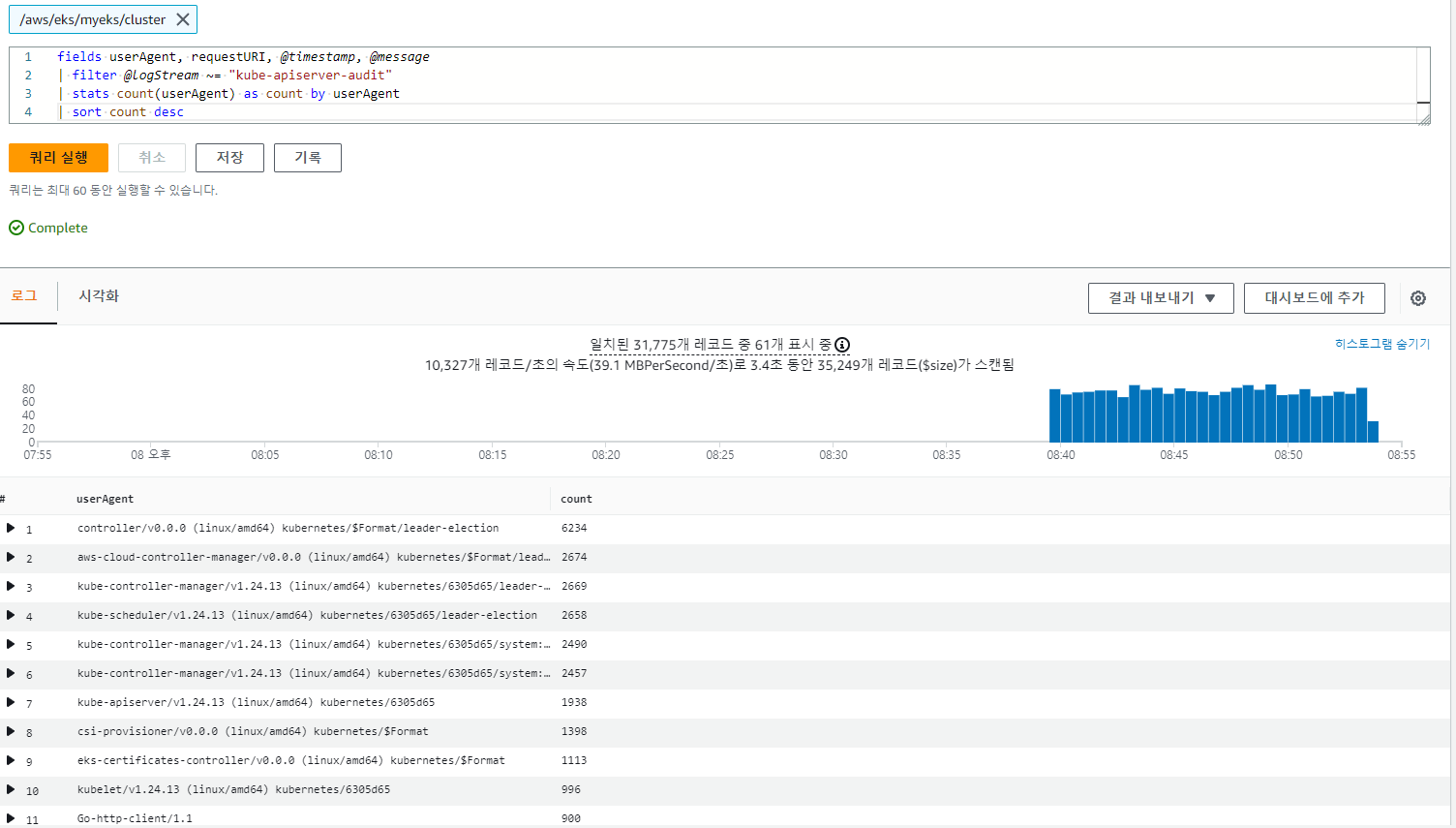

| sort @timestamp desc2.1.2.3.2 kube-apiserver-audit 로그에서 userAgent 정렬해서 아래 4개 필드 정보 검색

fields userAgent, requestURI, @timestamp, @message

| filter @logStream ~= "kube-apiserver-audit"

| stats count(userAgent) as count by userAgent

| sort count desc

log stream이 "kube-scheduler" 인 경우

fields @timestamp, @message

| filter @logStream ~= "kube-scheduler"

| sort @timestamp desc

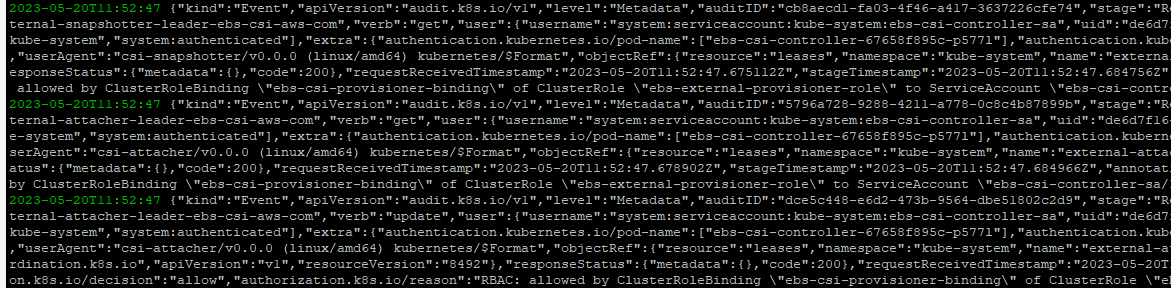

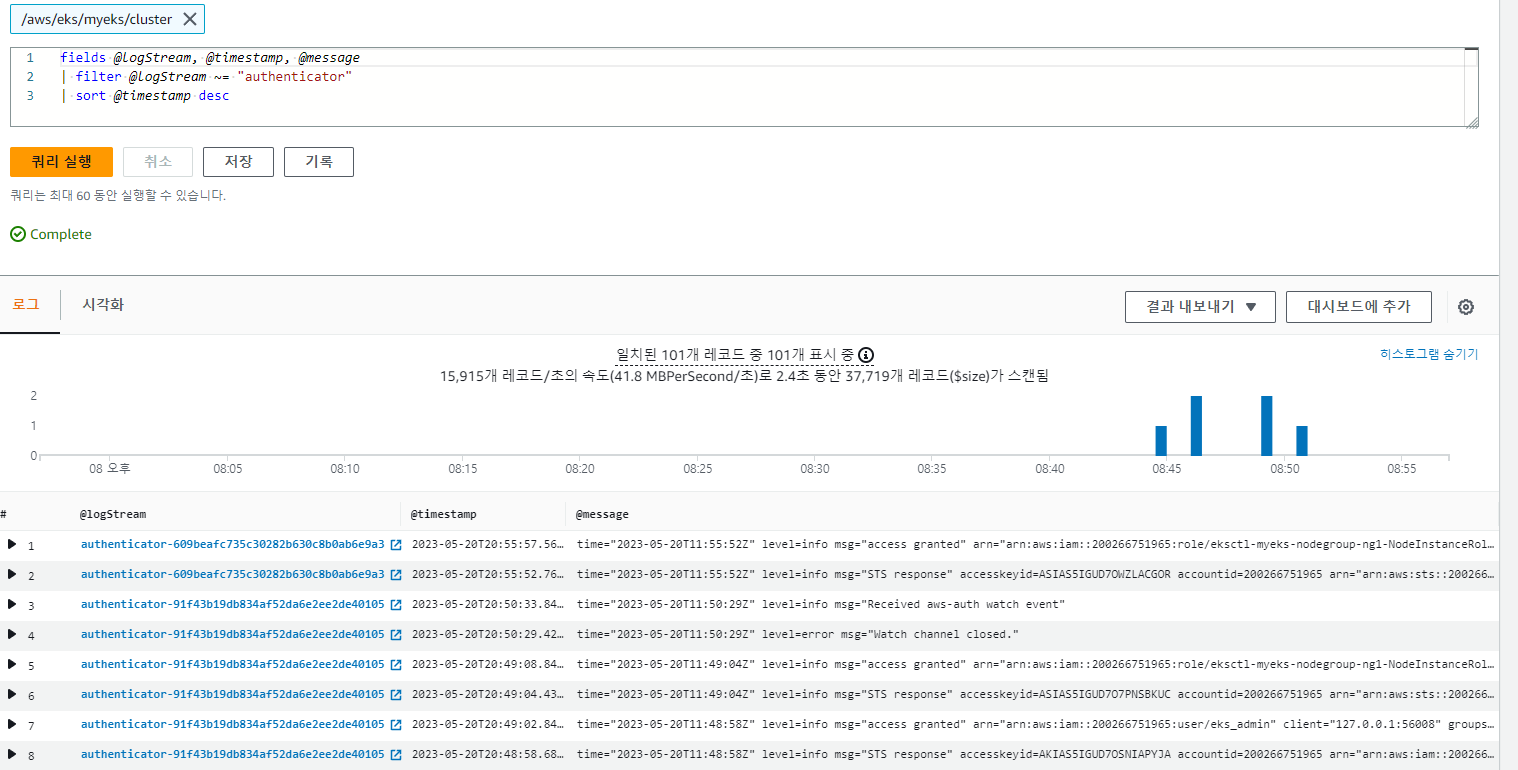

log stream이 "authenticator" 인 경우

fields @timestamp, @message

| filter @logStream ~= "authenticator"

| sort @timestamp desc

log stream이 "kube-controller-manager" 인 경우

fields @timestamp, @message

| filter @logStream ~= "kube-controller-manager"

| sort @timestamp desc

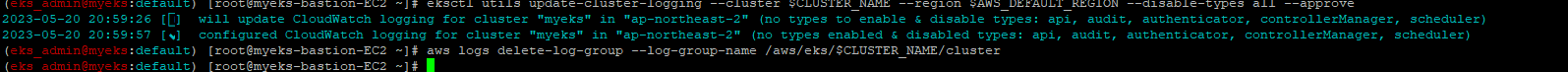

2.1.3 Control plance log off

2.1.3.1 EKS Control Plane 로깅(CloudWatch Logs) 비활성화

$> eksctl utils update-cluster-logging --cluster $CLUSTER_NAME --region $AWS_DEFAULT_REGION --disable-types all --approve2.1.3.2 로그 그룹 삭제

$> aws logs delete-log-group --log-group-name /aws/eks/$CLUSTER_NAME/cluster

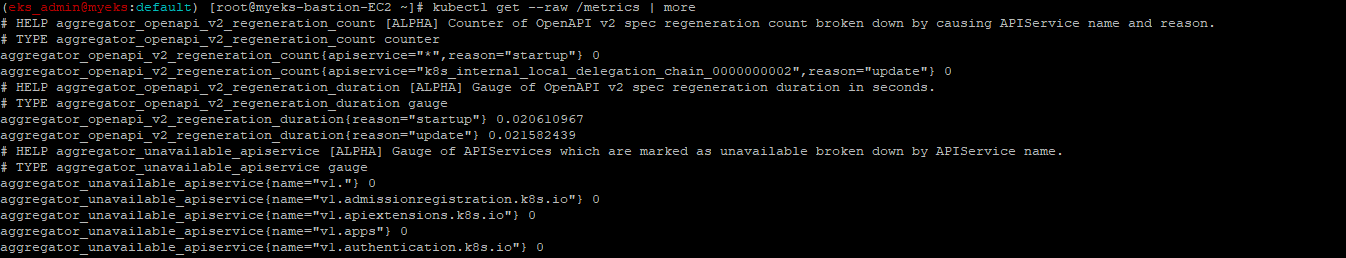

2.2 Control Plane metrics with Prometheus & CW Logs Insights 쿼리

The Kubernetes API server exposes a number of metrics that are useful for monitoring and analysis. These metrics are exposed internally through a metrics endpoint that refers to the /metrics HTTP API

$> kubectl get --raw /metrics

example output

...

# HELP rest_client_requests_total Number of HTTP requests, partitioned by status code, method, and host.

# TYPE rest_client_requests_total counter

rest_client_requests_total{code="200",host="127.0.0.1:21362",method="POST"} 4994

rest_client_requests_total{code="200",host="127.0.0.1:443",method="DELETE"} 1

rest_client_requests_total{code="200",host="127.0.0.1:443",method="GET"} 1.326086e+06

rest_client_requests_total{code="200",host="127.0.0.1:443",method="PUT"} 862173

rest_client_requests_total{code="404",host="127.0.0.1:443",method="GET"} 2

rest_client_requests_total{code="409",host="127.0.0.1:443",method="POST"} 3

rest_client_requests_total{code="409",host="127.0.0.1:443",method="PUT"} 8

# HELP ssh_tunnel_open_count Counter of ssh tunnel total open attempts

# TYPE ssh_tunnel_open_count counter

ssh_tunnel_open_count 0

# HELP ssh_tunnel_open_fail_count Counter of ssh tunnel failed open attempts

# TYPE ssh_tunnel_open_fail_count counter

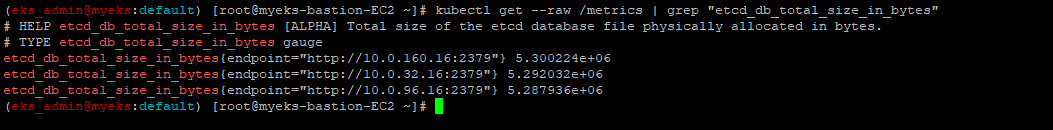

ssh_tunnel_open_fail_count 02.2.1 Managing etcd database size on Amazon EKS clusters

2.2.1.1 How to monitor etcd database size?

$> kubectl get --raw /metrics | grep "etcd_db_total_size_in_bytes"

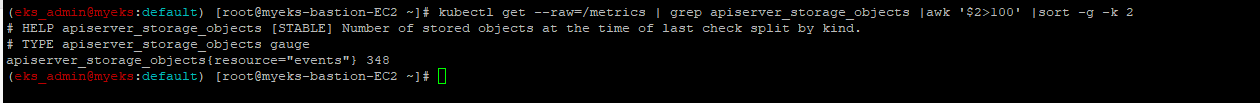

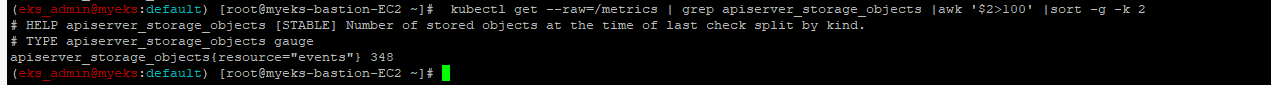

$> kubectl get --raw=/metrics | grep apiserver_storage_objects |awk '$2>100' |sort -g -k 2

2.2.1.2 How do I identify what is consuming etcd database space?

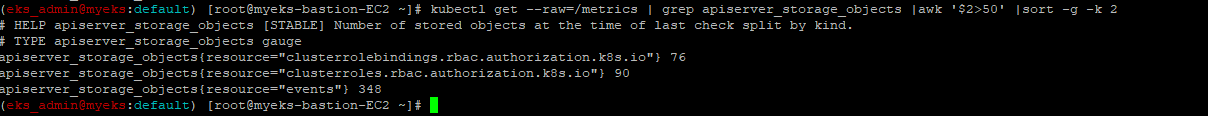

$> kubectl get --raw=/metrics | grep apiserver_storage_objects |awk '$2>100' |sort -g -k 2

$> kubectl get --raw=/metrics | grep apiserver_storage_objects |awk '$2>50' |sort -g -k 2

2.2.1.3 CW Logs Insights 쿼리 : Request volume - Requests by User Agent

fields userAgent, requestURI, @timestamp, @message

| filter @logStream like /kube-apiserver-audit/

| stats count(*) as count by userAgent

| sort count desc2.2.1.5 CW Logs Insights 쿼리 : Request volume - Requests by Universal Resource Identifier (URI)/Verb:

filter @logStream like /kube-apiserver-audit/

| stats count(*) as count by requestURI, verb, user.username

| sort count desc2.2.1.6 Object revision updates

fields requestURI

| filter @logStream like /kube-apiserver-audit/

| filter requestURI like /pods/

| filter verb like /patch/

| filter count > 8

| stats count(*) as count by requestURI, responseStatus.code

| filter responseStatus.code not like /500/

| sort count descfields @timestamp, userAgent, responseStatus.code, requestURI

| filter @logStream like /kube-apiserver-audit/

| filter requestURI like /pods/

| filter verb like /patch/

| filter requestURI like /name_of_the_pod_that_is_updating_fast/

| sort @timestamp위 CW Log Insights 명령어가 Control plane Logging off 이후에도 가능한지 확인 필요

-> 확인해 보니 실행 불가,

2.3 Container(Pod) Logging

POD Type으로 배포된 WEB Server에서 생성되는 Log를 확인

2.3.1 NGINX WEB Server 배포 with HELM

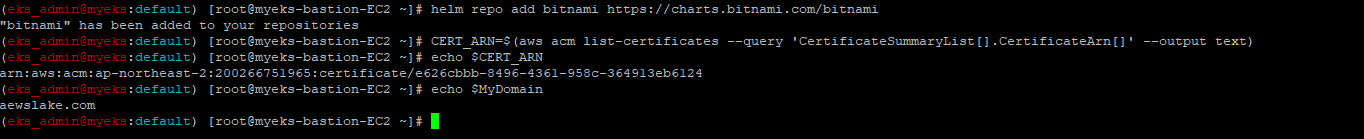

2.3.1.1 NGINX 웹서버 repo 추가

$> helm repo add bitnami https://charts.bitnami.com/bitnami2.3.1.2 HTTPS 통신을 위한 인증 ARN 확인

$> CERT_ARN=$(aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text)

$> echo $CERT_ARN

$> echo $MyDomain

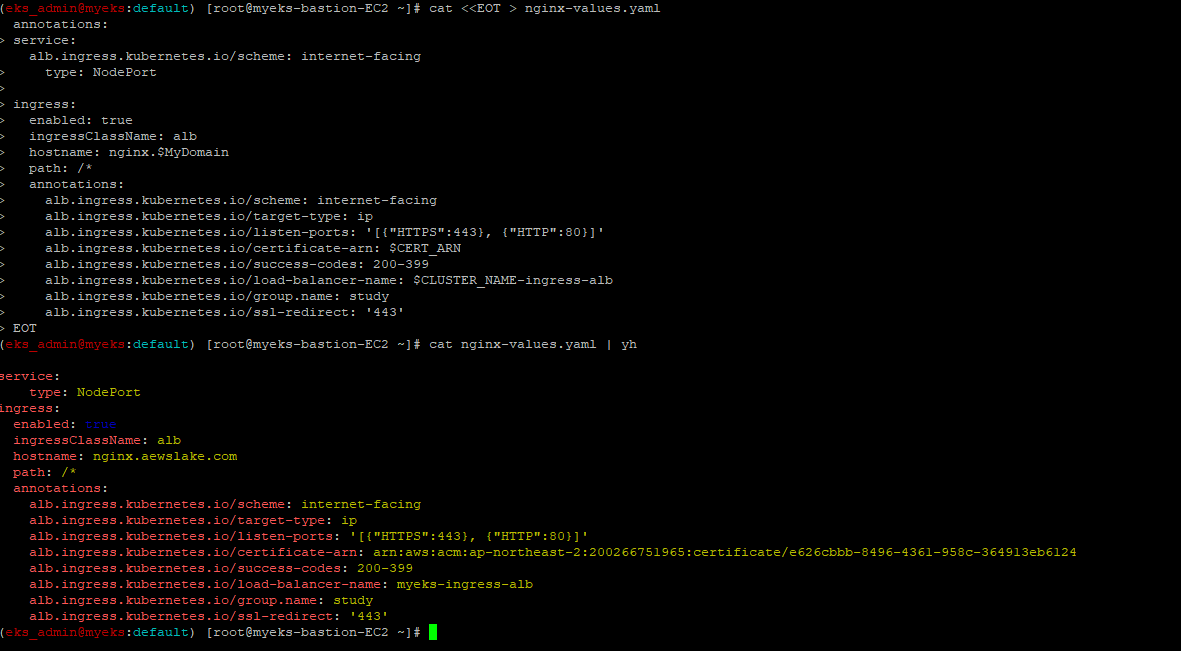

2.3.1.3 HELM CONFIG 파일 생성

$> cat <<EOT > nginx-values.yaml

service:

type: NodePort

ingress:

enabled: true

ingressClassName: alb

hostname: nginx.$MyDomain

path: /*

annotations:

alb.ingress.kubernetes.io/scheme: internet-facing

alb.ingress.kubernetes.io/target-type: ip

alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

alb.ingress.kubernetes.io/success-codes: 200-399

alb.ingress.kubernetes.io/load-balancer-name: $CLUSTER_NAME-ingress-alb

alb.ingress.kubernetes.io/group.name: study

alb.ingress.kubernetes.io/ssl-redirect: '443'

EOT

$> cat nginx-values.yaml | yh

2.3.1.4 NGINX 배포

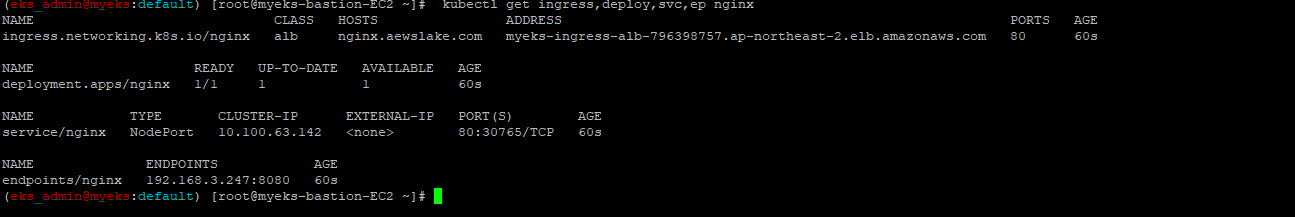

$> helm install nginx bitnami/nginx --version 14.1.0 -f nginx-values.yaml2.3.1.5 배포 확인

$> kubectl get ingress,deploy,svc,ep nginx

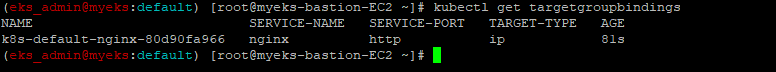

$> kubectl get targetgroupbindings # ALB TG 확인

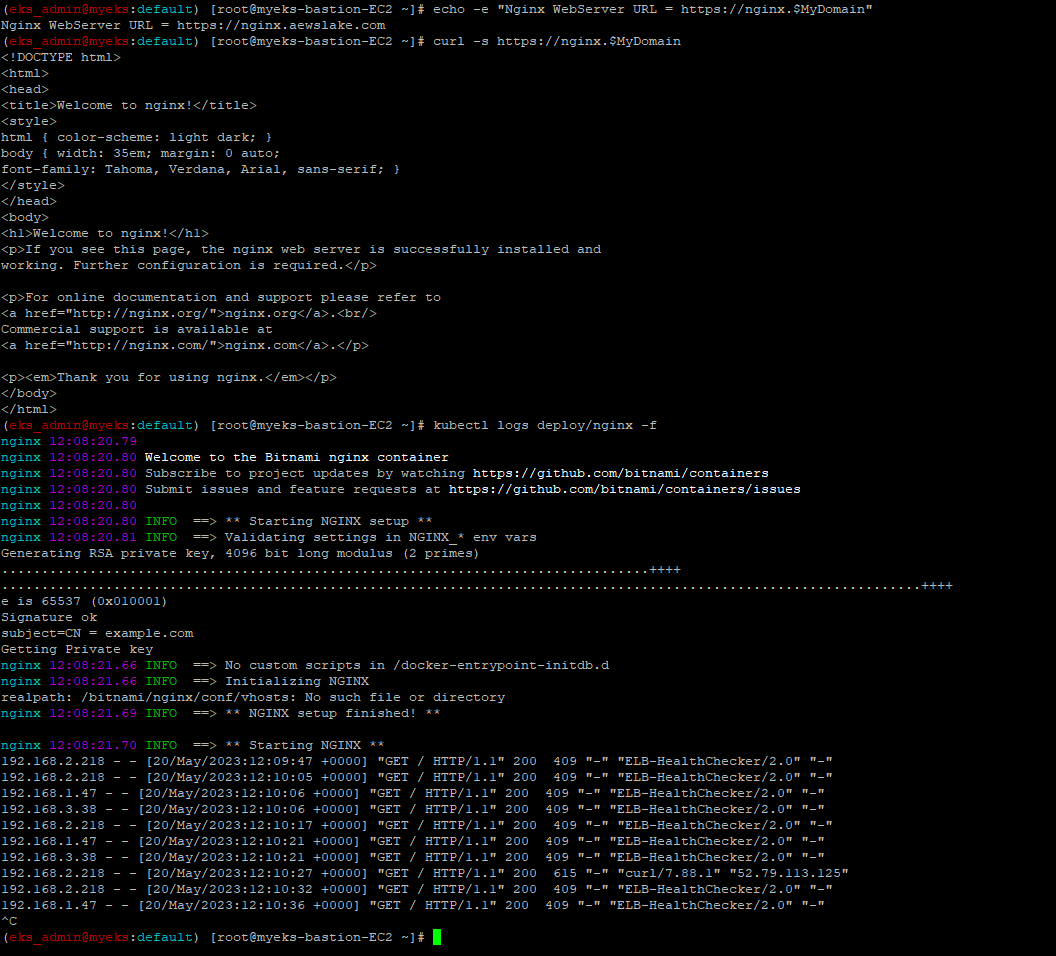

2.3.1.6 접속 확인

$> echo -e "Nginx WebServer URL = https://nginx.$MyDomain"

$> curl -s https://nginx.$MyDomain

$> kubectl logs deploy/nginx -f

2.3.1.7 WEB 접속 시도를 계속해서 한다.

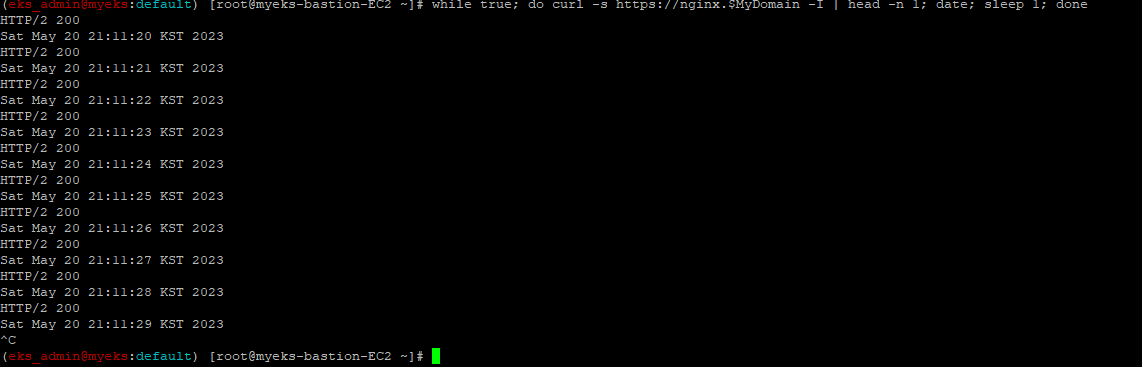

$> while true; do curl -s https://nginx.$MyDomain -I | head -n 1; date; sleep 1; done

2.3.1.7 컨테이너 환경의 로그 조회

컨테이너 환경의 로그 특성 상 지속적이지 않고, 여러 Node에 흩어져 있어 조회가 용이하지 않아, 일반적으로 로그들을 표준 출력/에러로 link로 해서 kubectl log 명령어로 통합 조회가 가능하게 한다.

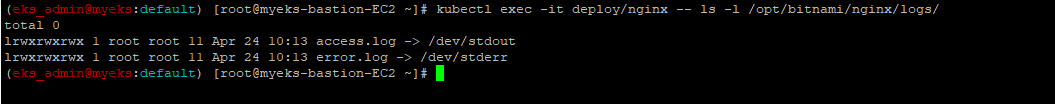

2.3.1.8 컨테이너 로그 파일 위치 확인

access.log는 표준 출력으로, error.log는 표준 에러로 출력

$> kubectl exec -it deploy/nginx -- ls -l /opt/bitnami/nginx/logs/

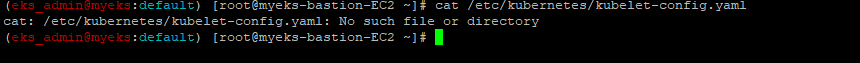

2.3.1.9 그리고 Kebelet의 기본 로그 파일 크기 설정은 10mi이다.

$> cat /etc/kubernetes/kubelet-config.yaml왜 파일이 없다고 하지?

3 Container Insights metrics in Amazon CloudWatch & Fluent Bit (Logs)

3.1 노드의 로그 확인

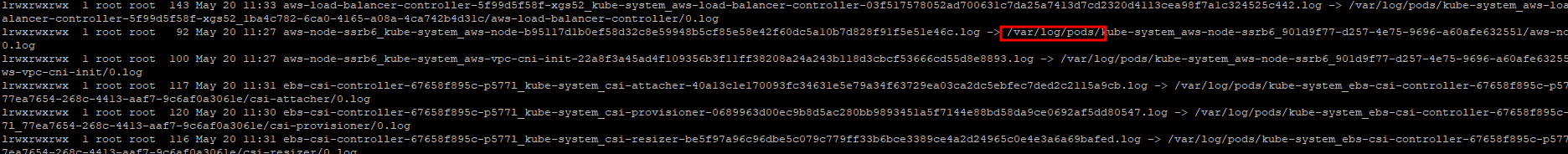

3.1.1 application log source( /var/log/containers -> symbolic link to /var/log/pods/

3.1.1.1 각 노드에 접속하여 log 확인, N2, N3도 차례대로 확인

$> ssh ec2-user@$N1 sudo tree /var/log/containers

$> ssh ec2-user@$N1 sudo ls -al /var/log/containers

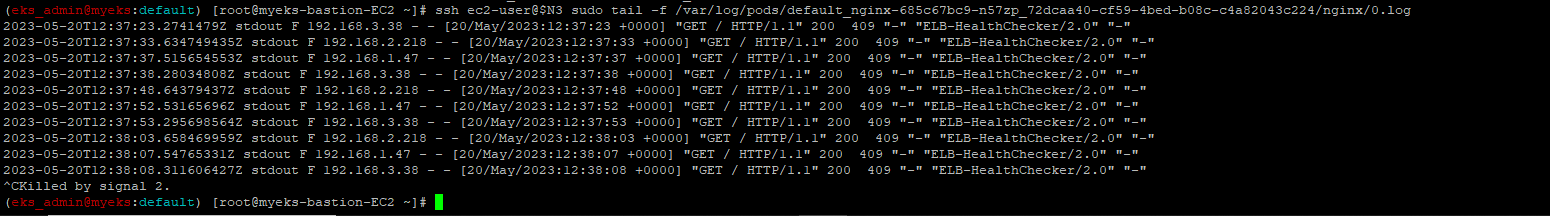

3.1.1.2 Pod에 접속하여 Log 확인

$> ssh ec2-user@$N3 sudo tail -f /var/log/pods/[pod]/nginx/0.log

3.1.2 Host log source 확인(/var/log/dmesg, /var/log/secure, and /var/log/messages), 노드(호스트) 로그

3.1.2.1 로그 위치 확인

$> ssh ec2-user@$N1 sudo tree /var/log/ -L 1

3.1.2.2 호스트 로그 확인

N1 sudo tail /var/log/dmesg

N1 sudo tail /var/log/secure

N1 sudo tail /var/log/messages

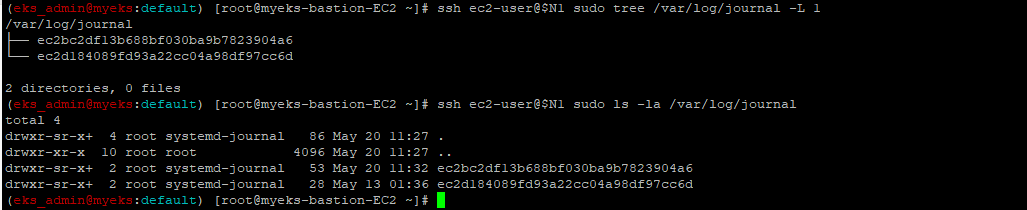

### 3.1.2.3 dataplane log source 확인(/var/log/journal for kubelet.service, kubeproxy.service, and docker.service), 쿠버네티스 데이터플레인 로그

$> ssh ec2-user@$N1 sudo tree /var/log/journal -L 1

$> ssh ec2-user@$N1 sudo ls -la /var/log/journal

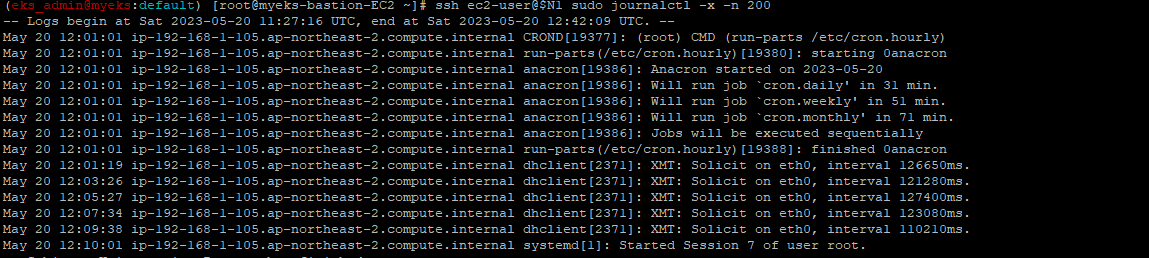

3.1.2.4 Journal log 내용 확인_with journalctl 명령어

$> ssh ec2-user@$N1 sudo journalctl -x -n 200

$> ssh ec2-user@$N1 sudo journalctl -f

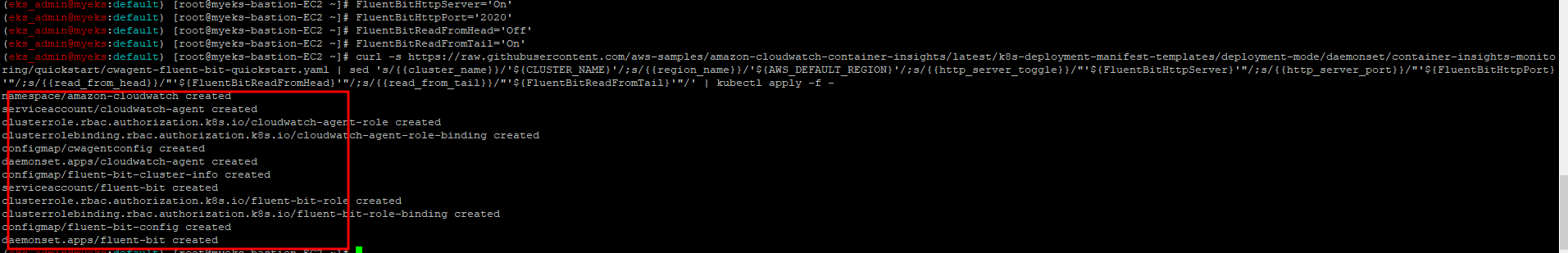

3.2 CloudWatch Container Insight 설치 : cloudwatch-agent & fluent-bit

3.2.1 설치

$> FluentBitHttpServer='On'

$> FluentBitHttpPort='2020'

$> FluentBitReadFromHead='Off'

$> FluentBitReadFromTail='On'

$> curl -s https://raw.githubusercontent.com/aws-samples/amazon-cloudwatch-container-insights/latest/k8s-deployment-manifest-templates/deployment-mode/daemonset/container-insights-monitoring/quickstart/cwagent-fluent-bit-quickstart.yaml | sed 's/{{cluster_name}}/'${CLUSTER_NAME}'/;s/{{region_name}}/'${AWS_DEFAULT_REGION}'/;s/{{http_server_toggle}}/"'${FluentBitHttpServer}'"/;s/{{http_server_port}}/"'${FluentBitHttpPort}'"/;s/{{read_from_head}}/"'${FluentBitReadFromHead}'"/;s/{{read_from_tail}}/"'${FluentBitReadFromTail}'"/' | kubectl apply -f -

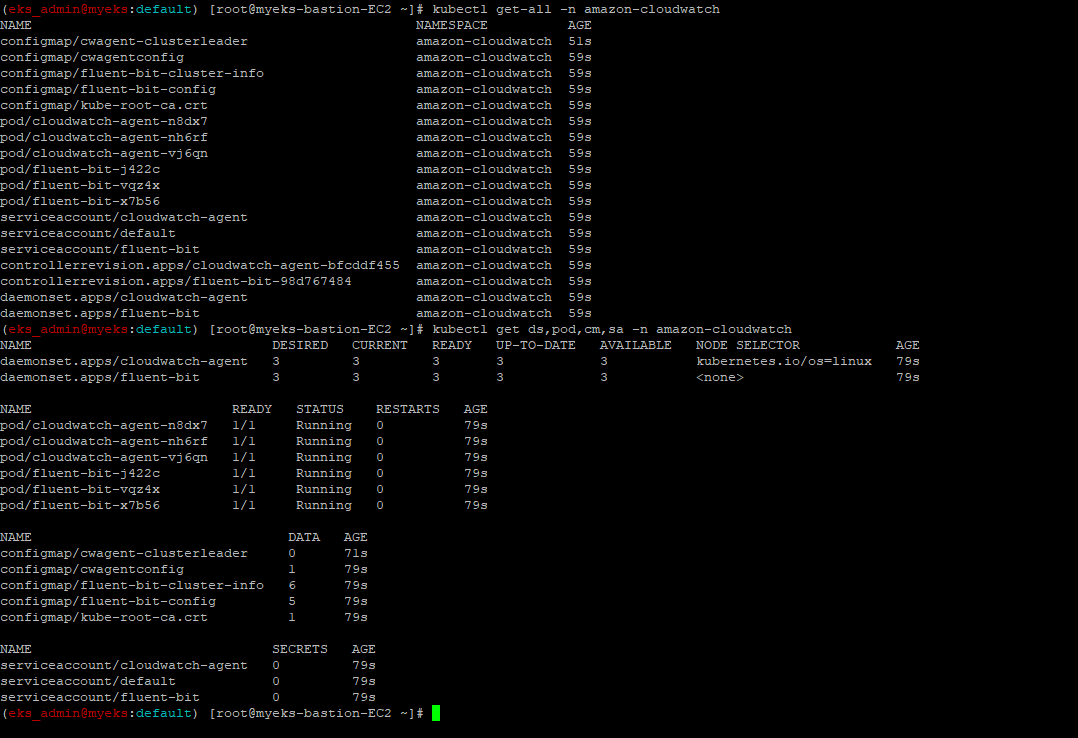

3.2.2 설치 확인

$> kubectl get-all -n amazon-cloudwatch

$> kubectl get ds,pod,cm,sa -n amazon-cloudwatch

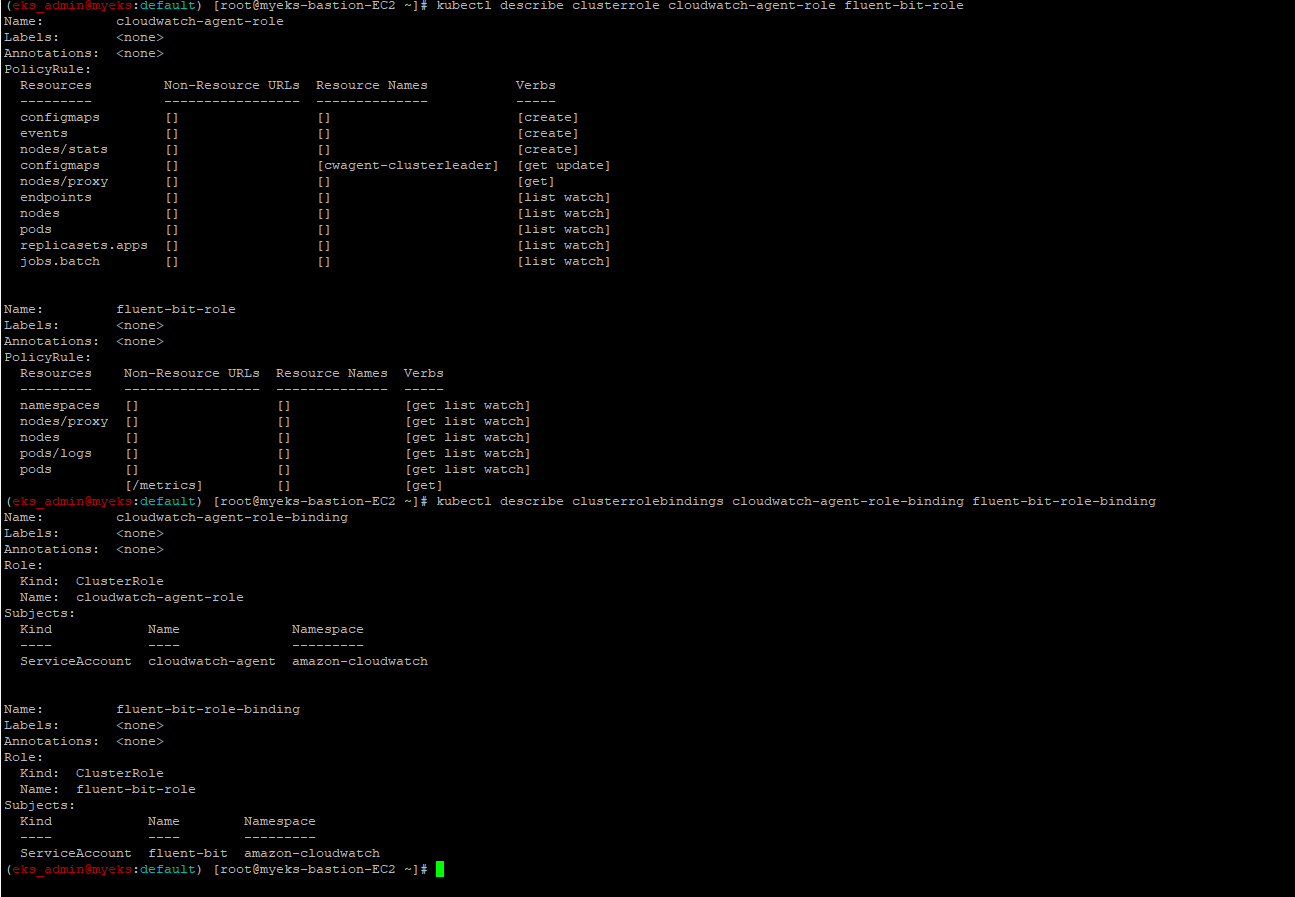

$> kubectl describe clusterrole cloudwatch-agent-role fluent-bit-role

$> kubectl describe clusterrolebindings cloudwatch-agent-role-binding fluent-bit-role-binding

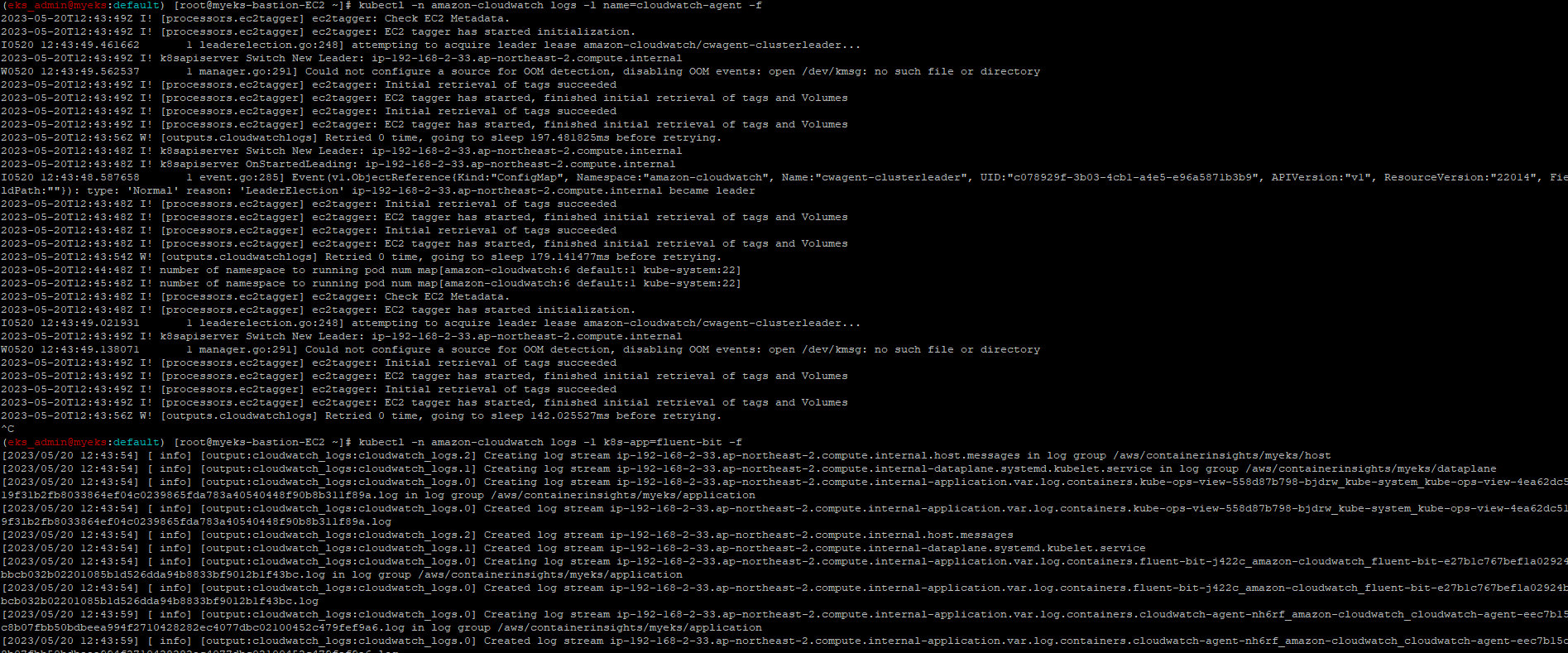

$> kubectl -n amazon-cloudwatch logs -l name=cloudwatch-agent -f

$> kubectl -n amazon-cloudwatch logs -l k8s-app=fluent-bit -f # 파드 로그 확인

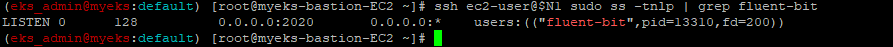

t : tcp, l: listening socket, p : socket 을 사용하는 프로세스의 정보를 표시

자세한 정보는 URL 참조 : https://www.lesstif.com/lpt/linux-socket-ss-socket-statistics-91947283.html

Node별 fluent-bit socket status

$> ssh ec2-user@$N1 sudo ss -tnlp | grep fluent-bit

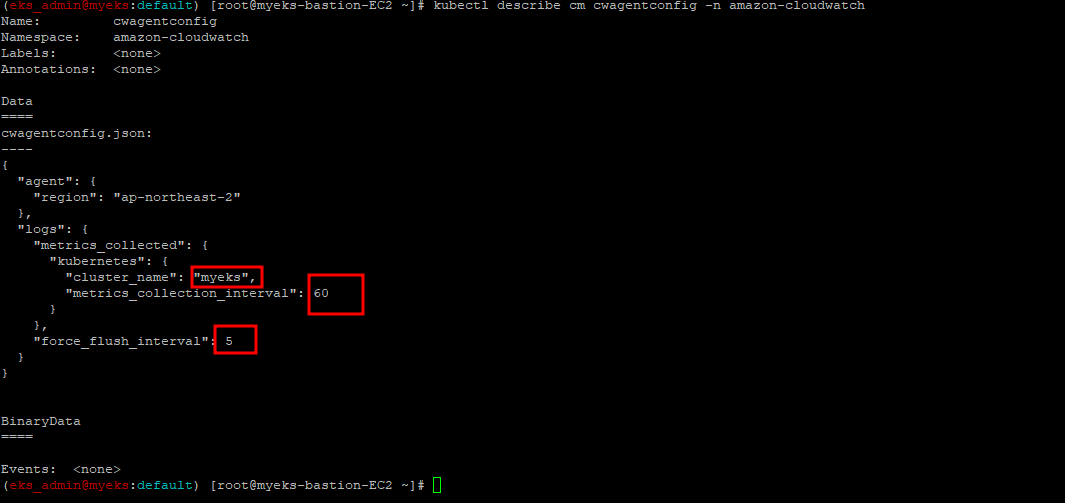

3.2.3 cloudwatch-agent 설정 확인

$> kubectl describe cm cwagentconfig -n amazon-cloudwatch

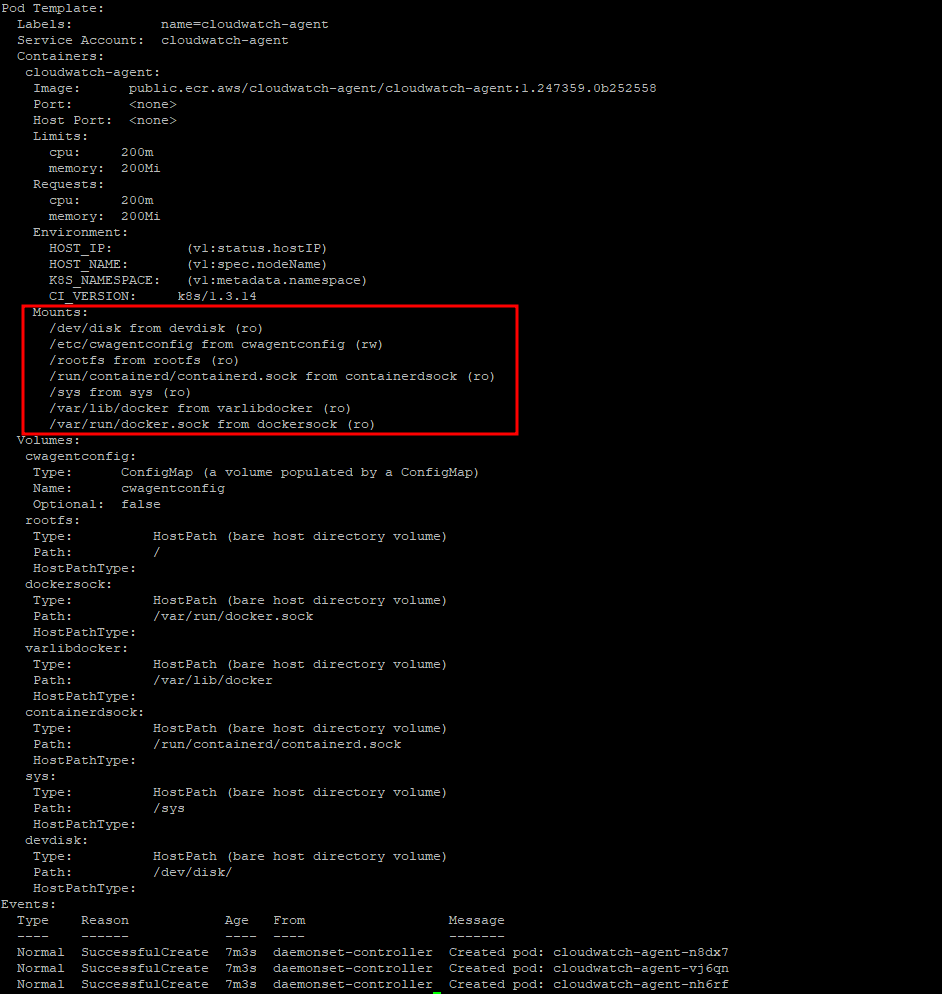

3.2.4 CW 파드가 수집하는 방법_Volumes에 HostPath를 살펴보자

$> kubectl describe -n amazon-cloudwatch ds cloudwatch-agent

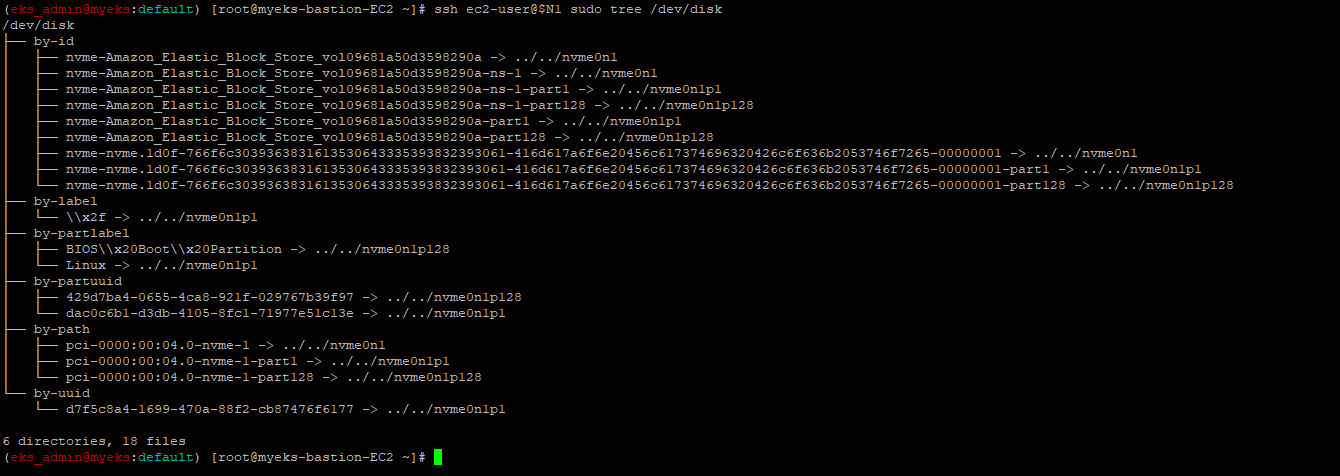

3.2.5 hostpath에 명시된 경로를 확인

$> ssh ec2-user@$N1 sudo tree /dev/disk

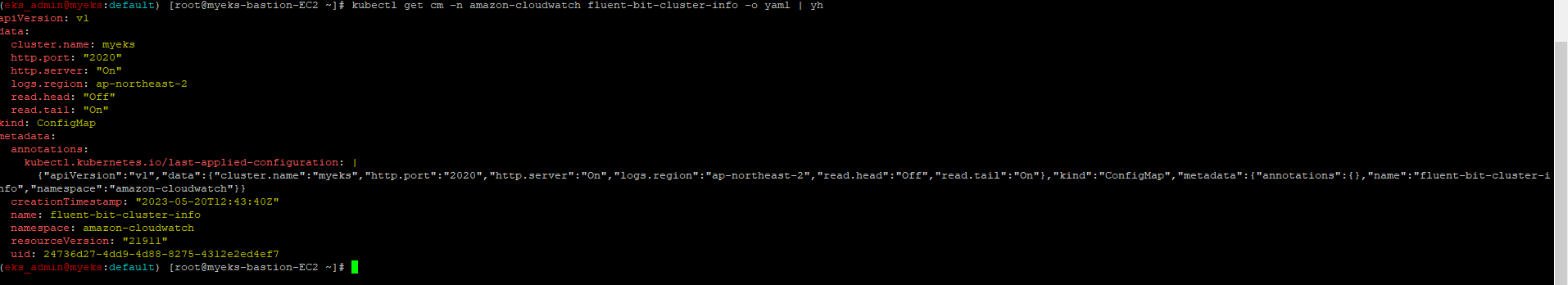

3.2.6 Fluent Bit Cluster Info_configmap 확인

$> kubectl get cm -n amazon-cloudwatch fluent-bit-cluster-info -o yaml | yh

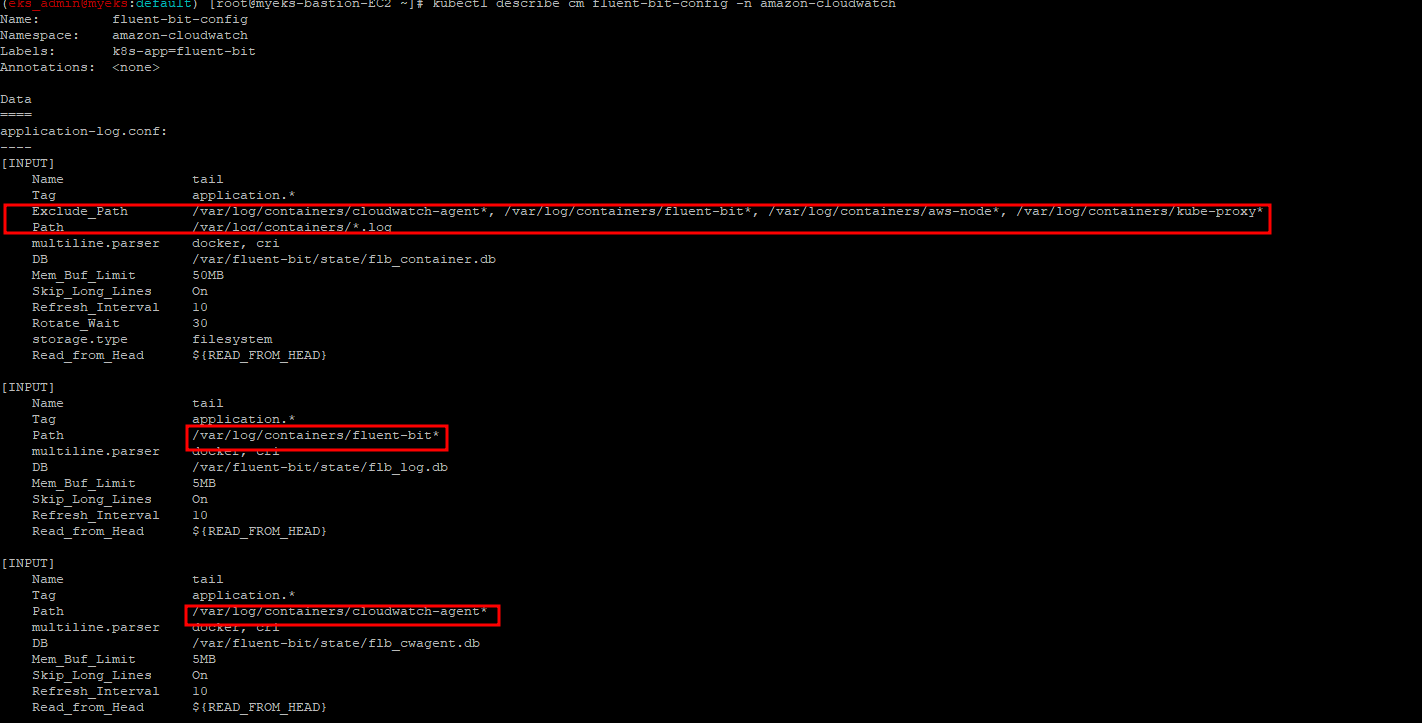

3.2.7 Fluent Bit 로그 INPUT/FILTER/OUTPUT 설정 확인

설정 부분 구성 : application-log.conf , dataplane-log.conf , fluent-bit.conf , host-log.conf , parsers.conf

$> kubectl describe cm fluent-bit-config -n amazon-cloudwatch

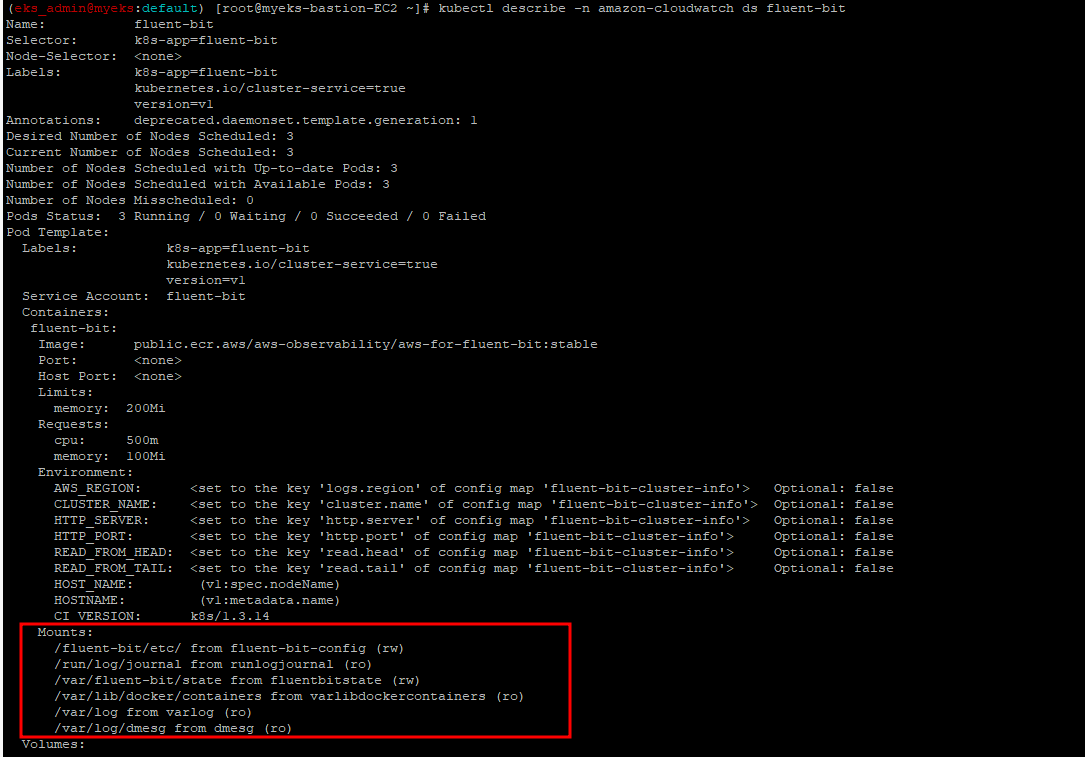

3.2.8 Fluent Bit 파드가 수집하는 방법 : Volumes에 HostPath를 살펴보자

$> kubectl describe -n amazon-cloudwatch ds fluent-bit

3.3 로그 확인

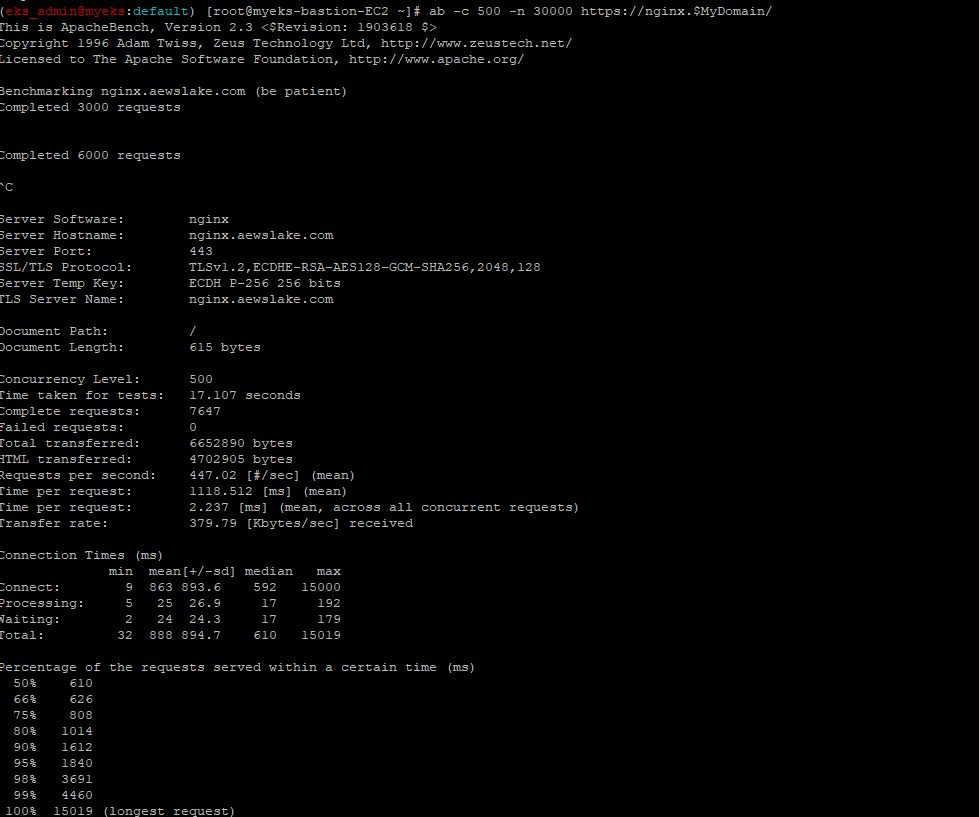

3.3.1 부하 발생

$> curl -s https://nginx.$MyDomain

$> yum install -y httpd

#ab -c 클라이언트수 -n 요청수 -t 시간 URL

$> ab -c 500 -n 30000 https://nginx.$MyDomain/

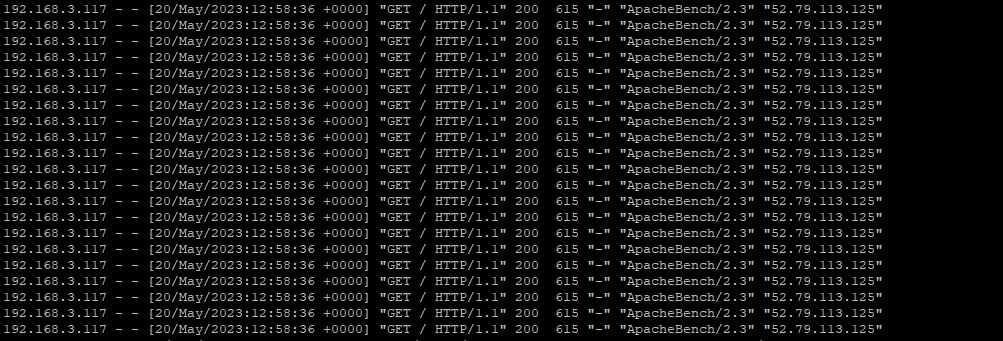

3.3.2 파드 직접 로그 모니터링

$> kubectl logs deploy/nginx -f

3.4 AWS WEB Console에서 CloudWatch Insights 조회

상세한 Query는 URL 참조 : https://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/CWL_QuerySyntax.html

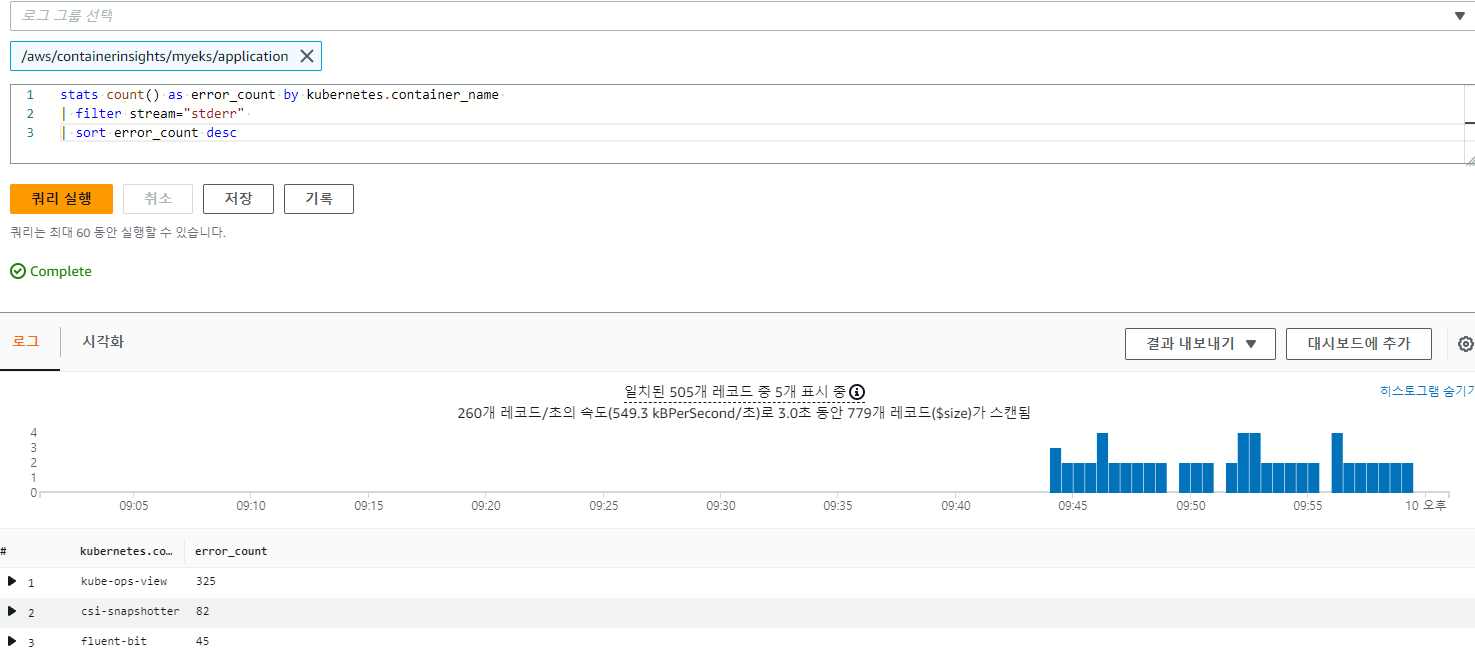

3.4.1 Application log errors by container name : 컨테이너 이름별 애플리케이션 로그 오류

로그 그룹 선택 : /aws/containerinsights/<CLUSTER_NAME>/application

stats count() as error_count by kubernetes.container_name

| filter stream="stderr"

| sort error_count desc

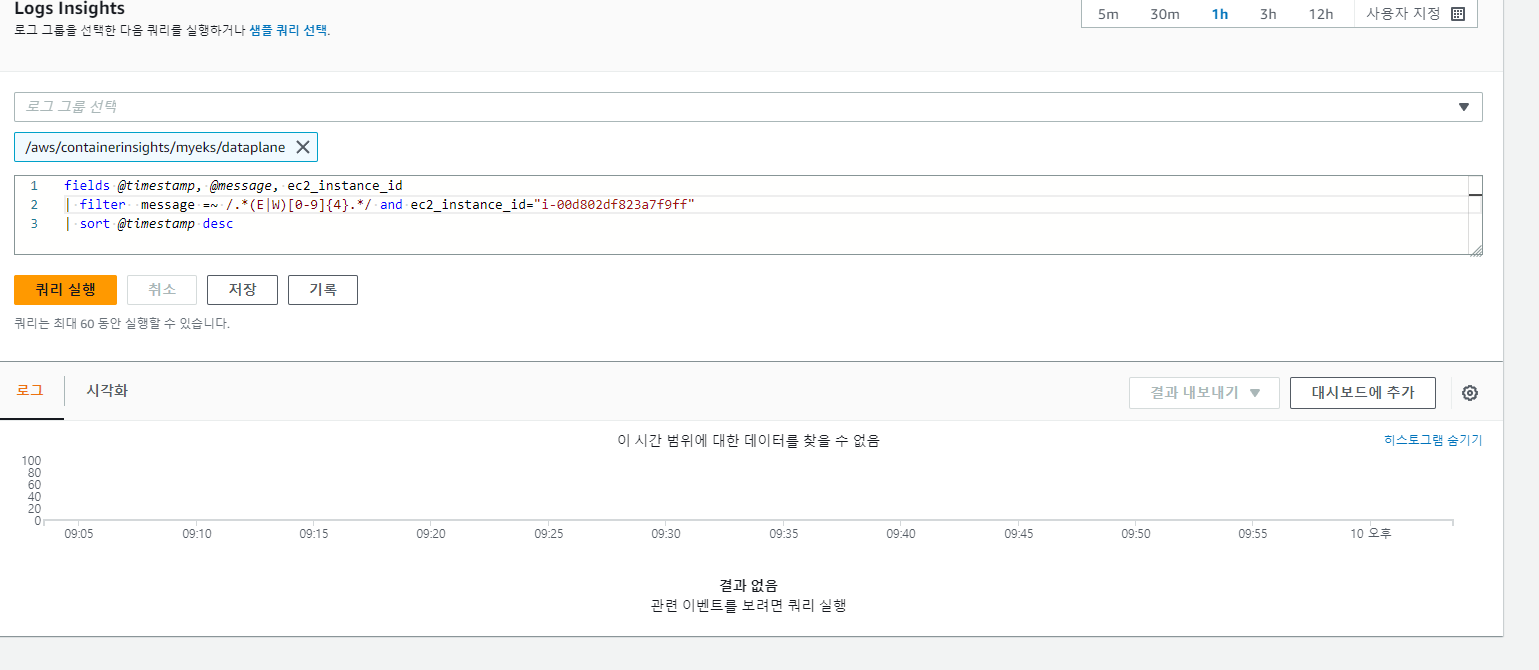

3.4.2 All Kubelet errors/warning logs for for a given EKS worker node

#로그 그룹 선택 : /aws/containerinsights/<CLUSTER_NAME>/dataplane

fields @timestamp, @message, ec2_instance_id

| filter message =~ /.*(E|W)[0-9]{4}.*/ and ec2_instance_id="<YOUR INSTANCE ID>"

| sort @timestamp desc

3.4.3 Kubelet errors/warning count per EKS worker node in the cluster

#로그 그룹 선택 : /aws/containerinsights/<CLUSTER_NAME>/dataplane

fields @timestamp, @message, ec2_instance_id

| filter message =~ /.*(E|W)[0-9]{4}.*/

| stats count(*) as error_count by ec2_instance_id

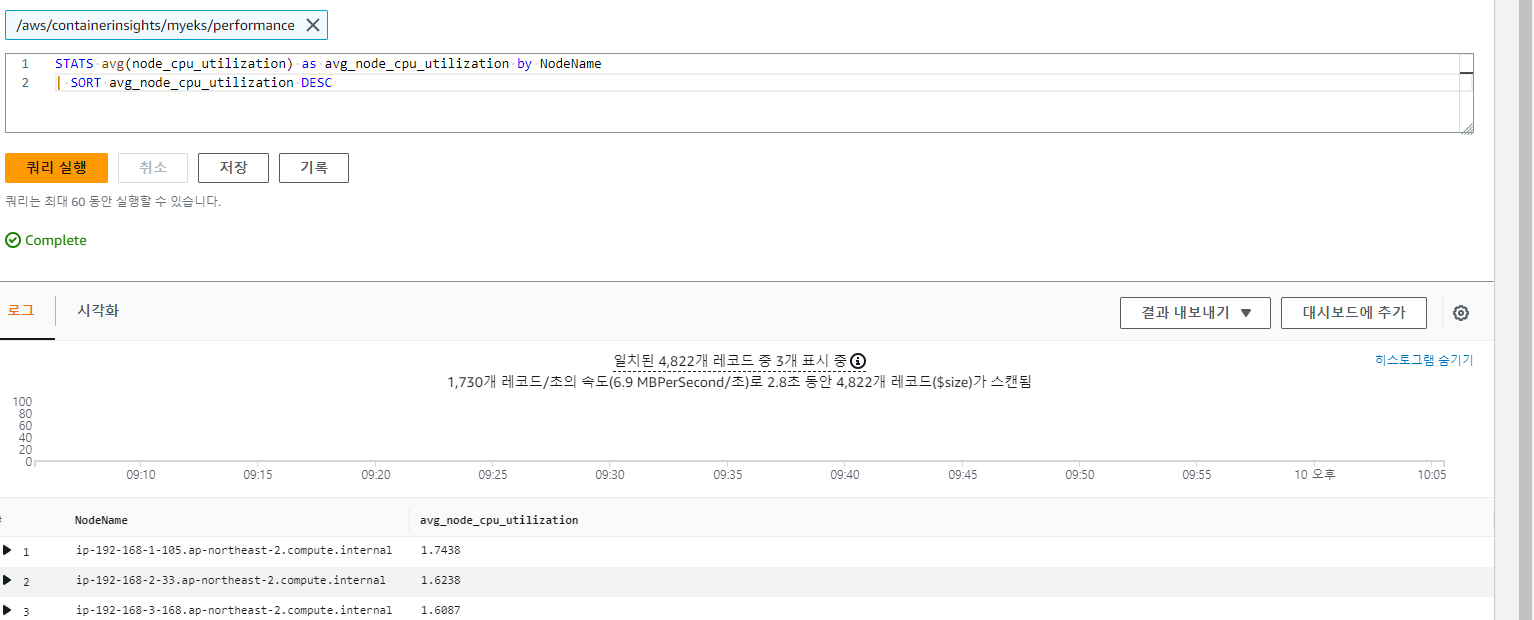

3.4.4 performance 로그 그룹

#로그 그룹 선택 : /aws/containerinsights/<CLUSTER_NAME>/performance

STATS avg(node_cpu_utilization) as avg_node_cpu_utilization by NodeName

| SORT avg_node_cpu_utilization DESC

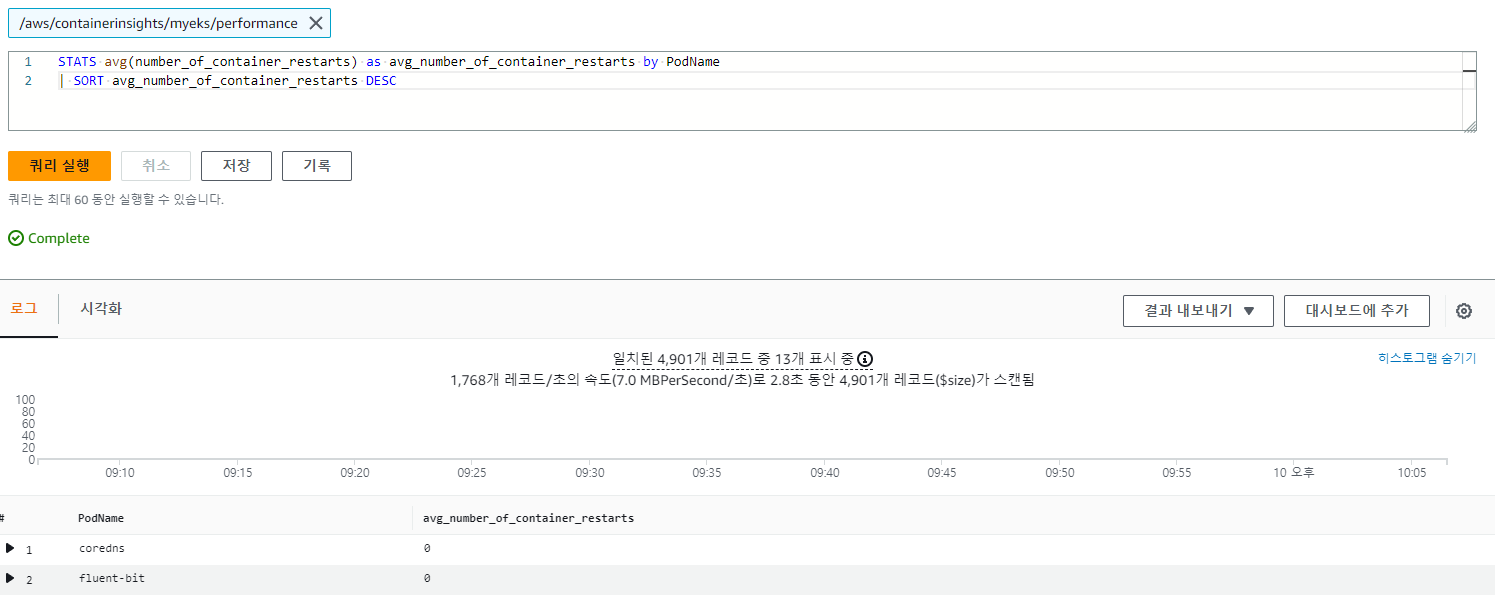

3.4.5 파드별 재시작(restart) 카운트

STATS avg(number_of_container_restarts) as avg_number_of_container_restarts by PodName

| SORT avg_number_of_container_restarts DESC

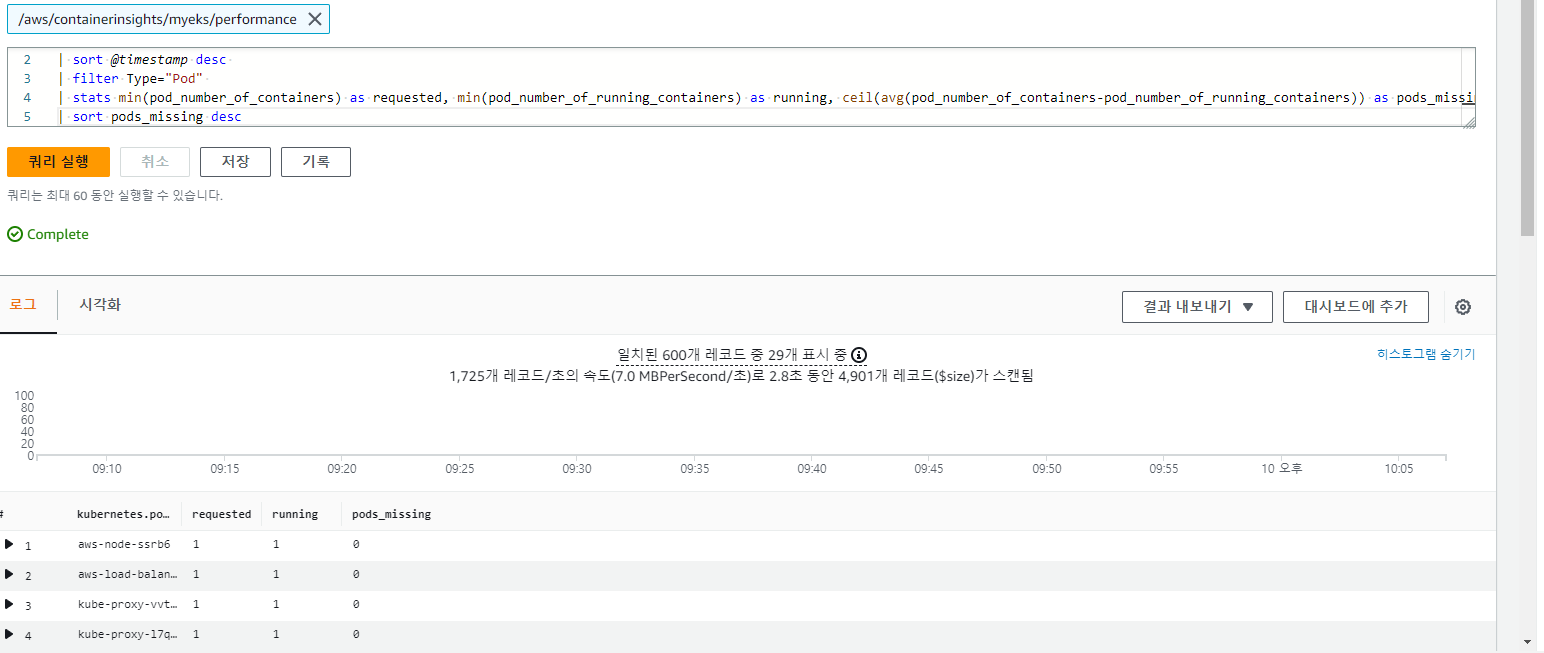

3.4.6 요청된 Pod와 실행 중인 Pod 간 비교

fields @timestamp, @message

| sort @timestamp desc

| filter Type="Pod"

| stats min(pod_number_of_containers) as requested, min(pod_number_of_running_containers) as running, ceil(avg(pod_number_of_containers-pod_number_of_running_containers)) as pods_missing by kubernetes.pod_name

| sort pods_missing desc

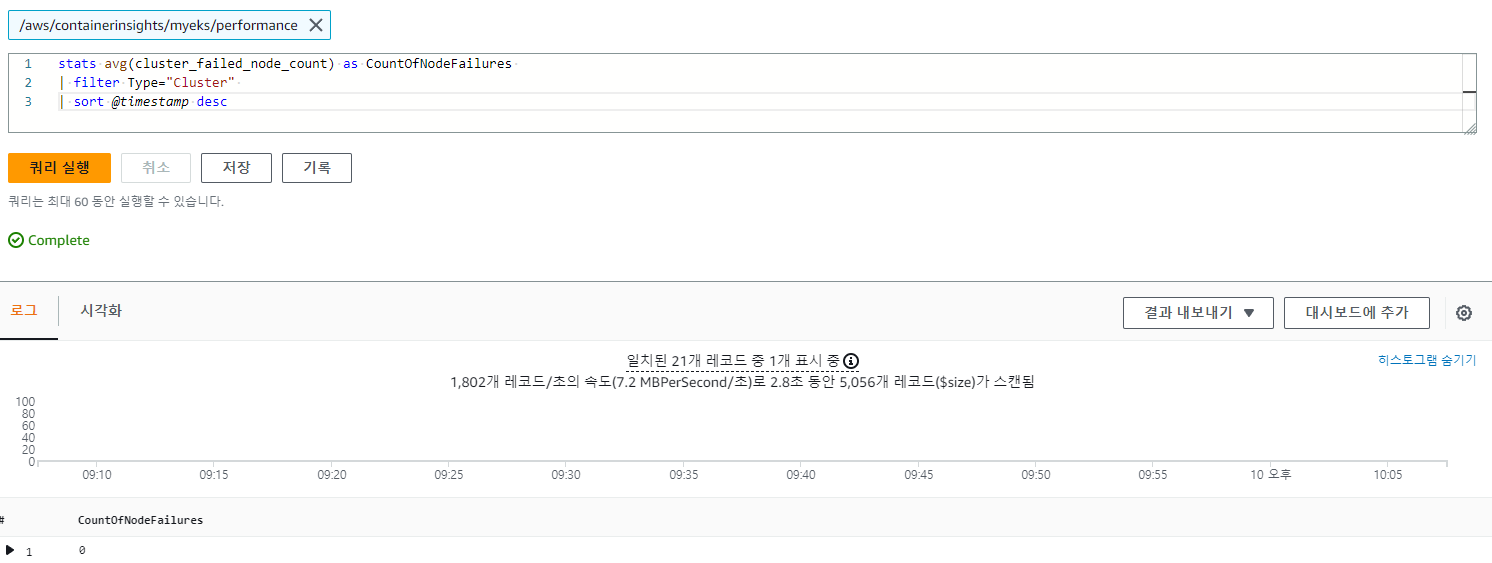

3.4.7 클러스터 노드 실패 횟수

stats avg(cluster_failed_node_count) as CountOfNodeFailures

| filter Type="Cluster"

| sort @timestamp desc

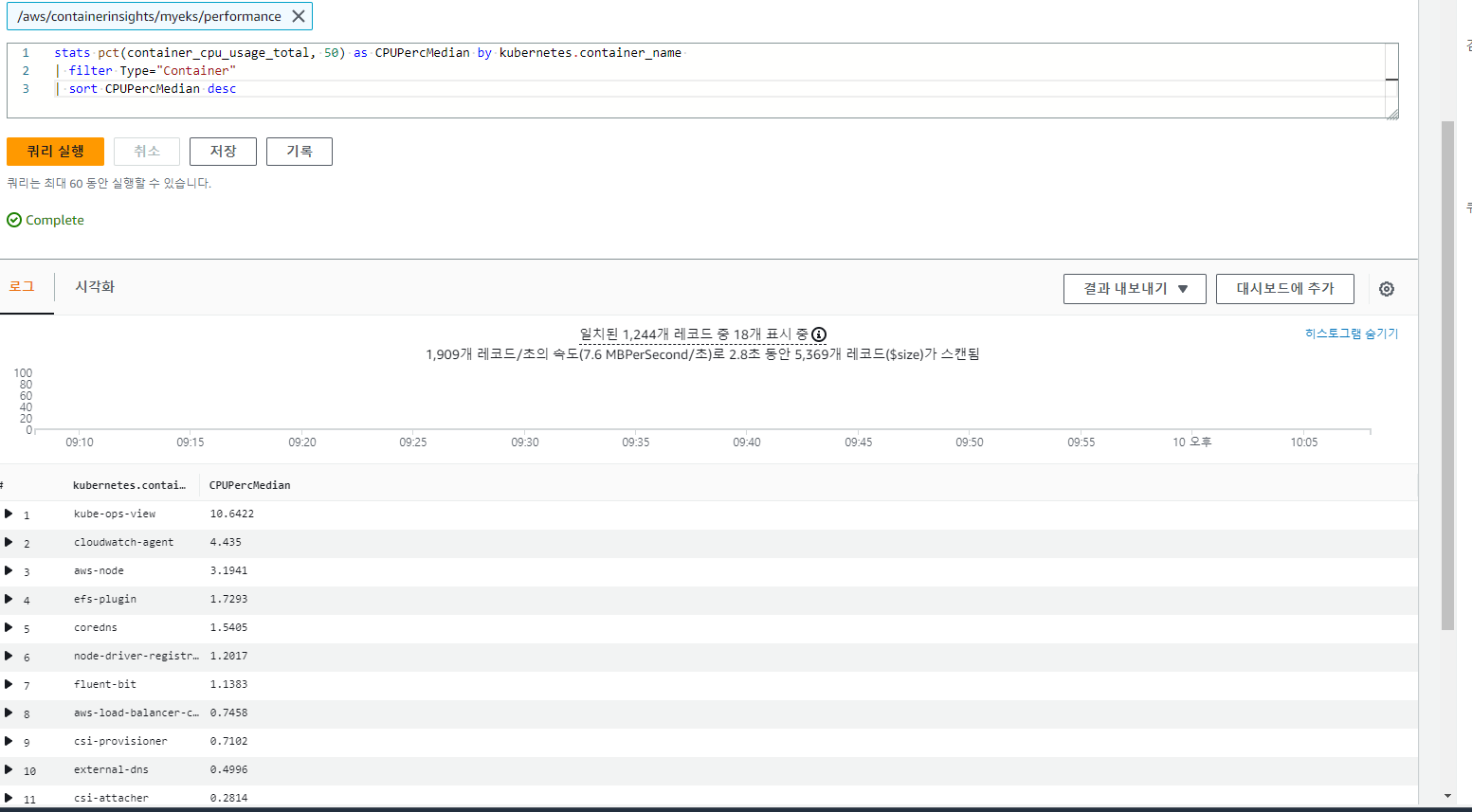

3.4.8 파드별 CPU 사용량

stats pct(container_cpu_usage_total, 50) as CPUPercMedian by kubernetes.container_name

| filter Type="Container"

| sort CPUPercMedian desc

3.5 매트릭 확인

AWS WEB Console에서 Container Insights의 메뉴 확인

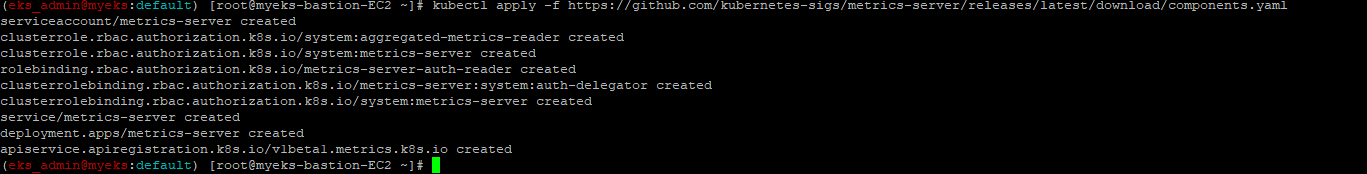

4 Metric-server & kwathch & botkube

4.1 Metric-server

Metric-server는 사용자가 요청한 kubectl top 명령어를 api-server로부터 받아 ,각 Pod(Container)의 자원 사용량을 정보를 취합하는 cAdvisor에서 정보를 요청하는 kubelet에서 정보를 받아 최종적으로 사용자에게 취합 결과를 전달한다.

4.1.1 배포

$> kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

4.1.2 메트릭 서버 확인 : 메트릭은 15초 간격으로 cAdvisor를 통하여 가져옴

$> kubectl get pod -n kube-system -l k8s-app=metrics-server

$> kubectl api-resources | grep metrics

$> kubectl get apiservices |egrep '(AVAILABLE|metrics)'

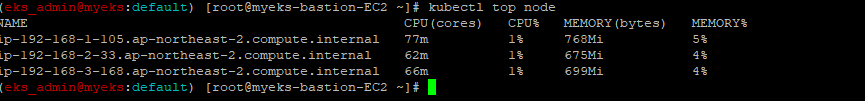

4.1.3 노드 메트릭 확인

kubectl top 명령어는 Nodes or Pods의 자원 소모량을 보여주는 명령어로 자세한 설명은 URL 참조: https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands#top

$> kubectl top node

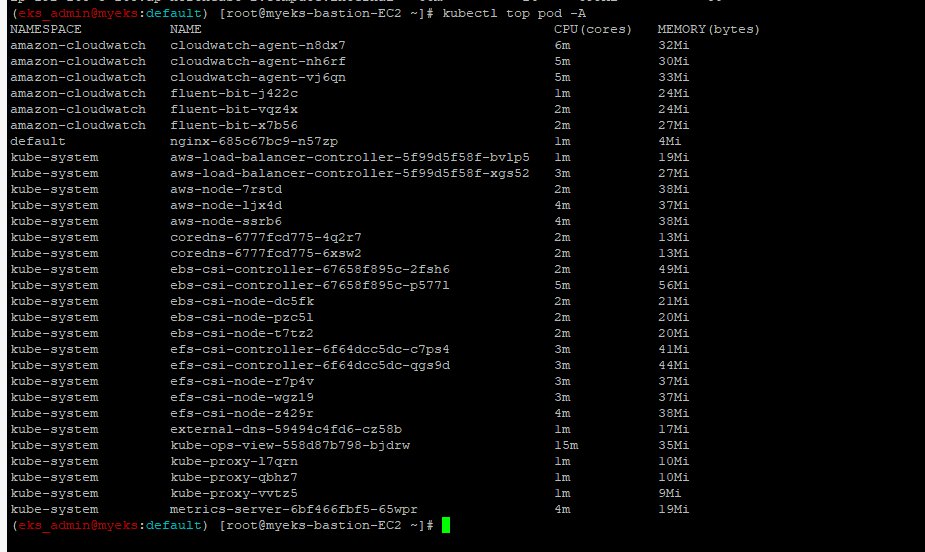

4.1.4 파드 메트릭 확인

$> kubectl top pod -A

$> kubectl top pod -n kube-system --sort-by='cpu'

$> kubectl top pod -n kube-system --sort-by='memory'

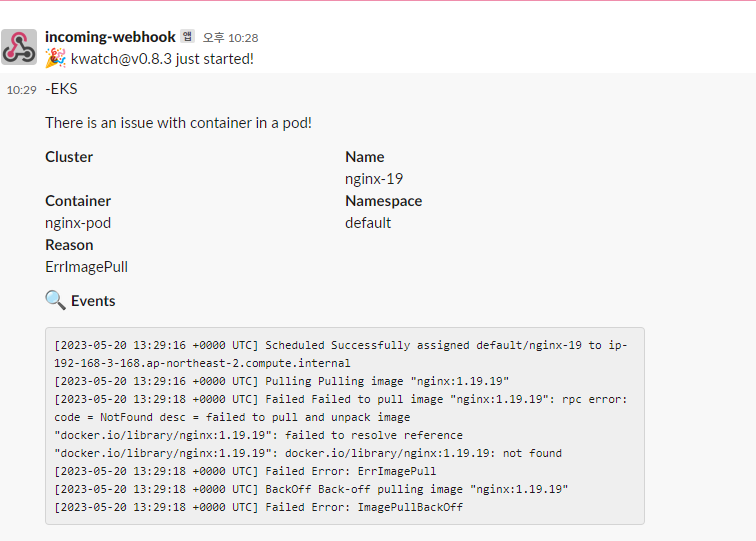

4.2 kwath

kwatch helps you monitor all changes in your Kubernetes(K8s) cluster, detects crashes in your running apps in realtime, and publishes notifications to your channels (Slack, Discord, etc.) instantly

보다 자세한 설명은 URL 참조 : https://kwatch.dev/

4.2.1 배포

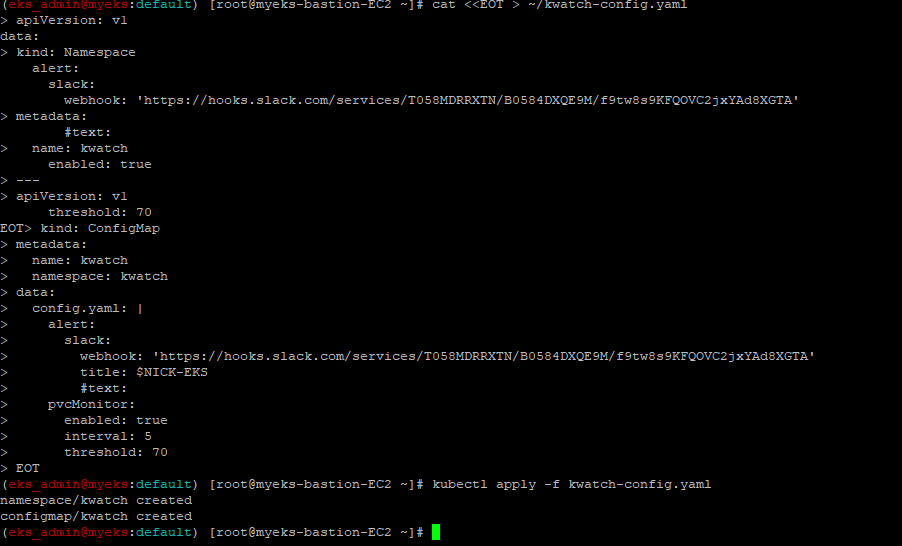

4.2.1.1 kwatch namespace 및 configmap 배포

개인 Slack Channel 백수로 메시지 전송

$> cat <<EOT > ~/kwatch-config.yaml

apiVersion: v1

kind: Namespace

metadata:

name: kwatch

---

apiVersion: v1

kind: ConfigMap

metadata:

name: kwatch

namespace: kwatch

data:

config.yaml: |

alert:

slack:

webhook: 'https://hooks.slack.com/services/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'

title: $NICK-EKS

#text:

pvcMonitor:

enabled: true

interval: 5

threshold: 70

EOT

$> kubectl apply -f kwatch-config.yaml

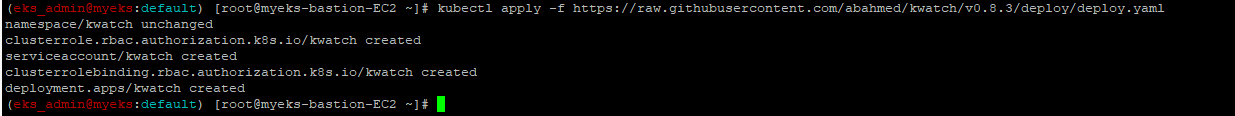

4.2.1.2 배포

$> kubectl apply -f https://raw.githubusercontent.com/abahmed/kwatch/v0.8.3/deploy/deploy.yaml

4.2.2 잘못된 이미지 파드 배포 시험으로 확인

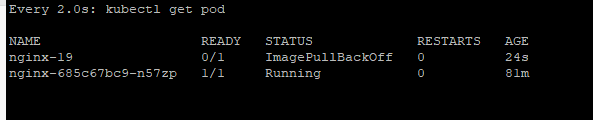

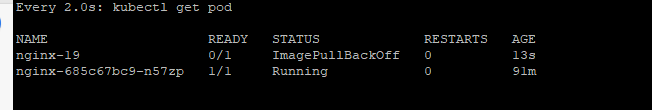

4.2.2.1 Pod 상태 확인_별도 Terminal

$> watch kubectl get pod

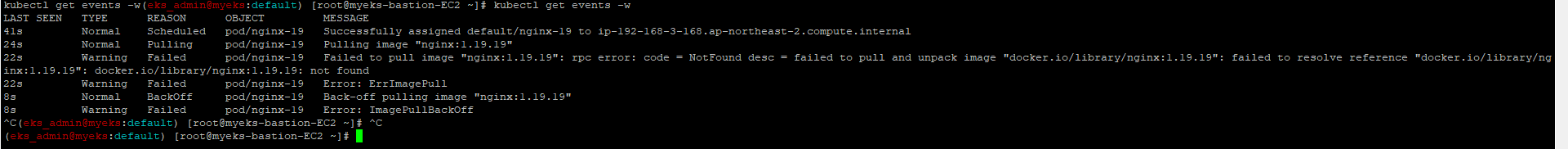

4.2.2.2 잘못된 이미지 정보의 파드 배포

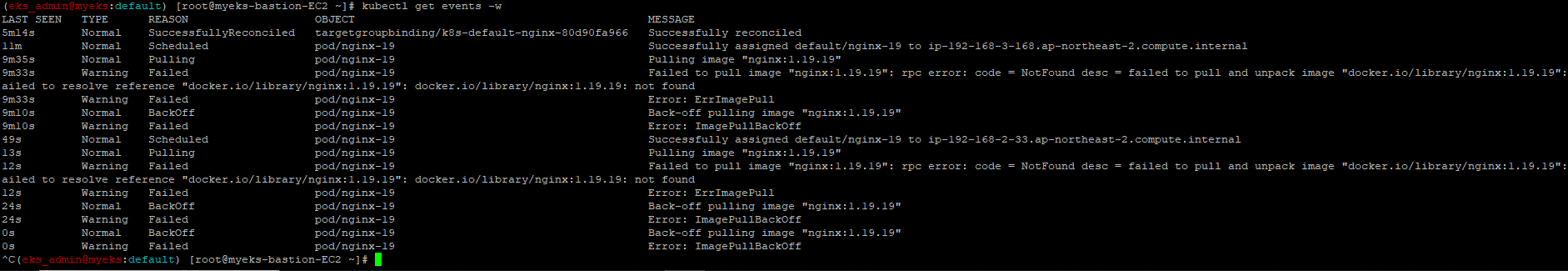

$> kubectl apply -f https://raw.githubusercontent.com/junghoon2/kube-books/main/ch05/nginx-error-pod.yml

$> kubectl get events -w

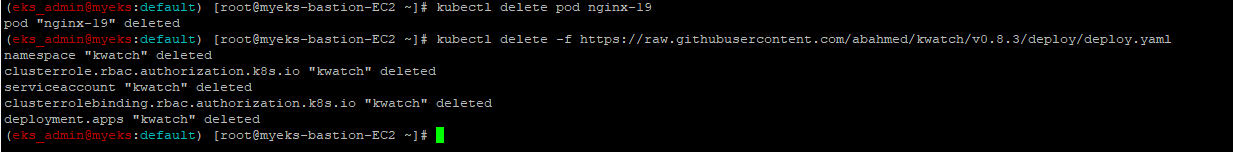

4.2.2.3 pod 삭제

$> kubectl delete pod nginx-194.2.3 kwatch 삭제

$> kubectl delete -f https://raw.githubusercontent.com/abahmed/kwatch/v0.8.3/deploy/deploy.yaml

4.3 Botkube

BotKube는 Kubernetes 클러스터 모니터링 및 디버깅을위한 메시징 봇이며, Slack, Mattermost, Microsoft Teams 등을 지원하고 메신저에서 kubectl 을 통한 명령도 가능

보다 자세한 사항은 URL 참조 : https://botkube.io/

4.3.1 slack app 설정_SLACK_API_BOT_TOKEN 과 SLACK_API_APP_TOKEN 생성

아래 TOKEN 정보는 블로그에서는 보이지 않게 표시

자세한 사항은 URL 참조 : https://docs.botkube.io/installation/slack/

$> export SLACK_API_BOT_TOKEN='xoxb-5293467881940-5291085511123-Mnm7wL6XNNyOJIlgwHcYbNuM'

$> export SLACK_API_APP_TOKEN='xapp-1-A058XQW2VQR-5291233622130-93fad117387b75eb1b3662b63c851ed414f1ca2cafc2d88201016047311c969e'4.3.2 설치

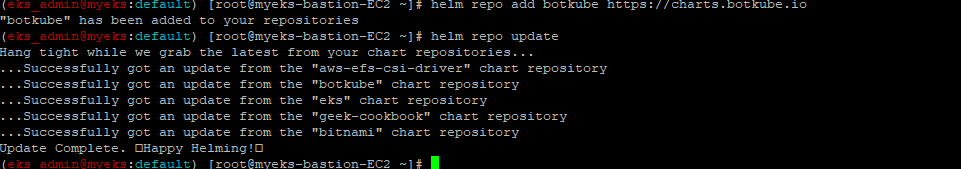

4.3.2.1 Botkube Helm repo 추가

$> helm repo add botkube https://charts.botkube.io

$> helm repo update

4.3.2.2 관련 Helm Parameter 지정

Botkube helm chart 관련 상세한 Parmaeter 부분은 URL 참조 : https://docs.botkube.io/next/configuration/helm-chart-parameters/

#이건 뭔지 모르겠네..

$> export ALLOW_KUBECTL=true

# enables `helm` commands execution

$> export ALLOW_HELM=true

#Slack channel name without '#' prefix where you have added Botkube and want to receive notifications in

$> export SLACK_CHANNEL_NAME=백수들4.3.2.3 Helm Chart Config File 생성

$> cat <<EOT > botkube-values.yaml

actions:

'describe-created-resource': # kubectl describe

enabled: true

'show-logs-on-error': # kubectl logs

enabled: true

executors:

k8s-default-tools:

botkube/helm:

enabled: true

botkube/kubectl:

enabled: true

EOT4.3.2.4 설치(배포)

$> helm install --version v1.0.0 botkube --namespace botkube --create-namespace \

--set communications.default-group.socketSlack.enabled=true \

--set communications.default-group.socketSlack.channels.default.name=${SLACK_CHANNEL_NAME} \

--set communications.default-group.socketSlack.appToken=${SLACK_API_APP_TOKEN} \

--set communications.default-group.socketSlack.botToken=${SLACK_API_BOT_TOKEN} \

--set settings.clusterName=${CLUSTER_NAME} \

--set 'executors.k8s-default-tools.botkube/kubectl.enabled'=${ALLOW_KUBECTL} \

--set 'executors.k8s-default-tools.botkube/helm.enabled'=${ALLOW_HELM} \

-f botkube-values.yaml botkube/botkube

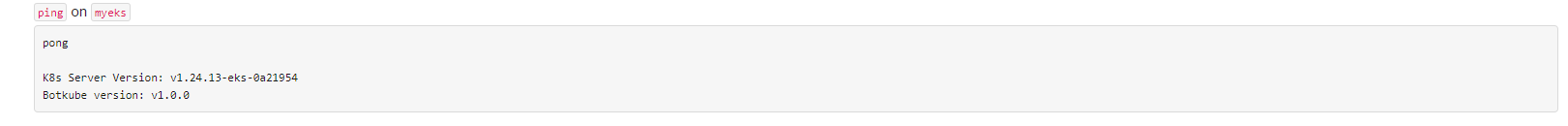

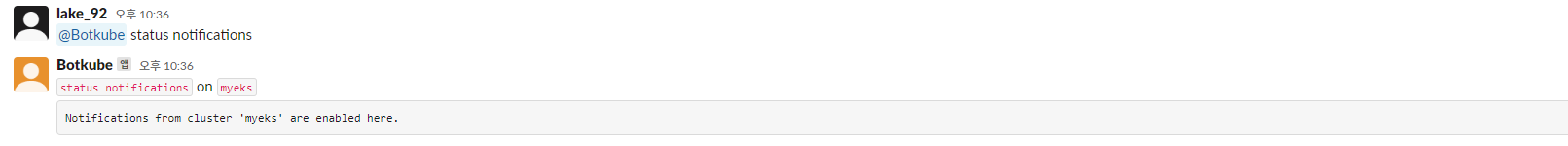

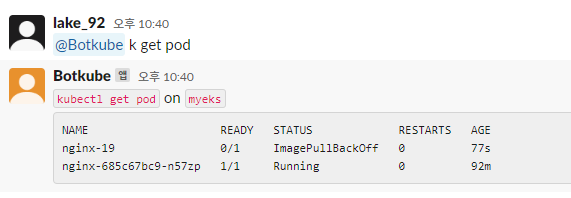

4.3.3 사용 on slack 백수들 channel

4.3.3.1 연결 상태, notifications 상태 확인

@Botkube ping

@Botkube status notifications

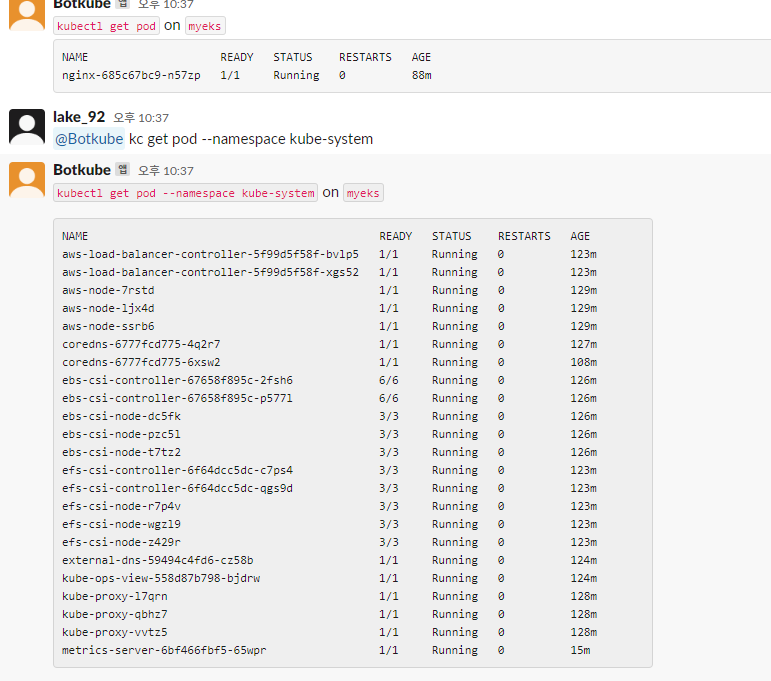

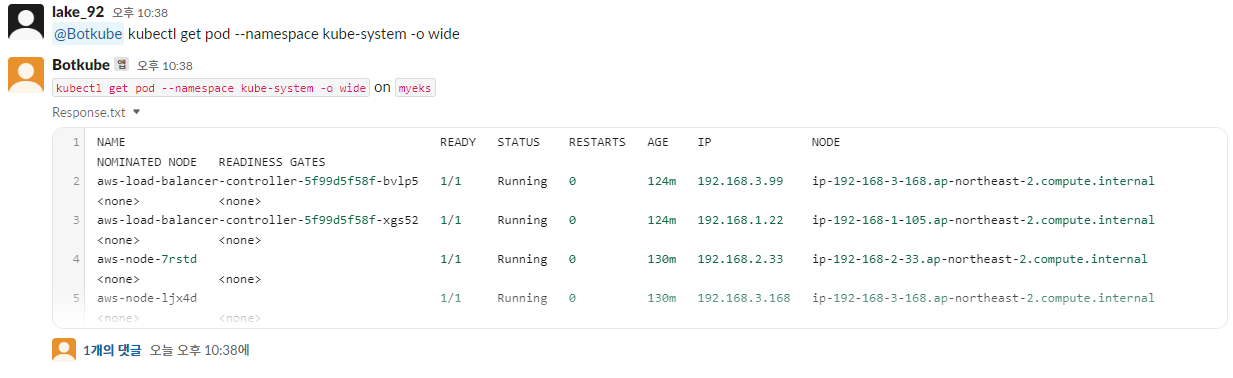

4.3.3.2 파드 정보 조회

@Botkube k get pod

@Botkube kc get pod --namespace kube-system

@Botkube kubectl get pod --namespace kube-system -o wide

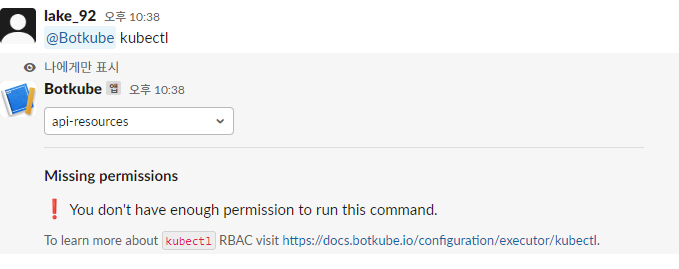

4.3.3.3 Actionable notifications

@Botkube kubectl

4.3.4 잘못된 이미지 파드 배포 및 확인

4.3.4.1 Pod 상태 감시_별도 Terminal

$> watch kubectl get pod

4.3.4.2 잘못된 이미지 정보의 파드 배포

$> kubectl apply -f https://raw.githubusercontent.com/junghoon2/kube-books/main/ch05/nginx-error-pod.yml

$> kubectl get events -w

@Botkube k get pod

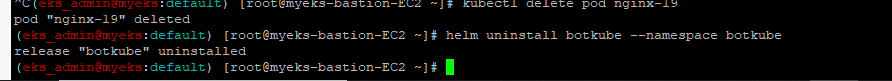

4.3.4.3 Pod 삭제

$> kubectl delete pod nginx-194.3.4.4 botkube helm chart 삭제

$> helm uninstall botkube --namespace botkube

5 Prometheus

5.1 소개

kubenetis system의 자원의 상태를 감시하고 alert 메시지를 발생 시키는 open source monitoring solution

service discvery : 모니터링 대상을 service endpoint로 등록하여, 자동으로 변경 내역을 감지

pull 방식 : prometheus server가 모니터링 대상의 정보를 pull 방식으로 획득

TSDB(Time-series database) 사용 :감시 대상의 Metric 데이터를 시간과 값이 한쌍을 이루는 데이터 형태로 관리하여 시간 순차적으로 저장하는 시계열 데이터 베이스를 사용

자체 검색 언어 PromQL 제공 : 다양한 데이터 조회가 가능하며 , 이를 그래프로 표시가 가능

5.2 Prometheus 설치

모니터링에 필요한 여러 요소를 스택(단일 Chart)으로 제공

5.2.1 monitoring namespace 생성 및 관련 서비스 생성 모니터링

$> kubectl create ns monitoring

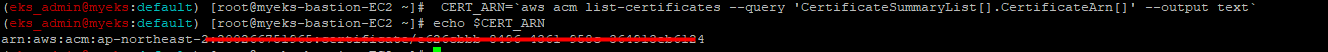

$>watch kubectl get pod,pvc,svc,ingress -n monitoring 5.2.2 인증서 ARN 확인

$> CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

$> echo $CERT_ARN

5.2.3 prometheus,grafana helm repo 추가

$> helm repo add prometheus-community https://prometheus-community.github.io/helm-charts5.2.4 helm chart config yaml file 생성

$> cat <<EOT > monitor-values.yaml

prometheus:

prometheusSpec:

podMonitorSelectorNilUsesHelmValues: false

serviceMonitorSelectorNilUsesHelmValues: false

retention: 5d

retentionSize: "10GiB"

ingress:

enabled: true

ingressClassName: alb

hosts:

- prometheus.$MyDomain

paths:

- /*

annotations:

alb.ingress.kubernetes.io/scheme: internet-facing

alb.ingress.kubernetes.io/target-type: ip

alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

alb.ingress.kubernetes.io/success-codes: 200-399

alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

alb.ingress.kubernetes.io/group.name: study

alb.ingress.kubernetes.io/ssl-redirect: '443'

grafana:

defaultDashboardsTimezone: Asia/Seoul

adminPassword: prom-operator

ingress:

enabled: true

ingressClassName: alb

hosts:

- grafana.$MyDomain

paths:

- /*

annotations:

alb.ingress.kubernetes.io/scheme: internet-facing

alb.ingress.kubernetes.io/target-type: ip

alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

alb.ingress.kubernetes.io/success-codes: 200-399

alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

alb.ingress.kubernetes.io/group.name: study

alb.ingress.kubernetes.io/ssl-redirect: '443'

defaultRules:

create: false

kubeControllerManager:

enabled: false

kubeEtcd:

enabled: false

kubeScheduler:

enabled: false

alertmanager:

enabled: false

# alertmanager:

# ingress:

# enabled: true

# ingressClassName: alb

# hosts:

# - alertmanager.$MyDomain

# paths:

# - /*

# annotations:

# alb.ingress.kubernetes.io/scheme: internet-facing

# alb.ingress.kubernetes.io/target-type: ip

# alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

# alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

# alb.ingress.kubernetes.io/success-codes: 200-399

# alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

# alb.ingress.kubernetes.io/group.name: study

# alb.ingress.kubernetes.io/ssl-redirect: '443'

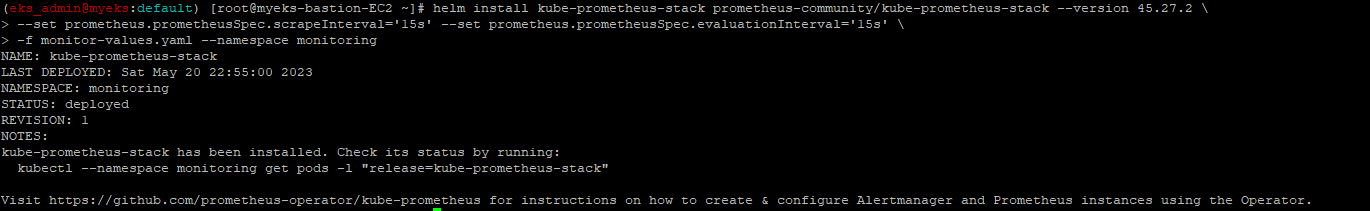

EOT5.2.5 prometheus,grafana 배포

$> helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 45.27.2 \

--set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' \

-f monitor-values.yaml --namespace monitoring

5.2.6 배포 확인

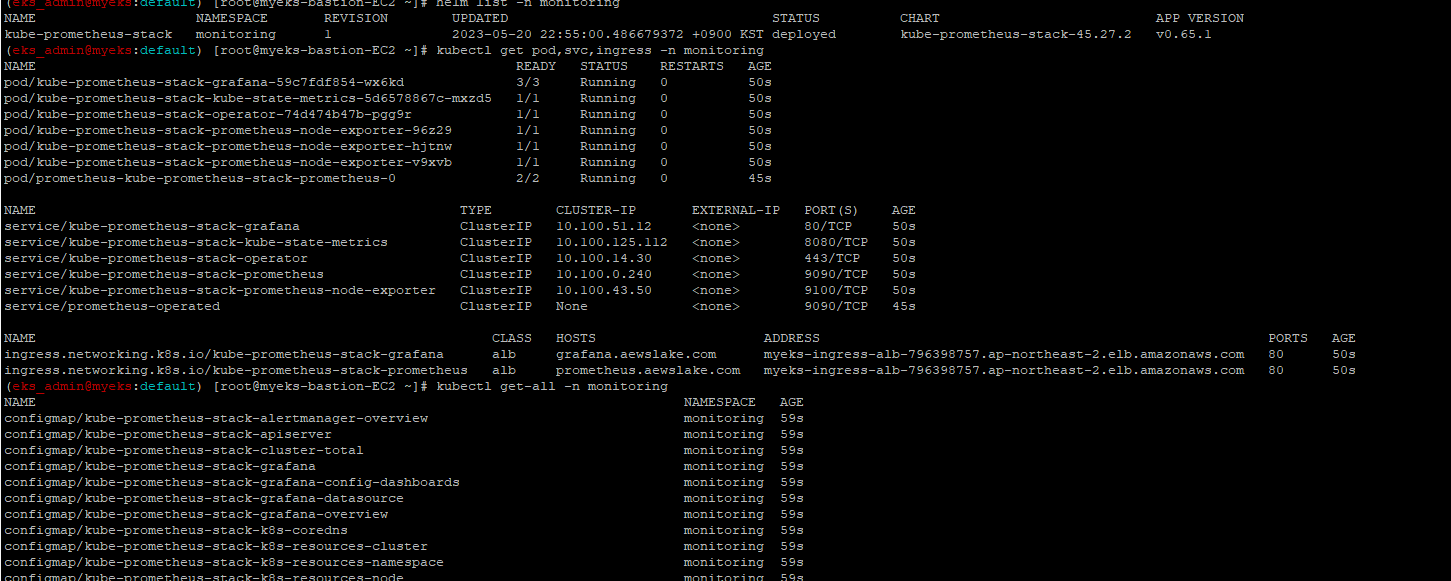

$> helm list -n monitoring

$> kubectl get pod,svc,ingress -n monitoring

$> kubectl get-all -n monitoring

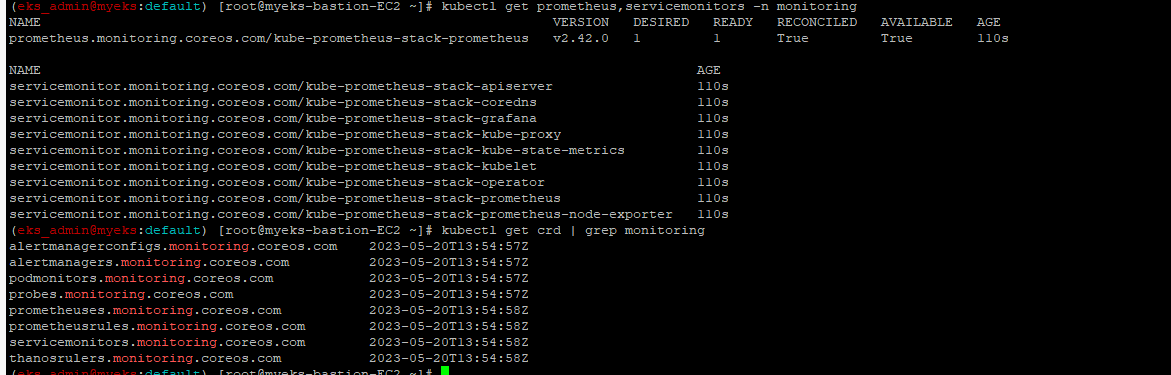

$> kubectl get prometheus,servicemonitors -n monitoring

$> kubectl get crd | grep monitoring

5.2.7 Prometheus 기본 사용

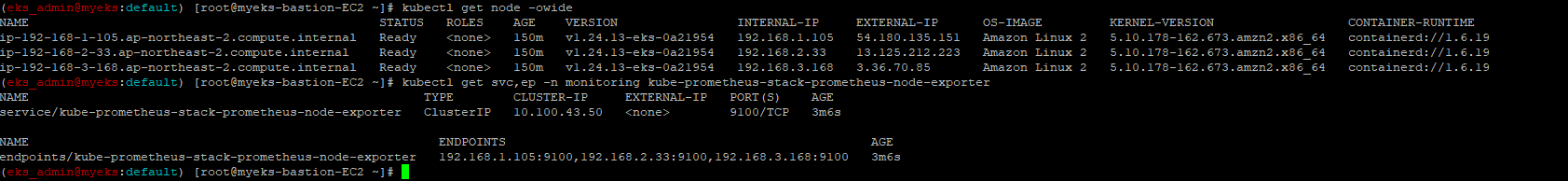

Promethus Server는 각 감시 대상의 Node에 HTTP /metrics:9100 GET 방식으로 Metric 정보를 조회하여 TSDB에 저장

$> kubectl get node -owide

$> kubectl get svc,ep -n monitoring kube-prometheus-stack-prometheus-node-exporter

5.2.8 각 Node의 9100 Port로 접속하여 Metric 정보 조회

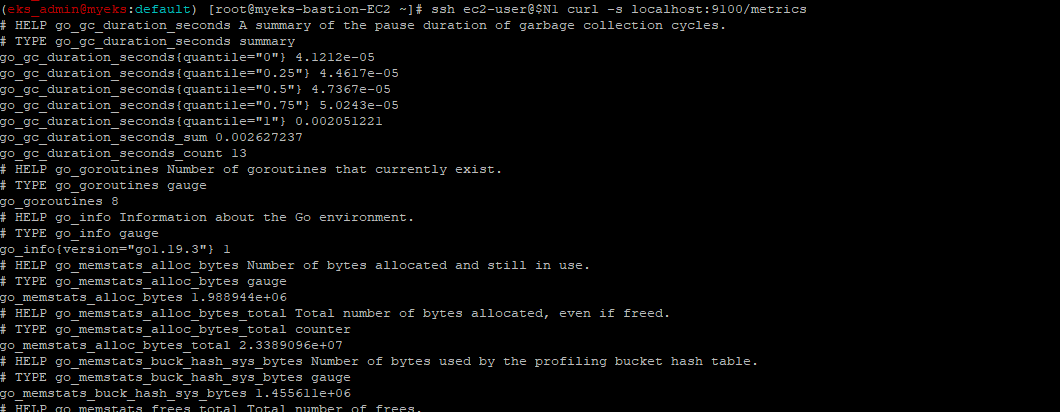

$> ssh ec2-user@$N1 curl -s localhost:9100/metrics

5.3 프로메테우스 ingress 도메인으로 웹 접속

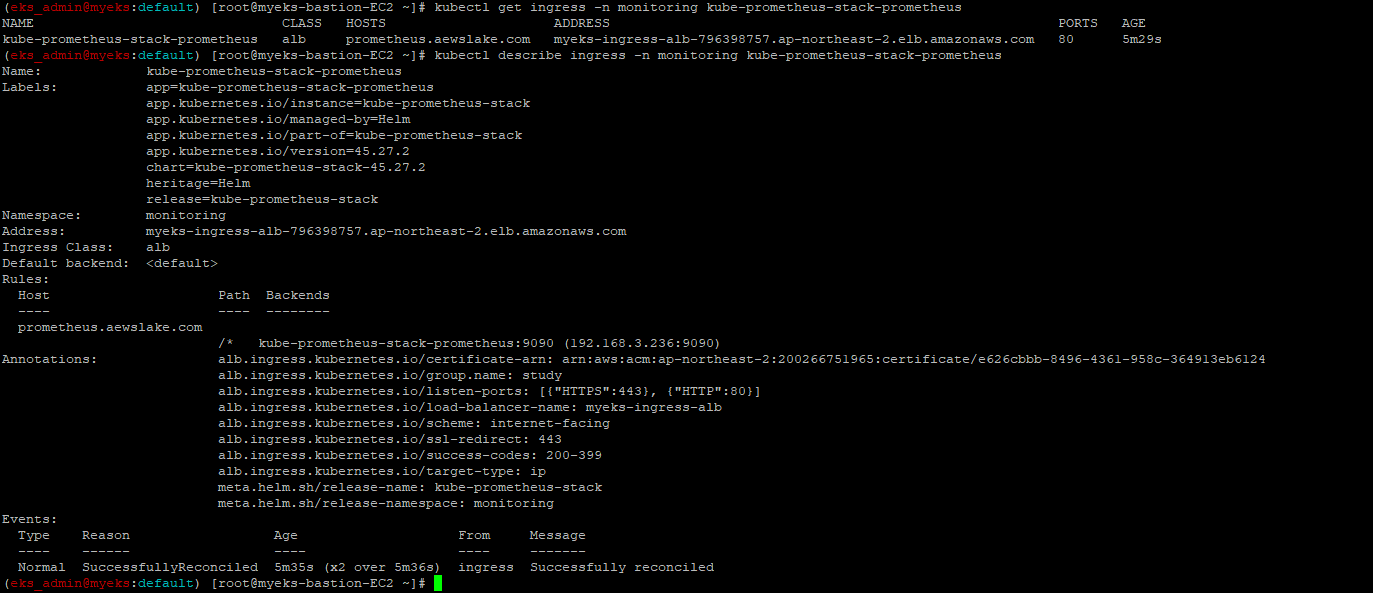

5.3.1 ingress 확인

$> kubectl get ingress -n monitoring kube-prometheus-stack-prometheus

$> kubectl describe ingress -n monitoring kube-prometheus-stack-prometheus

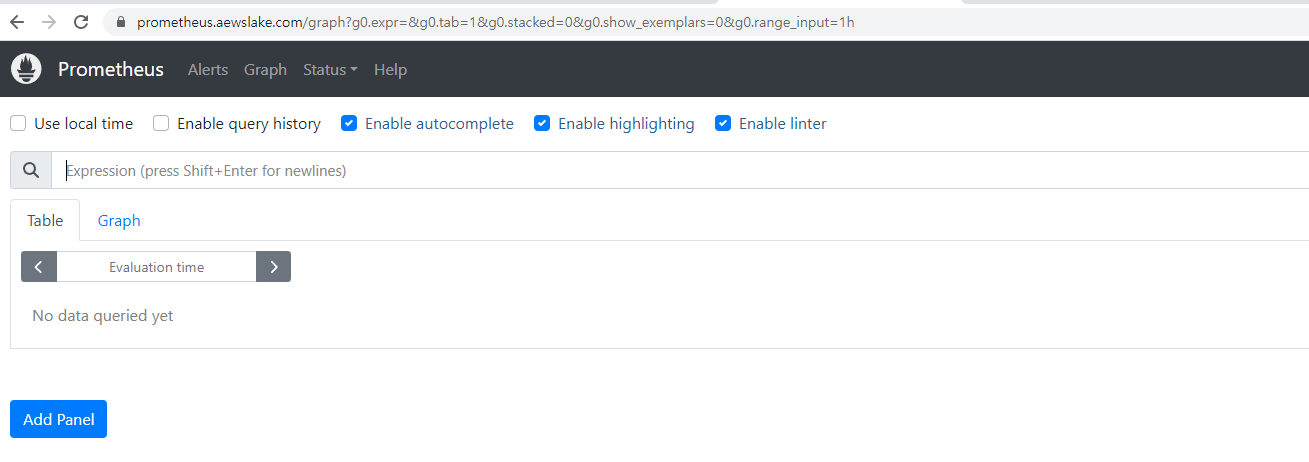

5.3.2 웹 접속

$> echo -e "Prometheus Web URL = https://prometheus.$MyDomain"

6 Grafana

6.1 소개

TSDB 데이타를 시각화, Alert 메시지 생성, 사용자 Dashboard 생성

Grafana는 자체 DB를 가지지 않으며, 이번 실습에서는 Promethus에서 생성한 TSDB를 참조

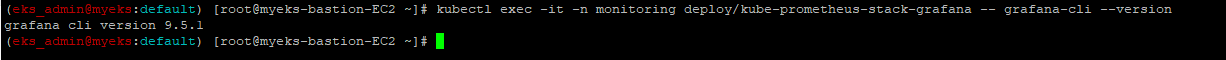

6.1.1 Grafana Version 확인

$> kubectl exec -it -n monitoring deploy/kube-prometheus-stack-grafana -- grafana-cli --version

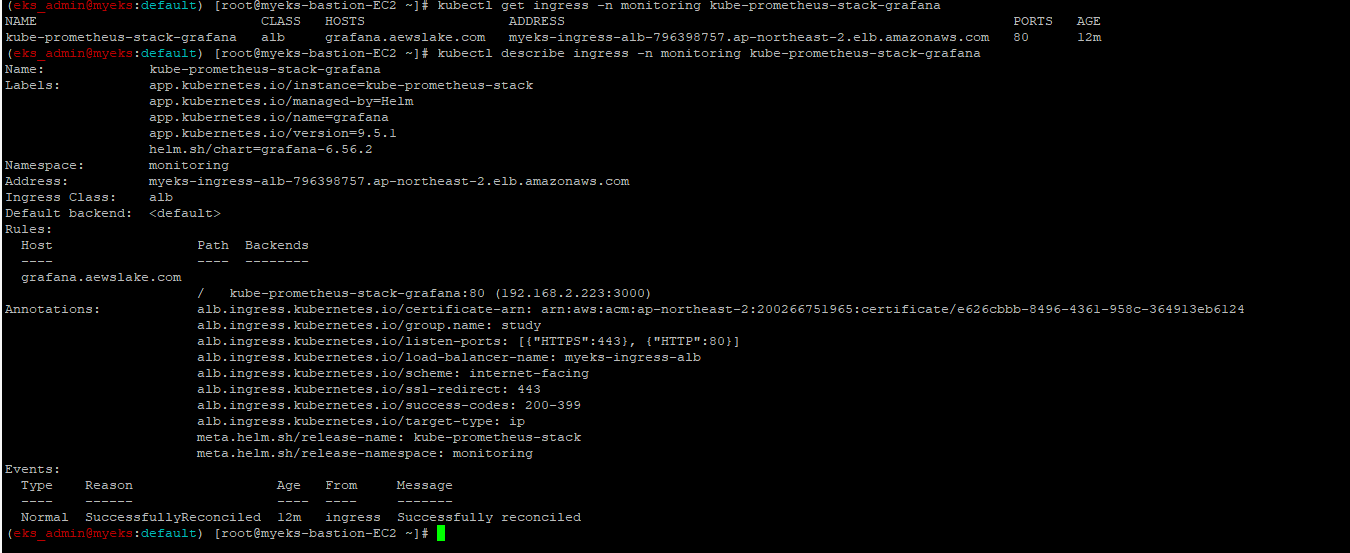

6.1.2 ingress 확인

$> kubectl get ingress -n monitoring kube-prometheus-stack-grafana

$> kubectl describe ingress -n monitoring kube-prometheus-stack-grafana

6.1.3 ingress Domain으로 WEB 접속

MyDomain"

6.2 NGINX 웹서버 감시

6.2.1 감시 방법

- NGINX 배포시 프로메테우스 Exporter option을 추가하면 자동으로 NGINX를 프로메테우스 모니터링에 등록, 만약 기존 Application에 추가할려면 사이트카 방식으로 프로메테우스 Exporter를 추가

- 프로메테우스 설정에서 서비스 모니터 CRD를 이용하여 NGINX 모니터링을 등록

- Grafana에서 NGINX 관련항목을 추가하여 새로운 Dashboard 생성

6.2.2 프로메테우스 Exporter 추가를 위한 Config Yaml 생성

cat <<EOT > ~/nginx_metric-values.yaml

metrics:

enabled: true

service:

port: 9113

serviceMonitor:

enabled: true

namespace: monitoring

interval: 10s

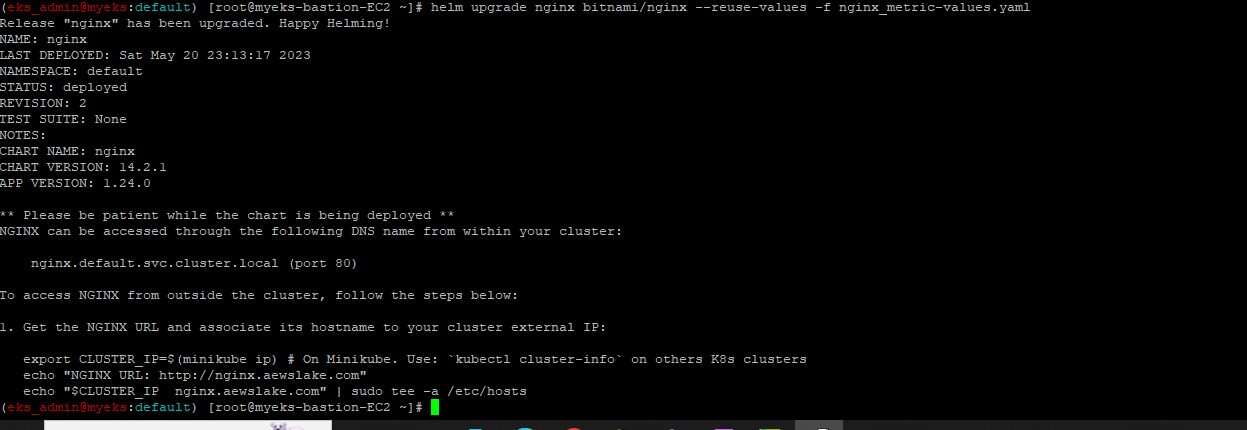

EOT6.2.3 NGINX 재 배포

$> helm upgrade nginx bitnami/nginx --reuse-values -f nginx_metric-values.yaml

6.2.4 NGINX 배포 확인

$> kubectl get pod,svc,ep

```

$> kubectl get servicemonitor -n monitoring nginx

$> kubectl get servicemonitor -n monitoring nginx -o json | jq

### 6.2.5 nginx 파드내에 컨테이너 갯수 확인

promethus exporter container가 sidecar 형식으로 추가가 되어 container 갯수가 2개$> kubectl get pod -l app.kubernetes.io/instance=nginx

$> kubectl describe pod -l app.kubernetes.io/instance=nginx

### 6.2.6 메트릭 확인 >> 프로메테우스에서 Target 확인(kubectl get pod -l app.kubernetes.io/instance=nginx -o jsonpath={.items[0].status.podIP})

NGINXIP:9113/metrics

NGINXIP:9113/metrics | grep ^nginx_connections_active

### 6.2.7 NGINX 접속 및 접속 로그 확인MyDomain"

MyDomain

$> kubectl logs deploy/nginx -f

## 6.3 Promethus Dashboard에 Nginx Target 등록

상위 메뉴 Status -> Targets 메뉴 선택후