Overview 🦜️🔗

The previous post covered Model I/O, LCEL, Prompt, Memory, and related topics. This post uses RAG to supply an external document and builds a chat model that can look up information in it.

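What is RAG?

RAG (Retrieval-Augmented Generation) is a technique that has an AI model consult external documents beyond its pre-training data in order to improve accuracy and reliability. This lets the model provide up-to-date information or accurate information tailored to the user.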

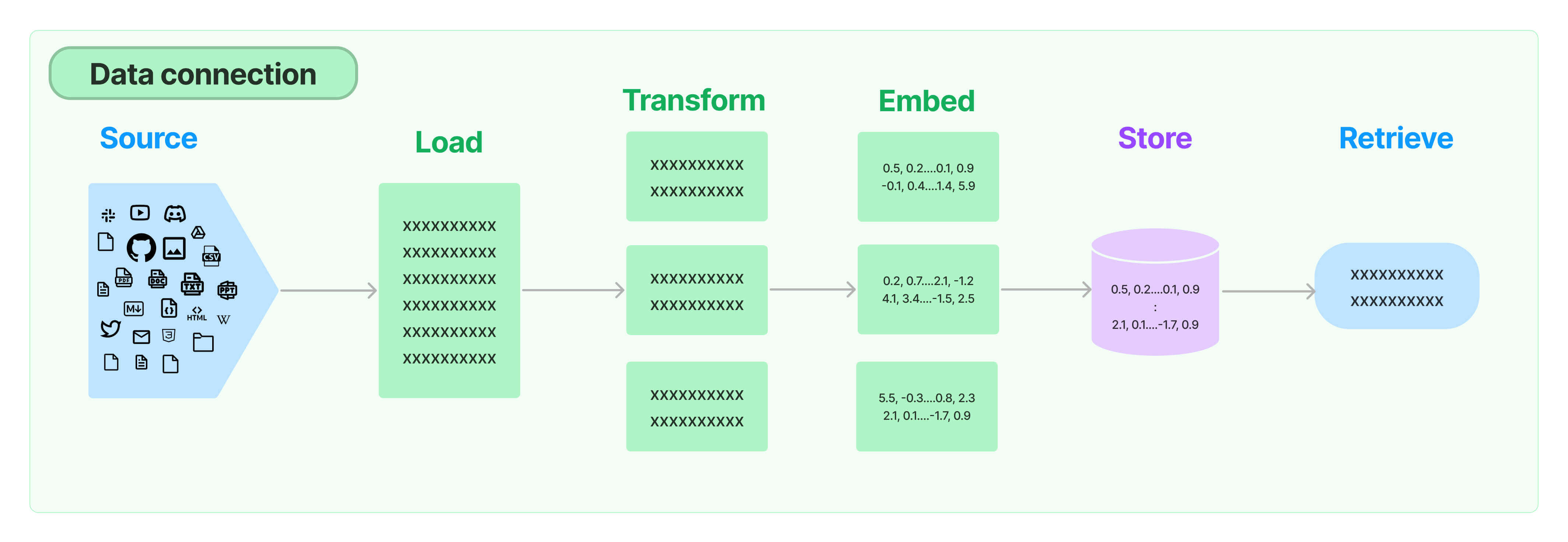

In LangChain, RAG can be implemented through roughly the following steps.

Source data -> load -> split -> embed -> store in a vector space (vector store & retriever)

Code

from langchain.chat_models import ChatOpenAI
from langchain.document_loaders import UnstructuredFileLoader
from langchain.text_splitter import CharacterTextSplitter
from langchain.embeddings import OpenAIEmbeddings, CacheBackedEmbeddings
from langchain.vectorstores import FAISS
from langchain.storage import LocalFileStore
from langchain.prompts import ChatPromptTemplate
from langchain.schema.runnable import RunnablePassthrough

llm = ChatOpenAI(
    temperature=0.1,
)

# Embedding results are cached on disk under this directory.
cache_dir = LocalFileStore("./.cache/practice/")

# Split on line breaks; chunk sizes are measured in tokens.
splitter = CharacterTextSplitter.from_tiktoken_encoder(
    separator="\n",
    chunk_size=600,
    chunk_overlap=100,
)

loader = UnstructuredFileLoader("./files/운수 좋은 날.txt")
docs = loader.load_and_split(text_splitter=splitter)

embeddings = OpenAIEmbeddings()
cached_embeddings = CacheBackedEmbeddings.from_bytes_store(embeddings, cache_dir)

vectorstore = FAISS.from_documents(docs, cached_embeddings)
retriever = vectorstore.as_retriever()

prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            """
            You are a helpful assistant.
            Answer questions using only the following context.
            If you don't know the answer just say you don't know, don't make it up:
            \n\n
            {context}
            """,
        ),
        ("human", "{question}"),
    ]
)

# The retriever fills {context}; the question is passed through unchanged.
chain = (
    {
        "context": retriever,
        "question": RunnablePassthrough(),
    }
    | prompt
    | llm
)

# Query: "Where did Kim Cheomji take the student?"
result = chain.invoke("김첨지는 학생을 어디로 데려다 주었나?")
print(result)

Output

content='김첨지는 학생을 남대문 정거장까지 데려다 주었습니다.'

Loading & Splitting

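If the full text of a document is passed to the LLM, the prompt can become excessively long. Accuracy drops, responses slow down, and cost goes up. The document therefore needs to be split so that only the relevant parts are passed along. However, splitting into units so small that context or meaning is damaged is also undesirable, so choosing an appropriate chunk size matters.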

splitter = CharacterTextSplitter.from_tiktoken_encoder(
    separator="\n",
    chunk_size=600,
    chunk_overlap=100,
)

loader = UnstructuredFileLoader("./files/운수 좋은 날.txt")
docs = loader.load_and_split(text_splitter=splitter)

CharacterTextSplitter

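Declare the splitter used to split the document. LangChain provides several kinds of splitters (Recursive, HTML, Markdown, and others); Character is used here. CharacterTextSplitter splits the document on a separator specified by the user.

Parameters

- separator: the string on which the document is split. Here the document is split on "\n" (line break).

- chunk_size: the maximum size of one chunk. Because the splitter is created with from_tiktoken_encoder, the size is measured in tokens rather than characters.

- chunk_overlap: each chunk repeats up to 100 tokens from the neighboring chunk. This mitigates the problem of the document being cut at a point that breaks the context.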
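To make the relationship between chunk_size and chunk_overlap concrete, here is a minimal sketch of a sliding window over a list of tokens. The function name sliding_chunks is hypothetical, and this is a simplification: the real CharacterTextSplitter first splits on the separator and then merges the pieces, so it is not the library's actual algorithm.

```python
def sliding_chunks(tokens, chunk_size, chunk_overlap):
    """Split a token list into windows of at most chunk_size tokens.

    Each window starts by repeating the last chunk_overlap tokens
    of the previous window.
    """
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the input
    return chunks


# Integers stand in for tokens so the overlap is easy to see.
tokens = list(range(10))
chunks = sliding_chunks(tokens, chunk_size=4, chunk_overlap=1)
print(chunks)
```

With chunk_size=4 and chunk_overlap=1, the last token of each chunk reappears as the first token of the next one, which is the same idea as the 600/100 setting used in this post.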

UnstructuredFileLoader

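Declare the loader used to load the file. LangChain provides loaders tailored to various file formats, such as TextLoader and PyPDFLoader. UnstructuredFileLoader is convenient because it supports many formats, including text files, PowerPoint files, HTML, PDFs, and images. It takes the path of the file to load.

loader.load_and_split()

Loads the file and splits it in one step. The splitter is passed as a parameter, and the split documents are returned (return type: list).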

Embedding

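Embedding is the process of converting human-readable natural language into a vector, a sequence of numbers that a computer can work with. Put simply, it assigns the text a score along each of many dimensions of meaning.

The embedded documents are stored in a vector store. When the user enters a query, the Retriever fetches the documents most relevant to the query from the vector store, and including those documents in the prompt sent to the LLM makes a more accurate answer likely.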
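The idea of "finding the documents closest to the query" can be sketched with hand-made vectors. Everything below is an assumption for illustration: the 3-dimensional vectors, the chunk labels, and the use of cosine similarity (one common choice; the metric a real vector store uses depends on its configuration).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k):
    """Return the k texts whose vectors are most similar to query_vec."""
    ranked = sorted(
        store,
        key=lambda text: cosine_similarity(query_vec, store[text]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical 3-dimensional "embeddings"; real models produce far more dimensions.
store = {
    "chunk about the rickshaw fare": [0.9, 0.1, 0.0],
    "chunk about the rainy weather": [0.1, 0.9, 0.1],
    "chunk about the sick wife": [0.0, 0.2, 0.9],
}
query_vector = [0.8, 0.2, 0.1]  # pretend this is the embedded user query

nearest = top_k(query_vector, store, k=2)
print(nearest)
```

The query vector points in nearly the same direction as the "fare" chunk, so that chunk ranks first.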

embeddings = OpenAIEmbeddings()
cache_dir = LocalFileStore("./.cache/practice/")
cached_embeddings = CacheBackedEmbeddings.from_bytes_store(embeddings, cache_dir)

OpenAIEmbeddings

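OpenAIEmbeddings calls the embedding service provided by OpenAI.

CacheBackedEmbeddings

Embedding is a paid service. Embedding the same document every time is inefficient, so it is better to cache the results.

It takes the embedding object and the location where the cache is stored as parameters. Whenever embeddings are needed later, use cached_embeddings instead.

If the text is already cached, the stored result is used; otherwise, the embedding is computed and cached.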
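The cache-or-compute behavior can be sketched in a few lines. This is a toy stand-in, not LangChain's implementation: CachedEmbedder and fake_embed are hypothetical names, the cache is an in-memory dict rather than a file store, and the "embedding" is just two simple numbers.

```python
import hashlib


class CachedEmbedder:
    """Toy cache-backed embedder: compute once per distinct text, then reuse."""

    def __init__(self, embed_fn):
        self.embed_fn = embed_fn  # the expensive call, e.g. a paid API
        self.cache = {}           # in-memory here; the post uses a local file store
        self.misses = 0           # how many times embed_fn was actually called

    def embed(self, text):
        key = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if key not in self.cache:
            self.misses += 1
            self.cache[key] = self.embed_fn(text)
        return self.cache[key]


def fake_embed(text):
    # Hypothetical embedding: character count and space count.
    return [float(len(text)), float(text.count(" "))]


embedder = CachedEmbedder(fake_embed)
first = embedder.embed("a lucky day")
second = embedder.embed("a lucky day")  # served from the cache
print(first == second, embedder.misses)
```

The second call returns the stored vector without invoking fake_embed again, which is why repeated runs over the same document avoid repeated embedding cost.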

Vector store & Retriever

vectorstore = FAISS.from_documents(docs, cached_embeddings)
retriever = vectorstore.as_retriever()

LangChain supports many vector stores. FAISS, a free library that runs locally, is used here. Chroma is a similar library that can also be tried.

Retriever

A Retriever is an interface that takes a query from the user and returns the documents related to it. The code uses a vector store as the retriever, but other kinds of sources, such as databases or cloud services, can be used as retrievers as well.

(e.g. retriever = WikipediaRetriever(top_k_results=5))
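Since a retriever is only "query in, documents out", it does not have to be backed by embeddings at all. The sketch below is a hypothetical KeywordRetriever that ranks plain strings by word overlap with the query; the method name get_relevant_documents mirrors the one LangChain retrievers expose, but the class itself is an illustration and not part of the library.

```python
class KeywordRetriever:
    """Hypothetical retriever that ranks documents by words shared with the query."""

    def __init__(self, documents, k=2):
        self.documents = documents
        self.k = k  # maximum number of documents to return

    def get_relevant_documents(self, query):
        query_words = set(query.lower().split())

        def overlap(doc):
            return len(query_words & set(doc.lower().split()))

        ranked = sorted(self.documents, key=overlap, reverse=True)
        # Drop documents that share no word with the query.
        return [doc for doc in ranked[: self.k] if overlap(doc) > 0]


keyword_retriever = KeywordRetriever(
    [
        "the student paid the fare",
        "rain fell all day",
        "the fare to the station",
    ]
)
found = keyword_retriever.get_relevant_documents("station fare")
print(found)
```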

Prompt

prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            """
            You are a helpful assistant.
            Answer questions using only the following context.
            If you don't know the answer just say you don't know, don't make it up:
            \n\n
            {context}
            """,
        ),
        ("human", "{question}"),
    ]
)

Write the prompt that will be sent to the LLM. To reduce LLM hallucination, add an explicit instruction (If you don't know the answer just say you don't know, don't make it up).

Chain

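There are several ways to pass the chunks fetched by the Retriever to the LLM.

Stuff

All relevant documents are stuffed into the prompt. The drawback is that the prompt can become excessively long.

Map reduce

Each document is summarized, and a final summary is produced from those summaries. This avoids the token limit problem (the prompt growing beyond the model's token limit), but it is slow because every document has to be summarized.

Refine

Iterates over the documents, generating an intermediate answer and refining it with each additional document. This can produce a high-quality final answer. It is slow and expensive, but the output quality is its strength.

Map re-rank

An answer is generated for each document separately, and each answer is given a score. The highest-scoring answer becomes the final answer. This is useful when the documents are distinct and a close match is required (when the desired information is in only one document).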
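The difference between Stuff and Map re-rank can be sketched with a stubbed model. Here fake_llm_answer is a hypothetical stand-in for an LLM call that returns an answer and a confidence score; the documents and scores are made up for illustration.

```python
def fake_llm_answer(context, question):
    """Hypothetical LLM stub: returns (answer, confidence score)."""
    if "station" in context:
        return ("the station", 90)
    return ("I don't know", 10)


docs = [
    "He waited in the rain.",
    "He took the student to the station.",
    "His wife was ill.",
]
question = "Where did he take the student?"

# Stuff: a single call with every document concatenated into one context.
stuff_answer, _ = fake_llm_answer("\n\n".join(docs), question)

# Map re-rank: one call per document, then keep the highest-scoring answer.
scored = [fake_llm_answer(doc, question) for doc in docs]
rerank_answer, best_score = max(scored, key=lambda pair: pair[1])

print(stuff_answer, rerank_answer, best_score)
```

Stuff costs one call but sends the longest prompt; Map re-rank costs one call per document but each prompt stays short.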

Here, the Stuff approach, the simplest structure, is implemented with LCEL.

llm = ChatOpenAI(temperature=0.1)

chain = (
    {
        "context": retriever,
        "question": RunnablePassthrough(),
    }
    | prompt
    | llm
)

result = chain.invoke("김첨지는 학생을 어디로 데려다 주었나?")
print(result)

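- The query passed to chain.invoke() is sent to the retriever, and the returned documents are placed in {context}.

- Through RunnablePassthrough(), the user's question goes into {question} unchanged.

- The prompt is completed from the resulting dictionary and sent to the LLM, which returns the final answer.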
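A rough plain-Python analogue of the first step of the chain may help: the same input is handed to every entry of the mapping, and the resulting dictionary fills the template. The names fake_retriever, passthrough, and build_prompt_inputs are hypothetical, and the template is a shortened stand-in for the prompt used in this post.

```python
def fake_retriever(query):
    # Hypothetical retriever: always returns the same two chunks.
    return ["chunk A", "chunk B"]


def passthrough(value):
    # Returns its input unchanged, like RunnablePassthrough in the chain above.
    return value


def build_prompt_inputs(query):
    # Every entry of the mapping receives the same input given to invoke().
    return {"context": fake_retriever(query), "question": passthrough(query)}


template = "Answer using only this context:\n{context}\n\nQuestion: {question}"

inputs = build_prompt_inputs("Where did he take the student?")
prompt_text = template.format(
    context="\n".join(inputs["context"]),
    question=inputs["question"],
)
print(prompt_text)
```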

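Results

김첨지는 학생을 어디로 데려다 주었나? (Where did Kim Cheomji take the student?)

-> 김첨지는 학생을 남대문 정거장까지 데려다 주었습니다. (Kim Cheomji took the student to Namdaemun Station.)

김첨지는 학생을 어디로 데려다 주었나? 해당 부분의 원문과 함께 출력하시오. (Where did Kim Cheomji take the student? Print the answer together with the original passage.)

-> 김첨지는 학생을 남대문 정거장까지 데려다 주었다. (Kim Cheomji took the student to Namdaemun Station.)

해당 부분의 원문은 다음과 같다:\n\n"남대문 정거장까지 얼마요? (The original passage is as follows: "How much to Namdaemun Station?)

RetrievalQA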

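Using RetrievalQA, an off-the-shelf chain class, makes it simple to set up a chain. However, its inner workings are opaque and it is hard to customize. It is also legacy; implementing the chain directly with LCEL is the officially recommended approach.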

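from langchain.chains import RetrievalQA

chain = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="map_rerank",
    retriever=retriever,
)

result = chain.run("김첨지는 학생을 어디로 데려다 주었나?")
print(result)

The desired approach ("stuff", "map_reduce", "refine", or "map_rerank") can be set through chain_type.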

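Miscellaneous

- Test results: 운수 좋은 날.txt is split into 41 documents in total, and 4 of them are passed to the LLM.

- It seems better to write the documents, queries, and prompt instructions all in English. Accuracy appears to be lower in Korean.

- The principles behind embedding and retrievers need further study.

Sources and references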

https://python.langchain.com/docs

https://github.com/nomadcoders/fullstack-gpt

https://www.youtube.com/watch?v=tQUtBR3K1TI

https://nomadcoders.co/fullstack-gpt