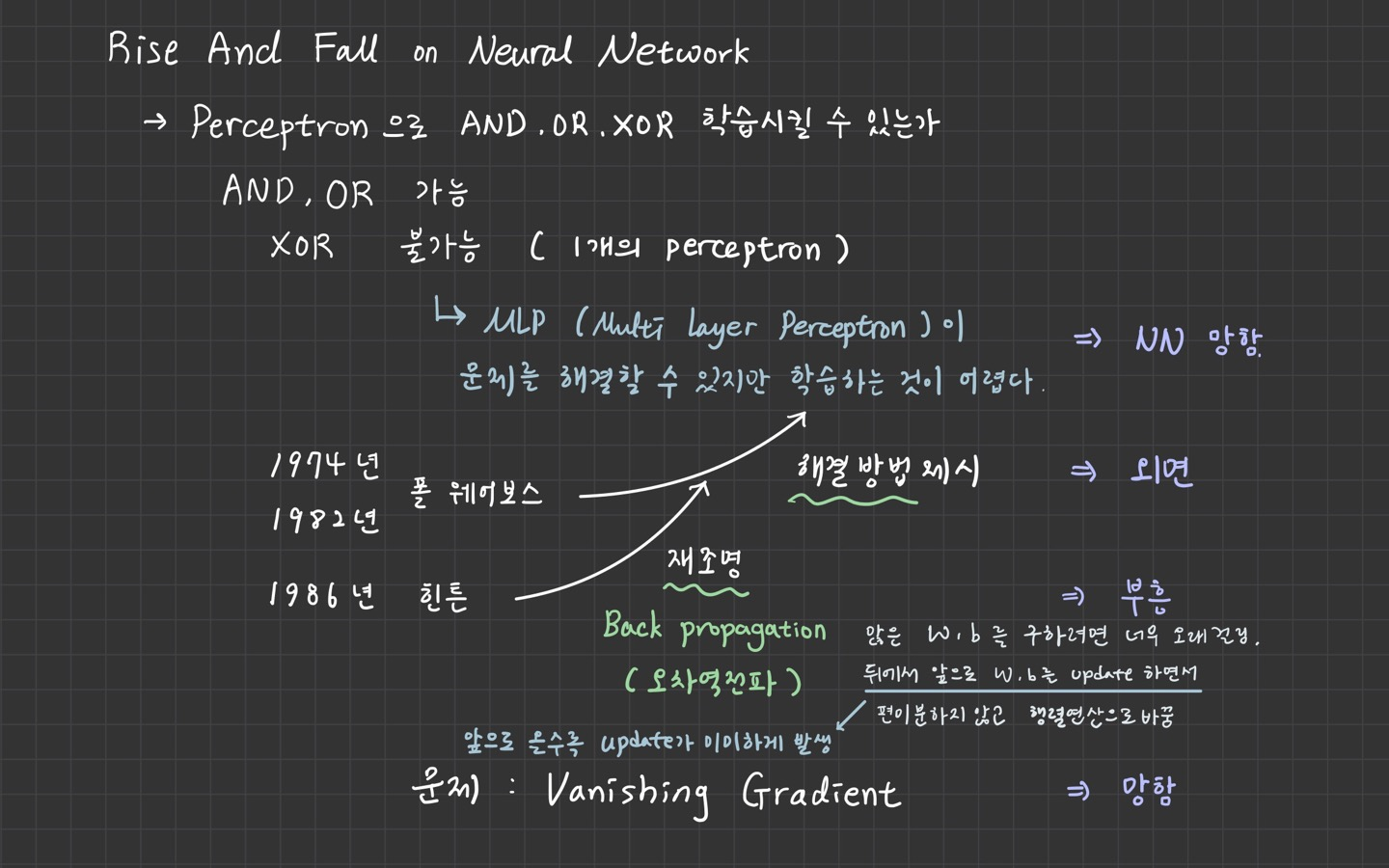

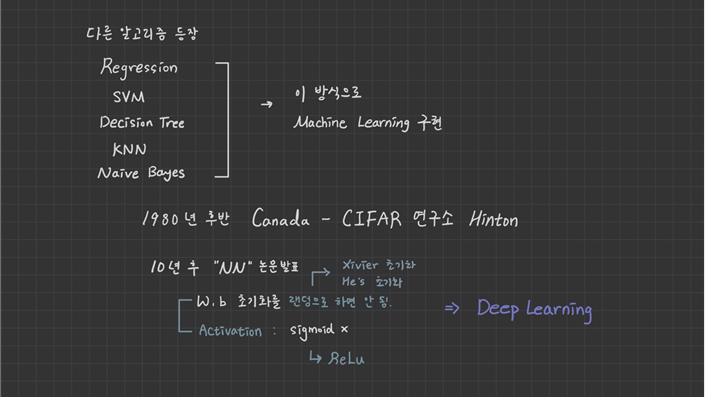

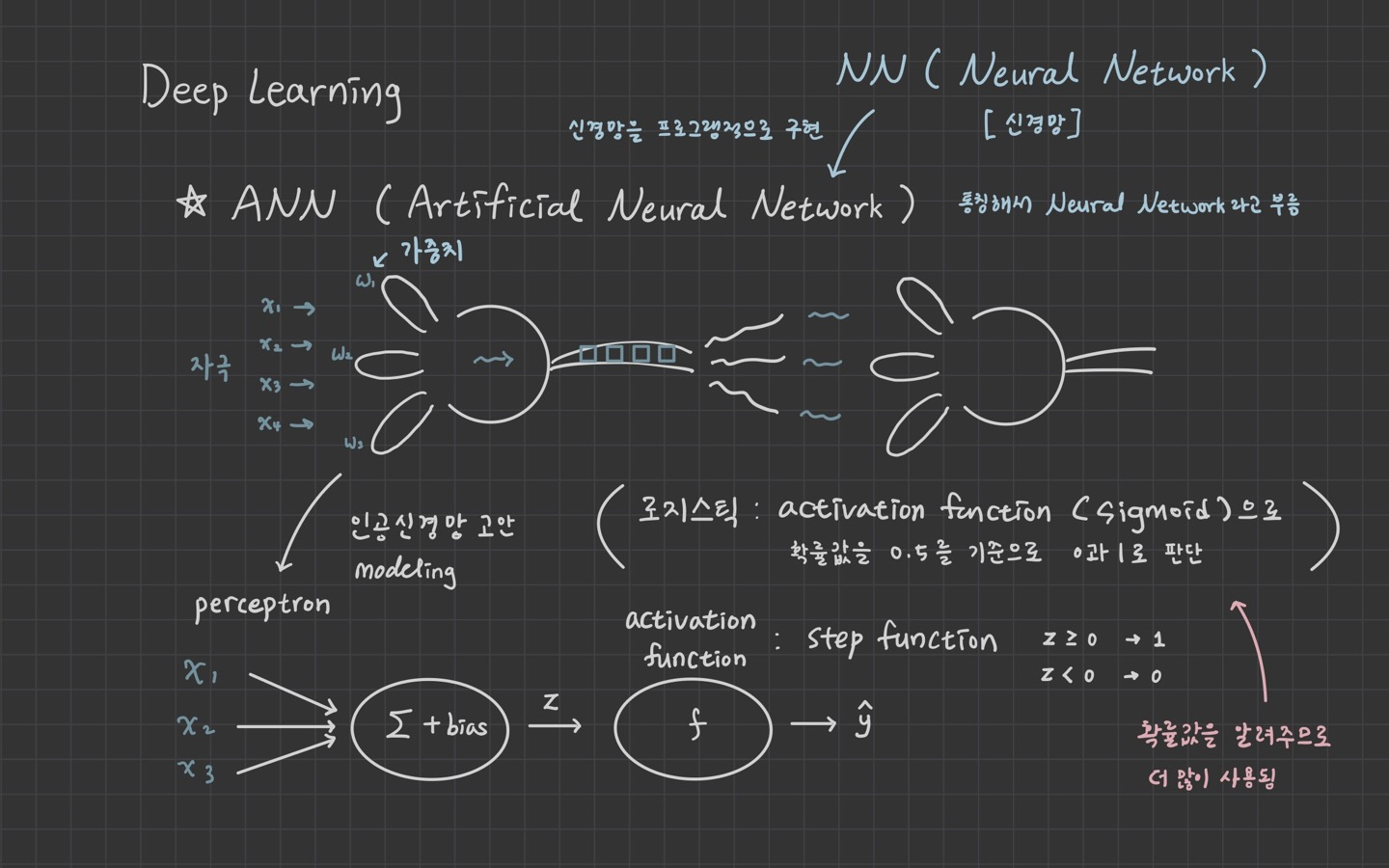

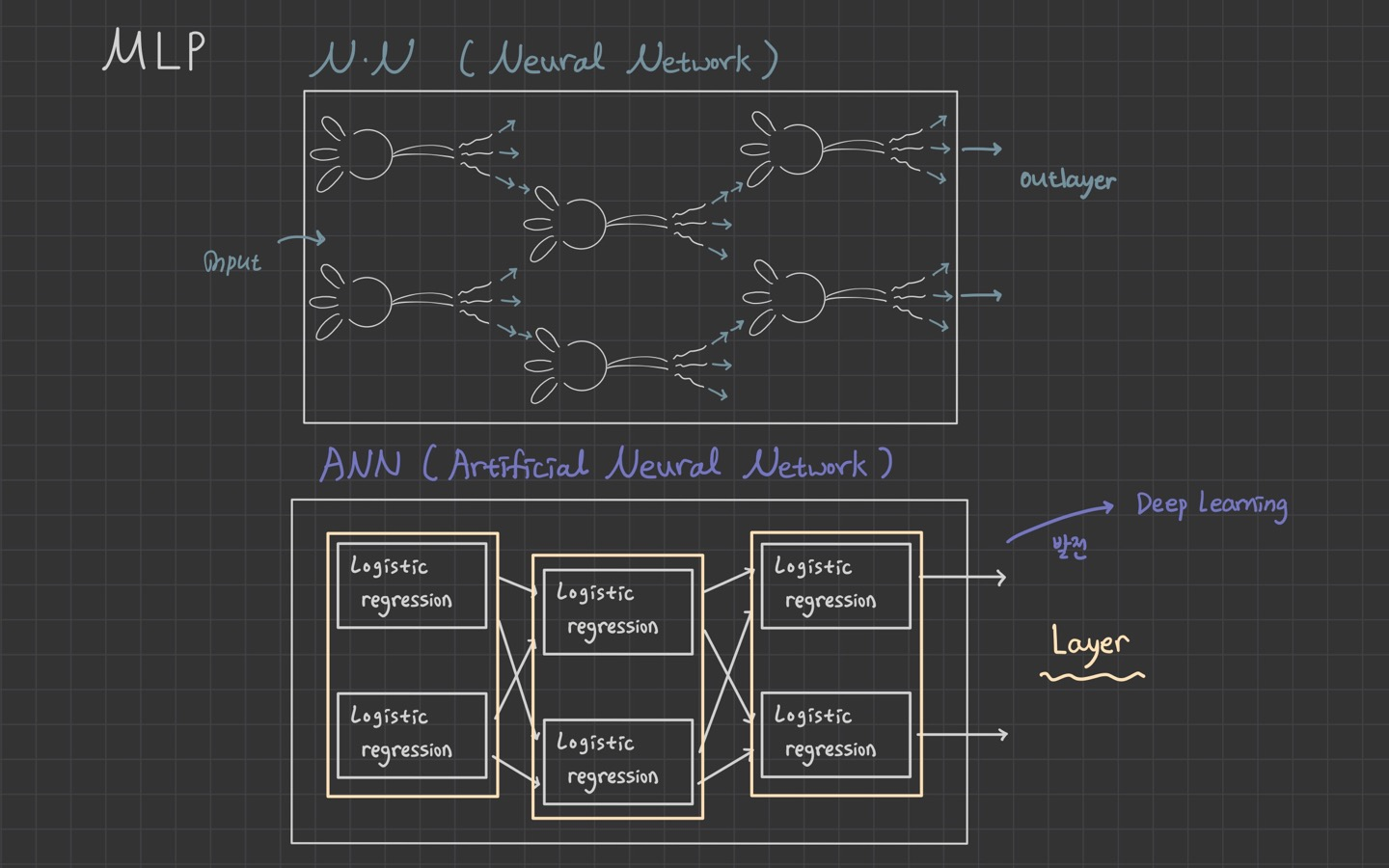

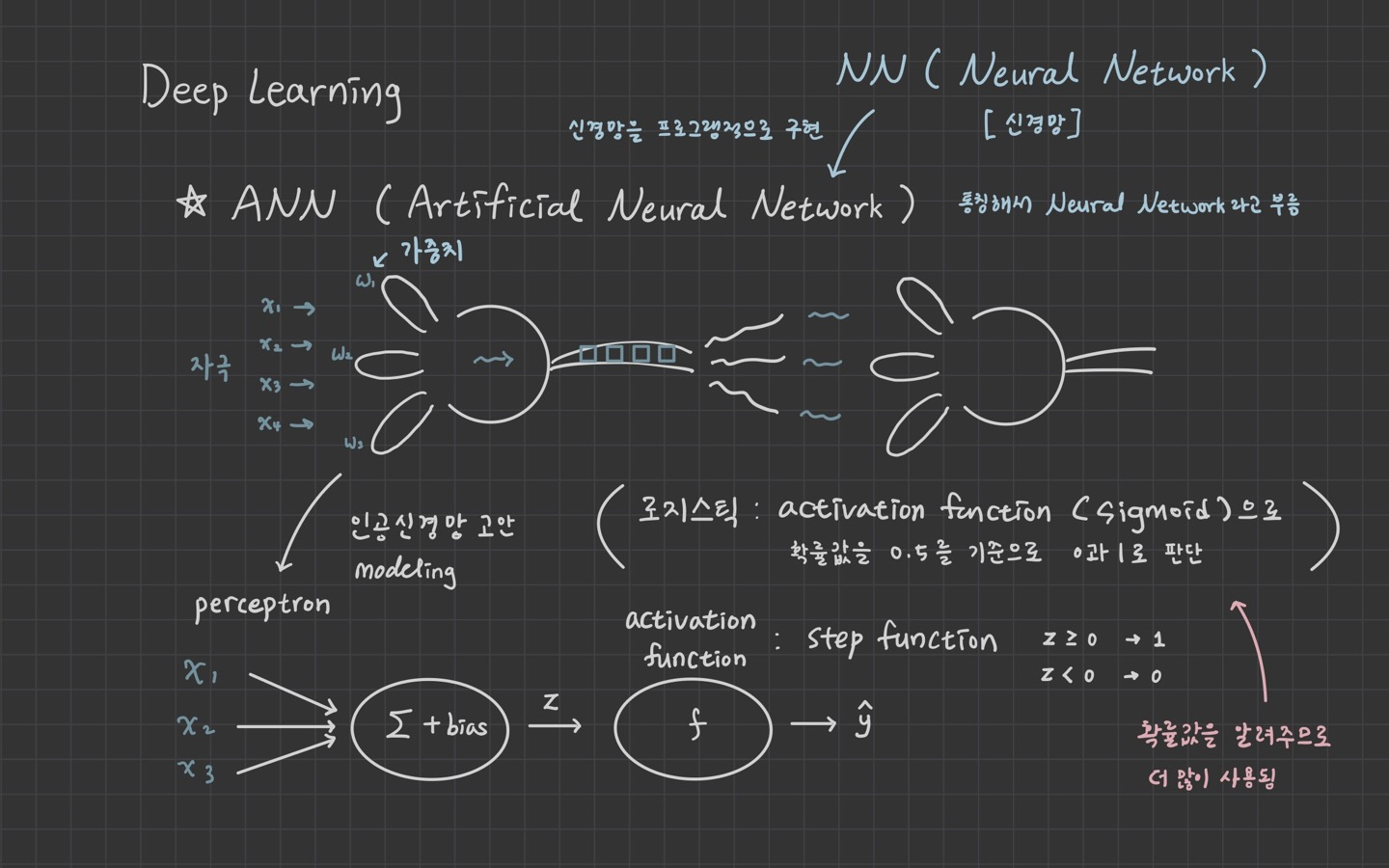

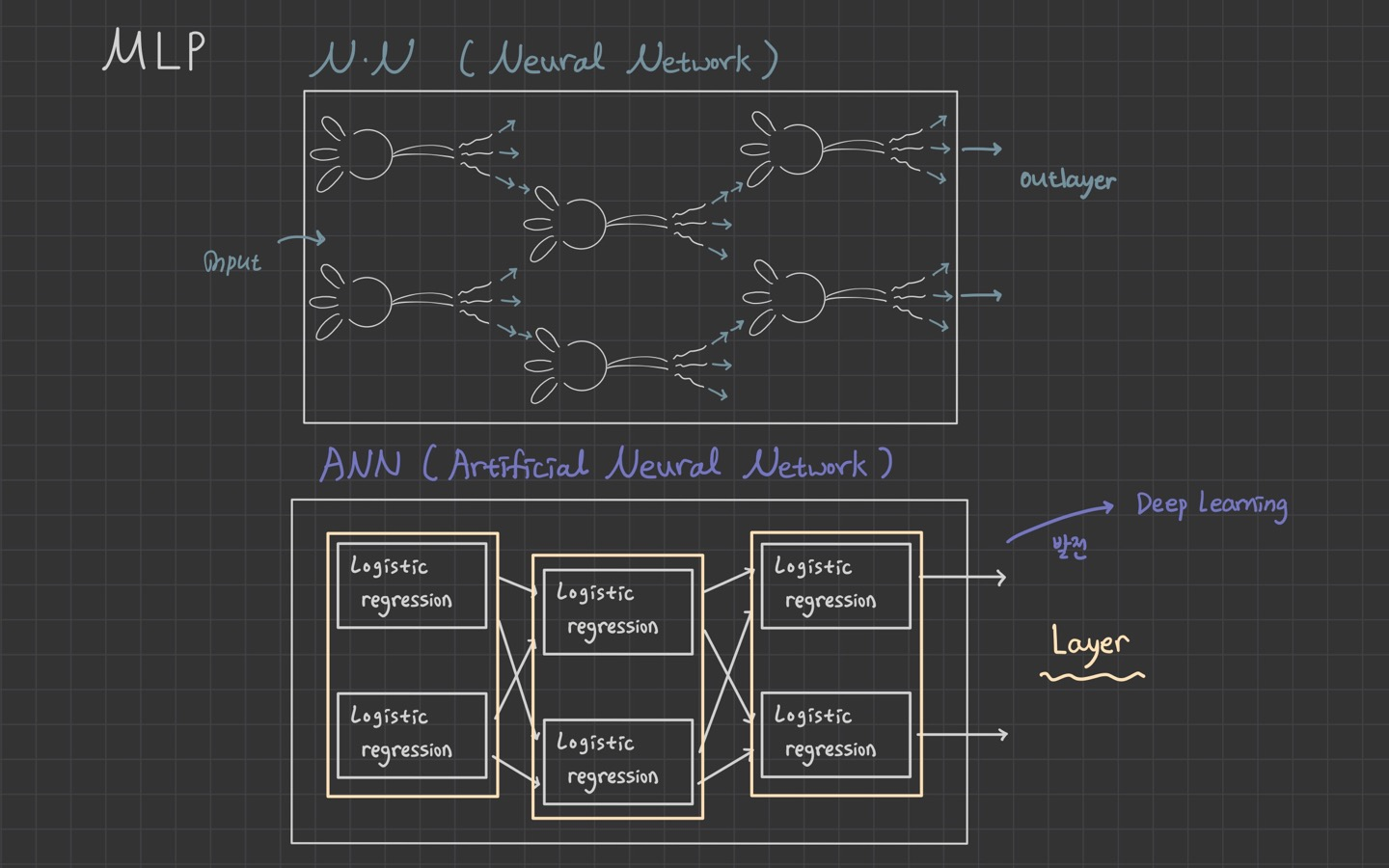

1. ANN (Artificial Neural Network)

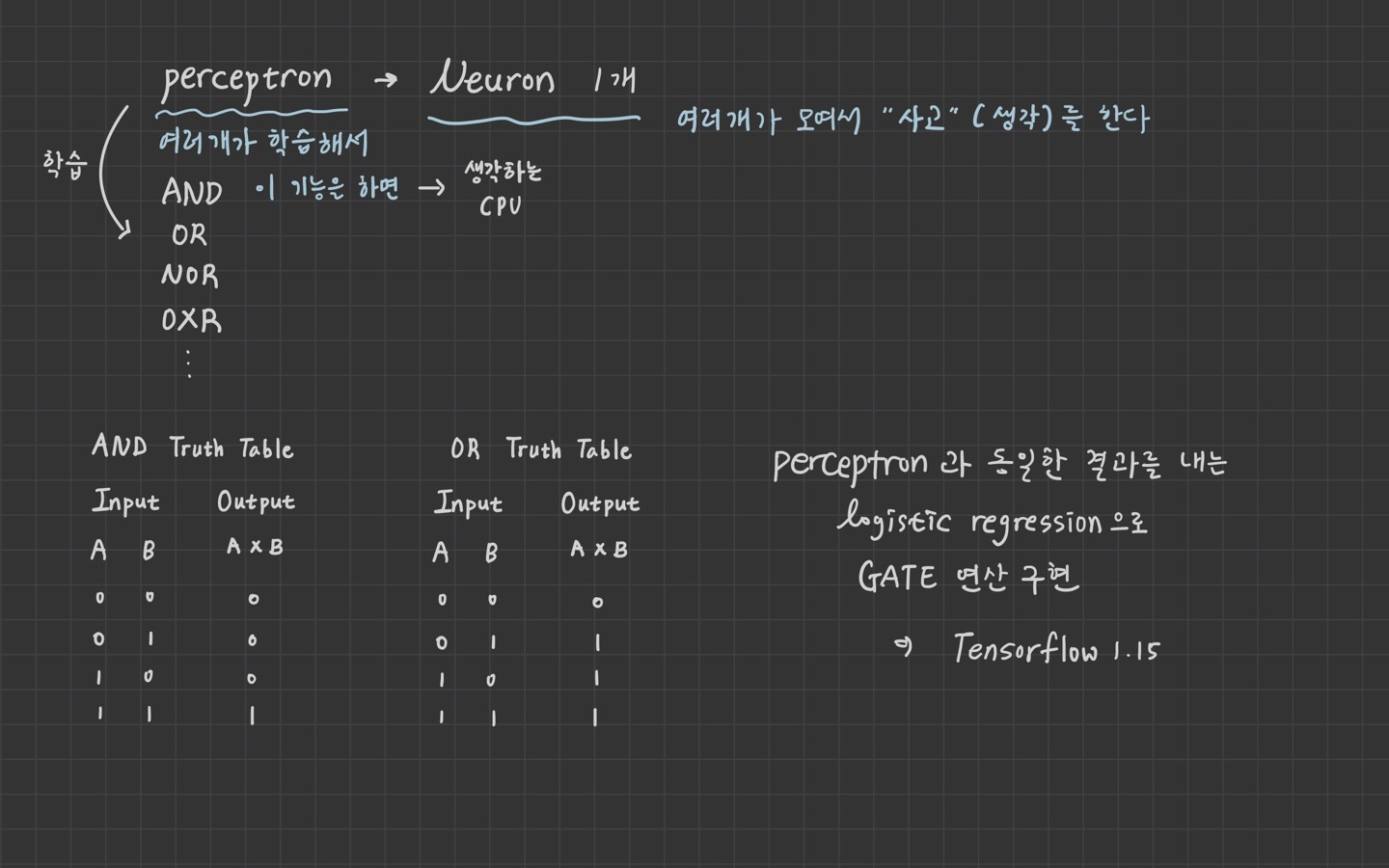

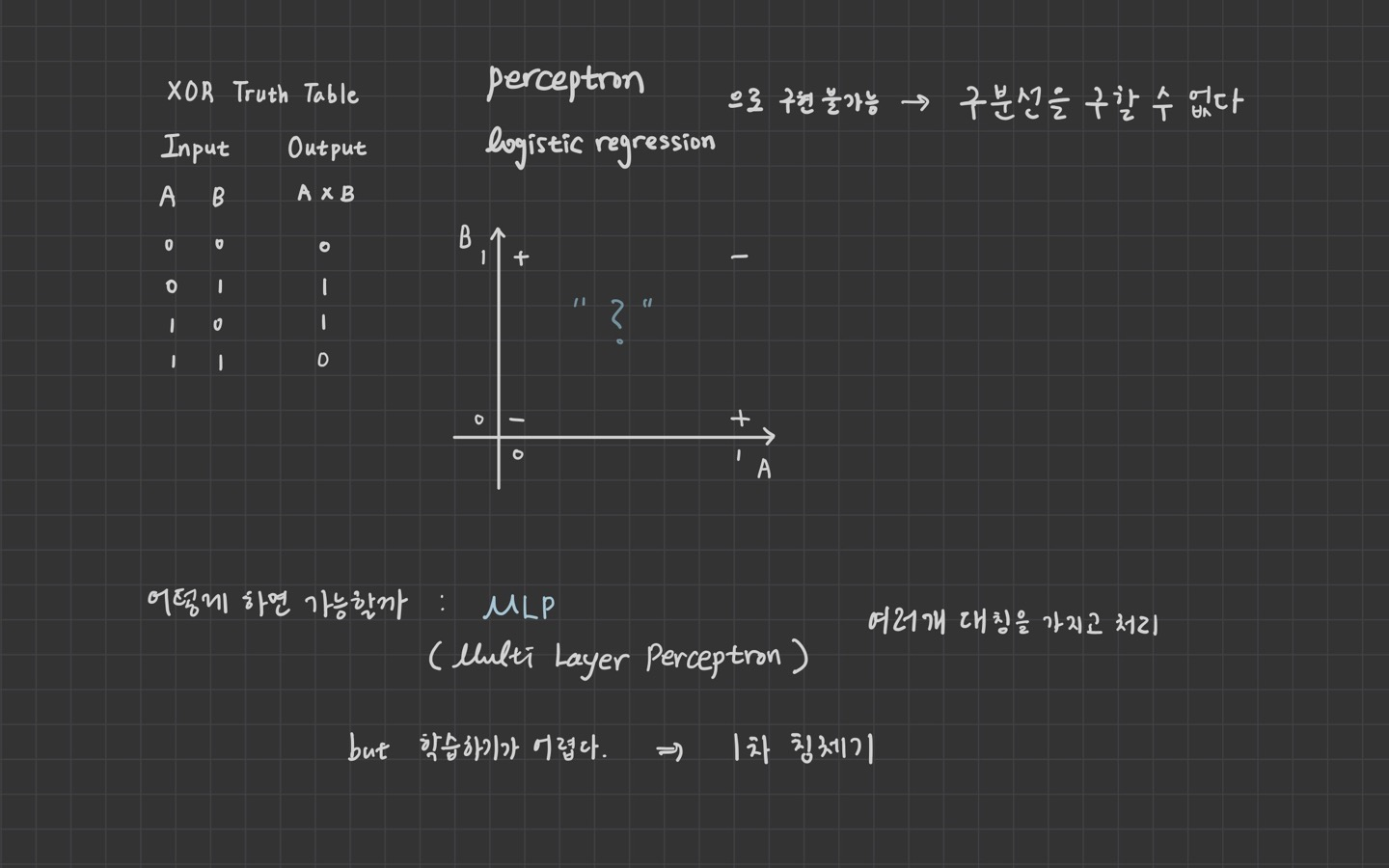

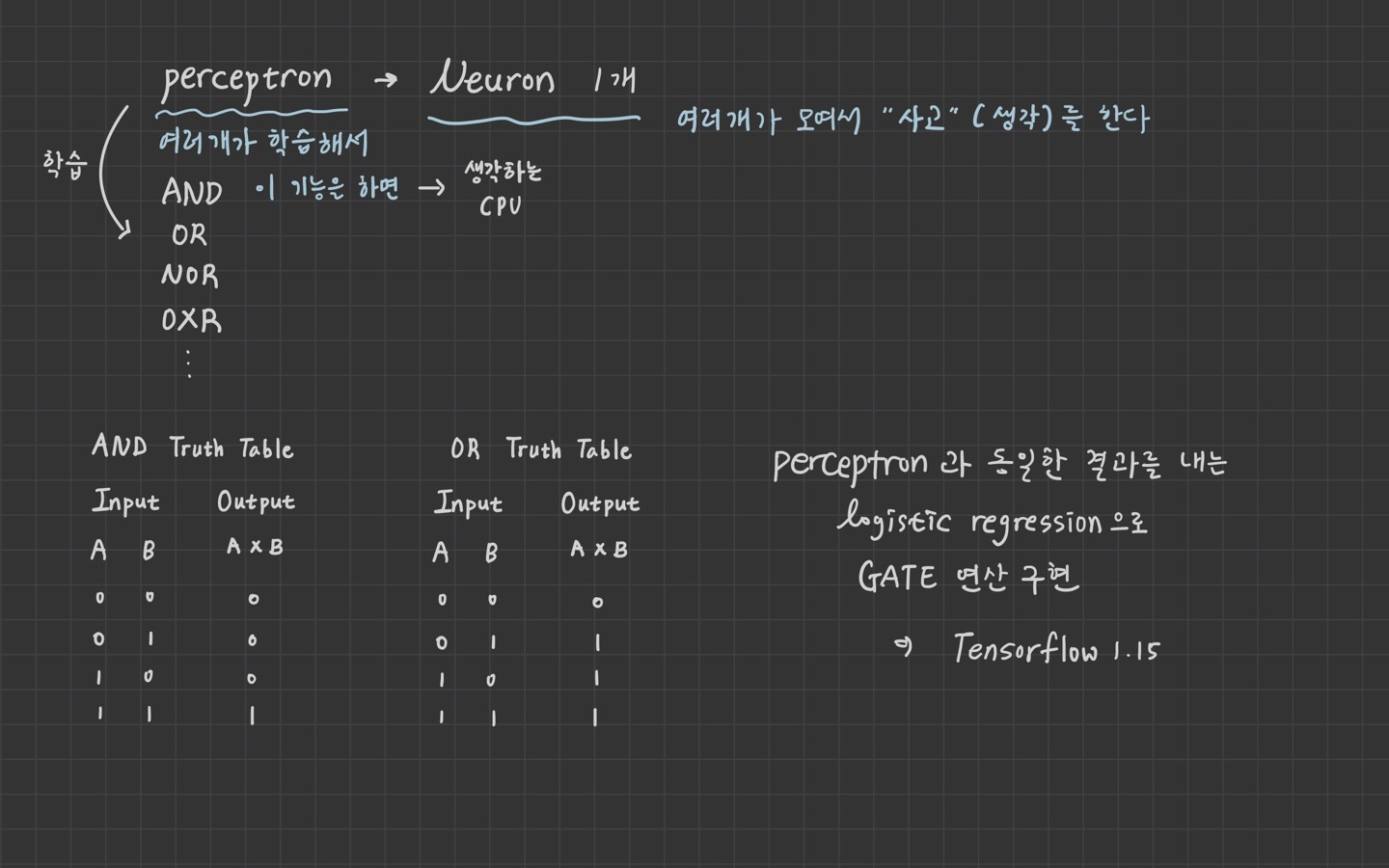

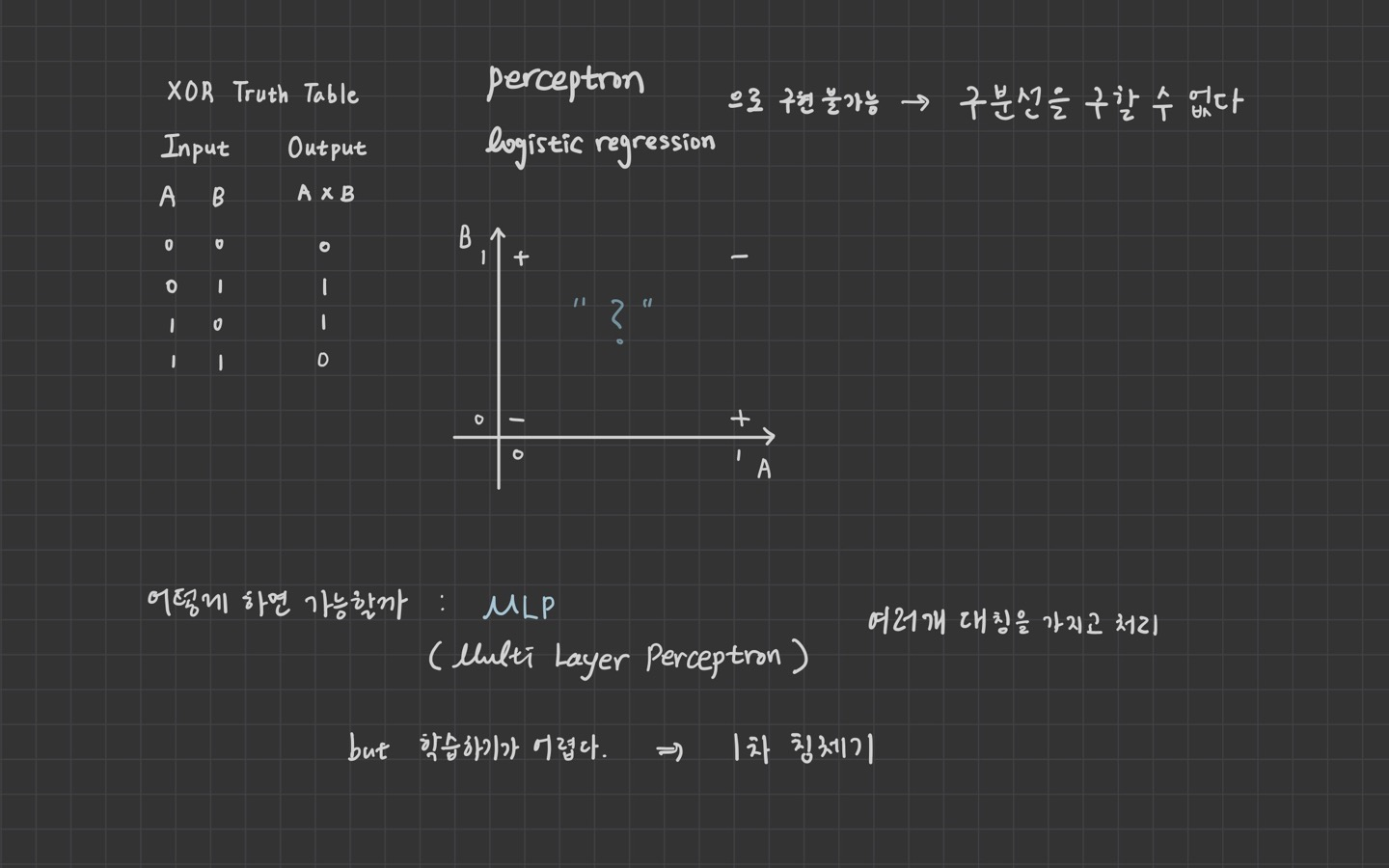

2. Perceptron

Learning GATE operations with Logistic Regression

AND, OR, XOR

tensorflow 1.15

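```python
import numpy as np
import tensorflow as tf   # tensorflow 1.15 (graph mode: placeholder / Session)
from sklearn.metrics import classification_report

# Training data: the four input combinations of a 2-input gate
x_data = np.array([[0, 0],
                   [0, 1],
                   [1, 0],
                   [1, 1]], dtype=np.float64)

# Target labels: keep exactly one line active (a later assignment overwrites an earlier one)
t_data = np.array([0, 0, 0, 1], dtype=np.float64)    # AND
# t_data = np.array([0, 1, 1, 1], dtype=np.float64)  # OR
# t_data = np.array([0, 1, 1, 0], dtype=np.float64)  # XOR

# Placeholders for inputs (N x 2) and targets (N x 1)
X = tf.placeholder(shape=[None, 2], dtype=tf.float32)
T = tf.placeholder(shape=[None, 1], dtype=tf.float32)

# Single-layer model (logistic regression): one weight per input plus a bias
W = tf.Variable(tf.random.normal([2, 1]))
b = tf.Variable(tf.random.normal([1]))

logit = tf.matmul(X, W) + b
H = tf.sigmoid(logit)   # predicted probability of class 1

# The cross-entropy is computed from the logit (before the sigmoid) for numerical stability
loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=logit,
                                                              labels=T))

train = tf.train.GradientDescentOptimizer(learning_rate=1e-2).minimize(loss)

sess = tf.Session()
sess.run(tf.global_variables_initializer())
```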
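`tf.nn.sigmoid_cross_entropy_with_logits` receives the raw `logit` rather than `H`. A minimal NumPy sketch of the quantity it computes, using the numerically stable form given in the TensorFlow documentation, `max(x, 0) - x * z + log(1 + exp(-|x|))` for logit `x` and label `z`; the function names are illustrative only and are not part of any library.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def naive_sigmoid_ce(logits, labels):
    # Direct definition: -z*log(sigmoid(x)) - (1 - z)*log(1 - sigmoid(x))
    p = sigmoid(logits)
    return -labels * np.log(p) - (1.0 - labels) * np.log(1.0 - p)

def stable_sigmoid_ce(logits, labels):
    # Algebraically identical, but never takes log(0) or overflows exp() for large |x|
    return np.maximum(logits, 0) - logits * labels + np.log1p(np.exp(-np.abs(logits)))

x = np.array([-3.0, -0.5, 0.0, 2.0])
z = np.array([0.0, 1.0, 1.0, 0.0])
print(np.allclose(naive_sigmoid_ce(x, z), stable_sigmoid_ce(x, z)))  # True

# With a very large logit the naive form would hit log(0); the stable one stays finite
print(stable_sigmoid_ce(np.array([800.0]), np.array([0.0])))  # [800.]
```

This is why the loss above is built from `logit` while `H` is used only for prediction.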

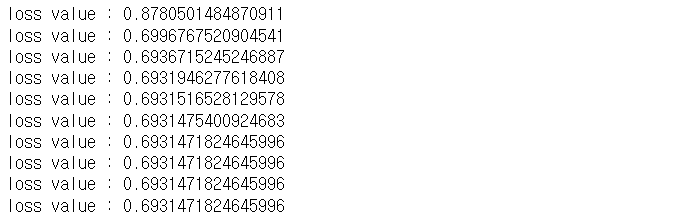

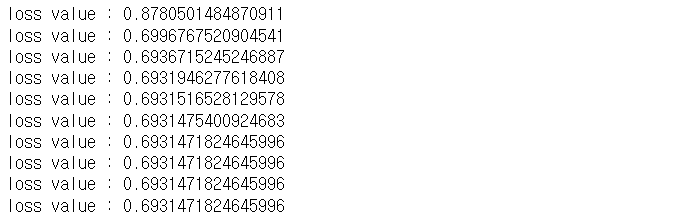

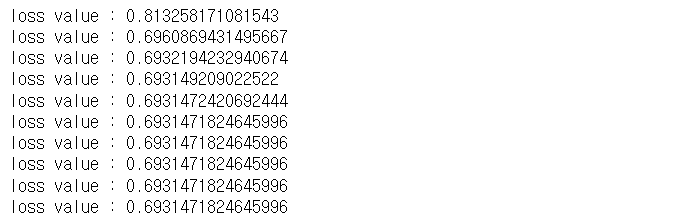

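```python
# Full-batch gradient descent: every step feeds all four samples
for step in range(30000):
    _, loss_val = sess.run([train, loss],
                           feed_dict={X: x_data,
                                      T: t_data.reshape(-1, 1)})   # targets as an (N, 1) column

    if step % 3000 == 0:
        print('loss value : {}'.format(loss_val))
```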
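The same training loop without TensorFlow: the gradients that the optimizer obtains automatically are written out by hand. For the mean sigmoid cross-entropy they are `dL/dW = X^T (H - T) / N` and `dL/db = mean(H - T)`. This is a minimal NumPy sketch; the zero initialisation and the larger learning rate (1.0) are assumptions chosen so it converges quickly and deterministically, unlike the random `tf.random.normal` start and the `1e-2` rate above.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_logistic(x, t, lr=1.0, steps=5000):
    # x: (N, 2) inputs, t: (N, 1) targets; model H = sigmoid(x @ W + b)
    W = np.zeros((x.shape[1], 1))
    b = np.zeros(1)
    n = len(x)
    for _ in range(steps):
        H = sigmoid(x @ W + b)
        err = H - t                      # per-sample gradient of the loss w.r.t. the logit
        W -= lr * x.T @ err / n          # dL/dW = X^T (H - T) / N
        b -= lr * err.mean(axis=0)       # dL/db = mean(H - T)
    return W, b

x_data = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float64)
gates = {'AND': [0, 0, 0, 1], 'OR': [0, 1, 1, 1], 'XOR': [0, 1, 1, 0]}

results = {}
for name, labels in gates.items():
    t = np.array(labels, dtype=np.float64).reshape(-1, 1)
    W, b = train_logistic(x_data, t)
    H = sigmoid(x_data @ W + b)
    results[name] = (H >= 0.5).astype(np.float64).ravel()
    print(name, results[name])
```

AND and OR are learned exactly. For XOR, with this zero start, the gradient is exactly zero at the first step, so `H` stays at 0.5 for every input. A random start would move the weights, but it still cannot classify all four points correctly.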

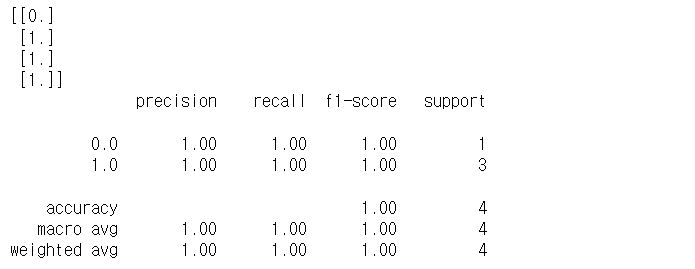

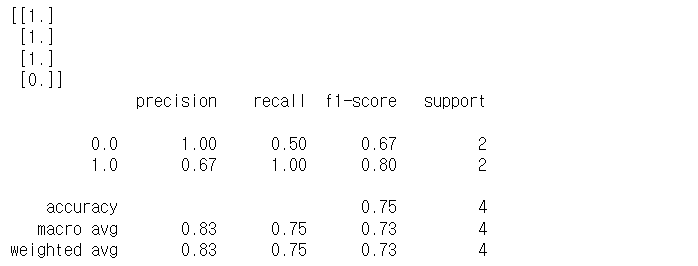

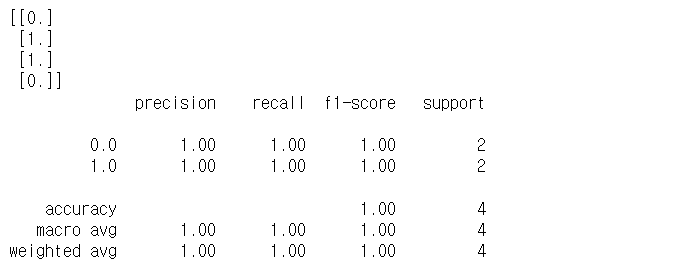

AND

OR

XOR

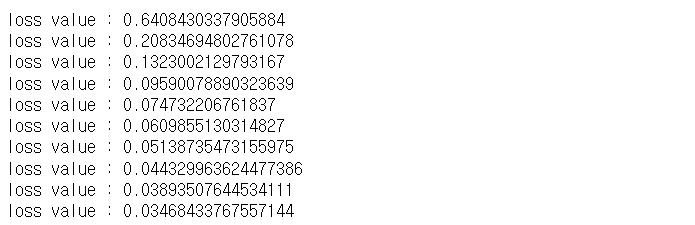

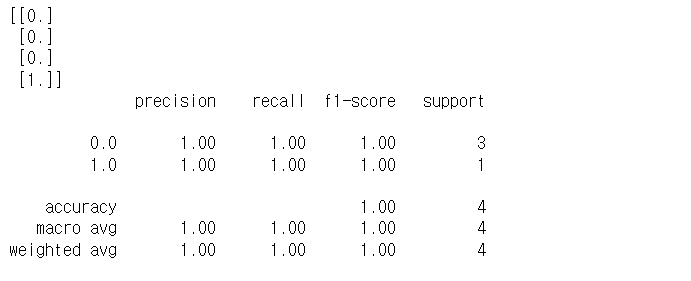

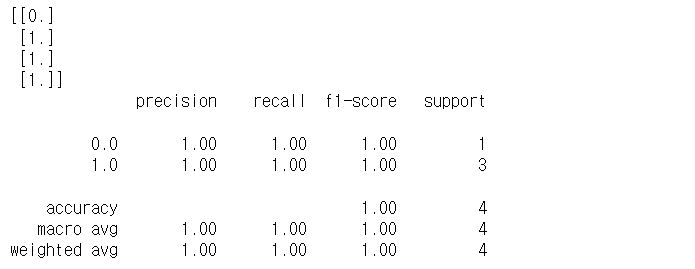

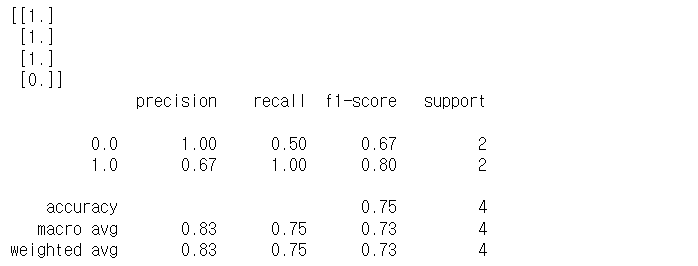

Model evaluation

tf.cast(): converts a tensor to a new data type (here, the boolean result of H >= 0.5 becomes 0.0 or 1.0).

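```python
# Threshold the probabilities: True/False -> 1.0/0.0
predict = tf.cast(H >= 0.5, dtype=tf.float32)

predict_val = sess.run(predict, feed_dict={X: x_data})
print(predict_val)

# classification_report expects 1-D label arrays, so flatten the (N, 1) predictions
print(classification_report(t_data, predict_val.ravel()))
```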
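The evaluation step restated in NumPy. The cast turns the boolean comparison into 0.0/1.0, and the `accuracy` row of `classification_report` is the fraction of predictions that match the targets. The probability values in `H` below are hypothetical, made up for illustration, and are not results from the model above.

```python
import numpy as np

# Hypothetical sigmoid outputs H for the four inputs (illustration only)
H = np.array([[0.02], [0.31], [0.47], [0.88]], dtype=np.float32)
t_data = np.array([0, 0, 0, 1], dtype=np.float64)   # AND targets

# Same idea as tf.cast(H >= 0.5, dtype=tf.float32): True -> 1.0, False -> 0.0
predict_val = (H >= 0.5).astype(np.float32)
print(predict_val.ravel())          # [0. 0. 0. 1.]

# Accuracy = fraction of (flattened) predictions equal to the target
accuracy = np.mean(predict_val.ravel() == t_data)
print(accuracy)                     # 1.0
```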

AND

OR

XOR

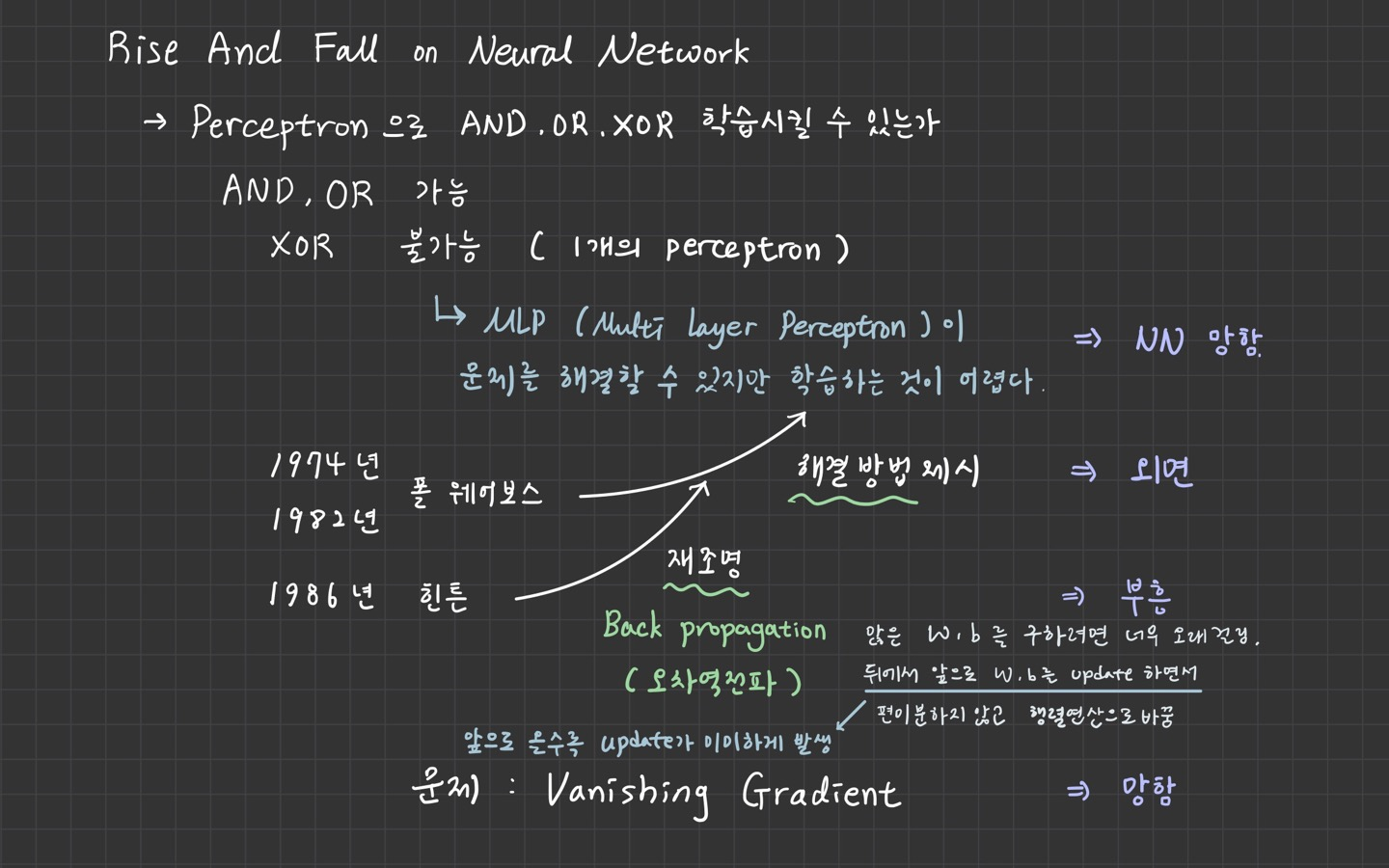

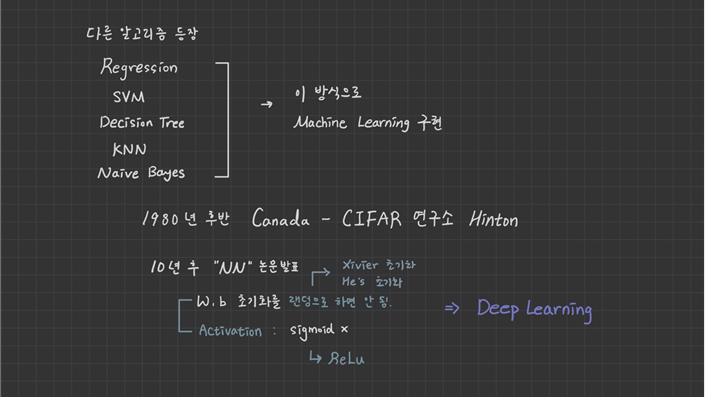
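Why the single-layer model cannot get XOR right: its decision boundary `w1*x1 + w2*x2 + b = 0` is a straight line. For XOR (class 1 when the value is > 0) the conditions are `b <= 0`, `w2 + b > 0`, `w1 + b > 0` and `w1 + w2 + b <= 0`. Adding the middle two gives `w1 + w2 + 2b > 0`, while adding the outer two gives `w1 + w2 + 2b <= 0`, which is a contradiction. The brute-force sketch below makes the same point numerically. The grid range and step are arbitrary choices, so it is a demonstration rather than a proof.

```python
import itertools
import numpy as np

x_data = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float64)
gates = {'AND': [0, 0, 0, 1], 'OR': [0, 1, 1, 1], 'XOR': [0, 1, 1, 0]}
grid = np.arange(-2.0, 2.01, 0.25)   # candidate values for w1, w2 and b

def find_separating_line(targets):
    """Return (w1, w2, b) from the grid that classifies all four points, or None."""
    t = np.array(targets)
    for w1, w2, b in itertools.product(grid, repeat=3):
        pred = (x_data @ np.array([w1, w2]) + b > 0).astype(int)
        if np.array_equal(pred, t):
            return float(w1), float(w2), float(b)
    return None

for name, targets in gates.items():
    print(name, find_separating_line(targets))
```

A line is found for AND and OR. For XOR the search returns `None`, which is what motivates adding a hidden layer.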

MLP

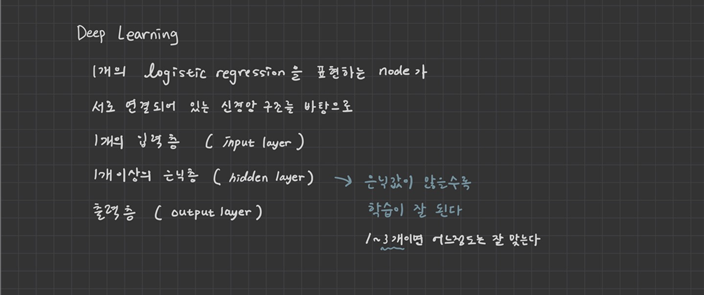

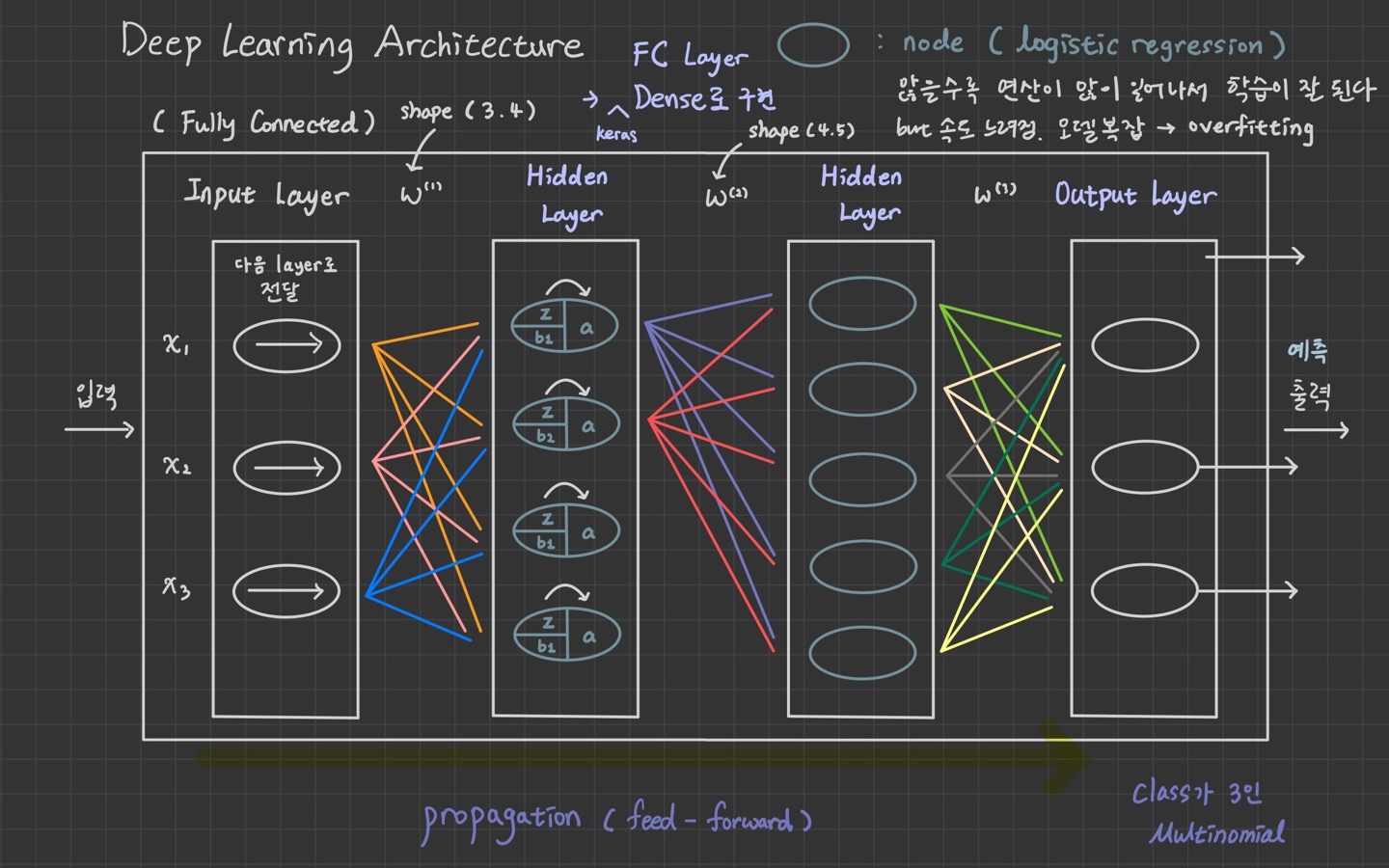

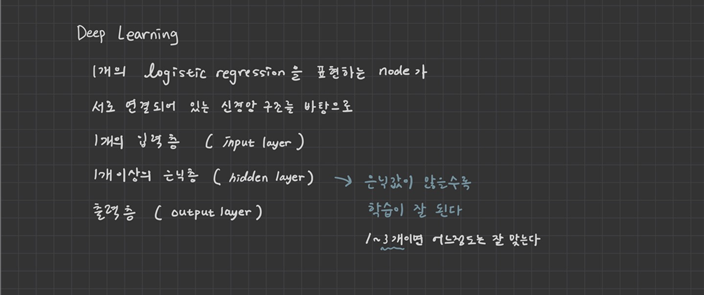

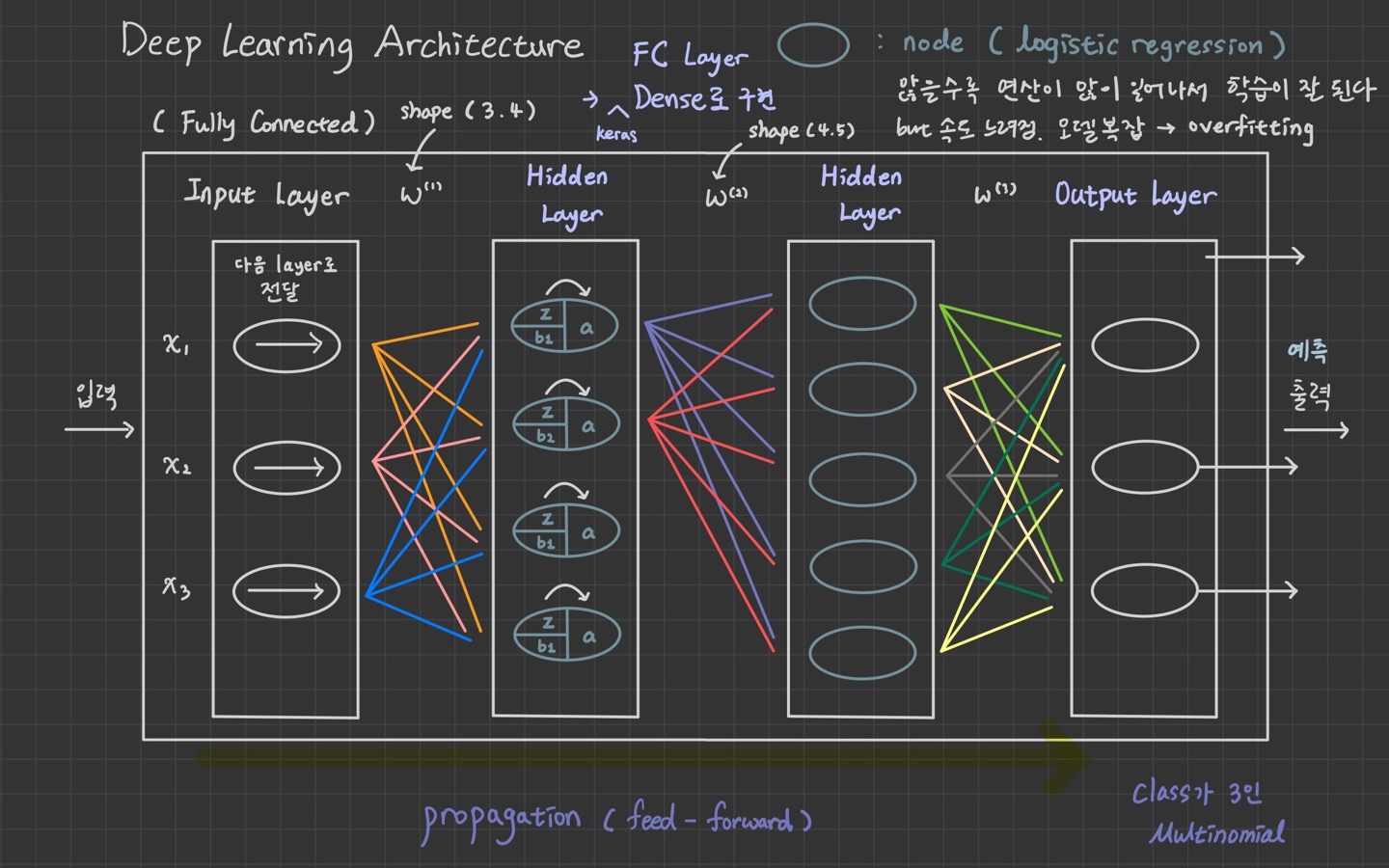
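The multi-layer idea can be checked by hand. One hidden layer computes OR and NAND, and the output unit combines them with AND, since XOR(a, b) = AND(OR(a, b), NAND(a, b)). This sketch uses a step activation and hand-picked weights; the specific weight values are one illustrative choice, not learned ones.

```python
import numpy as np

def step(z):
    # Step activation: 1 if z > 0 else 0
    return (z > 0).astype(np.float64)

x_data = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float64)

# Hidden layer: column 0 computes OR, column 1 computes NAND
W1 = np.array([[1.0, -1.0],
               [1.0, -1.0]])
b1 = np.array([-0.5, 1.5])

# Output layer: AND of the two hidden units
W2 = np.array([[1.0], [1.0]])
b2 = np.array([-1.5])

hidden = step(x_data @ W1 + b1)
output = step(hidden @ W2 + b2)
print(hidden)            # OR and NAND for each input row
print(output.ravel())    # [0. 1. 1. 0.]
```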

3. Deep Learning

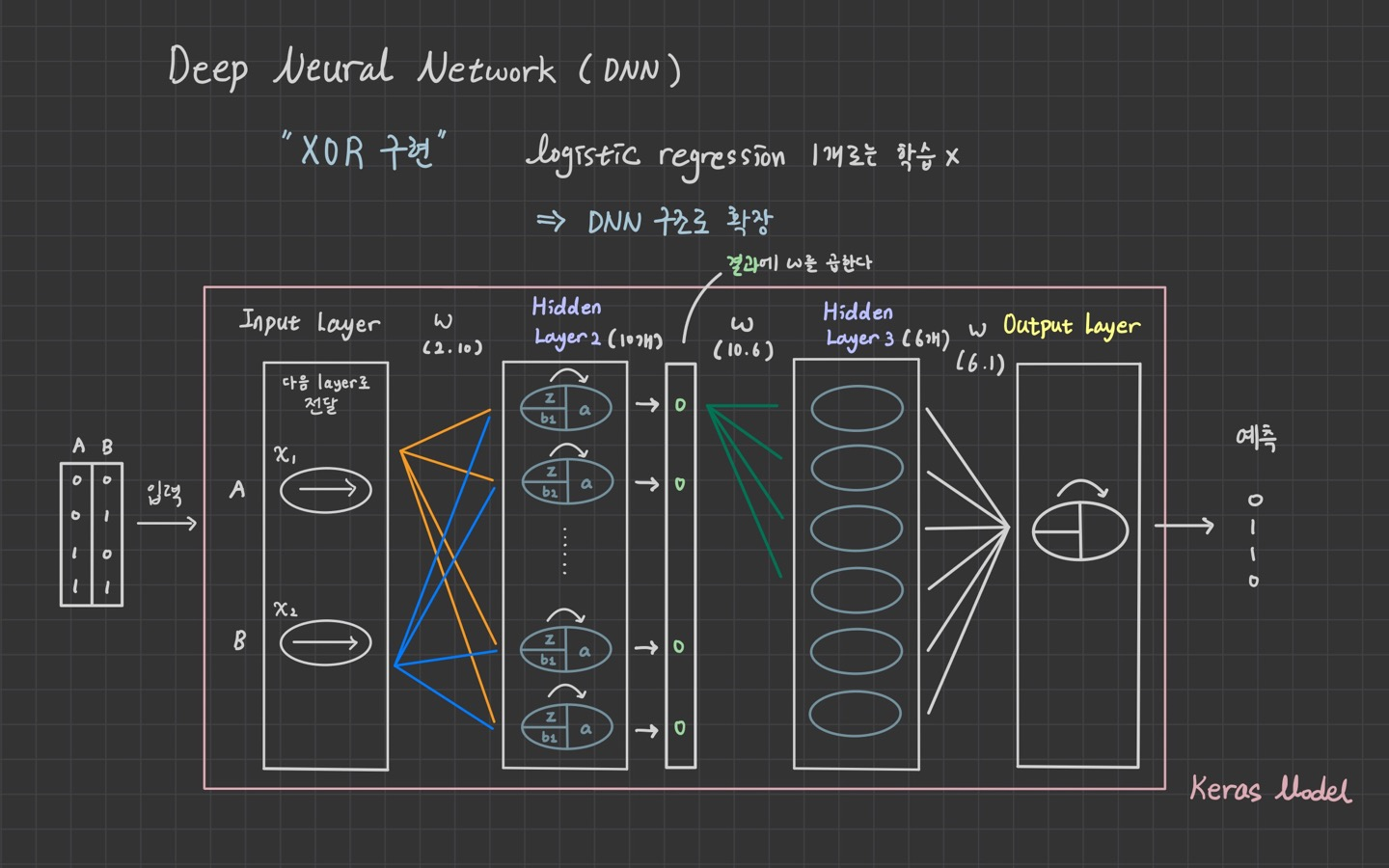

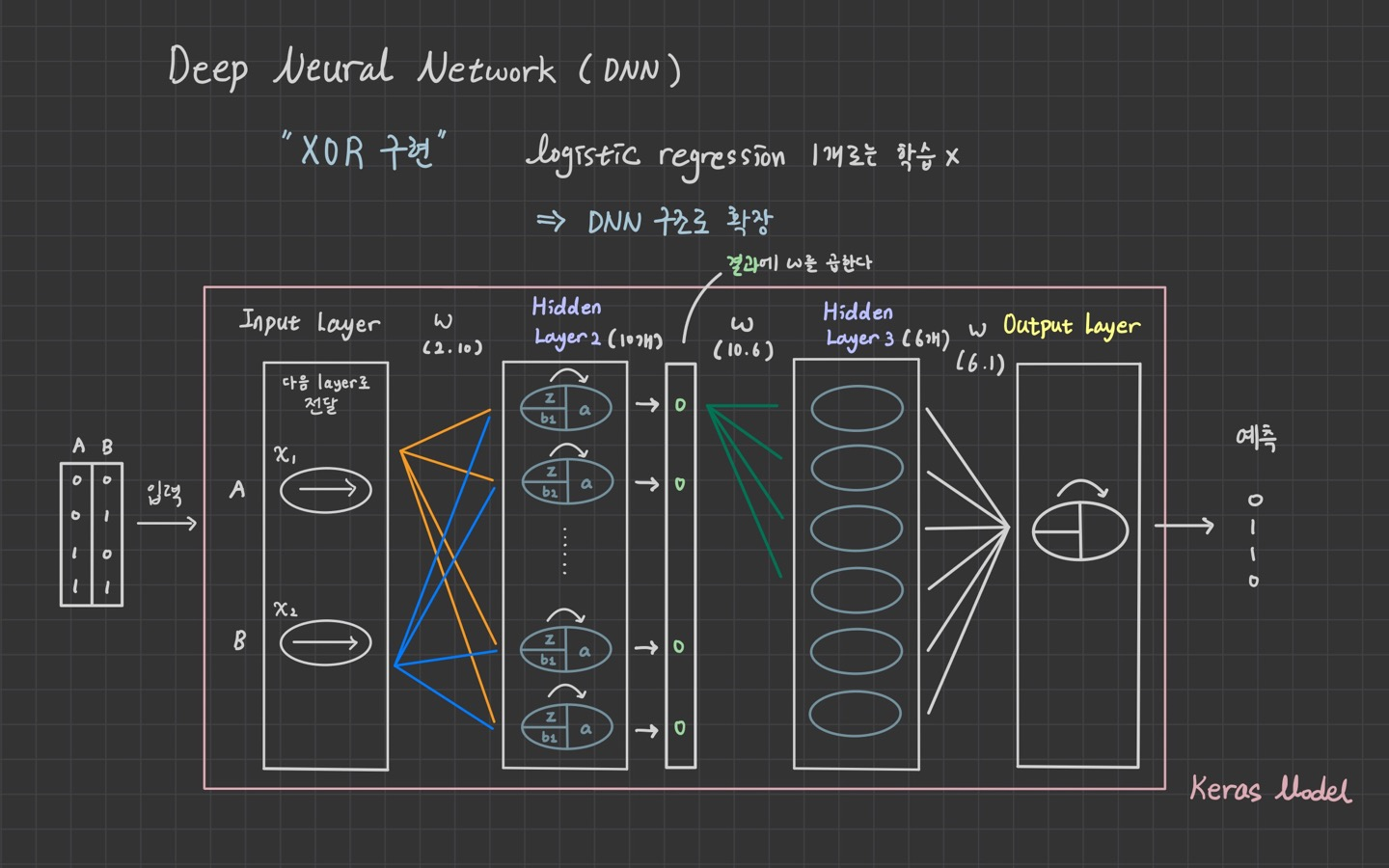

4. DNN

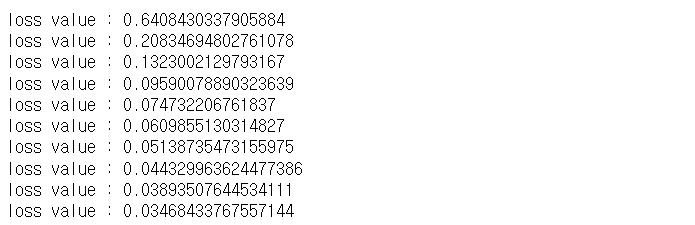

Learning GATE operations with a DNN (1)

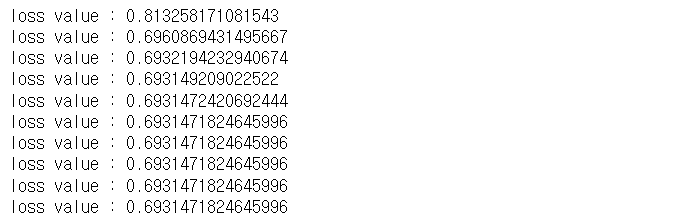

XOR

tensorflow 1.15

```python
import numpy as np
import tensorflow as tf   # tensorflow 1.15
from sklearn.metrics import classification_report

x_data = np.array([[0, 0],
                   [0, 1],
                   [1, 0],
                   [1, 1]], dtype=np.float64)

t_data = np.array([0, 1, 1, 0], dtype=np.float64)   # XOR

X = tf.placeholder(shape=[None, 2], dtype=tf.float32)
T = tf.placeholder(shape=[None, 1], dtype=tf.float32)

# Hidden layer 1: 2 inputs -> 10 units
W2 = tf.Variable(tf.random.normal([2, 10]))
b2 = tf.Variable(tf.random.normal([10]))
layer2 = tf.sigmoid(tf.matmul(X, W2) + b2)

# Hidden layer 2: 10 -> 6 units
W3 = tf.Variable(tf.random.normal([10, 6]))
b3 = tf.Variable(tf.random.normal([6]))
layer3 = tf.sigmoid(tf.matmul(layer2, W3) + b3)

# Output layer: 6 -> 1 unit
W4 = tf.Variable(tf.random.normal([6, 1]))
b4 = tf.Variable(tf.random.normal([1]))

logit = tf.matmul(layer3, W4) + b4
H = tf.sigmoid(logit)

loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=logit,
                                                              labels=T))

train = tf.train.GradientDescentOptimizer(learning_rate=1e-2).minimize(loss)

sess = tf.Session()
sess.run(tf.global_variables_initializer())
```
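The graph above stacks 2 → 10 → 6 → 1 units. Below is a NumPy sketch of the same forward pass. It is useful for checking that each `W` has shape (inputs, outputs) and each `b` has one entry per output unit. The seed and the `np.random.default_rng` initialisation stand in for `tf.random.normal` and are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
sizes = [2, 10, 6, 1]   # input, layer2, layer3, output (as in the TF graph)
params = [(rng.normal(size=(n_in, n_out)), rng.normal(size=n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

x_data = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float64)

a = x_data
for i, (W, b) in enumerate(params):
    logit = a @ W + b
    # Sigmoid on every layer; after the last layer `a` plays the role of H,
    # while `logit` is what sigmoid_cross_entropy_with_logits would receive
    a = sigmoid(logit)
    print(f'layer {i + 2}: W {W.shape}, output {a.shape}')

n_params = sum(W.size + b.size for W, b in params)
print(n_params)   # 103 = (2*10 + 10) + (10*6 + 6) + (6*1 + 1)
```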

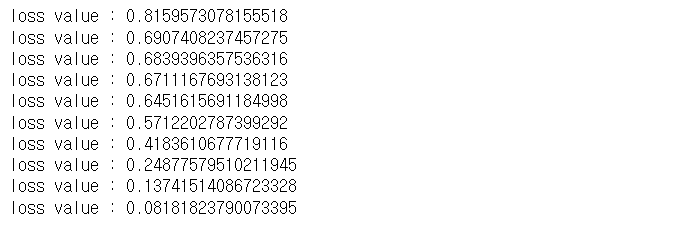

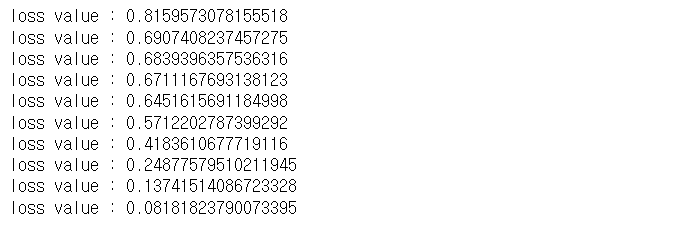

```python
# Full-batch gradient descent on the XOR data
for step in range(30000):
    _, loss_val = sess.run([train, loss],
                           feed_dict={X: x_data,
                                      T: t_data.reshape(-1, 1)})   # targets as an (N, 1) column

    if step % 3000 == 0:
        print('loss value : {}'.format(loss_val))
```
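`GradientDescentOptimizer.minimize` obtains its gradients through TensorFlow's automatic differentiation. The NumPy sketch below writes the same training loop with explicit backpropagation through the three layers. The seed, the larger learning rate (0.5) and the step count are assumptions. Whether it reaches 4/4 on XOR depends on the random start, so the only outcome relied on here is that the loss goes down.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def mean_sigmoid_ce(logit, t):
    # Stable form of the mean sigmoid cross-entropy (the same quantity as the TF loss)
    return np.mean(np.maximum(logit, 0) - logit * t + np.log1p(np.exp(-np.abs(logit))))

x_data = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float64)
t = np.array([0, 1, 1, 0], dtype=np.float64).reshape(-1, 1)

rng = np.random.default_rng(42)
sizes = [2, 10, 6, 1]
Ws = [rng.normal(size=(m, k)) for m, k in zip(sizes[:-1], sizes[1:])]
bs = [rng.normal(size=k) for k in sizes[1:]]
lr = 0.5
n = len(x_data)

losses = []
for step in range(5000):
    # Forward pass, keeping every activation for the backward pass
    acts = [x_data]
    for W, b in zip(Ws[:-1], bs[:-1]):
        acts.append(sigmoid(acts[-1] @ W + b))
    logit = acts[-1] @ Ws[-1] + bs[-1]
    losses.append(mean_sigmoid_ce(logit, t))

    # Backward pass: d(loss)/d(logit) for the mean sigmoid CE is (sigmoid(logit) - t) / N
    delta = (sigmoid(logit) - t) / n
    for i in reversed(range(len(Ws))):
        grad_W = acts[i].T @ delta
        grad_b = delta.sum(axis=0)
        if i > 0:
            # Propagate through the previous sigmoid (before updating Ws[i]): s' = s * (1 - s)
            delta = (delta @ Ws[i].T) * acts[i] * (1.0 - acts[i])
        Ws[i] -= lr * grad_W
        bs[i] -= lr * grad_b

    if step % 1000 == 0:
        print(f'loss value : {losses[-1]}')
```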

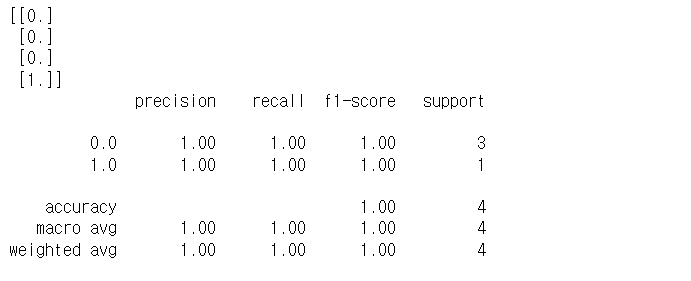

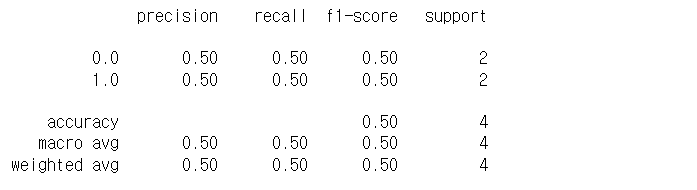

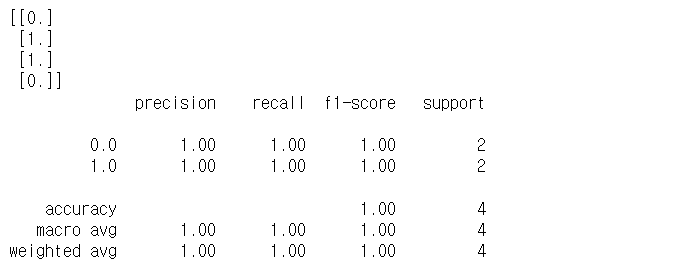

Model evaluation

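```python
# Threshold the probabilities: True/False -> 1.0/0.0
predict = tf.cast(H >= 0.5, dtype=tf.float32)

predict_val = sess.run(predict, feed_dict={X: x_data})
print(predict_val)

# classification_report expects 1-D label arrays, so flatten the (N, 1) predictions
print(classification_report(t_data, predict_val.ravel()))
```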

Learning GATE operations with a DNN (2)

XOR

tensorflow 2.X

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Flatten, Dense
from tensorflow.keras.optimizers import SGD
from sklearn.metrics import classification_report

x_data = np.array([[0, 0],
                   [0, 1],
                   [1, 0],
                   [1, 1]], dtype=np.float64)

t_data = np.array([0, 1, 1, 0], dtype=np.float64)   # XOR

model = Sequential()
model.add(Flatten(input_shape=(2,)))               # input layer: 2 features
model.add(Dense(units=128, activation='sigmoid'))   # hidden layers
model.add(Dense(units=32, activation='sigmoid'))
model.add(Dense(units=16, activation='sigmoid'))
model.add(Dense(units=1, activation='sigmoid'))     # output: probability of class 1

model.compile(optimizer=SGD(learning_rate=1e-2),
              loss='binary_crossentropy')

# verbose=0 suppresses the per-epoch training log
model.fit(x_data,
          t_data.reshape(-1, 1),
          epochs=30000,
          verbose=0)
```
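Each `Dense` layer holds `inputs × units` weights plus `units` biases, and `Flatten` has no parameters. Below is a plain-Python sketch of the parameter count that `model.summary()` would report for the stack above. The helper name `dense_param_count` is hypothetical, introduced only for this illustration.

```python
def dense_param_count(input_dim, layer_units):
    """Parameters of a stack of Dense layers: weights (in * out) plus biases (out)."""
    per_layer = []
    for units in layer_units:
        per_layer.append(input_dim * units + units)
        input_dim = units   # the next layer's input is this layer's output
    return per_layer, sum(per_layer)

per_layer, total = dense_param_count(2, [128, 32, 16, 1])
print(per_layer)   # [384, 4128, 528, 17]
print(total)       # 5057
```

The same helper gives 103 for the 2 → 10 → 6 → 1 network in the TensorFlow 1.15 version (`dense_param_count(2, [10, 6, 1])`), so the Keras model is roughly 50 times larger.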

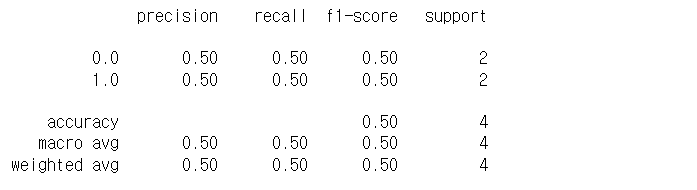

Model evaluation

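```python
predict_val = model.predict(x_data)   # probabilities, shape (4, 1)

# Threshold at 0.5 and flatten to a 1-D array for classification_report
predict_val = tf.cast(predict_val > 0.5, dtype=tf.float32).numpy().ravel()

print(classification_report(t_data, predict_val))
```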

We will come back later to why this result is a problem.