!pip install efficientnet

!pip install tensorflow-addons

import os

import numpy as np

from tensorflow.keras.preprocessing.image import ImageDataGenerator

import efficientnet

import efficientnet.tfkeras as efn

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Flatten, Dense, Dropout

from tensorflow.keras.layers import GlobalAveragePooling2D

import tensorflow_addons as tfa

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

from tensorflow.keras.optimizers import RMSprop, Adam

import matplotlib.pyplot as plt

import cv2 as cv

train_dir = '/content/drive/MyDrive/colab/cat_dog_small/train'

valid_dir = '/content/drive/MyDrive/colab/cat_dog_small/validation'

IMAGE_SIZE = 256

BATCH_SIZE = 8

LEARNING_RATE = 5e-5

def generate_preprocessing(img):
    img = cv.resize(img, (IMAGE_SIZE, IMAGE_SIZE))
    return img

train_datagen = ImageDataGenerator(rescale=1/255,
                                   rotation_range=40,
                                   width_shift_range=0.1,
                                   height_shift_range=0.1,
                                   zoom_range=0.2,
                                   horizontal_flip=True,
                                   vertical_flip=True,
                                   preprocessing_function=generate_preprocessing,
                                   fill_mode='nearest')

valid_datagen = ImageDataGenerator(rescale=1/255,
                                   preprocessing_function=generate_preprocessing)

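train_generator = train_datagen.flow_from_directory(
    train_dir,
    classes=['cats', 'dogs'],
    target_size=(IMAGE_SIZE, IMAGE_SIZE),  # must match the model's input_shape
    batch_size=BATCH_SIZE,
    class_mode='binary'
)

valid_generator = valid_datagen.flow_from_directory(
    valid_dir,
    classes=['cats', 'dogs'],
    target_size=(IMAGE_SIZE, IMAGE_SIZE),  # must match the model's input_shape
    batch_size=BATCH_SIZE,
    class_mode='binary',
    shuffle=False
)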

pretrained_network = efn.EfficientNetB4(
    weights='imagenet',
    include_top=False,
    input_shape=(IMAGE_SIZE, IMAGE_SIZE, 3)
)

pretrained_network.trainable = False

model = Sequential()

model.add(pretrained_network)

model.add(GlobalAveragePooling2D())

model.add(Dense(units=1,
                activation='sigmoid'))

es = EarlyStopping(monitor='val_loss',
                   mode='auto',
                   patience=5,
                   verbose=1)

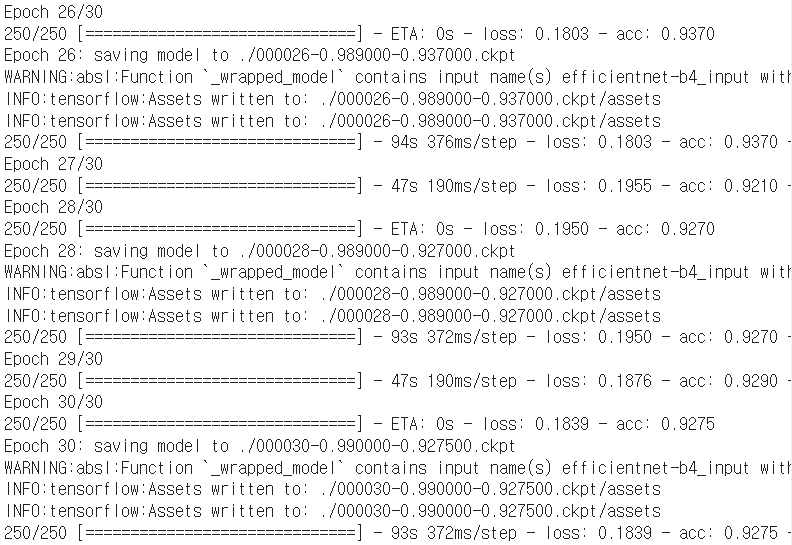

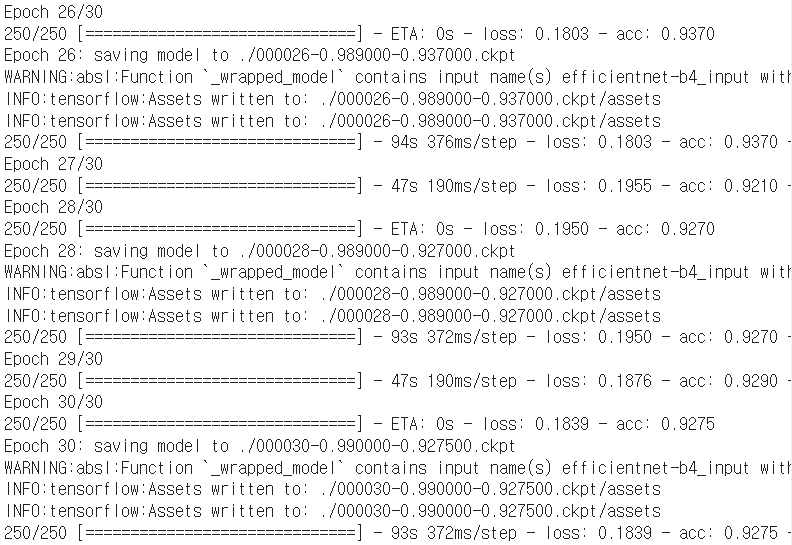

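model_checkpoint = './{epoch:06d}-{val_acc:0.6f}-{acc:0.6f}.ckpt'

checkpointer = ModelCheckpoint(
    filepath=model_checkpoint,
    monitor='val_acc',
    mode='auto',
    save_best_only=True,     # keep a checkpoint only when val_acc improves
    save_weights_only=True,  # store weights only, matching the .ckpt path
    period=2,                # check every 2 epochs (deprecated in favor of save_freq)
    verbose=1
)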

model.compile(optimizer=Adam(learning_rate=LEARNING_RATE),
              loss='binary_crossentropy',
              metrics=['acc'])

history = model.fit(train_generator,
                    steps_per_epoch=(2000 // BATCH_SIZE),
                    epochs=30,
                    validation_data=valid_generator,
                    validation_steps=(1000 // BATCH_SIZE),
                    callbacks=[es, checkpointer],
                    verbose=1)

Fine Tuning

Unfreeze a few layers of the pretrained network so that they become trainable, then retrain the model.
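
As a rough sketch of the unfreezing step, the helper below marks only the last few layers of a Keras-style model as trainable. The name `unfreeze_top_layers`, the `skip_batchnorm` flag, and the layer count used in the note afterwards are illustrative assumptions, not part of the original notebook. The helper relies only on the `layers`, `trainable`, and `name` attributes, so it should work on `pretrained_network` as defined earlier.

```python
def unfreeze_top_layers(base_model, num_layers, skip_batchnorm=True):
    """Make the last `num_layers` layers of `base_model` trainable.

    Hypothetical helper: it only assumes `base_model` exposes `.layers`
    and that each layer has `.trainable` and `.name`, as Keras models do.
    Returns the names of the layers left trainable.
    """
    # The parent flag must be True; a Keras model whose own `trainable`
    # is False reports no trainable weights at all.
    base_model.trainable = True
    cutoff = len(base_model.layers) - num_layers
    for index, layer in enumerate(base_model.layers):
        is_batchnorm = type(layer).__name__ == "BatchNormalization"
        # Assumption: BatchNormalization layers stay frozen so that
        # their learned statistics are not disturbed during fine tuning.
        layer.trainable = index >= cutoff and not (skip_batchnorm and is_batchnorm)
    return [layer.name for layer in base_model.layers if layer.trainable]
```

In this notebook the call might look like `unfreeze_top_layers(pretrained_network, 20)`, where 20 is an arbitrary starting point. It would be followed by another `model.compile(...)`, typically with a smaller learning rate such as `LEARNING_RATE / 10`, and a second `model.fit(...)`. Keras needs the recompile for the changed `trainable` flags to take effect.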