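Basic components of a Dataset

- __init__: initialization such as setting the data location and file names; the data is loaded here.
- __len__: returns the total number of samples in the Dataset.
- __getitem__: returns the sample at the given index.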
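As a minimal sketch of these three methods, the class below follows the same protocol using plain Python lists. `SquaresDataset` is a hypothetical name used only for illustration; in PyTorch the class would subclass `torch.utils.data.Dataset` and would usually return tensors.

```python
class SquaresDataset:
    """Toy map-style dataset: sample i is the pair (i, i * i)."""

    def __init__(self, n):
        # __init__: load or generate the data once and keep it on the instance
        self.inputs = list(range(n))
        self.targets = [i * i for i in range(n)]

    def __len__(self):
        # __len__: number of samples in the dataset
        return len(self.inputs)

    def __getitem__(self, idx):
        # __getitem__: return the sample stored at position idx
        return self.inputs[idx], self.targets[idx]


dataset = SquaresDataset(5)
print(len(dataset))   # 5
print(dataset[3])     # (3, 9)
```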

Implementing an Iris Dataset

import torch
from sklearn.datasets import load_iris
from torch.utils.data import Dataset

class IrisDataset(Dataset):
    def __init__(self):
        iris = load_iris()
        self.X = iris.data
        self.y = iris.target
        self.feature_names = iris.feature_names

    def __len__(self):
        # iris is local to __init__, so use the array stored on the instance
        return len(self.X)

    def __getitem__(self, idx):
        X = torch.FloatTensor(self.X[idx])
        y = torch.tensor(self.y[idx])
        return X, y

Implementing a Titanic Dataset and DataLoader

!wget https://gist.githubusercontent.com/minsuk-sung/89df85781237b7e5b2bcd34e2c17c7ee/raw/3c3ceffb81f396e85bb964d8c77b5c90b681106c/train.csv -P data/titanic

!wget https://gist.githubusercontent.com/minsuk-sung/89df85781237b7e5b2bcd34e2c17c7ee/raw/3c3ceffb81f396e85bb964d8c77b5c90b681106c/test.csv -P data/titanic

import pandas as pd
from torch.utils.data import DataLoader

class TitanicDataset(Dataset):
    def __init__(self, path, drop_features, train=True):
        self.data = pd.read_csv(path)
        # Encode the categorical columns as integers
        self.data['Sex'] = self.data['Sex'].map({'male': 0, 'female': 1})
        self.data['Embarked'] = self.data['Embarked'].map({'S': 0, 'C': 1, 'Q': 2})
        self.data = self.data.drop(drop_features, axis=1)
        self.train = train
        # Only the training CSV has a 'Survived' column; pop() also removes it from self.data
        self.y = self.data.pop('Survived') if train else None
        self.X = self.data
        self.features = list(self.data.columns)
        self.classes = ['Dead', 'Survived']  # index = label value (0: dead, 1: survived)

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        # iloc is positional, so it does not depend on the DataFrame index labels
        X = torch.tensor(self.X.iloc[idx].values, dtype=torch.float32)
        if self.train:
            return X, torch.tensor(self.y.iloc[idx])
        return X

dataset_train_titanic = TitanicDataset('./data/titanic/train.csv',
                                       drop_features=['PassengerId', 'Name', 'Ticket', 'Cabin'],
                                       train=True)

dataloader_train_titanic = DataLoader(dataset=dataset_train_titanic,
                                      batch_size=8,
                                      shuffle=True,
                                      num_workers=4,
                                      )

Implementing an MNIST Dataset and DataLoader

import torchvision
from torchvision import transforms

dataset_train_MNIST = torchvision.datasets.MNIST('data/MNIST/',
                                                 train=True,
                                                 transform=transforms.ToTensor(),
                                                 download=True,
                                                 )

Training data
- Images: train-images-idx3-ubyte.gz
- Labels: train-labels-idx1-ubyte.gz

Test data
- Images: t10k-images-idx3-ubyte.gz
- Labels: t10k-labels-idx1-ubyte.gz
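The four files above are gzip-compressed IDX files: a big-endian header (a magic number, then one 32-bit size per dimension) followed by raw unsigned bytes. The sketch below builds a tiny fake image file in memory and parses its header using only the standard library. `make_fake_idx3` and `read_idx3_header` are hypothetical helpers written for illustration; they are not part of torchvision.

```python
import gzip
import io
import struct


def make_fake_idx3(count, rows, cols):
    """Build gzip-compressed bytes laid out like an idx3-ubyte image file."""
    # Header: magic number 2051 (0x00000803), image count, rows, columns,
    # each stored as a big-endian unsigned 32-bit integer.
    header = struct.pack('>IIII', 2051, count, rows, cols)
    pixels = bytes(i % 256 for i in range(count * rows * cols))
    return gzip.compress(header + pixels)


def read_idx3_header(raw):
    """Return (magic, count, rows, cols, pixel_bytes) from gzip-compressed bytes."""
    with gzip.open(io.BytesIO(raw), 'rb') as f:
        magic, count, rows, cols = struct.unpack('>IIII', f.read(16))
        pixels = f.read()
    return magic, count, rows, cols, pixels


raw = make_fake_idx3(count=2, rows=3, cols=4)
magic, count, rows, cols, pixels = read_idx3_header(raw)
print(magic, count, rows, cols, len(pixels))  # 2051 2 3 4 24
```

Label files (idx1-ubyte) follow the same layout with magic number 2049 and a single size field, which is why reading them takes only two header reads.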

import gzip
import os

import numpy as np

BASE_MNIST_PATH = 'data/MNIST/MNIST/raw'
TRAIN_MNIST_IMAGE_PATH = os.path.join(BASE_MNIST_PATH, 'train-images-idx3-ubyte.gz')
TRAIN_MNIST_LABEL_PATH = os.path.join(BASE_MNIST_PATH, 'train-labels-idx1-ubyte.gz')
TEST_MNIST_IMAGE_PATH = os.path.join(BASE_MNIST_PATH, 't10k-images-idx3-ubyte.gz')
TEST_MNIST_LABEL_PATH = os.path.join(BASE_MNIST_PATH, 't10k-labels-idx1-ubyte.gz')

TRAIN_MNIST_PATH = {
    'image': TRAIN_MNIST_IMAGE_PATH,
    'label': TRAIN_MNIST_LABEL_PATH
}

# Functions that read the raw MNIST data

# https://stackoverflow.com/questions/40427435/extract-images-from-idx3-ubyte-file-or-gzip-via-python

def read_MNIST_images(path):
    with gzip.open(path, 'r') as f:
        # first 4 bytes is a magic number
        magic_number = int.from_bytes(f.read(4), 'big')
        # second 4 bytes is the number of images
        image_count = int.from_bytes(f.read(4), 'big')
        # third 4 bytes is the row count
        row_count = int.from_bytes(f.read(4), 'big')
        # fourth 4 bytes is the column count
        column_count = int.from_bytes(f.read(4), 'big')
        # rest is the image pixel data, each pixel is stored as an unsigned byte
        # pixel values are 0 to 255
        image_data = f.read()
        images = np.frombuffer(image_data, dtype=np.uint8)\
            .reshape((image_count, row_count, column_count))
        return images

def read_MNIST_labels(path):
    with gzip.open(path, 'r') as f:
        # first 4 bytes is a magic number
        magic_number = int.from_bytes(f.read(4), 'big')
        # second 4 bytes is the number of labels
        label_count = int.from_bytes(f.read(4), 'big')
        # rest is the label data, each label is stored as unsigned byte
        # label values are 0 to 9
        label_data = f.read()
        labels = np.frombuffer(label_data, dtype=np.uint8)
        return labels

class MyMNISTDataset(Dataset):

    _repr_indent = 4

    def __init__(self, path, transform, train=True):
        self.train = train
        self.X = read_MNIST_images(path['image'])
        self.y = read_MNIST_labels(path['label'])
        self.transform = transform
        self.path = path
        self.classes = list(range(10))

    def __len__(self):
        return len(self.X)

    def __getitem__(self, idx):
        # Apply the transform per sample here, not to the whole array in __init__.
        # ToTensor: (H x W x C) in the range [0, 255] to a torch.FloatTensor
        # of shape (C x H x W) in the range [0.0, 1.0]
        X = torch.as_tensor(self.transform(self.X[idx]), dtype=torch.double)
        if self.train:
            return X, torch.tensor(self.y[idx], dtype=torch.long)
        # without labels, return only the image
        return X

    def __repr__(self):
        '''
        Reference: https://github.com/pytorch/vision/blob/master/torchvision/datasets/vision.py
        '''
        head = "(PyTorch HomeWork) My Custom Dataset : MNIST"
        data_path = self._repr_indent*" " + "Data path: {}".format(self.path['image'])
        label_path = self._repr_indent*" " + "Label path: {}".format(self.path['label'])
        num_data = self._repr_indent*" " + "Number of datapoints: {}".format(self.__len__())
        num_classes = self._repr_indent*" " + "Number of classes: {}".format(len(self.classes))
        return '\n'.join([head,
                          data_path, label_path,
                          num_data, num_classes])

dataset_train_MyMNIST = MyMNISTDataset(path=TRAIN_MNIST_PATH,
                                       transform=transforms.Compose([
                                           transforms.ToTensor()
                                       ]),
                                       train=True
                                       )

dataloader_train_MNIST = DataLoader(dataset=dataset_train_MyMNIST,
                                    batch_size=16,
                                    shuffle=True,
                                    num_workers=4,
                                    )

# test

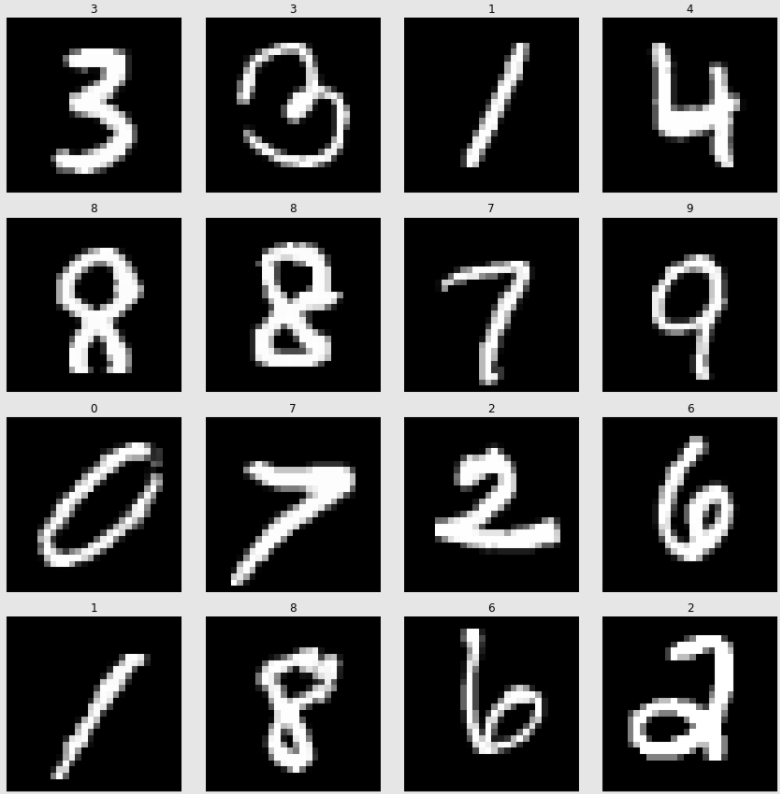

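import matplotlib.pyplot as plt

# Assumption: images and labels are one batch drawn from the DataLoader above;
# the cell that defined them is not shown in this post.
images, labels = next(iter(dataloader_train_MNIST))

plt.figure(figsize=(12,12))
for n, (image, label) in enumerate(zip(images, labels), start=1):
    plt.subplot(4,4,n)
    plt.imshow(image.numpy().squeeze(), cmap='gray')
    plt.title("{}".format(dataset_train_MyMNIST.classes[label]))
    plt.axis('off')
plt.tight_layout()
plt.show()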
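The DataLoader calls in this post pass `batch_size` and `shuffle`. Conceptually, those two options amount to shuffling the sample indices and cutting them into chunks, with every sample fetched through `__getitem__`. The sketch below shows only that idea in plain Python; `iterate_batches` is a hypothetical helper, and the real DataLoader additionally handles worker processes, samplers, and collating samples into tensors.

```python
import random


def iterate_batches(dataset, batch_size, shuffle=True, seed=0):
    """Yield lists of samples, roughly what a DataLoader does before collation."""
    indices = list(range(len(dataset)))
    if shuffle:
        # Visit the samples in a random order (seeded here for reproducibility)
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        batch_indices = indices[start:start + batch_size]
        # Each sample is fetched through dataset[idx], i.e. __getitem__
        yield [dataset[idx] for idx in batch_indices]


toy_dataset = [(i, i % 2) for i in range(10)]  # any object with __len__/__getitem__
batches = list(iterate_batches(toy_dataset, batch_size=4))
print([len(batch) for batch in batches])  # [4, 4, 2]
```

The last batch is smaller because 10 is not a multiple of 4; the 16 images plotted above fill the 4 x 4 grid exactly because `batch_size=16`.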

Implementing an AG_NEWS Dataset and DataLoader

dataset_train_AG_NEWS, dataset_test_AG_NEWS = torchtext.datasets.AG_NEWS(root='./data')

BASE_AG_NEWS_PATH = 'data/AG_NEWS'
TRAIN_AG_NEWS_PATH = os.path.join(BASE_AG_NEWS_PATH, 'train.csv')
TEST_AG_NEWS_PATH = os.path.join(BASE_AG_NEWS_PATH, 'test.csv')

# https://www.kaggle.com/amananandrai/ag-news-classification-dataset

import collections
import re

import torchtext
from tqdm import tqdm

class MyAG_NEWSDataset(Dataset):
    def __init__(self, path='./data/AG_NEWS/train.csv', train=True):
        tqdm.pandas()
        self._repr_indent = 4
        self.data = pd.read_csv(path, sep=',', header=None, names=['class','title','description'])
        self.path = path
        self.train = train
        self.X = self.data['title'] + ' ' + self.data['description']
        self.y = self.data['class']
        self.classes = ['1-World', '2-Sports', '3-Business', '4-Sci/Tech']

        # Build the vocabulary
        # (1) create a tokenizer
        # tokenizer = torchtext.data.get_tokenizer("basic_english")
        # (2) tokenize every sentence and count the tokens in a Counter
        counter = collections.Counter()
        for data in self.X:
            data = self._preprocess(data)
            # counter.update(tokenizer(data))
            counter.update(data.split(' '))
        self.vocab = torchtext.vocab.vocab(counter, min_freq=1)

        # encoder: token -> index (stoi)
        self.encoder = self.vocab.get_stoi()
        # decoder: index -> token (itos)
        self.decoder = {v: k for k, v in self.encoder.items()}

    def __len__(self):
        return len(self.X)

    def __getitem__(self, idx):
        X = self._preprocess(self.X[idx])
        # y stays None when the dataset is used without labels
        y = self.y[idx] if self.train else None
        return y, X

    def __repr__(self):
        '''
        Reference: https://github.com/pytorch/vision/blob/master/torchvision/datasets/vision.py
        '''
        head = "(PyTorch HomeWork) My Custom Dataset : AG_NEWS"
        data_path = self._repr_indent*" " + "Data path: {}".format(self.path)
        num_data = self._repr_indent*" " + "Number of datapoints: {}".format(self.__len__())
        num_classes = self._repr_indent*" " + "Number of classes: {}".format(len(self.classes))
        return '\n'.join([head, data_path, num_data, num_classes])

    def _preprocess(self, s):
        '''
        Keep only English letters, digits, and spaces, then lowercase.
        '''
        s = re.sub('[^a-zA-Z0-9 ]', '', s).lower()
        return s

dataset_train_MyAG_NEWS = MyAG_NEWSDataset(TRAIN_AG_NEWS_PATH, train=True)

dataloader_train_AGNEWS = DataLoader(dataset_train_MyAG_NEWS,
                                     batch_size=4,
                                     collate_fn=custom_bowify,
                                     shuffle=True)
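`custom_bowify` is not defined in this section. As a rough sketch of what a bag-of-words collate function could look like, the code below builds a token-to-index mapping with `collections.Counter` and turns a batch of `(label, text)` pairs into count vectors. It uses plain lists so that it runs without torch. `build_encoder` and `bowify_batch` are hypothetical names, and the actual `custom_bowify` may work differently.

```python
import collections
import re


def preprocess(text):
    # Same idea as _preprocess: keep letters, digits, and spaces, then lowercase
    return re.sub('[^a-zA-Z0-9 ]', '', text).lower()


def build_encoder(texts):
    """Map every token seen in texts to an integer index."""
    counter = collections.Counter()
    for text in texts:
        counter.update(preprocess(text).split())
    # Counter keeps first-seen order, so the indices are deterministic
    return {token: index for index, token in enumerate(counter)}


def bowify_batch(batch, encoder):
    """Turn [(label, text), ...] into (labels, bag-of-words count vectors)."""
    labels, vectors = [], []
    for label, text in batch:
        vector = [0] * len(encoder)
        for token in preprocess(text).split():
            if token in encoder:  # skip out-of-vocabulary tokens
                vector[encoder[token]] += 1
        labels.append(label)
        vectors.append(vector)
    return labels, vectors


batch = [(2, 'Goal! Late goal wins it'), (3, 'Stocks rise')]
encoder = build_encoder(text for _, text in batch)
labels, vectors = bowify_batch(batch, encoder)
print(encoder)   # {'goal': 0, 'late': 1, 'wins': 2, 'it': 3, 'stocks': 4, 'rise': 5}
print(vectors)   # [[2, 1, 1, 1, 0, 0], [0, 0, 0, 0, 1, 1]]
```

To use something like this with a DataLoader, the encoder would need to be bound first (for example with `functools.partial`) and the lists converted to tensors before being returned.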