In this post, I will introduce denoising score matching. We have to define some mathematical proofs in order to comprehend the connection between score matching and denoising autoencoders.

In short, I am going to explain some underlying knowledge for understanding NCSN (Noise Conditional Score Network).

GOAL 🎯

Relationship between Denoising AutoEncoder (DAE) and Score matching.

Parzen Kernel Estimation

Authors employ a parzen window in order to define some theorems quite strictly. Thus, I'm going to explain about this.

Parzen window is a simple concept for density estimation. In the paper, authors apply this concept to define expectations with probability density function, .

Parzen density estimation is defined with hyper-cube; Parzen window :

In the above equation, N and K are the total amount of data and the number of data in the Parzen window, respectively. V and k are the volume of the Parzen window and the Parzen window, respectively.

Linear / Tied weights DAE

In the paper, they try to define the connection between score matching and denoising autoencoder. Therefore, I will introduce a Denoising Autoencoder (DAE).

As you can know by the name "DAE", this is a simple autoencoder trained with noisy input and clear ground truth. In this paper, the authors assume that the encoder and decoder are composed of a linear weight and are shared. Resultantly, the objective of this model can be defined as :

Explicit Score Matching

Notations

: probability density model

: energy function

Langevin Sampling Method

In this section, I do not deal with the Metropolis-adjusted Langevin Algorithm.

First of all, I introduce "overdamped Langevin Ito diffusion".

This is defined as . In this definition,

is a Brownian motion. This means that the equation is a Markov chain and a stochastic process. In addition, a probability distribution of would be when .

In order to use this method, we have to discretize this definition. Simply, we just apply Euler-Maruyama method.

ESM : Explicit Score Matching

A probability function can be expressed as . In the expression, requires high computational costs. Therefore, we need indirect methods in order to understand . The score-matching method is one of these methods.

Original score functions are defined as , but the explicit score functions in this paper are defined as :

If we can model this function well, we can generate clear samples using the Langevin sampling method. This is because score functions mean a gradient flow of log probability density. Thus, we can perform a gradient ascent of probability density through the Langevin sampling method.

In order to train the score function, the objective can be defined as:

However, we cannot know ... Therefore, we have to find other methods to model the score function.

Implicit Score Matching

Make a long story short, Implicit Score Matching (ISM) is a method to train score functions, and the objective is defined as:

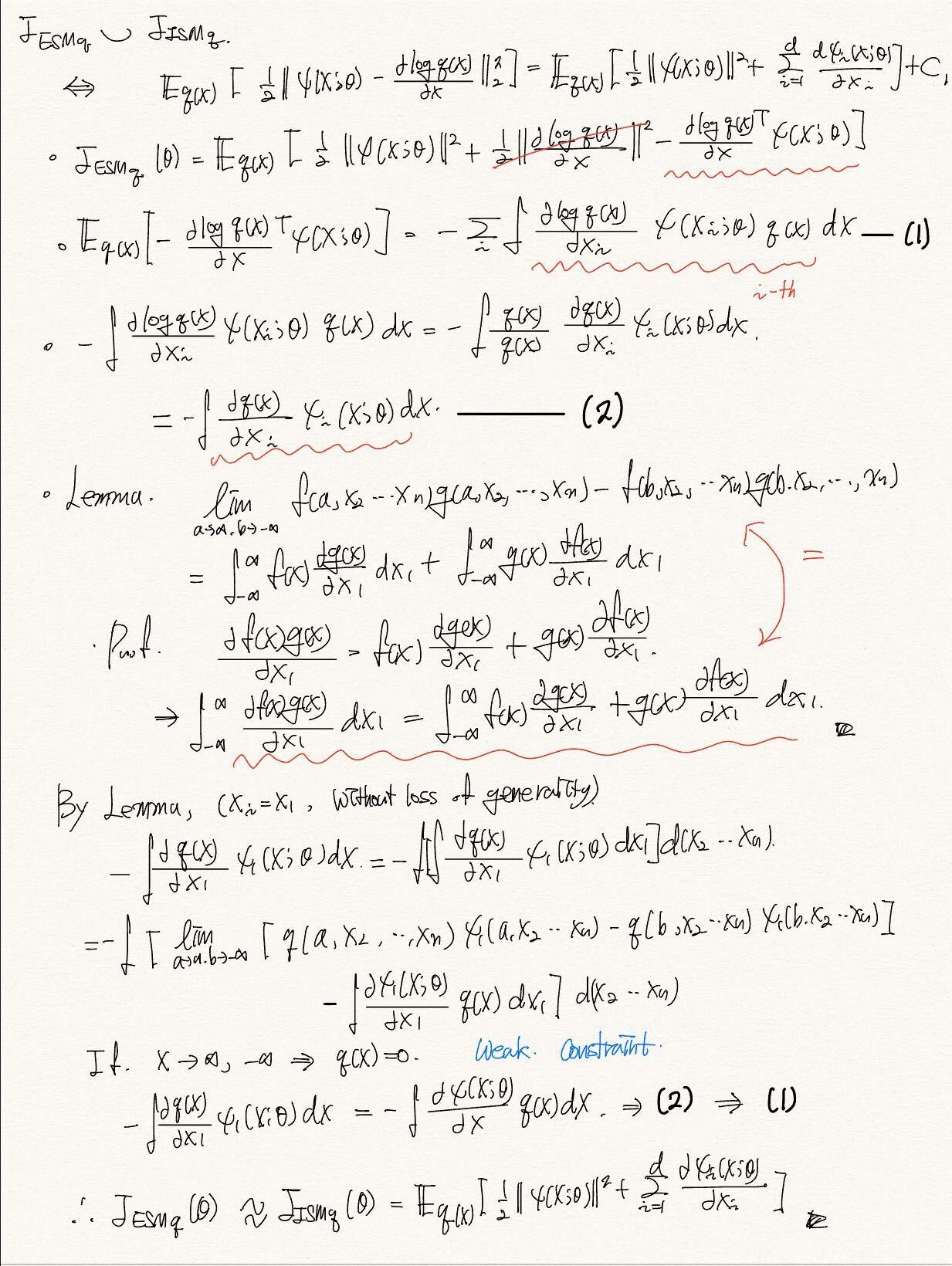

So, how can this term be derived? The details are in [Estimation of Non-Normalized Statistical Models by Score Matching : Theorem 1]. In this paper, authors prove that if some weak constraints are satisfied. means that these objectives result in same outcomes.

Proof:

In this proof, the last line has wrong sign. It must be .

Matching the Score of a Non-Parametric Estimator

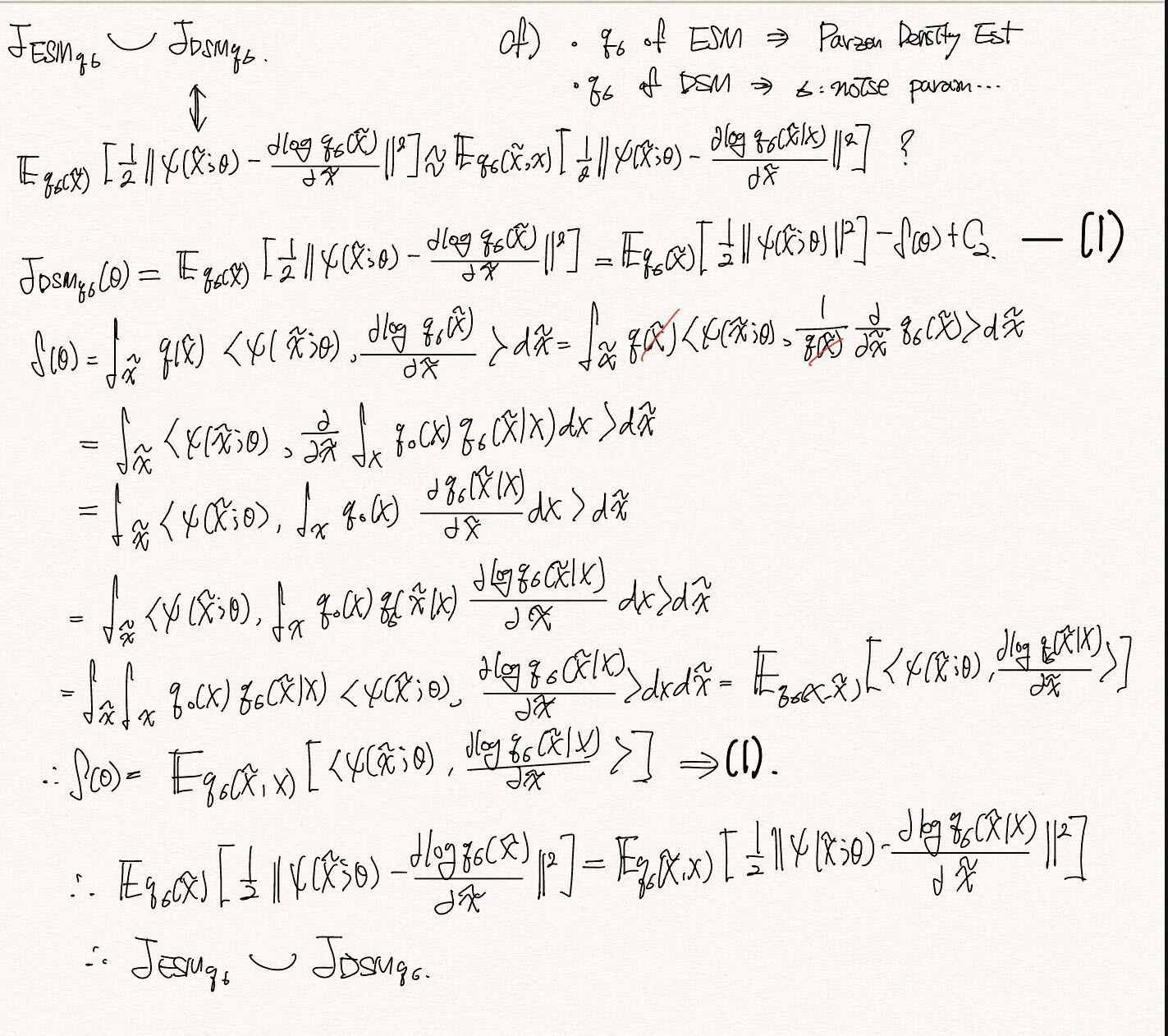

The above definition can be expressed using a non-parametric Estimator, a Parzen window. Let's define as a non-parametric estimator with a Parzen window (hyper-cube) which has a hyperparameter. Even if we define the ESM and ISM with the non-parametric estimator, the equality is satisfied.

Denoising Score Matching

First of all, ISM has a critical disadvantage. They require expensive computations because the objective of ISM needs the Hessian of the given model.

To alleviate this proble, we can use the Denoising Score Matching (DSM).

In this equation, is tractable because is a Gaussian distribution. Therefore, we can directly calculate the score function.

Proof : Appendix in the technical report : A Connection Between Score Matching and Denoising Autoencoders

https://www.iro.umontreal.ca/~vincentp/Publications/smdae_techreport.pdf

Proof:

이게 왜 작동하는가? -> proof에서 parzen density estimator를 기반으로 전개된 ESM과 비교하여 동일하다는것을 보인다. parzen density estimator와 real sample에 대한 random variables를 가지고 marginalization technique을 써서 전개를 해 나가는데, 이게 noise가 더해진 noisy data를 사용하는 것과 비교하는데 어떻게 이론적으로 맞는지가 잘 와닿지 않는다.

하지만 parzen estimator와 같은 estimator와 noisy한 샘플에 대한 pdf를 같게 볼 수 있지 않을까 라는 생각이 들긴 한다. 사실 Expectation을 사용하는 것 자체가 noise의 영향을 어느정도 배제할 수 있기 때문에 Expectation에 noised data 에 대한 데이터분포를 고려하는 것 자체가 estimator처럼 작동할 수 있었던것 아닐까.