Previous post : https://velog.io/@yhyj1001/Generative-Modeling-by-Estimating-Gradients-of-the-Data-Distribution-1-A-Connection-Between-Score-Matching-Denoising-Autoencoders

github: https://github.com/yhy258/ScoreMatching_pytorch/tree/main

Motivations & Intuitions ⭐️

Motivations 📚

- The score is a gradient taken in the ambient space of , so it is undefined when is confined to a low dimensional manifold (manifold hypothesis).

- When the support of the data distribution is confined, the score matching objective can not be a plausible score estimator. This is because the score matching function is an expectation for the given data distribution.

- Because of the scarcity of data in low density regions, score matching may not have enough evidence to estimate score functions accurately in regions of low data density.

- When two modes of the data distribution are separated by low density regions, Langevin dynamics cannot successfully reflect the relative weights of these two modes in reasonable time, and might not result in the ground truth data distribution. This is because the score function does not depend on the relative weights of the modes.

Intuitions 🧐

- The support of Gaussian noise distributions is the whole space.

- Large Gaussian noise can fill low density regions.

- Perturbed data using various levels of noise can effectively reflect the iterative sampling method, Langevin dynamics.

Contributions ⭐️

- They perturb the data using various levels of noise.

- They propose the annealing schedule for Langevin dynamics in order to accurately reflect the relative weights of the modes.

Methods

Noise Conditional Score Networks

First of all, authors define a decreasing positive geometric sequence . In addition, is a sequence of noise-perturbed distributions that converge to the true data distribution.

They employ the architecture design of UNet to jointly estimate the scores of all perturbed data distribution, .

Learning NCSNs via score matching

In order to perform the score matching, they use the denoising score matching. However, a sliced score matching can also train NCSNs.

As discussed in the previous post, the denoising score matching is defined as:

This equation can be defined because is tractable.

Then, they combine this equation for all .

where is positive.

Because they observe that when the score networks are trained to optimality, , they set coefficients as so that the values of are soughly of the same order of magnitude.

If , . In this equation, and

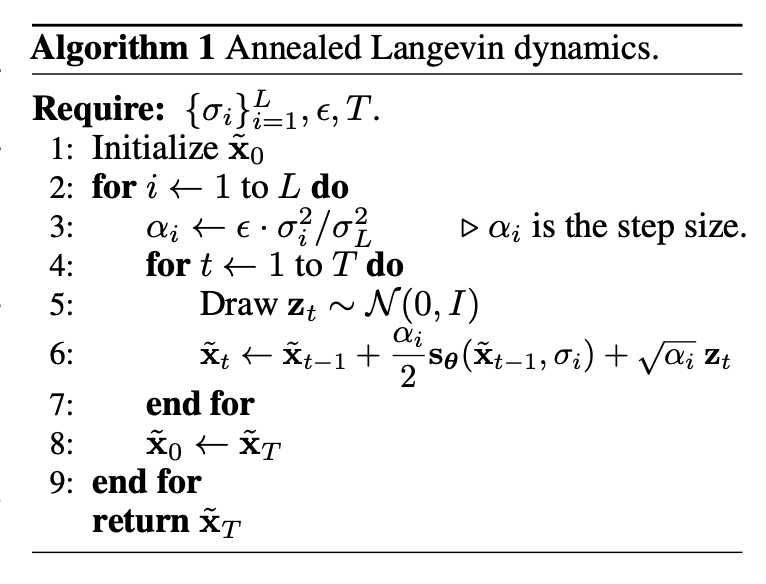

NCSN inference via annealed Langevin dynamics

- In the low density region, they employ larger step sizes.

- They can also choose in order to fix the magnitude of the "signal-to-noise" ratio in Langevin dynamics.

Langevin sampling method with the Euler method is defined as . According to this definition, SNR is .

If , SNR become .