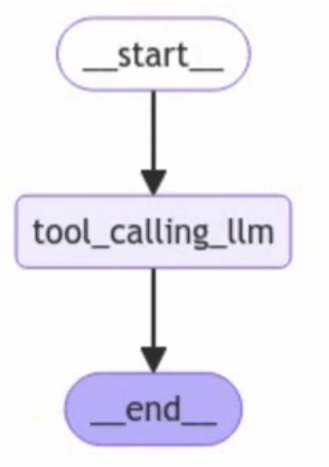

Tool calls without Router

    from IPython.display import Image, display
    from langgraph.graph import StateGraph, START, END
    from langgraph.graph import MessagesState
    from langchain_openai import ChatOpenAI

    def multiply(a: int, b: int) -> int:
        """Multiply a and b.

        Args:
            a: first int
            b: second int
        """
        return a * b

    llm = ChatOpenAI(model="gpt-4o")
    llm_with_tools = llm.bind_tools([multiply])

    # State
    class MessagesState(MessagesState):
        # Add any keys needed beyond messages, which is pre-built
        pass

    # Node
    def tool_calling_llm(state: MessagesState):
        return {"messages": [llm_with_tools.invoke(state["messages"])]}

    # Build graph
    builder = StateGraph(MessagesState)
    builder.add_node("tool_calling_llm", tool_calling_llm)
    builder.add_edge(START, "tool_calling_llm")
    builder.add_edge("tool_calling_llm", END)
    graph = builder.compile()

    # View
    display(Image(graph.get_graph().draw_mermaid_png()))

- If we pass in `Hello!`, the LLM responds without any tool calls.

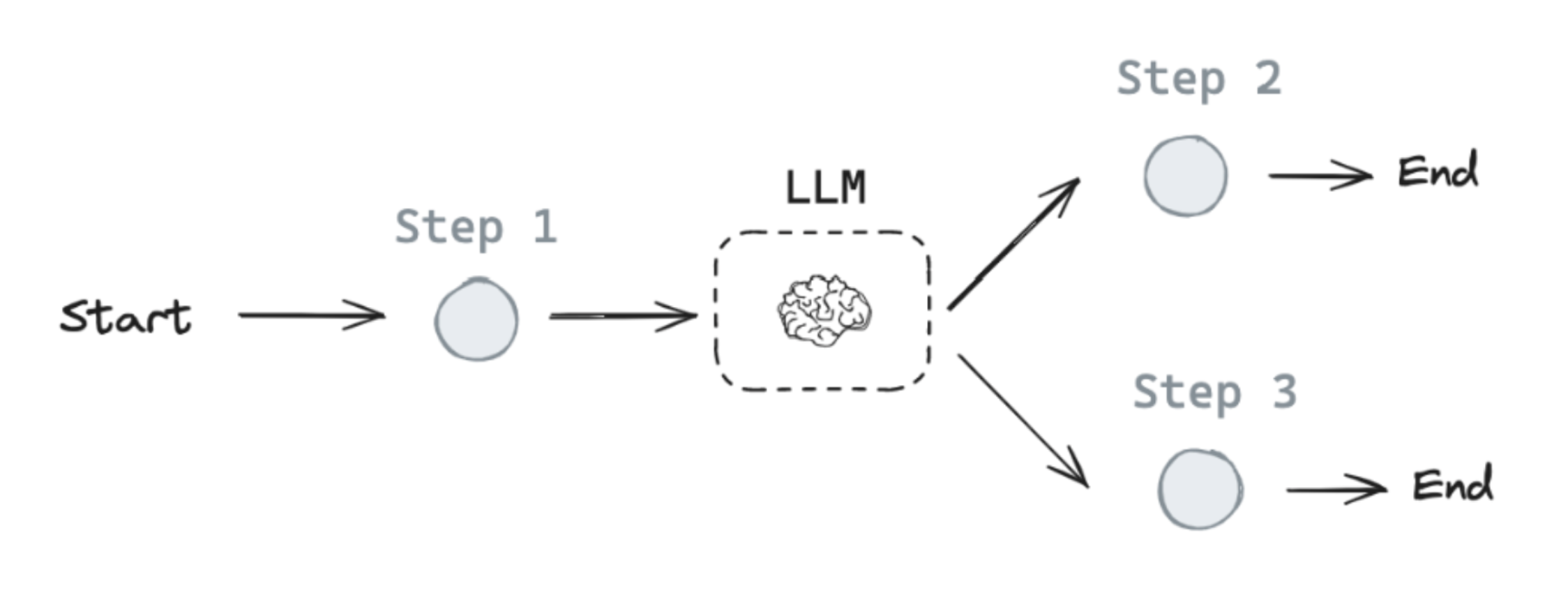

Add a node to call our tool (Router)

- (1) Add a node that will call our tool.
- (2) Add a conditional edge that looks at the chat model output and routes to our tool-calling node, or simply ends if no tool call is made.
- We use the built-in `ToolNode` and simply pass a list of our tools to initialize it.
- We use the built-in `tools_condition` as our conditional edge.
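To make the routing decision concrete, here is a minimal sketch of the kind of check a conditional edge performs. It is not the library's implementation of `tools_condition`: `FakeAIMessage`, `route_on_tool_calls`, and `END_NODE` are hypothetical names introduced only for illustration, and the sketch assumes the model's reply exposes its requested tool calls as a list on a `tool_calls` attribute.

```python
from dataclasses import dataclass, field

# Assumption: a plain string stands in for the graph's END marker in this sketch.
END_NODE = "__end__"


@dataclass
class FakeAIMessage:
    """Hypothetical stand-in for a chat model reply (not a LangChain class)."""
    content: str = ""
    tool_calls: list = field(default_factory=list)


def route_on_tool_calls(state: dict) -> str:
    """Return "tools" if the latest message requests a tool call, else END_NODE."""
    last_message = state["messages"][-1]
    # A non-empty tool_calls list means the model wants a tool to run.
    if getattr(last_message, "tool_calls", None):
        return "tools"
    return END_NODE


# A plain greeting carries no tool calls, so the graph would simply end.
plain = {"messages": [FakeAIMessage(content="Hello! How can I help?")]}

# A multiplication request carries a tool call, so it is routed to the tool node.
with_call = {
    "messages": [
        FakeAIMessage(
            tool_calls=[{"name": "multiply", "args": {"a": 2, "b": 2}, "id": "call_example"}]
        )
    ]
}

print(route_on_tool_calls(plain))
print(route_on_tool_calls(with_call))
```

The function only inspects the last message in the state, which is why the same graph can answer a greeting directly and still hand a multiplication request to the tool node.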

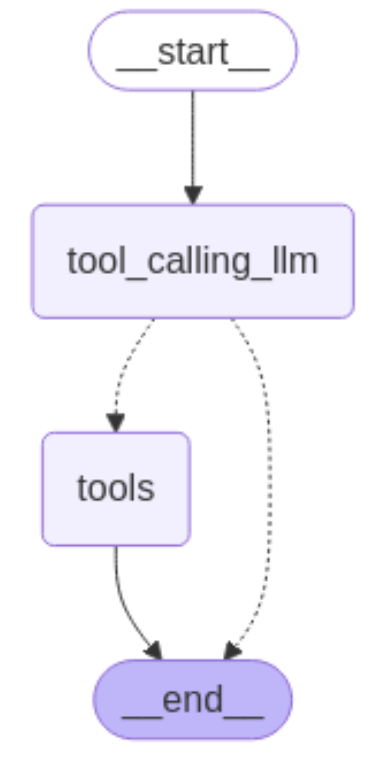

    from IPython.display import Image, display
    from langgraph.graph import StateGraph, START, END
    from langgraph.graph import MessagesState
    from langgraph.prebuilt import ToolNode
    from langgraph.prebuilt import tools_condition

    # Node
    def tool_calling_llm(state: MessagesState):
        return {"messages": [llm_with_tools.invoke(state["messages"])]}

    # Build graph
    ## Add nodes
    builder = StateGraph(MessagesState)
    builder.add_node("tool_calling_llm", tool_calling_llm)
    builder.add_node("tools", ToolNode([multiply]))

    ## Add edges
    builder.add_edge(START, "tool_calling_llm")
    builder.add_conditional_edges(
        "tool_calling_llm",
        # If the latest message (result) from assistant is a tool call -> tools_condition routes to tools
        # If the latest message (result) from assistant is not a tool call -> tools_condition routes to END
        tools_condition,
    )
    builder.add_edge("tools", END)

    ## Compile
    graph = builder.compile()

    # View
    display(Image(graph.get_graph().draw_mermaid_png()))

    from langchain_core.messages import HumanMessage

    messages = [HumanMessage(content="Hello, what is 2 multiplied by 2?")]
    messages = graph.invoke({"messages": messages})

    for m in messages['messages']:
        m.pretty_print()

Output:

    ================================ Human Message =================================

    Hello, what is 2 multiplied by 2?
    ================================== Ai Message ==================================
    Tool Calls:
      multiply (call_4XErrexQcSFn4uFq1SdWq5GJ)
     Call ID: call_4XErrexQcSFn4uFq1SdWq5GJ
      Args:
        a: 2
        b: 2
    ================================= Tool Message =================================
    Name: multiply

    4
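The tool message in the output above is the result of running `multiply` with the arguments the model requested. The sketch below shows that dispatch step in plain Python. It is an illustration under stated assumptions, not how `ToolNode` is actually implemented: `run_tool_calls` is a hypothetical helper, tool calls are assumed to be dicts with `name`, `args`, and `id` keys, and results are returned as simple dicts rather than real message objects.

```python
def multiply(a: int, b: int) -> int:
    """Multiply a and b."""
    return a * b


def run_tool_calls(tool_calls: list, tools: list) -> list:
    """Run each requested tool call and return one result dict per call.

    Hypothetical helper for illustration only.
    """
    # Look tools up by function name, since the tool call refers to the tool by name.
    tools_by_name = {tool.__name__: tool for tool in tools}
    results = []
    for call in tool_calls:
        tool = tools_by_name[call["name"]]
        output = tool(**call["args"])
        results.append(
            {
                "role": "tool",
                "name": call["name"],
                "tool_call_id": call["id"],  # ties the result back to the request
                "content": str(output),
            }
        )
    return results


# Same request as in the transcript: multiply 2 by 2 (the ID here is made up).
calls = [{"name": "multiply", "args": {"a": 2, "b": 2}, "id": "call_example"}]
results = run_tool_calls(calls, [multiply])
print(results[0]["name"], results[0]["content"])  # prints: multiply 4
```

Carrying the call ID through to the result is what lets a chat model match each tool output to the call that produced it when several tools are requested at once.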
