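📌 Goal

Today we look at security, the topic of week 5, the final week of the PKOS study.

EC2 IAM Role and Metadata

In this exercise we use the IAM token information from the EC2 metadata inside a Pod to access AWS services.

EC2 lets you query and use an instance's metadata from within the instance; it is available at "http://169.254.169.254/latest/meta-data/".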

curl 169.254.169.254/latest/meta-data/

(sparkandassociates:harbor) [root@kops-ec2 ~]# curl 169.254.169.254/latest/meta-data

ami-id

ami-launch-index

ami-manifest-path

block-device-mapping/

events/

hostname

identity-credentials/

instance-action

instance-id

instance-life-cycle

instance-type

local-hostname

local-ipv4

mac

metrics/

network/

placement/

profile

public-hostname

public-ipv4

public-keys/

reservation-id

security-groups

services/

Note that instances in OpenStack can use a metadata server in the same way.

root@y-1:~# curl 169.254.169.254/latest/meta-data

ami-id

ami-launch-index

ami-manifest-path

block-device-mapping/

hostname

instance-action

instance-id

instance-type

local-hostname

local-ipv4

placement/

public-hostname

public-ipv4

public-keys/

reservation-id

security-groups

root@y-1:~#

By default, security settings prevent this metadata from being queried from inside a pod.
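In this cluster the protection comes from the instance group's instanceMetadata settings that appear later in this post (httpTokens: required, httpPutResponseHopLimit: 1). These correspond to IMDSv2, where a client first obtains a session token with a PUT request and then sends that token with each metadata GET. With a hop limit of 1, the token response is not expected to survive the extra network hop into a pod. The following is a minimal Python sketch of that two-step exchange using only the standard library. The helper names (token_request, metadata_request, fetch) are hypothetical, and fetch only works where the endpoint is actually reachable.

```python
import urllib.request

# Link-local address of the instance metadata service (same as in the curl examples).
IMDS = "http://169.254.169.254"


def token_request(ttl_seconds: int = 21600) -> urllib.request.Request:
    """Build the IMDSv2 PUT request that asks for a session token."""
    return urllib.request.Request(
        f"{IMDS}/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def metadata_request(path: str, token: str) -> urllib.request.Request:
    """Build a metadata GET request that carries the session token."""
    return urllib.request.Request(
        f"{IMDS}/latest/meta-data/{path.lstrip('/')}",
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch(path: str, timeout: float = 2.0) -> str:
    """Run both steps; only meaningful on a host that can reach IMDS."""
    with urllib.request.urlopen(token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(metadata_request(path, token), timeout=timeout) as resp:
        return resp.read().decode()
```

On the EC2 host itself, fetch("instance-id") would be the token-based counterpart of the plain curl call above. Inside a pod on a protected node the first step is the one expected to fail.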

# Create the netshoot-pod
cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: netshoot-pod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: netshoot-pod
  template:
    metadata:
      labels:
        app: netshoot-pod
    spec:
      containers:
      - name: netshoot-pod
        image: nicolaka/netshoot
        command: ["tail"]
        args: ["-f", "/dev/null"]
      terminationGracePeriodSeconds: 0
EOF

# Store the pod names in variables

PODNAME1=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[0].metadata.name}')

PODNAME2=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[1].metadata.name}')
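The items[0] and items[1] indexes do not say which node each pod runs on, and pod names change whenever a node is replaced. As a hedged alternative, the sketch below groups pod names by node from the JSON that `kubectl get pod -l app=netshoot-pod -o json` prints. The function name pods_by_node and the trimmed SAMPLE document are illustrative assumptions; the pod and node names in it are taken from the listing shown later in this post.

```python
import json


def pods_by_node(pod_list_json: str) -> dict:
    """Return {node name: [pod names]} from `kubectl get pod -o json` output."""
    result = {}
    for item in json.loads(pod_list_json)["items"]:
        # spec.nodeName is absent while a pod is still unscheduled.
        node = item["spec"].get("nodeName", "<unscheduled>")
        result.setdefault(node, []).append(item["metadata"]["name"])
    return result


# Trimmed, hand-written sample containing only the fields the function reads.
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "netshoot-pod-7757d5dd99-qhgjv"},
         "spec": {"nodeName": "i-0c421069027ec2d2d"}},
        {"metadata": {"name": "netshoot-pod-7757d5dd99-x5lts"},
         "spec": {"nodeName": "i-066cf2f8937746e50"}},
    ]
})

if __name__ == "__main__":
    for node, pods in pods_by_node(SAMPLE).items():
        print(node, pods)
```

Looking pods up by node this way makes it explicit which pod sits on the node whose metadata protection is changed.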

# Check the EC2 metadata

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubectl exec -it $PODNAME1 -- curl 169.254.169.254 ;echo

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubectl exec -it $PODNAME2 -- curl 169.254.169.254 ;echo

Let's remove the EC2 metadata protection on one worker node and try again.

(Of the two worker instance groups, nodes-ap-northeast-2a and nodes-ap-northeast-2c, only the first is changed.)

#

kops edit ig nodes-ap-northeast-2a

---

# Remove the 3 lines below (instanceMetadata and its two settings)
spec:
  instanceMetadata:
    httpPutResponseHopLimit: 1
    httpTokens: required

---

# Apply the update: rolling update of one node

kops update cluster --yes && echo && sleep 3 && kops rolling-update cluster --yes

..

..

Detected single-control-plane cluster; won't detach before draining

NAME                           STATUS       NEEDUPDATE  READY  MIN  TARGET  MAX  NODES
control-plane-ap-northeast-2a  Ready        0           1      1    1       1    1
nodes-ap-northeast-2a          NeedsUpdate  1           0      1    1       1    1
nodes-ap-northeast-2c          Ready        0           1      1    1       1    1

..

..

I0403 10:40:08.777396 23960 instancegroups.go:467] waiting for 15s after terminating instance

I0403 10:40:23.779129 23960 instancegroups.go:501] Validating the cluster.

I0403 10:40:24.601875 23960 instancegroups.go:540] Cluster validated; revalidating in 10s to make sure it does not flap.

I0403 10:40:35.248158 23960 instancegroups.go:537] Cluster validated.

I0403 10:40:35.248192 23960 rollingupdate.go:234] Rolling update completed for cluster "sparkandassociates.net"!

Let's check the EC2 metadata from pods 1 and 2 again.

(sparkandassociates:harbor) [root@kops-ec2 ~]# kops get instances

ID NODE-NAME STATUS ROLES STATE INTERNAL-IP INSTANCE-GROUP MACHINE-TYPE

i-066cf2f8937746e50 i-066cf2f8937746e50 UpToDate node 172.30.83.26 nodes-ap-northeast-2c.sparkandassociates.net c5a.2xlarge

i-0c421069027ec2d2d i-0c421069027ec2d2d UpToDate node 172.30.34.113 nodes-ap-northeast-2a.sparkandassociates.net c5a.2xlarge

i-0d3de3051f46d267d i-0d3de3051f46d267d UpToDate control-plane 172.30.55.185 control-plane-ap-northeast-2a.masters.sparkandassociates.net c5a.2xlarge

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubectl get pod -l app=netshoot-pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

netshoot-pod-7757d5dd99-qhgjv 1/1 Running 0 8m1s 172.30.49.190 i-0c421069027ec2d2d <none> <none>

netshoot-pod-7757d5dd99-x5lts 1/1 Running 0 17m 172.30.83.71 i-066cf2f8937746e50 <none> <none>

(sparkandassociates:harbor) [root@kops-ec2 ~]# k get nodes i-0c421069027ec2d2d -o yaml | grep topology.kubernetes.io/zone

topology.kubernetes.io/zone: ap-northeast-2a

(sparkandassociates:harbor) [root@kops-ec2 ~]# k get nodes i-066cf2f8937746e50 -o yaml | grep topology.kubernetes.io/zone

topology.kubernetes.io/zone: ap-northeast-2c

(sparkandassociates:harbor) [root@kops-ec2 ~]#

# The pod on node i-0c421069027ec2d2d, where the EC2 metadata protection was removed, can now query the EC2 metadata!

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubectl exec -it $PODNAME1 -- curl 169.254.169.254 ;echo

1.0

2007-01-19

2007-03-01

2007-08-29

2007-10-10

2007-12-15

2008-02-01

2008-09-01

2009-04-04

2011-01-01

2011-05-01

2012-01-12

2014-02-25

2014-11-05

2015-10-20

2016-04-19

2016-06-30

2016-09-02

2018-03-28

2018-08-17

2018-09-24

2019-10-01

2020-10-27

2021-01-03

2021-03-23

2021-07-15

2022-09-24

latest

## The netshoot pod on node i-066cf2f8937746e50 still cannot query it.

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubectl exec -it $PODNAME2 -- curl 169.254.169.254 ;echo

(sparkandassociates:harbor) [root@kops-ec2 ~]#

## Back in pod 1, let's fetch the token information.

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubectl exec -it $PODNAME1 -- curl 169.254.169.254/latest/meta-data/iam/security-credentials/ ;echo

nodes.sparkandassociates.net

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubectl exec -it $PODNAME1 -- curl 169.254.169.254/latest/meta-data/iam/security-credentials/nodes.$KOPS_CLUSTER_NAME | jq

{

"Code": "Success",

"LastUpdated": "2023-04-03T01:40:01Z",

"Type": "AWS-HMAC",

"AccessKeyId": "ASIA3NGF...UUFH6IL5",

"SecretAccessKey": "avvnBTS+zHB...55vl9Qp",

"Token": "IQoJb3JpZ2luX2VjEPL//////////wEaDmFwLW5vcnRoZWFzdC0yIkgwRgIhAO1bRK...xjCJ3aihBjqwARkFYS+ye6qItlZqbjxOZbA4CEE79Pnn8Qt3UuRn+QqyFW1b7cseWPf24+LlKuyefBUENCpsoNNdJyF0+8FRCl2bG3vWRxfDl0TjlMmjlcB/k/tkdB8NrhAbjA/Y1g7q1va0Zgvu5so1R6yWFPrOSpp6PL6smibnVGb120++BLMC1VDgUEKM6wBX5mkJ2azjuCcWdj7Qbq3VXBNOGw/PCBVpF4jwbofcQxVKaxEvJc1m",

"Expiration": "2023-04-03T08:15:25Z"

}

👉 Challenge 1: Controlling AWS services through boto3

Using the IAM role token information taken from the EC2 metadata inside the pod,

let's use AWS services through the Python SDK, boto3.
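The scripts in this post let boto3 find the node's credentials on its own from inside the pod. As an alternative sketch, the credential document returned by the metadata endpoint could be mapped to the explicit keyword arguments that boto3.Session accepts (aws_access_key_id, aws_secret_access_key, aws_session_token), which is what would make the token usable outside the cluster as well. The function names and the placeholder values in SAMPLE are assumptions for illustration. Only the Expiration and LastUpdated timestamps are copied from the output above.

```python
import json
from datetime import datetime, timezone


def session_kwargs(credentials_json: str) -> dict:
    """Map the metadata credential document to boto3.Session keyword arguments."""
    doc = json.loads(credentials_json)
    if doc.get("Code") != "Success":
        raise ValueError(f"unexpected Code: {doc.get('Code')!r}")
    return {
        "aws_access_key_id": doc["AccessKeyId"],
        "aws_secret_access_key": doc["SecretAccessKey"],
        "aws_session_token": doc["Token"],
    }


def seconds_until_expiry(credentials_json: str, now: datetime) -> float:
    """Return how long the temporary credentials remain valid at time `now`."""
    doc = json.loads(credentials_json)
    expires = datetime.strptime(doc["Expiration"], "%Y-%m-%dT%H:%M:%SZ")
    return (expires.replace(tzinfo=timezone.utc) - now).total_seconds()


# Placeholder secrets; only the timestamps mirror the output shown above.
SAMPLE = json.dumps({
    "Code": "Success",
    "LastUpdated": "2023-04-03T01:40:01Z",
    "Type": "AWS-HMAC",
    "AccessKeyId": "ASIA-PLACEHOLDER",
    "SecretAccessKey": "SECRET-PLACEHOLDER",
    "Token": "TOKEN-PLACEHOLDER",
    "Expiration": "2023-04-03T08:15:25Z",
})

if __name__ == "__main__":
    print(sorted(session_kwargs(SAMPLE)))
```

With boto3 installed, boto3.Session(**session_kwargs(text)) would then build a session from these values. Because the credentials are temporary, checking the remaining lifetime first avoids confusing expiry errors.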

Deploying boto3

# Create pods for using boto3
cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: boto3-pod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: boto3
  template:
    metadata:
      labels:
        app: boto3
    spec:
      containers:
      - name: boto3
        image: jpbarto/boto3
        command: ["tail"]
        args: ["-f", "/dev/null"]
      terminationGracePeriodSeconds: 0
EOF

# Verify

(sparkandassociates:harbor) [root@kops-ec2 ~]# k get pod -o wide -l app=boto3

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

boto3-pod-7944d7b4db-d4z4n 1/1 Running 0 2m35s 172.30.58.42 i-0c421069027ec2d2d <none> <none>

boto3-pod-7944d7b4db-gnrpj 1/1 Running 0 12s 172.30.83.72 i-066cf2f8937746e50 <none> <none>

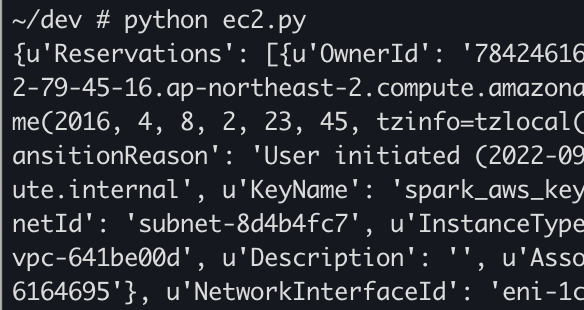

Querying instance information from the compromised pod

Let's start with something mild: querying instance information.

"Code to query instance information"

import boto3

ec2 = boto3.client('ec2', region_name = 'ap-northeast-2')

response = ec2.describe_instances()

print(response)

The sample code in the documentation leaves out the region, which causes an error here, so be sure to pass region_name to boto3.client.

(ref: https://boto3.amazonaws.com/v1/documentation/api/latest/guide/ec2-example-managing-instances.html)

All the details of the instances are printed.
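Printing the whole response is hard to read. As a small sketch, the nested Reservations and Instances lists of a describe_instances response can be flattened into one row per instance. The function name summarize_instances is hypothetical, and SAMPLE is a hand-trimmed response that reuses two instance IDs, IPs and the machine type from the `kops get instances` output above; the "running" state is an assumed value.

```python
def summarize_instances(response: dict) -> list:
    """Flatten a describe_instances response into (id, type, private IP, state) rows."""
    rows = []
    for reservation in response.get("Reservations", []):
        for inst in reservation.get("Instances", []):
            rows.append((
                inst["InstanceId"],
                inst.get("InstanceType"),
                inst.get("PrivateIpAddress"),
                inst.get("State", {}).get("Name"),
            ))
    return rows


# Hand-trimmed sample; the real response carries many more fields per instance.
SAMPLE = {
    "Reservations": [
        {"Instances": [{"InstanceId": "i-0c421069027ec2d2d",
                        "InstanceType": "c5a.2xlarge",
                        "PrivateIpAddress": "172.30.34.113",
                        "State": {"Name": "running"}}]},
        {"Instances": [{"InstanceId": "i-066cf2f8937746e50",
                        "InstanceType": "c5a.2xlarge",
                        "PrivateIpAddress": "172.30.83.26",
                        "State": {"Name": "running"}}]},
    ]
}

if __name__ == "__main__":
    for row in summarize_instances(SAMPLE):
        print(*row)
```

In the script above, passing the value returned by ec2.describe_instances() to this function instead of printing it would give one short line per instance.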

Running it in a pod created on the node where the protection is still enabled

reports that no credentials are available.

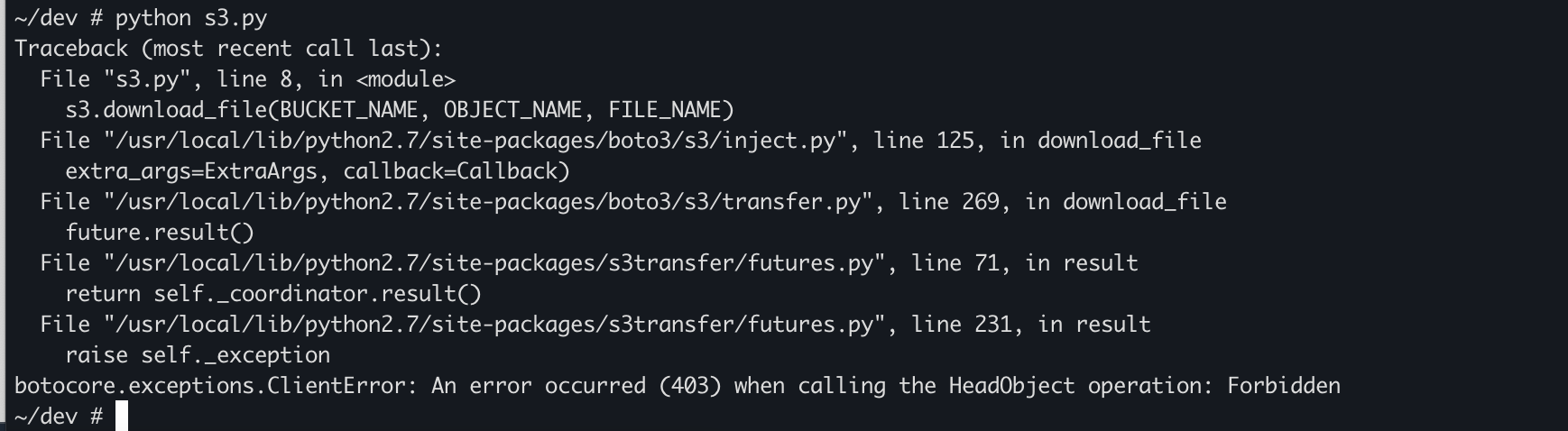

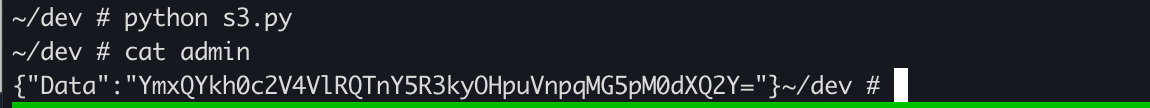

Accessing S3 and downloading a file from the compromised pod

"s3 file download code"

import boto3

s3 = boto3.client('s3')

s3.download_file('BUCKET_NAME', 'OBJECT_NAME', 'FILE_NAME')

Let's download the admin secret file from the S3 bucket "pkos2".

# cat s3.py

import boto3

BUCKET_NAME = 'pkos2'

OBJECT_NAME = 'sparkandassociates.net/secrets/admin'

FILE_NAME = 'admin'

s3 = boto3.client('s3', region_name = 'ap-northeast-2')

s3.download_file(BUCKET_NAME, OBJECT_NAME, FILE_NAME)

~/dev #

# Run the download code

~/dev # python s3.py

A 403 error occurs:

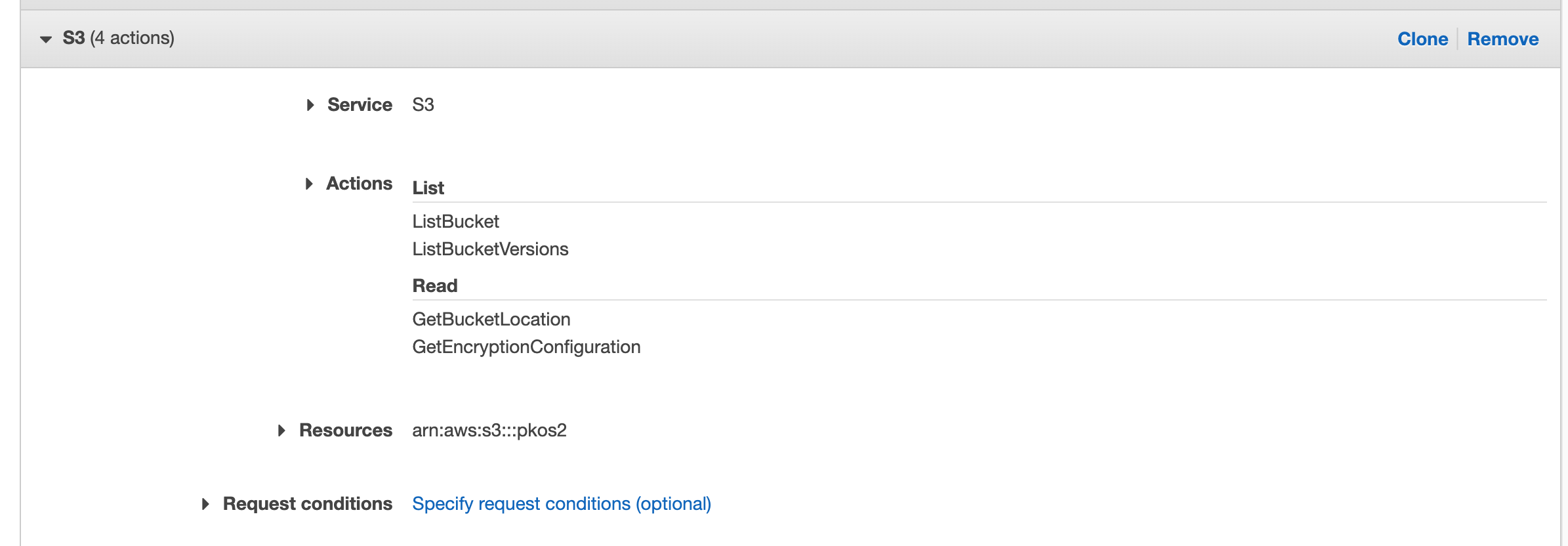

해당 EC2 인스턴스 IAM role에 S3 권한이 부족해서 그렇다.

권한을 추가해주자.

현재 조회권한만 있고

object get 권한이 없어 object 다운로드가 불가하다.

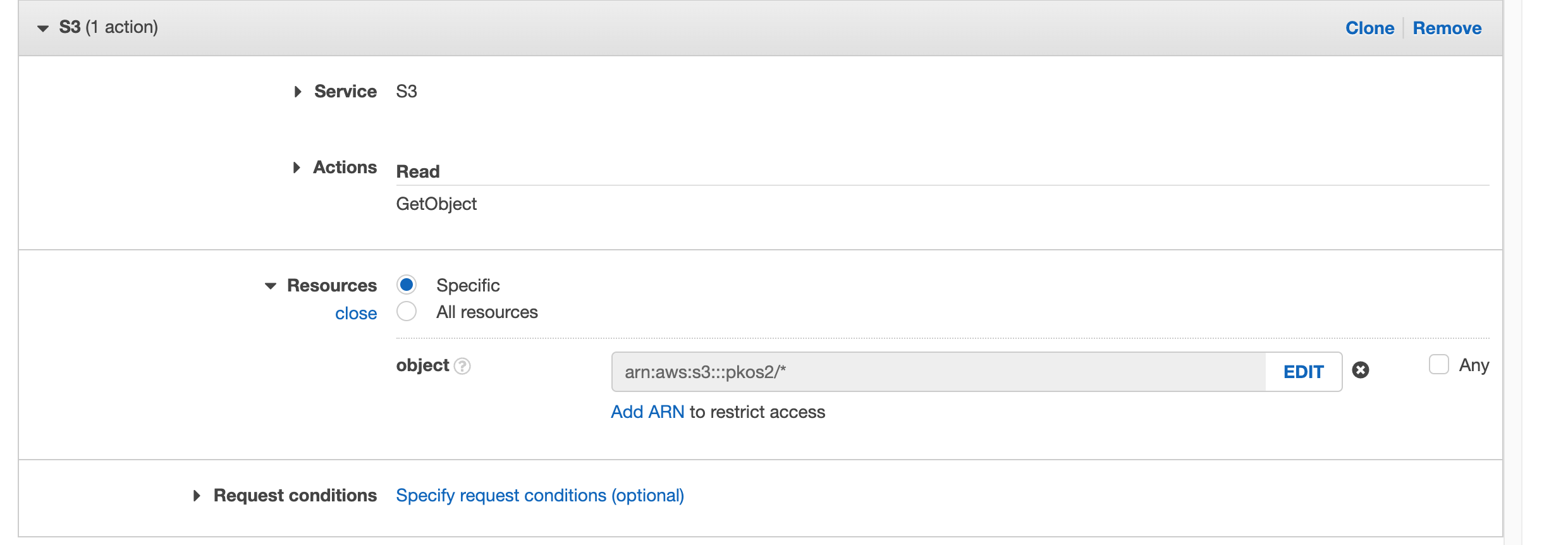

"GetObject" 권한을 추가해준다.

권한을 추가하고 다시 다운로드 코드 실행.
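The exact policy that was edited is only visible in the screenshot, so the following is an assumption about its shape rather than a copy of it. It sketches an IAM policy statement that allows s3:GetObject on objects in the pkos2 bucket, built as a Python dict and rendered as JSON. The helper names get_object_statement and policy_document are hypothetical.

```python
import json


def get_object_statement(bucket: str, prefix: str = "") -> dict:
    """Build an Allow statement for s3:GetObject on objects under bucket/prefix."""
    return {
        "Effect": "Allow",
        "Action": ["s3:GetObject"],
        # Object-level actions apply to object ARNs, hence the trailing wildcard.
        "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
    }


def policy_document(*statements: dict) -> str:
    """Wrap statements in a policy document and render it as JSON."""
    return json.dumps({"Version": "2012-10-17", "Statement": list(statements)}, indent=2)


if __name__ == "__main__":
    print(policy_document(get_object_statement("pkos2")))
```

Passing a prefix such as "sparkandassociates.net/secrets/" would narrow the grant to that key prefix instead of the whole bucket, which is the safer choice outside a lab.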

The file was downloaded from the S3 bucket inside the compromised pod.

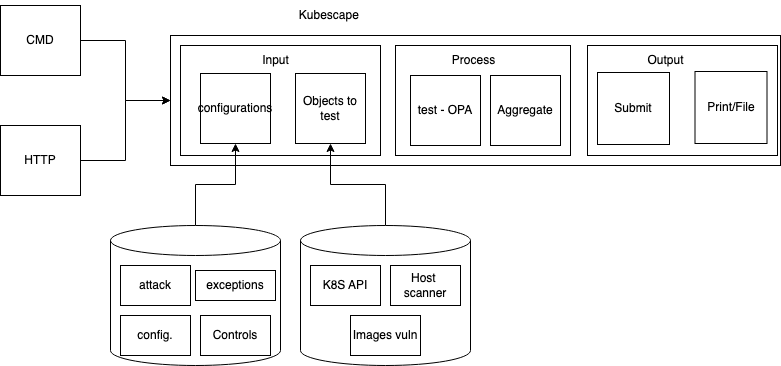

kubescape

kubescape is a tool that checks k8s clusters for vulnerabilities;

a distinguishing feature is that it also scans YAML files and Helm charts.

(Image source: https://github.com/kubescape/kubescape/blob/master/docs/architecture.md )

Installing kubescape

curl -s https://raw.githubusercontent.com/kubescape/kubescape/master/install.sh | /bin/bash

## download artifacts

kubescape download artifacts

(sparkandassociates:harbor) [root@kops-ec2 ~]# tree ~/.kubescape/

/root/.kubescape/

├── allcontrols.json

├── armobest.json

├── attack-tracks.json

├── cis-eks-t1.2.0.json

├── cis-v1.23-t1.0.1.json

├── controls-inputs.json

├── devopsbest.json

├── exceptions.json

├── mitre.json

└── nsa.json

# The available controls can be listed as follows.

kubescape list controls

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubescape list controls

+------------+---------------------------------------------------------------+------------------------------------+------------+

| CONTROL ID | CONTROL NAME | DOCS | FRAMEWORKS |

+------------+---------------------------------------------------------------+------------------------------------+------------+

| C-0001 | Forbidden Container Registries | https://hub.armosec.io/docs/c-0001 | |

+------------+---------------------------------------------------------------+------------------------------------+------------+

| C-0002 | Exec into container | https://hub.armosec.io/docs/c-0002 | |

+------------+---------------------------------------------------------------+------------------------------------+------------+

| C-0004 | Resources memory limit and | https://hub.armosec.io/docs/c-0004 | |

| | request | | |

+------------+---------------------------------------------------------------+------------------------------------+------------+

| C-0005 | API server insecure port is | https://hub.armosec.io/docs/c-0005 | |

| | enabled | | |

+------------+---------------------------------------------------------------+------------------------------------+------------+

| C-0007 | Data Destruction | https://hub.armosec.io/docs/c-0007 | |

+------------+---------------------------------------------------------------+------------------------------------+------------+

| C-0009 | Resource limits | https://hub.armosec.io/docs/c-0009 | |

...

scan

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubescape scan --enable-host-scan --verbose

A pod called host-scanner starts on each node and runs the cluster checks.

kubescape-host-scanner host-scanner-j4t5z 1/1 Running 0 6s

kubescape-host-scanner host-scanner-n2v78 1/1 Running 0 6s

kubescape-host-scanner host-scanner-v7j7d 1/1 Running 0 6s

The scan results look like this.

Controls: 65 (Failed: 35, Passed: 22, Action Required: 8)

Failed Resources by Severity: Critical — 0, High — 83, Medium — 370, Low — 128

+----------+-------------------------------------------------------+------------------+---------------+--------------------+

| SEVERITY | CONTROL NAME | FAILED RESOURCES | ALL RESOURCES | % RISK-SCORE |

+----------+-------------------------------------------------------+------------------+---------------+--------------------+

| Critical | API server insecure port is enabled | 0 | 1 | 0% |

| Critical | Disable anonymous access to Kubelet service | 0 | 3 | 0% |

| Critical | Enforce Kubelet client TLS authentication | 0 | 6 | 0% |

| Critical | CVE-2022-39328-grafana-auth-bypass | 0 | 1 | 0% |

| High | Forbidden Container Registries | 0 | 65 | Action Required * |

| High | Resources memory limit and request | 0 | 65 | Action Required * |

| High | Resource limits | 49 | 65 | 76% |

| High | Applications credentials in configuration files | 0 | 147 | Action Required * |

| High | List Kubernetes secrets | 20 | 108 | 19% |

| High | Host PID/IPC privileges | 1 | 65 | 1% |

| High | HostNetwork access | 6 | 65 | 8% |

| High | Writable hostPath mount | 3 | 65 | 4% |

| High | Insecure capabilities | 0 | 65 | 0% |

| High | HostPath mount | 3 | 65 | 4% |

| High | Resources CPU limit and request | 0 | 65 | Action Required * |

| High | Instance Metadata API | 0 | 0 | 0% |

| High | Privileged container | 1 | 65 | 1% |

| High | CVE-2021-25742-nginx-ingress-snippet-annotation-vu... | 0 | 1 | 0% |

| High | Workloads with Critical vulnerabilities exposed to... | 0 | 0 | Action Required ** |

| High | Workloads with RCE vulnerabilities exposed to exte... | 0 | 0 | Action Required ** |

| High | CVE-2022-23648-containerd-fs-escape | 0 | 3 | 0% |

| High | RBAC enabled | 0 | 1 | 0% |

| High | CVE-2022-47633-kyverno-signature-bypass | 0 | 0 | 0% |

| Medium | Exec into container | 2 | 108 | 2% |

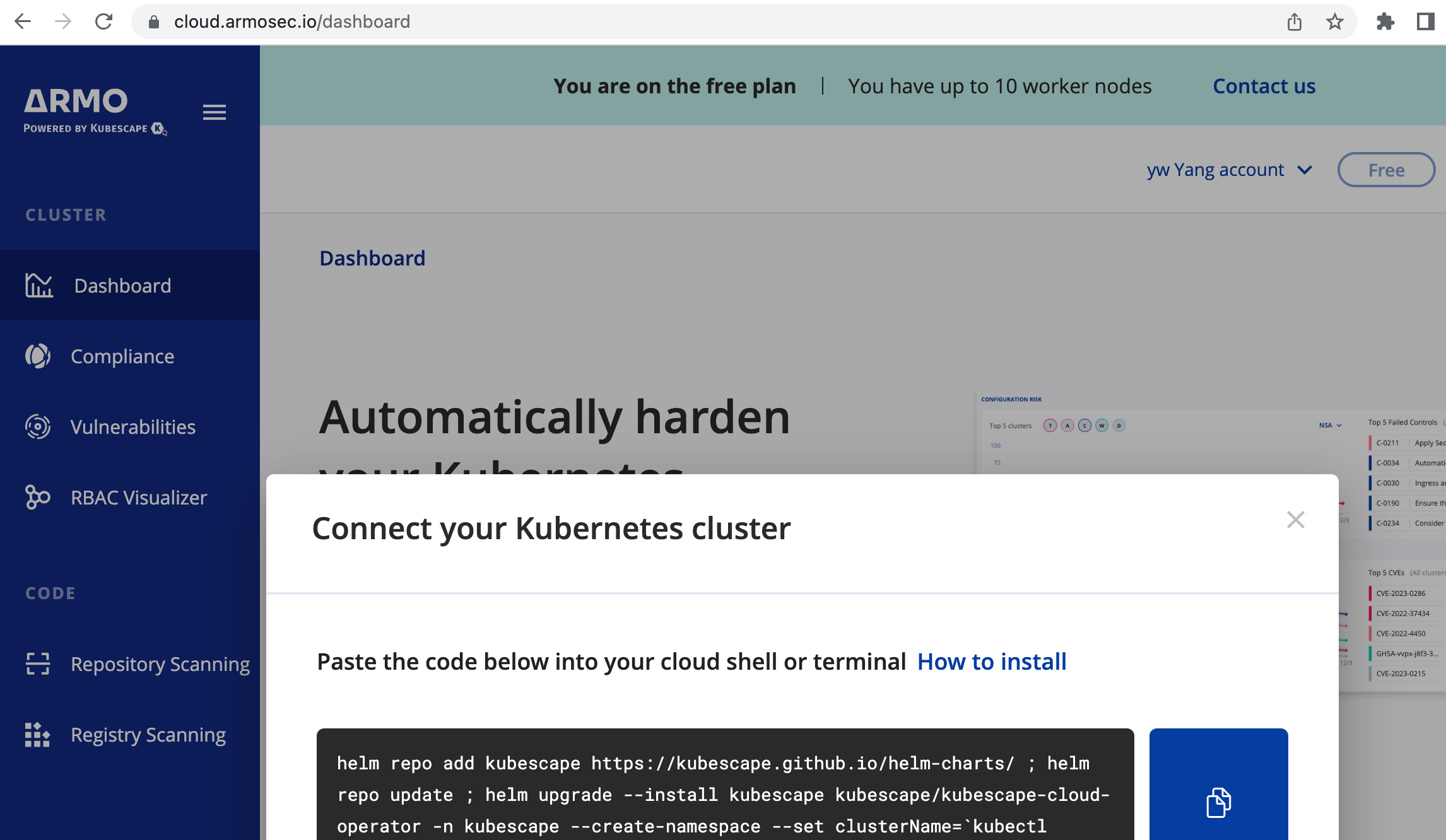

| Medium | Data Destruction | 9 | 108 | 8% |👉 도전과제2 : kubescape armo 웹 사용

kubescape provides a web service called ARMO.

Open portal.armo.cloud in a browser.

After you sign up, it shows the helm repo and chart install commands

to run on the cluster you want to scan; just copy and run them as shown.

Running it on my cluster

(sparkandassociates:harbor) [root@kops-ec2 ~]# helm repo add kubescape https://kubescape.github.io/helm-charts/ ; helm repo update ; helm upgrade --install kubescape kubescape/kubescape-cloud-operator -n kubescape --create-namespace --set clusterName=`kubectl config current-context` --set account=a014fa2a-98a6df

"kubescape" has been added to your repositories

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "kubescape" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "argo" chart repository

...Successfully got an update from the "prometheus-community" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Release "kubescape" does not exist. Installing it now.

NAME: kubescape

LAST DEPLOYED: Mon Apr 3 17:03:15 2023

NAMESPACE: kubescape

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Thank you for installing kubescape-cloud-operator version 1.10.8.

You can see and change the values of your's recurring configurations daily scan in the following link:

https://cloud.armosec.io/settings/assets/clusters/scheduled-scans?cluster=sparkandassociates-net

> kubectl -n kubescape get cj kubescape-scheduler -o=jsonpath='{.metadata.name}{"\t"}{.spec.schedule}{"\n"}'

You can see and change the values of your's recurring images daily scan in the following link:

https://cloud.armosec.io/settings/assets/images

> kubectl -n kubescape get cj kubevuln-scheduler -o=jsonpath='{.metadata.name}{"\t"}{.spec.schedule}{"\n"}'

See you!!!

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubectl -n kubescape get all

NAME READY STATUS RESTARTS AGE

pod/gateway-5b987fff9f-98shv 1/1 Running 0 22h

pod/kollector-0 1/1 Running 0 22h

pod/kubescape-6884bcf5b7-22vtp 1/1 Running 0 22h

pod/kubescape-scheduler-28009247-dbbzv 0/1 Completed 0 9h

pod/kubevuln-6d964b688c-m45jm 1/1 Running 0 22h

pod/kubevuln-scheduler-28009808-mfblx 0/1 Completed 0 10m

pod/operator-867c5bcdff-gj7v8 1/1 Running 0 22h

pod/otel-collector-5f69f464d7-cr48x 1/1 Running 0 22h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/gateway ClusterIP 100.68.29.246 <none> 8001/TCP,8002/TCP 22h

service/kubescape ClusterIP 100.69.30.16 <none> 8080/TCP 22h

service/kubevuln ClusterIP 100.69.146.86 <none> 8080/TCP,8000/TCP 22h

service/operator ClusterIP 100.64.97.181 <none> 4002/TCP 22h

service/otel-collector ClusterIP 100.64.43.12 <none> 4317/TCP 22h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/gateway 1/1 1 1 22h

deployment.apps/kubescape 1/1 1 1 22h

deployment.apps/kubevuln 1/1 1 1 22h

deployment.apps/operator 1/1 1 1 22h

deployment.apps/otel-collector 1/1 1 1 22h

NAME DESIRED CURRENT READY AGE

replicaset.apps/gateway-5b987fff9f 1 1 1 22h

replicaset.apps/kubescape-6884bcf5b7 1 1 1 22h

replicaset.apps/kubevuln-6d964b688c 1 1 1 22h

replicaset.apps/operator-867c5bcdff 1 1 1 22h

replicaset.apps/otel-collector-5f69f464d7 1 1 1 22h

NAME READY AGE

statefulset.apps/kollector 1/1 22h

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

cronjob.batch/kubescape-scheduler 47 20 * * * False 0 9h 22h

cronjob.batch/kubevuln-scheduler 8 6 * * * False 0 10m 22h

NAME COMPLETIONS DURATION AGE

job.batch/kubescape-scheduler-28009247 1/1 6s 9h

job.batch/kubevuln-scheduler-28009808 1/1 4s 10m

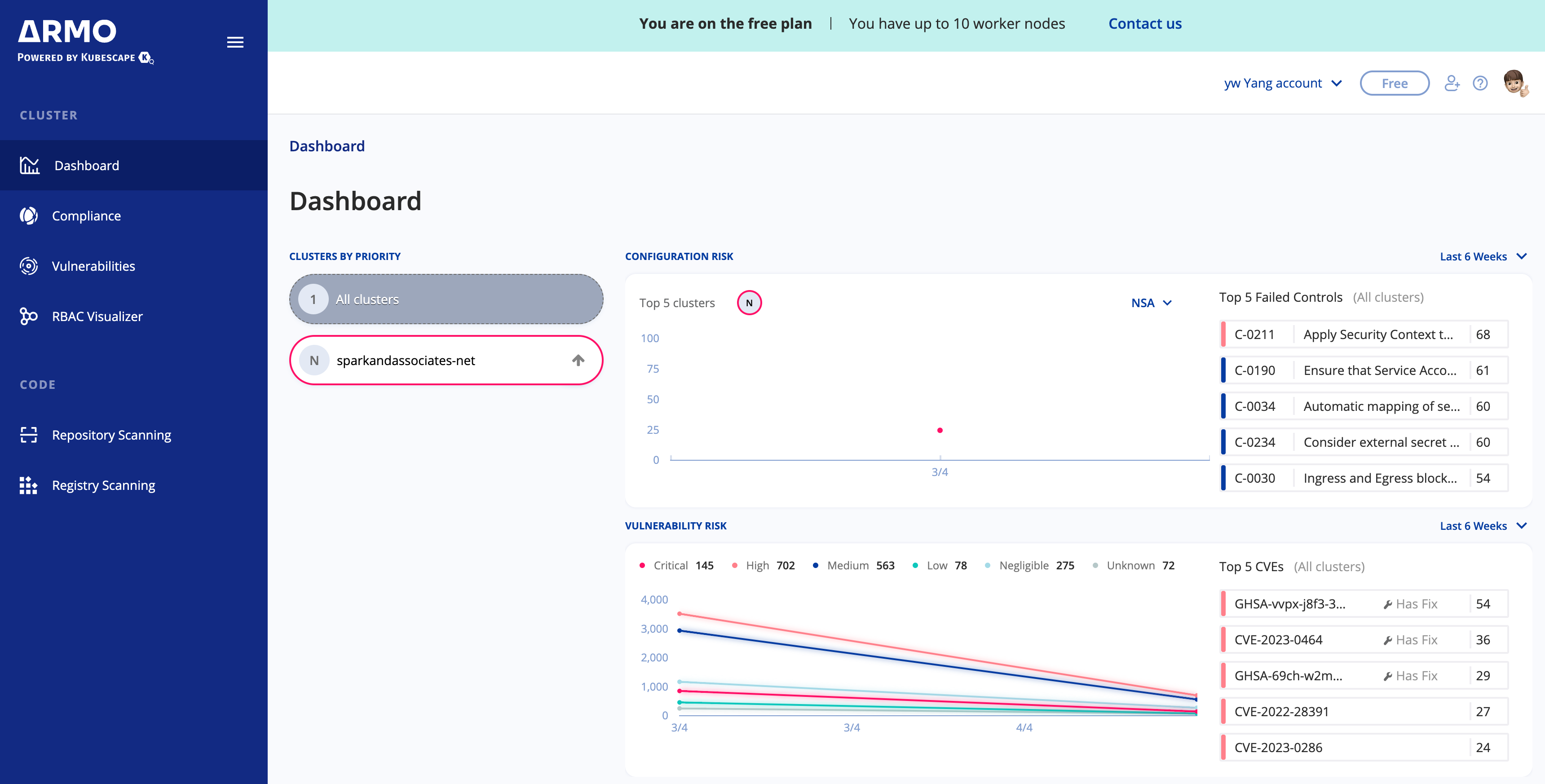

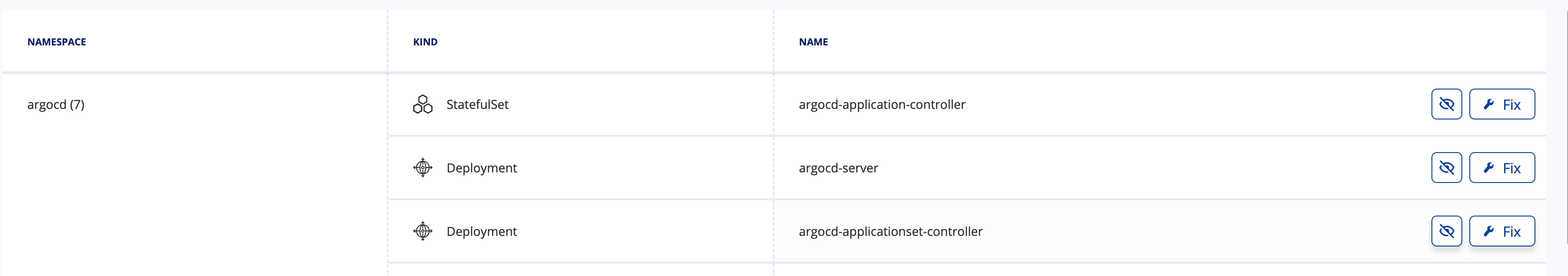

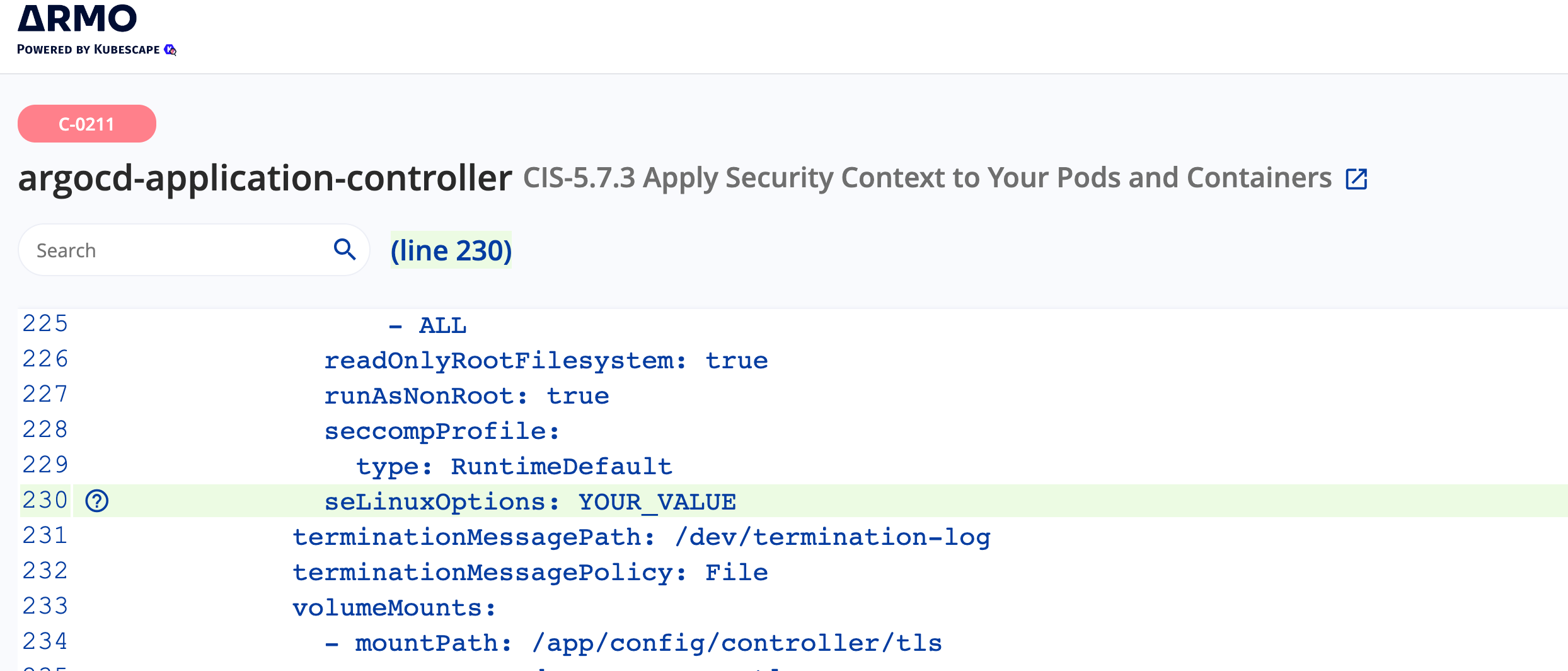

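The ARMO web service shows the list of clusters I registered along with their details,

and displays the security vulnerabilities found by scanning each cluster.

Clicking the "FIX" button opens a YAML editor that highlights the relevant lines

and tells you which values to set to remediate the finding.

However, a security scan reports against general security baselines,

so apply only what fits your environment and configuration, and check "Ignore" to skip the rest.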

SecurityContext

This reminded me of preparing for the CKS certification, so I pulled out one of the killer practice questions again.

First, deploy a pod using a Deployment as shown below.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: immutable-deployment
  labels:
    app: immutable-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: immutable-deployment
  template:
    metadata:
      labels:
        app: immutable-deployment
    spec:
      containers:
      - image: busybox:1.32.0
        command: ['sh', '-c', 'tail -f /dev/null']
        imagePullPolicy: IfNotPresent
        name: busybox
      restartPolicy: Always

Try creating a file under / in the deployed pod.

(sparkandassociates:harbor) [root@kops-ec2 ~]# k exec immutable-deployment-698dc94df9-xsdpt -- touch /abc.txt

(sparkandassociates:harbor) [root@kops-ec2 ~]# k exec immutable-deployment-698dc94df9-xsdpt -- ls -al /abc.txt

-rw-r--r-- 1 root root 0 Apr 7 13:56 /abc.txt

(sparkandassociates:harbor) [root@kops-ec2 ~]#

This time, let's apply readOnlyRootFilesystem from the security context.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: immutable-deployment
  labels:
    app: immutable-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: immutable-deployment
  template:
    metadata:
      labels:
        app: immutable-deployment
    spec:
      containers:
      - image: busybox:1.32.0
        command: ['sh', '-c', 'tail -f /dev/null']
        imagePullPolicy: IfNotPresent
        name: busybox
        securityContext:                # add
          readOnlyRootFilesystem: true  # add
        volumeMounts:                   # add
        - mountPath: /tmp               # add
          name: temp-vol                # add
      volumes:                          # add
      - name: temp-vol                  # add
        emptyDir: {}                    # add
      restartPolicy: Always

After recreating it, try creating the file again.

(sparkandassociates:harbor) [root@kops-ec2 ~]# k delete -f 1.yaml

deployment.apps "immutable-deployment" deleted

(sparkandassociates:harbor) [root@kops-ec2 ~]# k create -f 1.yaml

deployment.apps/immutable-deployment created

(sparkandassociates:harbor) [root@kops-ec2 ~]#

(sparkandassociates:harbor) [root@kops-ec2 ~]# k exec immutable-deployment-6dc8987698-7stxq -- touch /abc.txt

touch: /abc.txt: Read-only file system

command terminated with exit code 1

(sparkandassociates:harbor) [root@kops-ec2 ~]#

The creation fails with a "Read-only file system" error; in other words, the security setting has been applied.
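The same property can be checked before deploying. The sketch below, under the assumption that the manifest has already been loaded into a Python dict, lists the containers of a Deployment that do not set readOnlyRootFilesystem to true. The names writable_root_containers, deployment, BEFORE and AFTER are hypothetical; the two sample manifests are reduced versions of the ones above.

```python
def writable_root_containers(manifest: dict) -> list:
    """Return names of containers without securityContext.readOnlyRootFilesystem: true."""
    pod_spec = manifest["spec"]["template"]["spec"]
    names = []
    for container in pod_spec.get("containers", []):
        security_context = container.get("securityContext") or {}
        if security_context.get("readOnlyRootFilesystem") is not True:
            names.append(container["name"])
    return names


def deployment(container: dict) -> dict:
    """Wrap one container in the minimal Deployment structure the check reads."""
    return {"kind": "Deployment",
            "spec": {"template": {"spec": {"containers": [container]}}}}


# Reduced versions of the two manifests from this section.
BEFORE = deployment({"name": "busybox", "image": "busybox:1.32.0"})
AFTER = deployment({"name": "busybox", "image": "busybox:1.32.0",
                    "securityContext": {"readOnlyRootFilesystem": True}})

if __name__ == "__main__":
    print("before:", writable_root_containers(BEFORE))
    print("after:", writable_root_containers(AFTER))
```

This only covers the container-level field used in this post. Tools such as kubescape and Polaris apply checks of this kind across many more settings.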

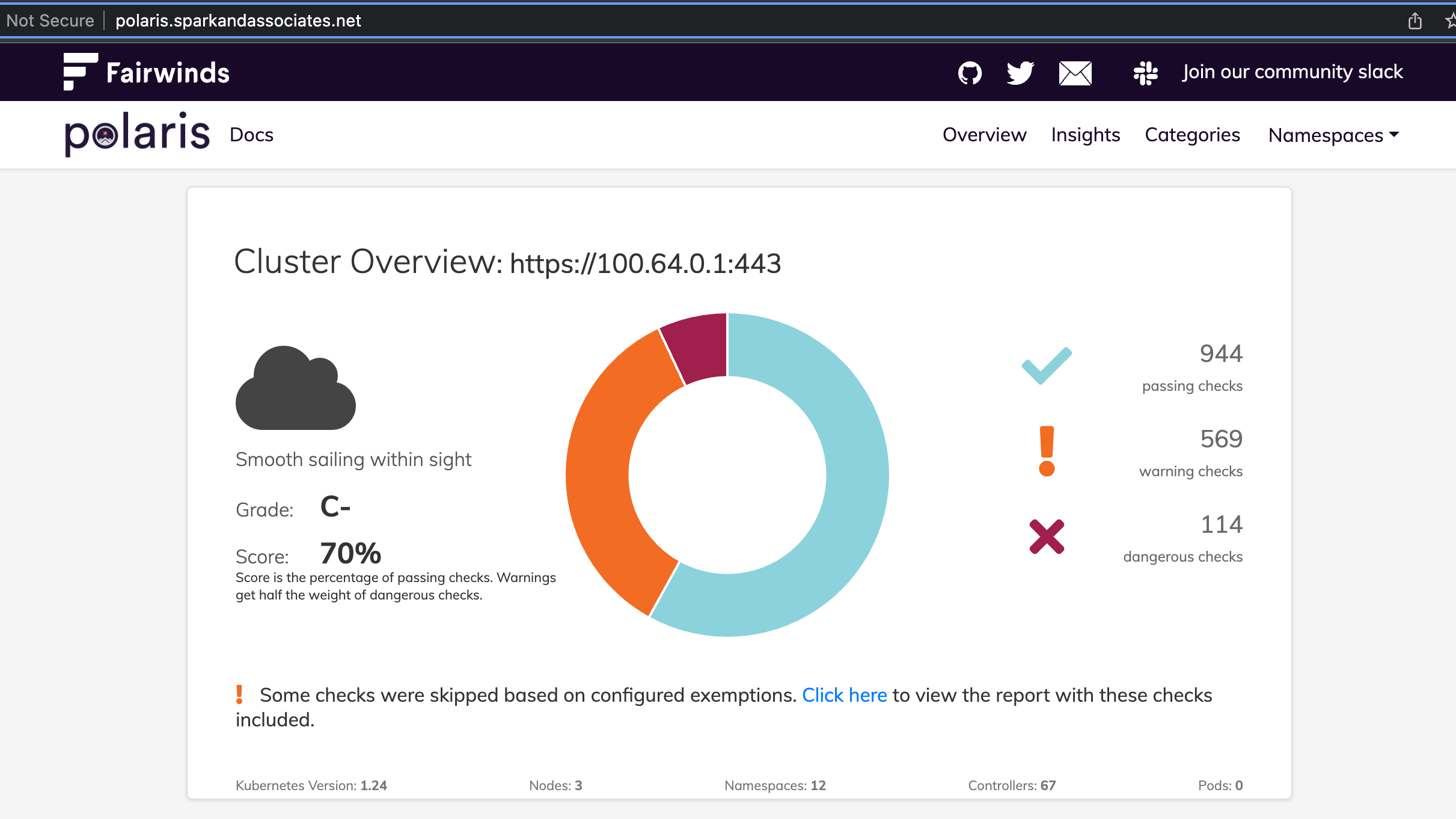

👉 Challenge 3: Using Polaris

Polaris is a security auditing tool that provides a web dashboard.

# Install
kubectl create ns polaris

#
cat <<EOT > polaris-values.yaml
dashboard:
  replicas: 1
  service:
    type: LoadBalancer
EOT

# Deploy
helm repo add fairwinds-stable https://charts.fairwinds.com/stable

helm install polaris fairwinds-stable/polaris --namespace polaris --version 5.7.2 -f polaris-values.yaml

# Connect a domain to the CLB with ExternalDNS
kubectl annotate service polaris-dashboard "external-dns.alpha.kubernetes.io/hostname=polaris.$KOPS_CLUSTER_NAME" -n polaris

# Check the web URL and connect

(sparkandassociates:harbor) [root@kops-ec2 ~]# echo -e "Polaris Web URL = http://polaris.$KOPS_CLUSTER_NAME"

Polaris Web URL = http://polaris.sparkandassociates.net

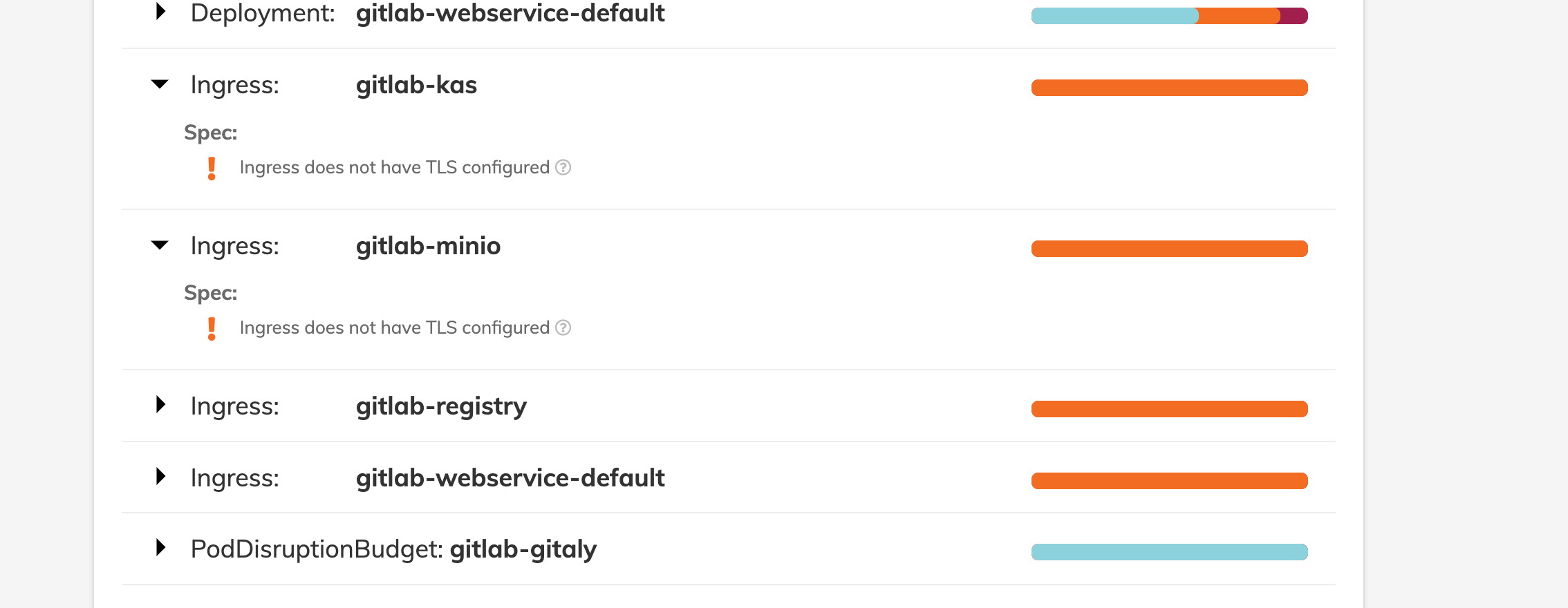

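It points out security weaknesses like this and also provides guidance.

Running it inside your own environment is good from a security standpoint,

but in usability, assessment, and remediation guidance, the kubescape ARMO service used earlier seems better.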

👉 Challenge 4: Creating an SA (Service Account) and assigning a role

Create a new service account (SA), grant it 'read-only at the cluster level (all namespaces)' permission, and test it.

(sparkandassociates:harbor) [root@kops-ec2 ~]# k create sa master

(sparkandassociates:harbor) [root@kops-ec2 ~]# kubectl create clusterrole pod-reader --verb=get,list,watch --resource=pods

clusterrole.rbac.authorization.k8s.io/pod-reader created

(sparkandassociates:harbor) [root@kops-ec2 ~]# k create clusterrolebinding master --clusterrole pod-reader --serviceaccount default:master

clusterrolebinding.rbac.authorization.k8s.io/master created

# Verify

(sparkandassociates:harbor) [root@kops-ec2 ~]# k get sa master -o yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  creationTimestamp: "2023-04-07T14:13:33Z"
  name: master
  namespace: harbor
  resourceVersion: "5061627"
  uid: 4527c4ba-f8e6-4987-a86a-007538ede6ab

(sparkandassociates:harbor) [root@kops-ec2 ~]# k get clusterrole pod-reader -o yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  creationTimestamp: "2023-04-07T14:23:06Z"
  name: pod-reader
  resourceVersion: "5063951"
  uid: 9b4f48a6-810c-40fb-99e6-68276adf9ece
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
  - list
  - watch

(sparkandassociates:harbor) [root@kops-ec2 ~]# k get clusterrolebindings master

NAME ROLE AGE

master ClusterRole/pod-reader 52s

(sparkandassociates:harbor) [root@kops-ec2 ~]# k get clusterrolebindings master -o yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  creationTimestamp: "2023-04-07T14:25:21Z"
  name: master
  resourceVersion: "5064502"
  uid: 1485d98d-d7ed-410f-833a-05a3716530f2
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: pod-reader
subjects:
- kind: ServiceAccount
  name: master
  namespace: default

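The permissions of the "master" SA can be checked with kubectl auth can-i.

Note that the SA above was created in the harbor namespace (the current context), while the binding and the checks refer to default:master. can-i evaluates the RBAC rules for the impersonated subject name, so it still answers even though no SA object was created in default.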

## Check pod create permission -> "no"

(sparkandassociates:harbor) [root@kops-ec2 ~]# k auth can-i create pod --as system:serviceaccount:default:master

no

## Pod read permission -> "yes"

(sparkandassociates:harbor) [root@kops-ec2 ~]# k auth can-i get pod --as system:serviceaccount:default:master

yes

## Also check the secret permission, which was not granted -> "no"

(sparkandassociates:harbor) [root@kops-ec2 ~]# k auth can-i get secret --as system:serviceaccount:default:master

no
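The three answers follow directly from the single rule in the pod-reader ClusterRole. As a simplified sketch (not the real Kubernetes authorizer, which also handles resource names, subresources and non-resource URLs), the matching logic can be modelled in a few lines of Python. Rule, allows, can_i and POD_READER are hypothetical names, and resources are written in their plural form as they appear in the role.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One RBAC policy rule: API groups, resources and verbs it covers."""
    api_groups: tuple
    resources: tuple
    verbs: tuple


def allows(rule: Rule, verb: str, resource: str, api_group: str = "") -> bool:
    """A rule allows a request when group, resource and verb all match."""
    def match(values: tuple, wanted: str) -> bool:
        return "*" in values or wanted in values

    return (match(rule.api_groups, api_group)
            and match(rule.resources, resource)
            and match(rule.verbs, verb))


def can_i(rules: list, verb: str, resource: str, api_group: str = "") -> bool:
    """RBAC is additive: one matching rule is enough, and no match means deny."""
    return any(allows(rule, verb, resource, api_group) for rule in rules)


# The single rule of the pod-reader ClusterRole shown above.
POD_READER = [Rule(api_groups=("",), resources=("pods",), verbs=("get", "list", "watch"))]

if __name__ == "__main__":
    for verb, resource in [("create", "pods"), ("get", "pods"), ("get", "secrets")]:
        print(verb, resource, "yes" if can_i(POD_READER, verb, resource) else "no")
```

Because there are no deny rules in RBAC, widening access to true cluster-wide read-only would mean adding more resources to the role (or binding a broader built-in role) rather than changing this evaluation.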