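Semiconductor Thin-Film Thickness Analysis

Background

As demand for high-performance semiconductors has grown, three-dimensional processes that stack semiconductors vertically are being actively researched. In processes that stack tens to hundreds of thin-film layers, defects in the films degrade thickness accuracy and uniformity. This deforms the device structure and is a major cause of performance loss. To prevent it, film thickness must be measured both quickly and accurately.

Optical spectrum analysis is widely used to measure thin-film thickness. However, analyzing an optical spectrum requires experts with substantial domain knowledge, and the analysis itself consumes considerable computing resources. To address this through big-data analysis, a competition on thickness-analysis algorithms for semiconductor devices is being held.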

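Background Material

A semiconductor thin film is a thin layer of semiconductor material; the type and thickness of the film are among the key factors that determine the characteristics of a semiconductor device. Reflectance measurement is widely used to measure film thickness, where reflectance is the ratio of reflected light intensity to incident light intensity (reflectance = reflected light / incident light). Reflectance varies with the wavelength of light, and the distribution of reflectance over wavelength is called the reflectance spectrum.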
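To make the definition concrete, here is a minimal sketch in plain Python that computes a reflectance spectrum from incident and reflected intensities. The intensity values and the function name `reflectance_spectrum` are made up for illustration and are not taken from the competition data.

```python
def reflectance_spectrum(reflected, incident):
    """Return reflected/incident for each wavelength sample."""
    if len(reflected) != len(incident):
        raise ValueError("both intensity lists must have the same length")
    return [r / i for r, i in zip(reflected, incident)]


# Hypothetical intensities at four wavelength samples (arbitrary units).
incident = [100.0, 100.0, 80.0, 50.0]
reflected = [40.0, 55.0, 20.0, 5.0]

spectrum = reflectance_spectrum(reflected, incident)
print(spectrum)  # [0.4, 0.55, 0.25, 0.1]
```

Each entry lies between 0 and 1, which matches the range of the spectrum values printed later in this notebook.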

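Structure Description

The device analyzed in this competition has a five-layer structure: silicon nitride (layer_1) / silicon dioxide (layer_2) / silicon nitride (layer_3) / silicon dioxide (layer_4) / silicon (substrate). The goal is to predict the thicknesses of layer_1 through layer_4, excluding the silicon substrate. The training data file contains each layer's thickness together with the reflectance spectrum.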

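Data Description

The train.csv file provides the thicknesses of the four thin-film layers and the reflectance spectrum as a function of wavelength. Per the header names, layer_1 through layer_4 hold the thickness of the corresponding film, and the values in columns 0 through 225 are the reflectances at the corresponding wavelengths. The header names 0 through 225 denote wavelengths, but they have been de-identified and therefore differ from the actual wavelength values.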
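The column layout described above can be sketched in plain Python as follows. The header list is rebuilt from the description (four thickness columns followed by columns 0 to 225), and the row values are placeholders rather than real measurements.

```python
# Header names as described: layer_1..layer_4, then wavelength indices 0..225.
thickness_cols = [f"layer_{i}" for i in range(1, 5)]
spectrum_cols = [str(i) for i in range(226)]
header = thickness_cols + spectrum_cols

# Placeholder row: four thicknesses followed by 226 reflectance values.
row = [10.0, 20.0, 30.0, 40.0] + [0.5] * 226

thickness = dict(zip(thickness_cols, row[:4]))  # prediction targets
spectrum = row[4:]                              # model input

print(len(header), len(spectrum))  # 230 226
print(thickness)
```

The total of 230 columns agrees with the array shapes printed when the data is loaded.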

import tensorflow as tf
from tensorflow.keras import Sequential
from tensorflow.keras import layers
import numpy as np
import matplotlib.pyplot as plt
import os

use_colab = True
assert use_colab in [True, False]

from google.colab import drive
drive.mount('/content/drive')
# Drive already mounted at /content/drive; to attempt to forcibly remount, call drive.mount("/content/drive", force_remount=True).

Data Loading

- In newer versions of Colab, the drive must be mounted directly from the folder tree on the left.

train_d = np.load("/content/drive/MyDrive/Dataset/semicon_train_data.npy")
test_d = np.load("/content/drive/MyDrive/Dataset/semicon_test_data.npy")

# train data path
# train_d = np.load("./semicon_train_data.npy")
# test data path
# test_d = np.load("./semicon_test_data.npy")

print(train_d.shape, test_d.shape)
(729000, 230) (81000, 230)

print(train_d[1].shape)
# (230,)

print(test_d[0].shape)
# (230,)

# Inspect the raw data
print(train_d[1000])
# print(test_d[0])

[7.00000000e+01 4.00000000e+01 1.50000000e+02 1.10000000e+02

4.02068880e-01 4.05173400e-01 4.22107550e-01 4.35197980e-01

4.66261860e-01 4.67785980e-01 4.76300870e-01 5.07203700e-01

5.23296600e-01 5.31541170e-01 5.23319200e-01 5.32340650e-01

5.38513840e-01 5.62478700e-01 5.74338900e-01 5.62083540e-01

5.83467800e-01 5.88462350e-01 5.89606940e-01 5.93186560e-01

5.93241930e-01 5.98241150e-01 5.87171000e-01 5.92055500e-01

6.06527860e-01 5.87087870e-01 5.94492100e-01 5.73233500e-01

5.94926300e-01 5.76777600e-01 5.70286300e-01 5.76913000e-01

5.52754400e-01 5.61735150e-01 5.52500000e-01 5.23800200e-01

5.11056840e-01 5.11732600e-01 4.92525460e-01 4.73373200e-01

4.57444160e-01 4.53428800e-01 4.19270130e-01 3.87709920e-01

3.87221520e-01 3.49848450e-01 3.16229370e-01 3.13858700e-01

2.80085700e-01 2.61263000e-01 2.30250820e-01 1.98457850e-01

1.53500440e-01 1.36026740e-01 1.08726785e-01 8.95344000e-02

6.58975300e-02 5.09539300e-02 3.69604570e-02 2.88708470e-02

1.54857090e-02 1.99594010e-02 2.33170990e-02 3.26487760e-02

5.89796340e-02 6.33707600e-02 9.20634640e-02 1.24182590e-01

1.63985540e-01 1.81501220e-01 2.16830660e-01 2.57668080e-01

2.86428720e-01 3.21613600e-01 3.65077380e-01 3.85984180e-01

4.33254400e-01 4.36655340e-01 4.60312520e-01 4.99771740e-01

5.03849100e-01 5.48682200e-01 5.58029060e-01 5.62947630e-01

5.99234340e-01 5.96399550e-01 6.04513000e-01 6.19093400e-01

6.38402700e-01 6.64816900e-01 6.56996000e-01 6.66098600e-01

6.69130500e-01 6.70661600e-01 6.80202360e-01 6.92821600e-01

6.96852400e-01 7.02015160e-01 7.00507460e-01 7.00250800e-01

7.11669300e-01 6.96838260e-01 7.08974360e-01 7.19114100e-01

7.18034700e-01 7.00589540e-01 7.05873250e-01 6.92893500e-01

7.14911160e-01 6.85250640e-01 6.82136830e-01 6.81311400e-01

6.78019200e-01 6.67259900e-01 6.78185700e-01 6.52875660e-01

6.34932940e-01 6.36031400e-01 6.16502100e-01 5.91461400e-01

5.74673350e-01 5.57246200e-01 5.42328200e-01 5.19422200e-01

5.03134850e-01 4.81939820e-01 4.45872100e-01 4.17206170e-01

4.05306970e-01 3.55970500e-01 3.27808440e-01 2.89738060e-01

2.44837760e-01 2.16494500e-01 1.69062380e-01 1.23260050e-01

1.03297760e-01 6.77379500e-02 3.89807700e-02 2.52380610e-02

2.59922780e-02 1.38827340e-02 1.90851740e-02 6.68724550e-02

7.21453900e-02 1.14929430e-01 1.77696590e-01 2.11153720e-01

2.55038900e-01 2.95599580e-01 3.21243700e-01 3.74151170e-01

3.91486200e-01 4.27665320e-01 4.61769700e-01 4.75576340e-01

4.78055980e-01 5.16503040e-01 5.30934450e-01 5.23902500e-01

5.51259300e-01 5.65235300e-01 5.67701600e-01 5.70858800e-01

5.76418300e-01 5.75021860e-01 6.01967200e-01 5.79546750e-01

5.81157900e-01 5.93576100e-01 5.84070600e-01 6.04394850e-01

5.87139800e-01 5.93731460e-01 5.91678200e-01 5.63344300e-01

5.69886800e-01 5.46404960e-01 5.43537260e-01 5.42394760e-01

5.42762940e-01 5.26218060e-01 4.89471520e-01 4.72608000e-01

4.59845570e-01 4.39476280e-01 4.24489440e-01 4.16756120e-01

4.11724180e-01 3.81418440e-01 3.60050380e-01 3.37973600e-01

3.20442830e-01 2.97488420e-01 2.85940900e-01 2.82688900e-01

2.78886770e-01 2.60735480e-01 2.64526520e-01 2.38374640e-01

2.46523140e-01 2.54422630e-01 2.63443470e-01 2.77559100e-01

3.05792480e-01 3.04755750e-01 3.47745950e-01 3.42683080e-01

3.59969050e-01 3.90264000e-01 4.21746500e-01 4.33448700e-01

4.50429620e-01 4.94950400e-01 4.90516330e-01 5.15429500e-01

5.46570300e-01 5.64438460e-01 5.68110800e-01 5.71491600e-01

5.81447660e-01 5.84012000e-01 6.05881900e-01 5.98874870e-01

5.97313170e-01 5.95610300e-01]

- Analyze the data and arrange it into a form the model can be trained on.

train = train_d[:, 4:]   # (729000, 226): reflectance spectrum, columns 4 onward
label = train_d[:, :4]   # (729000, 4): thicknesses of layer_1 to layer_4
t_train = test_d[:, 4:]  # (81000, 226): reflectance spectrum, columns 4 onward
t_label = test_d[:, :4]  # (81000, 4): thicknesses of layer_1 to layer_4

batch_size = 256

# for train
train_dataset = tf.data.Dataset.from_tensor_slices((train, label))
train_dataset = train_dataset.shuffle(10000).repeat().batch(batch_size=batch_size)
print(train_dataset)

# for test
test_dataset = tf.data.Dataset.from_tensor_slices((t_train, t_label))
test_dataset = test_dataset.batch(batch_size=batch_size)
print(test_dataset)

#<_BatchDataset element_spec=(TensorSpec(shape=(None, 226), dtype=tf.float64, name=None), TensorSpec(shape=(None, 4), dtype=tf.float64, name=None))>
#<_BatchDataset element_spec=(TensorSpec(shape=(None, 226), dtype=tf.float64, name=None), TensorSpec(shape=(None, 4), dtype=tf.float64, name=None))>

Model Configuration

- The model is currently configured as the simplest possible baseline.

- Implement a model that can learn this dataset as well as possible, then train it.
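When building on this baseline, it helps to trace tensor shapes by hand before training. The helper below is a hypothetical plain-Python sketch, not part of Keras. It only encodes the standard per-sample shape rules (Dense replaces the last axis, Reshape and Flatten rearrange the non-batch axes) to show why a Dense layer applied directly to an (8, 8, 4) tensor does not yield four outputs per sample.

```python
from math import prod


def trace_shapes(input_shape, layers):
    """Apply simple per-sample shape rules for a list of (kind, arg) layers."""
    shape = tuple(input_shape)
    for kind, arg in layers:
        if kind == "dense":        # Dense(units) replaces only the last axis
            shape = shape[:-1] + (arg,)
        elif kind == "reshape":    # Reshape(target) must keep the element count
            if prod(shape) != prod(arg):
                raise ValueError(f"cannot reshape {shape} to {arg}")
            shape = tuple(arg)
        elif kind == "flatten":    # Flatten merges all non-batch axes
            shape = (prod(shape),)
        else:
            raise ValueError(f"unknown layer kind: {kind}")
    return shape


base = [("dense", 256), ("reshape", (8, 8, 4))]
without_flatten = trace_shapes((226,), base + [("dense", 4)])
with_flatten = trace_shapes((226,), base + [("flatten", None), ("dense", 4)])

print(without_flatten)  # (8, 8, 4)
print(with_flatten)     # (4,)
```

Only the second stack produces one value per layer thickness, which is the shape the four-column label requires.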

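8 * 8 * 4
# 256

input_tensor = layers.Input(shape=(226,))
x = layers.Dense(8 * 8 * 4, activation='relu')(input_tensor)
x = layers.Reshape(target_shape=(8, 8, 4))(x)
# Dense acts only on the last axis, so flatten first to get a (batch, 4) output that matches the labels.
x = layers.Flatten()(x)
output_tensor = layers.Dense(4)(x)
model = tf.keras.Model(input_tensor, output_tensor)

Model Training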

- Let's train a model that predicts the thicknesses of the four thin-film layers.

# the save point
if use_colab:
    checkpoint_dir = './drive/My Drive/train_ckpt/semiconductor/exp1'
    if not os.path.isdir(checkpoint_dir):
        os.makedirs(checkpoint_dir)
else:
    checkpoint_dir = 'semiconductor/exp1'

cp_callback = tf.keras.callbacks.ModelCheckpoint(checkpoint_dir,
                                                 save_weights_only=True,
                                                 monitor='val_loss',
                                                 mode='auto',
                                                 save_best_only=True,
                                                 verbose=1)

model.compile(loss='mae',  # mse
              optimizer='adam',
              metrics=['mae'])  # mse

# Note: cp_callback is not passed to fit() here; add callbacks=[cp_callback] to save checkpoints.
history = model.fit(train_dataset,
                    steps_per_epoch=len(train) // batch_size,  # length of train data // batch size
                    epochs=100,
                    validation_data=test_dataset,
                    validation_steps=len(t_train) // batch_size)

Epoch 1/100

2847/2847 [==============================] - 44s 12ms/step - loss: 98.3696 - mae: 98.3696 - val_loss: 55.0984 - val_mae: 55.0984

Epoch 2/100

2847/2847 [==============================] - 33s 12ms/step - loss: 41.6744 - mae: 41.6744 - val_loss: 32.9359 - val_mae: 32.9359

Epoch 3/100

2847/2847 [==============================] - 35s 12ms/step - loss: 25.3576 - mae: 25.3576 - val_loss: 18.7295 - val_mae: 18.7295

Epoch 4/100

2847/2847 [==============================] - 33s 12ms/step - loss: 18.0173 - mae: 18.0173 - val_loss: 14.7011 - val_mae: 14.7011

Epoch 5/100

2847/2847 [==============================] - 33s 12ms/step - loss: 15.6218 - mae: 15.6218 - val_loss: 12.6688 - val_mae: 12.6688

Epoch 6/100

2847/2847 [==============================] - 33s 12ms/step - loss: 14.1532 - mae: 14.1532 - val_loss: 11.6533 - val_mae: 11.6533

Epoch 7/100

2847/2847 [==============================] - 33s 12ms/step - loss: 13.1076 - mae: 13.1076 - val_loss: 10.7831 - val_mae: 10.7831

Epoch 8/100

2847/2847 [==============================] - 34s 12ms/step - loss: 12.3764 - mae: 12.3764 - val_loss: 10.1933 - val_mae: 10.1933

Epoch 9/100

2847/2847 [==============================] - 33s 11ms/step - loss: 11.7947 - mae: 11.7947 - val_loss: 9.5747 - val_mae: 9.5747

Epoch 10/100

2847/2847 [==============================] - 34s 12ms/step - loss: 11.2987 - mae: 11.2987 - val_loss: 9.3836 - val_mae: 9.3836

Epoch 11/100

2847/2847 [==============================] - 33s 12ms/step - loss: 10.8654 - mae: 10.8654 - val_loss: 9.2192 - val_mae: 9.2192

Epoch 12/100

2847/2847 [==============================] - 33s 12ms/step - loss: 10.5522 - mae: 10.5522 - val_loss: 8.2976 - val_mae: 8.2976

Epoch 13/100

2847/2847 [==============================] - 32s 11ms/step - loss: 10.2725 - mae: 10.2725 - val_loss: 8.2708 - val_mae: 8.2708

Epoch 14/100

2847/2847 [==============================] - 34s 12ms/step - loss: 10.0172 - mae: 10.0172 - val_loss: 7.6924 - val_mae: 7.6924

Epoch 15/100

2847/2847 [==============================] - 33s 12ms/step - loss: 9.7965 - mae: 9.7965 - val_loss: 7.5464 - val_mae: 7.5464

Epoch 16/100

2847/2847 [==============================] - 33s 12ms/step - loss: 9.6332 - mae: 9.6332 - val_loss: 7.3972 - val_mae: 7.3972

Epoch 17/100

2847/2847 [==============================] - 33s 11ms/step - loss: 9.4276 - mae: 9.4276 - val_loss: 7.2775 - val_mae: 7.2775

Epoch 18/100

2847/2847 [==============================] - 32s 11ms/step - loss: 9.2707 - mae: 9.2707 - val_loss: 7.1137 - val_mae: 7.1137

Epoch 19/100

2847/2847 [==============================] - 33s 12ms/step - loss: 9.1206 - mae: 9.1206 - val_loss: 6.9142 - val_mae: 6.9142

Epoch 20/100

2847/2847 [==============================] - 34s 12ms/step - loss: 8.9705 - mae: 8.9705 - val_loss: 6.8319 - val_mae: 6.8319

Epoch 21/100

2847/2847 [==============================] - 35s 12ms/step - loss: 8.8938 - mae: 8.8938 - val_loss: 6.7131 - val_mae: 6.7131

Epoch 22/100

2847/2847 [==============================] - 34s 12ms/step - loss: 8.7869 - mae: 8.7869 - val_loss: 6.4702 - val_mae: 6.4702

Epoch 23/100

2847/2847 [==============================] - 36s 13ms/step - loss: 8.6596 - mae: 8.6596 - val_loss: 6.5114 - val_mae: 6.5114

Epoch 24/100

2847/2847 [==============================] - 37s 13ms/step - loss: 8.5861 - mae: 8.5861 - val_loss: 6.3033 - val_mae: 6.3033

Epoch 25/100

2847/2847 [==============================] - 33s 12ms/step - loss: 8.4791 - mae: 8.4791 - val_loss: 6.2558 - val_mae: 6.2558

Epoch 26/100

2847/2847 [==============================] - 35s 12ms/step - loss: 8.3915 - mae: 8.3915 - val_loss: 6.1372 - val_mae: 6.1372

Epoch 27/100

2847/2847 [==============================] - 34s 12ms/step - loss: 8.3137 - mae: 8.3137 - val_loss: 6.0292 - val_mae: 6.0292

Epoch 28/100

2847/2847 [==============================] - 33s 12ms/step - loss: 8.2411 - mae: 8.2411 - val_loss: 6.2980 - val_mae: 6.2980

Epoch 29/100

2847/2847 [==============================] - 32s 11ms/step - loss: 8.1478 - mae: 8.1478 - val_loss: 6.0103 - val_mae: 6.0103

Epoch 30/100

2847/2847 [==============================] - 32s 11ms/step - loss: 8.0947 - mae: 8.0947 - val_loss: 5.9317 - val_mae: 5.9317

Epoch 31/100

2847/2847 [==============================] - 33s 12ms/step - loss: 8.0531 - mae: 8.0531 - val_loss: 5.8171 - val_mae: 5.8171

Epoch 32/100

2847/2847 [==============================] - 33s 12ms/step - loss: 7.9609 - mae: 7.9609 - val_loss: 5.9632 - val_mae: 5.9632

Epoch 33/100

2847/2847 [==============================] - 34s 12ms/step - loss: 7.9161 - mae: 7.9161 - val_loss: 5.9289 - val_mae: 5.9289

Epoch 34/100

2847/2847 [==============================] - 32s 11ms/step - loss: 7.8580 - mae: 7.8580 - val_loss: 5.7152 - val_mae: 5.7152

Epoch 35/100

2847/2847 [==============================] - 33s 12ms/step - loss: 7.8196 - mae: 7.8196 - val_loss: 5.6733 - val_mae: 5.6733

Epoch 36/100

2847/2847 [==============================] - 33s 11ms/step - loss: 7.7775 - mae: 7.7775 - val_loss: 5.9246 - val_mae: 5.9246

Epoch 37/100

2847/2847 [==============================] - 35s 12ms/step - loss: 7.6940 - mae: 7.6940 - val_loss: 5.4900 - val_mae: 5.4900

Epoch 38/100

2847/2847 [==============================] - 32s 11ms/step - loss: 7.6567 - mae: 7.6567 - val_loss: 5.6425 - val_mae: 5.6425

Epoch 39/100

2847/2847 [==============================] - 33s 12ms/step - loss: 7.6151 - mae: 7.6151 - val_loss: 5.5744 - val_mae: 5.5744

Epoch 40/100

2847/2847 [==============================] - 33s 12ms/step - loss: 7.5781 - mae: 7.5781 - val_loss: 5.4983 - val_mae: 5.4983

Epoch 41/100

2847/2847 [==============================] - 32s 11ms/step - loss: 7.5228 - mae: 7.5228 - val_loss: 5.4053 - val_mae: 5.4053

Epoch 42/100

2847/2847 [==============================] - 33s 12ms/step - loss: 7.4723 - mae: 7.4723 - val_loss: 5.3742 - val_mae: 5.3742

Epoch 43/100

2847/2847 [==============================] - 32s 11ms/step - loss: 7.3971 - mae: 7.3971 - val_loss: 5.8738 - val_mae: 5.8738

Epoch 44/100

2847/2847 [==============================] - 33s 12ms/step - loss: 7.4046 - mae: 7.4046 - val_loss: 5.3615 - val_mae: 5.3615

Epoch 45/100

2847/2847 [==============================] - 32s 11ms/step - loss: 7.3576 - mae: 7.3576 - val_loss: 5.2890 - val_mae: 5.2890

Epoch 46/100

2847/2847 [==============================] - 32s 11ms/step - loss: 7.3018 - mae: 7.3018 - val_loss: 5.4128 - val_mae: 5.4128

Epoch 47/100

2847/2847 [==============================] - 33s 12ms/step - loss: 7.2977 - mae: 7.2977 - val_loss: 5.2234 - val_mae: 5.2234

Epoch 48/100

2847/2847 [==============================] - 32s 11ms/step - loss: 7.2586 - mae: 7.2586 - val_loss: 5.2990 - val_mae: 5.2990

Epoch 49/100

2847/2847 [==============================] - 34s 12ms/step - loss: 7.1975 - mae: 7.1975 - val_loss: 5.1710 - val_mae: 5.1710

Epoch 50/100

2847/2847 [==============================] - 32s 11ms/step - loss: 7.1891 - mae: 7.1891 - val_loss: 5.0942 - val_mae: 5.0942

Epoch 51/100

2847/2847 [==============================] - 34s 12ms/step - loss: 7.1484 - mae: 7.1484 - val_loss: 4.9883 - val_mae: 4.9883

Epoch 52/100

2847/2847 [==============================] - 33s 12ms/step - loss: 7.1019 - mae: 7.1019 - val_loss: 5.4390 - val_mae: 5.4390

Epoch 53/100

2847/2847 [==============================] - 35s 12ms/step - loss: 7.0700 - mae: 7.0700 - val_loss: 5.0882 - val_mae: 5.0882

Epoch 54/100

2847/2847 [==============================] - 34s 12ms/step - loss: 7.0510 - mae: 7.0510 - val_loss: 5.0578 - val_mae: 5.0578

Epoch 55/100

2847/2847 [==============================] - 35s 12ms/step - loss: 7.0202 - mae: 7.0202 - val_loss: 5.0349 - val_mae: 5.0349

Epoch 56/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.9821 - mae: 6.9821 - val_loss: 5.2390 - val_mae: 5.2390

Epoch 57/100

2847/2847 [==============================] - 34s 12ms/step - loss: 6.9828 - mae: 6.9828 - val_loss: 5.0327 - val_mae: 5.0327

Epoch 58/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.9384 - mae: 6.9384 - val_loss: 5.0759 - val_mae: 5.0759

Epoch 59/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.8780 - mae: 6.8780 - val_loss: 4.9260 - val_mae: 4.9260

Epoch 60/100

2847/2847 [==============================] - 37s 13ms/step - loss: 6.8704 - mae: 6.8704 - val_loss: 4.8593 - val_mae: 4.8593

Epoch 61/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.8608 - mae: 6.8608 - val_loss: 4.8831 - val_mae: 4.8831

Epoch 62/100

2847/2847 [==============================] - 32s 11ms/step - loss: 6.8275 - mae: 6.8275 - val_loss: 5.1727 - val_mae: 5.1727

Epoch 63/100

2847/2847 [==============================] - 34s 12ms/step - loss: 6.7859 - mae: 6.7859 - val_loss: 4.8546 - val_mae: 4.8546

Epoch 64/100

2847/2847 [==============================] - 33s 11ms/step - loss: 6.7657 - mae: 6.7657 - val_loss: 4.8685 - val_mae: 4.8685

Epoch 65/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.7401 - mae: 6.7401 - val_loss: 4.8768 - val_mae: 4.8768

Epoch 66/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.7265 - mae: 6.7265 - val_loss: 4.6834 - val_mae: 4.6834

Epoch 67/100

2847/2847 [==============================] - 33s 11ms/step - loss: 6.6693 - mae: 6.6693 - val_loss: 4.8329 - val_mae: 4.8329

Epoch 68/100

2847/2847 [==============================] - 34s 12ms/step - loss: 6.6614 - mae: 6.6614 - val_loss: 4.7936 - val_mae: 4.7936

Epoch 69/100

2847/2847 [==============================] - 32s 11ms/step - loss: 6.6552 - mae: 6.6552 - val_loss: 4.8079 - val_mae: 4.8079

Epoch 70/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.6337 - mae: 6.6337 - val_loss: 4.7778 - val_mae: 4.7778

Epoch 71/100

2847/2847 [==============================] - 33s 11ms/step - loss: 6.6061 - mae: 6.6061 - val_loss: 4.7467 - val_mae: 4.7467

Epoch 72/100

2847/2847 [==============================] - 34s 12ms/step - loss: 6.5800 - mae: 6.5800 - val_loss: 4.8474 - val_mae: 4.8474

Epoch 73/100

2847/2847 [==============================] - 32s 11ms/step - loss: 6.5879 - mae: 6.5879 - val_loss: 4.6442 - val_mae: 4.6442

Epoch 74/100

2847/2847 [==============================] - 33s 11ms/step - loss: 6.5656 - mae: 6.5656 - val_loss: 4.7916 - val_mae: 4.7916

Epoch 75/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.5286 - mae: 6.5286 - val_loss: 4.5983 - val_mae: 4.5983

Epoch 76/100

2847/2847 [==============================] - 32s 11ms/step - loss: 6.4862 - mae: 6.4862 - val_loss: 4.6008 - val_mae: 4.6008

Epoch 77/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.4822 - mae: 6.4822 - val_loss: 4.5863 - val_mae: 4.5863

Epoch 78/100

2847/2847 [==============================] - 32s 11ms/step - loss: 6.4512 - mae: 6.4512 - val_loss: 4.6937 - val_mae: 4.6937

Epoch 79/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.4517 - mae: 6.4517 - val_loss: 4.7971 - val_mae: 4.7971

Epoch 80/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.4411 - mae: 6.4411 - val_loss: 4.6173 - val_mae: 4.6173

Epoch 81/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.4007 - mae: 6.4007 - val_loss: 4.6712 - val_mae: 4.6712

Epoch 82/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.3860 - mae: 6.3860 - val_loss: 4.5905 - val_mae: 4.5905

Epoch 83/100

2847/2847 [==============================] - 32s 11ms/step - loss: 6.3750 - mae: 6.3750 - val_loss: 4.5082 - val_mae: 4.5082

Epoch 84/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.3653 - mae: 6.3653 - val_loss: 4.6231 - val_mae: 4.6231

Epoch 85/100

2847/2847 [==============================] - 32s 11ms/step - loss: 6.3490 - mae: 6.3490 - val_loss: 4.5700 - val_mae: 4.5700

Epoch 86/100

2847/2847 [==============================] - 35s 12ms/step - loss: 6.3441 - mae: 6.3441 - val_loss: 4.5706 - val_mae: 4.5706

Epoch 87/100

2847/2847 [==============================] - 32s 11ms/step - loss: 6.3160 - mae: 6.3160 - val_loss: 4.4801 - val_mae: 4.4801

Epoch 88/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.2917 - mae: 6.2917 - val_loss: 4.4492 - val_mae: 4.4492

Epoch 89/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.2673 - mae: 6.2673 - val_loss: 4.4364 - val_mae: 4.4364

Epoch 90/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.2582 - mae: 6.2582 - val_loss: 4.5396 - val_mae: 4.5396

Epoch 91/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.2437 - mae: 6.2437 - val_loss: 4.4340 - val_mae: 4.4340

Epoch 92/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.2344 - mae: 6.2344 - val_loss: 4.5570 - val_mae: 4.5570

Epoch 93/100

2847/2847 [==============================] - 34s 12ms/step - loss: 6.2096 - mae: 6.2096 - val_loss: 4.5309 - val_mae: 4.5309

Epoch 94/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.2364 - mae: 6.2364 - val_loss: 4.4046 - val_mae: 4.4046

Epoch 95/100

2847/2847 [==============================] - 34s 12ms/step - loss: 6.1718 - mae: 6.1718 - val_loss: 4.4525 - val_mae: 4.4525

Epoch 96/100

2847/2847 [==============================] - 33s 11ms/step - loss: 6.1778 - mae: 6.1778 - val_loss: 4.4234 - val_mae: 4.4234

Epoch 97/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.1731 - mae: 6.1731 - val_loss: 4.4228 - val_mae: 4.4228

Epoch 98/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.1397 - mae: 6.1397 - val_loss: 4.3904 - val_mae: 4.3904

Epoch 99/100

2847/2847 [==============================] - 33s 12ms/step - loss: 6.1304 - mae: 6.1304 - val_loss: 4.4282 - val_mae: 4.4282

Epoch 100/100

2847/2847 [==============================] - 34s 12ms/step - loss: 6.1343 - mae: 6.1343 - val_loss: 4.4304 - val_mae: 4.4304

# np.save("file/path.npy", history.history)
# history = np.load("history.npy", allow_pickle=True)
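The 2847 steps per epoch shown in the training log are consistent with dividing the 729,000 training rows by the batch size of 256 and dropping the fractional part. The sketch below works through that arithmetic in plain Python; the variable names are illustrative.

```python
import math

n_train, n_test, batch_size = 729_000, 81_000, 256

steps_floor = n_train // batch_size            # full batches only
steps_ceil = math.ceil(n_train / batch_size)   # would include a final partial batch
leftover = n_train % batch_size                # rows beyond the last full batch

val_batches = math.ceil(n_test / batch_size)   # batches in one pass over the test set

print(steps_floor, steps_ceil, leftover, val_batches)  # 2847 2848 168 317
```

Because the training dataset repeats indefinitely, the 168 leftover rows are not lost; they are consumed at the start of the next epoch. The 317 validation batches match the step count reported by the evaluation run.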

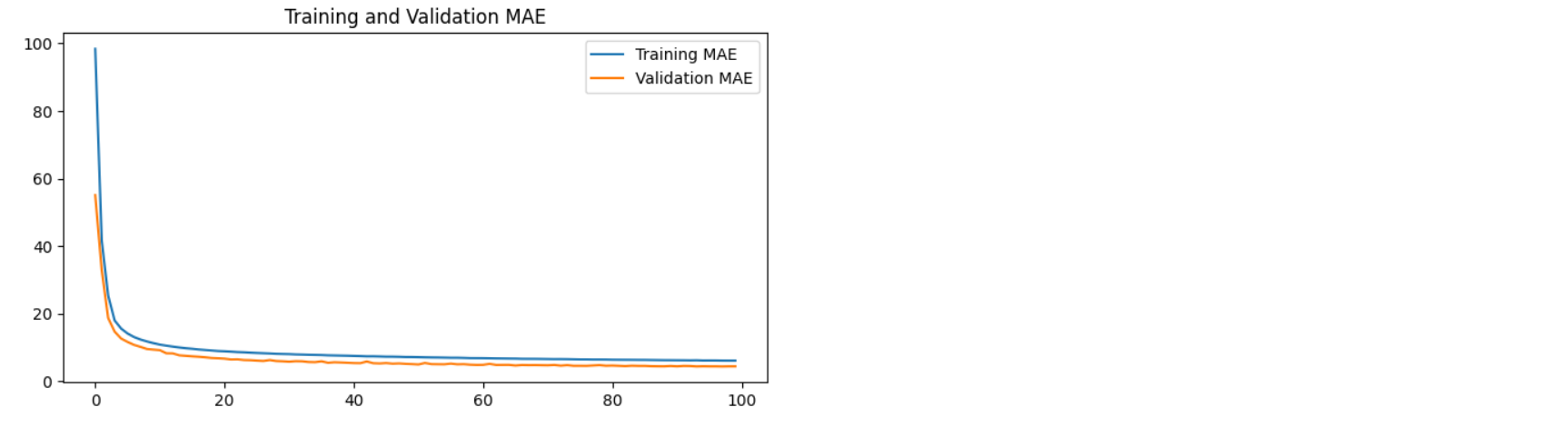

loss = history.history['mae']  # mse
val_loss = history.history['val_mae']  # val_mse

epochs_range = range(len(loss))

plt.figure(figsize=(8, 4))
plt.plot(epochs_range, loss, label='Training MAE')  # MSE
plt.plot(epochs_range, val_loss, label='Validation MAE')  # MSE
plt.legend(loc='upper right')
plt.title('Training and Validation MAE')  # MSE
plt.show()

Model Evaluation

- Use mean absolute error (MAE) to check how accurately the model predicts.
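For reference, mean absolute error averages the absolute differences between predicted and true thicknesses. The sketch below computes it in plain Python for two made-up samples. The numbers are illustrative only, and `mean_absolute_error` is a local helper, not the Keras implementation.

```python
def mean_absolute_error(y_true, y_pred):
    """Average |true - predicted| over every layer of every sample."""
    errors = [
        abs(t - p)
        for true_row, pred_row in zip(y_true, y_pred)
        for t, p in zip(true_row, pred_row)
    ]
    return sum(errors) / len(errors)


# Hypothetical thicknesses for layer_1..layer_4 of two samples.
y_true = [[10.0, 20.0, 30.0, 40.0], [50.0, 60.0, 70.0, 80.0]]
y_pred = [[12.0, 17.0, 35.0, 40.0], [46.0, 60.0, 71.0, 85.0]]

mae = mean_absolute_error(y_true, y_pred)
print(mae)  # 2.5
```

Read this way, a validation MAE of about 4.4, as in the training log, means the predictions are off by roughly 4.4 thickness units per layer on average.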

# model.load_weights(checkpoint_dir)
results = model.evaluate(test_dataset)
print("MAE :", results[0])  # MAE

#317/317 [==============================] - 1s 4ms/step - loss: 4.4304 - mae: 4.4304
#MAE : 4.430441379547119