Transfer Learning (VGG16)

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import os

import sys

import time

import shutil

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

from PIL import Image

import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras.preprocessing.image import ImageDataGenerator

Usage VGG16

def VGG16(include_top=True,

weights='imagenet',

input_tensor=None,

input_shape=None,

pooling=None,

classes=1000,

**kwargs):

"""Instantiates the VGG16 architecture.

Optionally loads weights pre-trained on ImageNet.

Note that the data format convention used by the model is

the one specified in your Keras config at `~/.keras/keras.json`.

# Arguments

include_top: whether to include the 3 fully-connected

layers at the top of the network.

weights: one of `None` (random initialization),

'imagenet' (pre-training on ImageNet),

or the path to the weights file to be loaded.

input_tensor: optional Keras tensor

(i.e. output of `layers.Input()`)

to use as image input for the model.

input_shape: optional shape tuple, only to be specified

if `include_top` is False (otherwise the input shape

has to be `(224, 224, 3)`

(with `channels_last` data format)

or `(3, 224, 224)` (with `channels_first` data format).

It should have exactly 3 input channels,

and width and height should be no smaller than 32.

E.g. `(200, 200, 3)` would be one valid value.

pooling: Optional pooling mode for feature extraction

when `include_top` is `False`.

- `None` means that the output of the model will be

the 4D tensor output of the

last convolutional block.

- `avg` means that global average pooling

will be applied to the output of the

last convolutional block, and thus

the output of the model will be a 2D tensor.

- `max` means that global max pooling will

be applied.

classes: optional number of classes to classify images

into, only to be specified if `include_top` is True, and

if no `weights` argument is specified.

# Returns

A Keras model instance.

# Raises

ValueError: in case of invalid argument for `weights`,

or invalid input shape.

"""

Load VGG16 conv base

conv_base = tf.keras.applications.VGG16(weights='imagenet',

include_top=False,

input_shape=(150, 150, 3))

conv_base.summary()

Model: "vgg16"

_________________________________________________________________

Layer (type) Output Shape Param

=================================================================

input_1 (InputLayer) [(None, 150, 150, 3)] 0

block1_conv1 (Conv2D) (None, 150, 150, 64) 1792

block1_conv2 (Conv2D) (None, 150, 150, 64) 36928

block1_pool (MaxPooling2D) (None, 75, 75, 64) 0

block2_conv1 (Conv2D) (None, 75, 75, 128) 73856

block2_conv2 (Conv2D) (None, 75, 75, 128) 147584

block2_pool (MaxPooling2D) (None, 37, 37, 128) 0

block3_conv1 (Conv2D) (None, 37, 37, 256) 295168

block3_conv2 (Conv2D) (None, 37, 37, 256) 590080

block3_conv3 (Conv2D) (None, 37, 37, 256) 590080

block3_pool (MaxPooling2D) (None, 18, 18, 256) 0

block4_conv1 (Conv2D) (None, 18, 18, 512) 1180160

block4_conv2 (Conv2D) (None, 18, 18, 512) 2359808

block4_conv3 (Conv2D) (None, 18, 18, 512) 2359808

block4_pool (MaxPooling2D) (None, 9, 9, 512) 0

block5_conv1 (Conv2D) (None, 9, 9, 512) 2359808

block5_conv2 (Conv2D) (None, 9, 9, 512) 2359808

block5_conv3 (Conv2D) (None, 9, 9, 512) 2359808

block5_pool (MaxPooling2D) (None, 4, 4, 512) 0

=================================================================

Total params: 14,714,688

Trainable params: 14,714,688

Non-trainable params: 0

_________________________________________________________________

Build a our model based on VGG16 conv base

model = tf.keras.Sequential()

model.add(conv_base)

model.add(layers.Flatten())

model.add(layers.Dense(256, activation='relu'))

model.add(layers.Dense(5, activation='softmax'))

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param

=================================================================

vgg16 (Functional) (None, 4, 4, 512) 14714688

flatten (Flatten) (None, 8192) 0

dense (Dense) (None, 256) 2097408

dense_1 (Dense) (None, 5) 1285

=================================================================

Total params: 16,813,381

Trainable params: 16,813,381

Non-trainable params: 0

_________________________________________________________________

Freeze conv base

for var in model.variables:

if var.name == 'block1_conv1/kernel:0':

conv1_1_w = var

if var.name == 'dense/kernel:0':

dense_w = var

print(conv1_1_w)

<tf.Variable 'block1_conv1/kernel:0' shape=(3, 3, 3, 64) dtype=float32, numpy=

array([[[[ 4.29470569e-01, 1.17273867e-01, 3.40129584e-02, ...,

-1.32241577e-01, -5.33475243e-02, 7.57738389e-03],

[ 5.50379455e-01, 2.08774377e-02, 9.88311544e-02, ...,

-8.48205537e-02, -5.11389151e-02, 3.74943428e-02],

[ 4.80015397e-01, -1.72696680e-01, 3.75577137e-02, ...,

-1.27135560e-01, -5.02991639e-02, 3.48965675e-02]],

[[ 3.73466998e-01, 1.62062630e-01, 1.70863140e-03, ...,

-1.48207128e-01, -2.35300660e-01, -6.30356818e-02],

[ 4.40074533e-01, 4.73412387e-02, 5.13819456e-02, ...,

-9.88498852e-02, -2.96195745e-01, -7.04357103e-02],

[ 4.08547401e-01, -1.70375049e-01, -4.96297423e-03, ...,

-1.22360572e-01, -2.76450396e-01, -3.90796512e-02]],

[[-6.13601133e-02, 1.35693997e-01, -1.15694344e-01, ...,

-1.40158370e-01, -3.77666801e-01, -3.00509870e-01],

[-8.13870355e-02, 4.18543853e-02, -1.01763301e-01, ...,

-9.43124294e-02, -5.05662560e-01, -3.83694321e-01],

[-6.51455522e-02, -1.54351532e-01, -1.38038069e-01, ...,

-1.29404560e-01, -4.62243795e-01, -3.23985279e-01]]],

[[[ 2.74769872e-01, 1.48350164e-01, 1.61559835e-01, ...,

-1.14316158e-01, 3.65494519e-01, 3.39938998e-01],

[ 3.45739067e-01, 3.10493708e-02, 2.40750551e-01, ...,

-6.93419054e-02, 4.37116861e-01, 4.13171440e-01],

[ 3.10477257e-01, -1.87601492e-01, 1.66595340e-01, ...,

-9.88388434e-02, 4.04058546e-01, 3.92561197e-01]],

[[ 3.86807770e-02, 2.02298447e-01, 1.56414255e-01, ...,

-5.20089604e-02, 2.57149011e-01, 3.71682674e-01],

[ 4.06322069e-02, 6.58102185e-02, 2.20311403e-01, ...,

-3.78979952e-03, 2.69412428e-01, 4.09505904e-01],

[ 5.02023660e-02, -1.77571565e-01, 1.51188180e-01, ...,

-1.40649760e-02, 2.59300828e-01, 4.23764467e-01]],

[[-3.67223352e-01, 1.61688417e-01, -8.99365395e-02, ...,

-1.45945460e-01, -2.71823555e-01, -2.39718184e-01],

[-4.53501314e-01, 4.62574959e-02, -6.67438358e-02, ...,

-1.03502415e-01, -3.45792353e-01, -2.92486250e-01],

[-4.03383434e-01, -1.74399972e-01, -1.09849639e-01, ...,

-1.25688612e-01, -3.14026326e-01, -2.32839763e-01]]],

[[[-5.74681684e-02, 1.29344285e-01, 1.29030216e-02, ...,

-1.41449392e-01, 2.41099641e-01, 4.55602147e-02],

[-5.86349145e-02, 3.16787697e-02, 7.59588331e-02, ...,

-1.05017252e-01, 3.39550197e-01, 9.86374393e-02],

[-5.08716851e-02, -1.66002661e-01, 1.56279504e-02, ...,

-1.49742723e-01, 3.06801915e-01, 8.82701725e-02]],

[[-2.62249678e-01, 1.71572417e-01, 5.44555223e-05, ...,

-1.22728683e-01, 2.44687453e-01, 5.32913655e-02],

[-3.30669671e-01, 5.47101051e-02, 4.86797579e-02, ...,

-8.29023942e-02, 2.95466095e-01, 7.44469985e-02],

[-2.85227507e-01, -1.66666731e-01, -7.96697661e-03, ...,

-1.09780088e-01, 2.79203743e-01, 9.46525261e-02]],

[[-3.50096762e-01, 1.38710454e-01, -1.25339806e-01, ...,

-1.53092295e-01, -1.39917329e-01, -2.65075237e-01],

[-4.85030204e-01, 4.23195846e-02, -1.12076312e-01, ...,

-1.18306056e-01, -1.67058021e-01, -3.22241962e-01],

[-4.18516338e-01, -1.57048807e-01, -1.49133086e-01, ...,

-1.56839803e-01, -1.42874300e-01, -2.69694626e-01]]]],

dtype=float32)>

print(dense_w)

<tf.Variable 'dense/kernel:0' shape=(8192, 256) dtype=float32, numpy=

array([[-6.77704811e-05, -2.31294706e-02, 7.60229304e-03, ...,

8.39843228e-03, 2.20995881e-02, -1.67809427e-02],

[-2.24653445e-03, 1.38033219e-02, 1.90266967e-02, ...,

4.77955118e-03, -8.19554180e-03, 1.75078325e-02],

[ 1.14755780e-02, -1.06887575e-02, 1.29164383e-02, ...,

-2.29659472e-02, 2.21658535e-02, -1.31650921e-02],

...,

[-2.37071719e-02, -5.27622737e-03, -1.17897894e-02, ...,

2.27380209e-02, 9.72433016e-03, 1.97466724e-02],

[ 6.02534786e-03, 8.62229988e-03, -1.14720445e-02, ...,

-2.64674164e-02, 1.04047954e-02, 7.53303617e-03],

[-6.95938803e-03, -1.71137918e-02, -3.41273844e-05, ...,

2.29775570e-02, -2.06831582e-02, 1.16364844e-02]], dtype=float32)>

for layer in model.layers:

if layer.name == 'vgg16':

layer.trainable = False

print("variable name: {}, trainable: {}".format(layer.name, layer.trainable))

Load a Google Flower Dataset

DATASET_PATH='../../datasets/flower'

if not os.path.isdir(DATASET_PATH):

os.makedirs(DATASET_PATH)

import urllib.request

u = urllib.request.urlopen(url='https://www.dropbox.com/s/1tqczockfgdnz8z/flower.zip?dl=1')

data = u.read()

u.close()

with open('flower.zip', "wb") as f :

f.write(data)

print('Data has been downloaded')

shutil.move(os.path.join('flower.zip'), os.path.join(DATASET_PATH))

file_path = os.path.join(DATASET_PATH, 'flower.zip')

import zipfile

zip_ref = zipfile.ZipFile(file_path, 'r')

zip_ref.extractall(DATASET_PATH)

zip_ref.close()

print('Data has been extracted.')

else:

print('Data has already been downloaded and extracted.')

base_dir = os.path.join(DATASET_PATH, 'flower')

train_dir = os.path.join(base_dir, 'train')

validation_dir = os.path.join(base_dir, 'validation')

test_dir = os.path.join(base_dir, 'test')

print(train_dir)

print(validation_dir)

print(test_dir)

class_name = sorted(os.listdir(train_dir))

for name in class_name:

print(name)

num_train = 0

num_val = 0

num_test = 0

for name in class_name:

train_path = os.path.join(train_dir, name)

val_path = os.path.join(validation_dir, name)

test_path = os.path.join(test_dir, name)

print("Number of {} class: for train: {} / for validation: {} / for test: {}".format(name,

len(os.listdir(train_path)),

len(os.listdir(val_path)),

len(os.listdir(train_path))))

num_train += len(os.listdir(train_path))

num_val += len(os.listdir(val_path))

num_test += len(os.listdir(test_path))

print('--------')

print("Total training images:", num_train)

print("Total validation images:", num_val)

print("Total test images:", num_test)

Set hyperparameters

batch_size = 16

Setting image generator

train_datagen = ImageDataGenerator(rescale=1./255,

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

fill_mode='nearest')

val_datagen = ImageDataGenerator(rescale=1./255)

test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(train_dir,

target_size=(150, 150),

batch_size=batch_size,

class_mode='categorical')

Found 3009 images belonging to 5 classes.

val_generator = val_datagen.flow_from_directory(validation_dir,

target_size=(150, 150),

batch_size=batch_size,

class_mode='categorical')

test_generator = test_datagen.flow_from_directory(test_dir,

target_size=(150, 150),

batch_size=batch_size,

class_mode='categorical')

Compile a model

model.compile(optimizer='adam',

loss='categorical_crossentropy',

metrics=['accuracy'])

history = model.fit(

train_generator,

steps_per_epoch=int(np.ceil(num_train / float(batch_size))),

epochs=10,

validation_data=val_generator,

validation_steps=int(np.ceil(num_val / float(batch_size)))

)

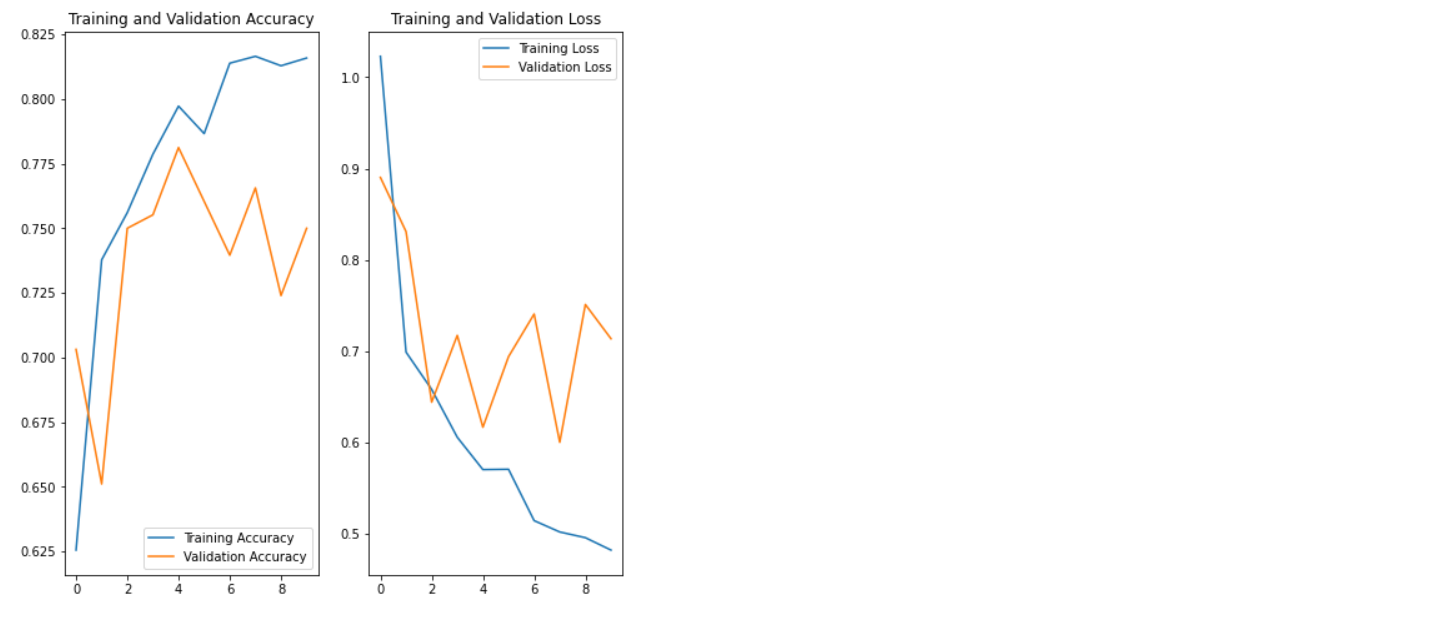

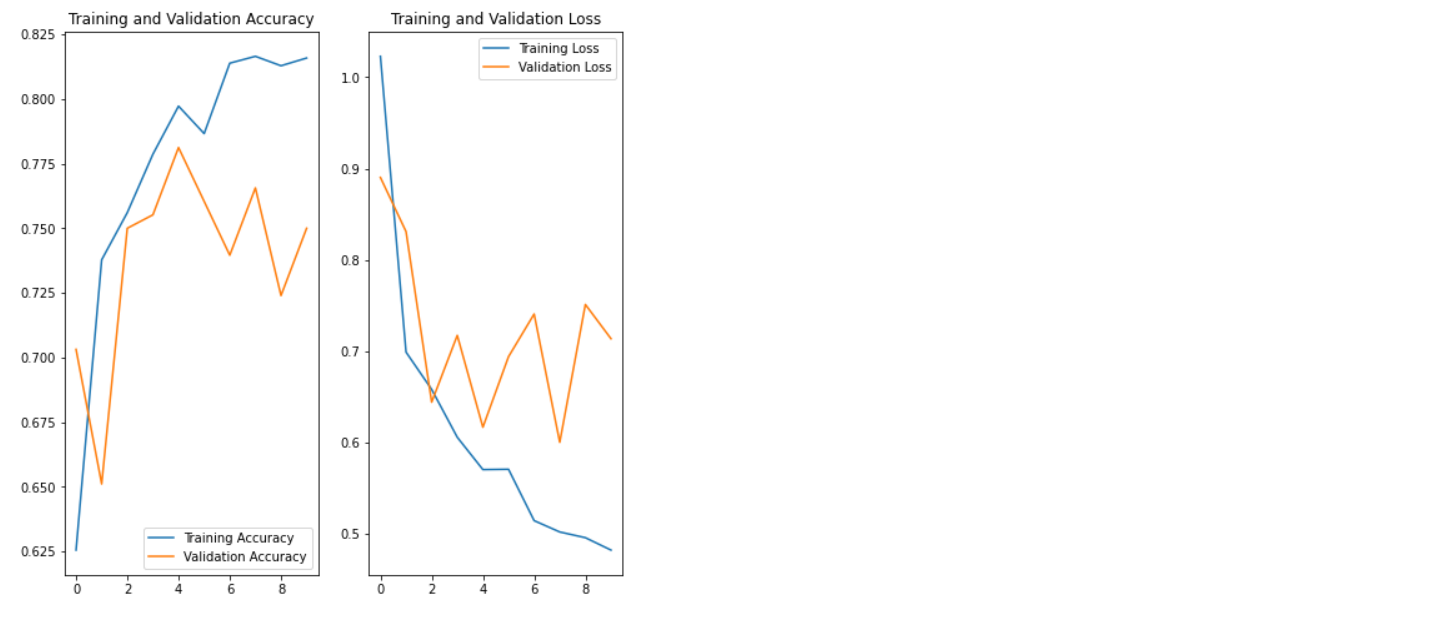

Epoch 1/10

189/189 [==============================] - 43s 168ms/step - loss: 1.0230 - accuracy: 0.6255 - val_loss: 0.8902 - val_accuracy: 0.7031

Epoch 2/10

189/189 [==============================] - 32s 167ms/step - loss: 0.6989 - accuracy: 0.7378 - val_loss: 0.8309 - val_accuracy: 0.6510

Epoch 3/10

189/189 [==============================] - 31s 165ms/step - loss: 0.6581 - accuracy: 0.7561 - val_loss: 0.6440 - val_accuracy: 0.7500

Epoch 4/10

189/189 [==============================] - 32s 166ms/step - loss: 0.6056 - accuracy: 0.7787 - val_loss: 0.7172 - val_accuracy: 0.7552

Epoch 5/10

189/189 [==============================] - 32s 168ms/step - loss: 0.5702 - accuracy: 0.7973 - val_loss: 0.6166 - val_accuracy: 0.7812

Epoch 6/10

189/189 [==============================] - 31s 165ms/step - loss: 0.5706 - accuracy: 0.7866 - val_loss: 0.6939 - val_accuracy: 0.7604

Epoch 7/10

189/189 [==============================] - 31s 164ms/step - loss: 0.5143 - accuracy: 0.8139 - val_loss: 0.7406 - val_accuracy: 0.7396

Epoch 8/10

189/189 [==============================] - 32s 166ms/step - loss: 0.5019 - accuracy: 0.8166 - val_loss: 0.6001 - val_accuracy: 0.7656

Epoch 9/10

189/189 [==============================] - 32s 167ms/step - loss: 0.4956 - accuracy: 0.8129 - val_loss: 0.7511 - val_accuracy: 0.7240

Epoch 10/10

189/189 [==============================] - 31s 166ms/step - loss: 0.4820 - accuracy: 0.8159 - val_loss: 0.7136 - val_accuracy: 0.7500

for var in model.variables:

if var.name == 'block1_conv1/kernel:0':

conv1_1_w_1 = var

if var.name == 'dense/kernel:0':

dense_w_1 = var

Visualizing results of the training

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs_range = range(10)

plt.figure(figsize=(8, 8))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

Evaluation for Test dataset

history = model.evaluate(test_generator)

print("loss value: {:.3f}".format(history[0]))

print("accuracy value: {:.3f}".format(history[1]))