import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras import callbacks

from sklearn.model_selection import train_test_split

import numpy as np

import matplotlib.pyplot as plt

tf.__version__

Loading the data

- Load the dataset provided by TF and apply some simple preprocessing

# Fashion-MNIST ships with Keras; load_data() returns (train, test) tuples.
(train_data, train_labels), (test_data, test_labels) = tf.keras.datasets.fashion_mnist.load_data()

print(train_data.shape, train_labels.shape)

# Hold out 10% of the training images as a validation set.
train_data, valid_data, train_labels, valid_labels = train_test_split(
    train_data, train_labels, test_size=0.1, shuffle=True)

print(train_data.shape, train_labels.shape)

print(valid_data.shape, valid_labels.shape)

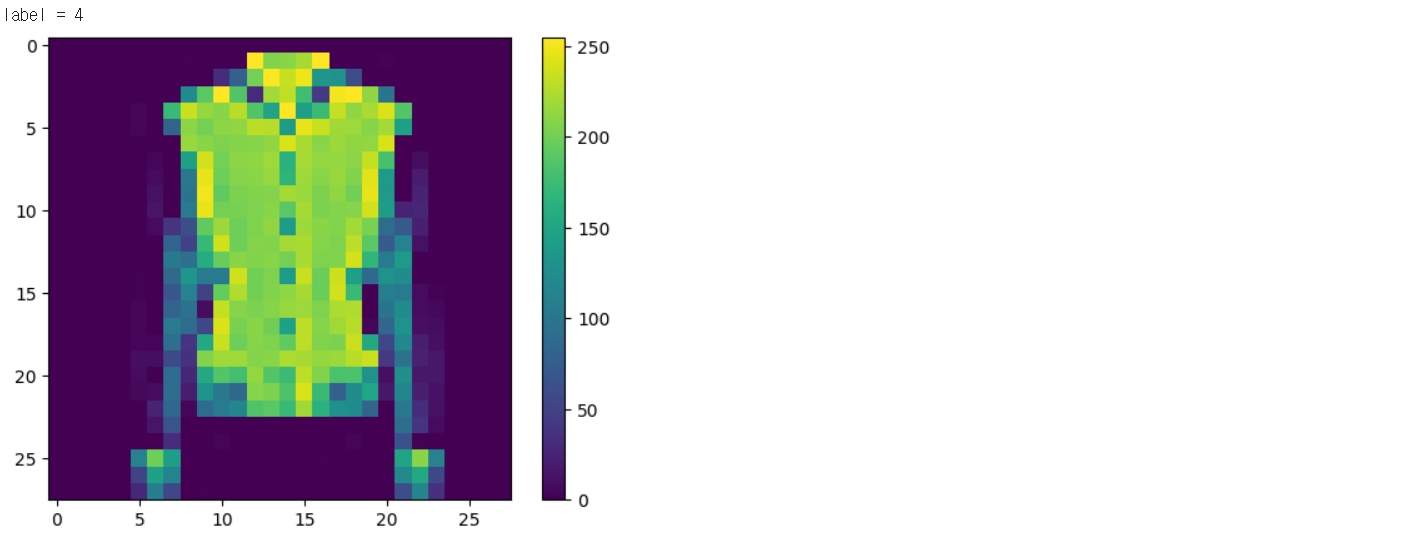

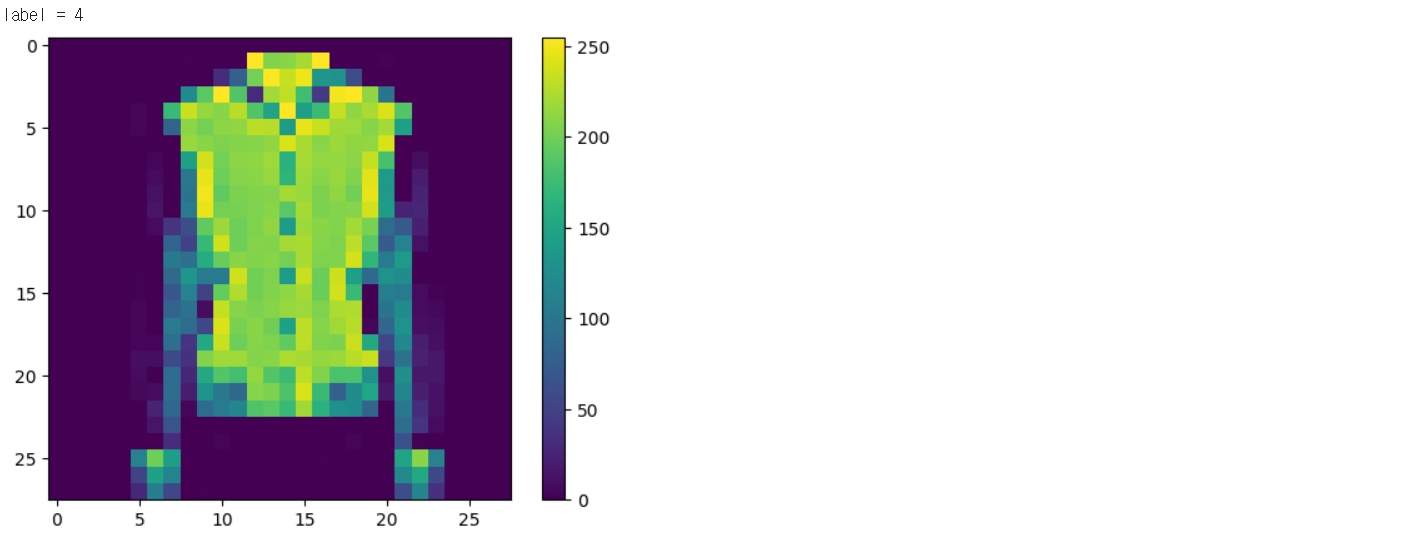

index = 5901

print("label = {}".format(valid_labels[index]))

plt.imshow(valid_data[index].reshape(28, 28))

plt.colorbar()

plt.show()

train_data = train_data / 255.

train_data = train_data.reshape(-1, 28 * 28)

train_data = train_data.astype(np.float32)

train_labels = train_labels.astype(np.int32)

test_data = test_data / 255.

test_data = test_data.reshape(-1, 28 * 28)

test_data = test_data.astype(np.float32)

test_labels = test_labels.astype(np.int32)

valid_data = valid_data / 255.

valid_data = valid_data.reshape(-1, 28 * 28)

valid_data = valid_data.astype(np.float32)

valid_labels = valid_labels.astype(np.int32)

print(train_data.shape, train_labels.shape)

print(test_data.shape, test_labels.shape)

print(valid_data.shape, valid_labels.shape)
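The three splits above go through the same steps: scale to [0, 1], flatten each 28x28 image to 784 features, and cast the dtypes. A minimal sketch of factoring that into one helper follows. The function name `preprocess` is hypothetical and not part of the notebook, and a small random array stands in for the real images.

```python
import numpy as np


def preprocess(images, labels):
    """Scale uint8 images to [0, 1], flatten to 784 features, and cast dtypes.

    `preprocess` is a hypothetical helper name, not part of the notebook.
    """
    images = (images / 255.0).reshape(-1, 28 * 28).astype(np.float32)
    labels = labels.astype(np.int32)
    return images, labels


# Stand-in data with the same layout as Fashion-MNIST (not the real dataset).
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)
fake_labels = rng.integers(0, 10, size=(4,), dtype=np.uint8)

x, y = preprocess(fake_images, fake_labels)
print(x.shape, x.dtype, y.dtype)
```

With such a helper, each split would need a single call instead of four assignments, which also makes it harder to forget a step for one of the splits.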

Building the tf.data.Dataset used for training

- Process the labels so they suit training

- Build the datasets

def one_hot_label(image, label):
    # Convert the integer class id into a length-10 one-hot vector.
    label = tf.one_hot(label, depth=10)
    return image, label

batch_size = 32

train_dataset = tf.data.Dataset.from_tensor_slices((train_data, train_labels))

train_dataset = train_dataset.shuffle(buffer_size=10000)

train_dataset = train_dataset.map(one_hot_label)

train_dataset = train_dataset.repeat().batch(batch_size=batch_size)

print(train_dataset)

test_dataset = tf.data.Dataset.from_tensor_slices((test_data, test_labels))

test_dataset = test_dataset.map(one_hot_label)

test_dataset = test_dataset.batch(batch_size=batch_size)

print(test_dataset)

valid_dataset = tf.data.Dataset.from_tensor_slices((valid_data, valid_labels))

valid_dataset = valid_dataset.map(one_hot_label)

valid_dataset = valid_dataset.batch(batch_size=batch_size)

print(valid_dataset)
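The `one_hot_label` mapping above turns each integer class id into a length-10 vector, which is the label format that a categorical cross-entropy loss expects. As a rough illustration of what `tf.one_hot(label, depth=10)` produces for a single label, here is a plain-Python sketch. The helper `one_hot` is a hypothetical stand-in, not the TensorFlow implementation.

```python
def one_hot(label, depth=10):
    """Return a list of `depth` floats with 1.0 at index `label`, 0.0 elsewhere."""
    return [1.0 if i == label else 0.0 for i in range(depth)]


# Class id 3 becomes a vector whose fourth entry is 1.0.
encoded = one_hot(3)
print(encoded)
```

If the labels were left as integers instead, `tf.keras.losses.SparseCategoricalCrossentropy` would be the matching loss and the `map` step could be dropped.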

Building the model

model = tf.keras.Sequential([
    # Block 1: Dense -> BatchNorm -> ReLU -> Dropout
    layers.Dense(64, kernel_initializer=tf.keras.initializers.HeUniform(),
                 kernel_regularizer=tf.keras.regularizers.L2(0.0001)),
    layers.BatchNormalization(),
    layers.Activation("relu"),
    layers.Dropout(0.5),
    # Block 2
    layers.Dense(32, kernel_initializer=tf.keras.initializers.HeUniform(),
                 kernel_regularizer=tf.keras.regularizers.L2(0.0001)),
    layers.BatchNormalization(),
    layers.Activation("relu"),
    layers.Dropout(0.5),
    # Block 3 (no dropout before the output layer)
    layers.Dense(16, kernel_initializer=tf.keras.initializers.HeUniform(),
                 kernel_regularizer=tf.keras.regularizers.L2(0.0001)),
    layers.BatchNormalization(),
    layers.Activation("relu"),
    # Output layer: 10 raw logits, one per class (no softmax here)
    layers.Dense(10),
])

# from_logits=True because the last Dense layer has no softmax activation.
model.compile(optimizer=tf.keras.optimizers.Adam(1e-4),
              loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])

predictions = model(train_data[0:1], training=False)

print("Predictions: ", predictions.numpy())
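The values printed above are raw logits, since the final `Dense(10)` layer has no activation and the loss is configured with `from_logits=True`. As a hedged illustration of what that setting implies, the sketch below computes a softmax and a categorical cross-entropy directly from logits in plain Python. The function names and the three-class example values are made up for illustration, and this is not how Keras implements the loss internally.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    shift = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(logits, one_hot_target):
    """Categorical cross-entropy computed from raw logits via log-sum-exp."""
    shift = max(logits)
    log_sum_exp = shift + math.log(sum(math.exp(v - shift) for v in logits))
    log_probs = [v - log_sum_exp for v in logits]
    return -sum(t * lp for t, lp in zip(one_hot_target, log_probs))


example_logits = [2.0, 0.5, -1.0]  # made-up logits for three classes
example_target = [1.0, 0.0, 0.0]   # one-hot label: class 0

probs = softmax(example_logits)
loss = cross_entropy_from_logits(example_logits, example_target)
print(probs, loss)
```

To turn the model's logits into class probabilities for inspection, `tf.nn.softmax(predictions)` could be applied to the output.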

model.summary()

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| dense (Dense) | (1, 64) | 50240 |
| batch_normalization (BatchNormalization) | (1, 64) | 256 |
| activation (Activation) | (1, 64) | 0 |
| dropout (Dropout) | (1, 64) | 0 |
| dense_1 (Dense) | (1, 32) | 2080 |
| batch_normalization_1 (BatchNormalization) | (1, 32) | 128 |
| activation_1 (Activation) | (1, 32) | 0 |
| dropout_1 (Dropout) | (1, 32) | 0 |
| dense_2 (Dense) | (1, 16) | 528 |
| batch_normalization_2 (BatchNormalization) | (1, 16) | 64 |
| activation_2 (Activation) | (1, 16) | 0 |
| dense_3 (Dense) | (1, 10) | 170 |

Total params: 53466 (208.85 KB)

Trainable params: 53242 (207.98 KB)

Non-trainable params: 224 (896.00 Byte)
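The parameter counts in the summary above can be reproduced by hand. A Dense layer has one weight per input-output pair plus one bias per output. A BatchNormalization layer has four values per feature: a trainable scale and offset, plus a non-trainable moving mean and moving variance. The helper names in this sketch are hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out


def batch_norm_params(n_features):
    """Return (trainable, non_trainable) counts for a batch-norm layer."""
    trainable = 2 * n_features      # gamma (scale) and beta (offset)
    non_trainable = 2 * n_features  # moving mean and moving variance
    return trainable, non_trainable


layer_sizes = [784, 64, 32, 16, 10]  # input features, then each Dense width
trainable = 0
non_trainable = 0

pairs = list(zip(layer_sizes[:-1], layer_sizes[1:]))
for i, (n_in, n_out) in enumerate(pairs):
    trainable += dense_params(n_in, n_out)
    is_output_layer = i == len(pairs) - 1
    if not is_output_layer:  # the output Dense has no batch norm after it
        bn_trainable, bn_fixed = batch_norm_params(n_out)
        trainable += bn_trainable
        non_trainable += bn_fixed

total = trainable + non_trainable
print(total, trainable, non_trainable)
```

The 224 non-trainable parameters are therefore the moving statistics of the three batch-norm layers, (64 + 32 + 16) * 2.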

Configuring model training

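# Save the model whenever the monitored metric (val_loss by default) improves.
check_point_cb = callbacks.ModelCheckpoint('fashion_mnist_model.h5',
                                           save_best_only=True,
                                           verbose=1)

# Stop after 10 epochs without val_loss improvement and restore the best weights.
early_stopping_cb = callbacks.EarlyStopping(patience=10,
                                            monitor='val_loss',
                                            restore_best_weights=True,
                                            verbose=1)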
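To make the `patience` setting concrete, here is a simplified plain-Python model of the stopping rule: training halts once the monitored loss has failed to improve for `patience` consecutive epochs, and the best epoch is the one whose weights would be restored. This sketch ignores other `EarlyStopping` options such as `min_delta`, and the function name and loss values are made up for illustration.

```python
def simulate_early_stopping(val_losses, patience):
    """Return (stop_epoch, best_epoch), 1-indexed; stop_epoch is None if never triggered."""
    best_loss = float("inf")
    best_epoch = None
    wait = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch


# Made-up validation losses: the best value is at epoch 3, then no improvement.
made_up_losses = [0.9, 0.7, 0.6, 0.61, 0.62, 0.605, 0.63]
stopped_at, best_at = simulate_early_stopping(made_up_losses, patience=3)
print(stopped_at, best_at)
```

With `patience=10` as configured above, a run only stops after ten epochs in a row without a new best `val_loss`.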

max_epochs = 100

# train_dataset repeats indefinitely, so steps_per_epoch defines one epoch.
history = model.fit(train_dataset,
                    epochs=max_epochs,
                    steps_per_epoch=len(train_data) // batch_size,
                    validation_data=valid_dataset,
                    validation_steps=len(valid_data) // batch_size,
                    callbacks=[check_point_cb, early_stopping_cb])
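Because `train_dataset` calls `repeat()`, it never runs out of data, so `steps_per_epoch` is what tells `fit` where one epoch ends. The arithmetic behind the step counts can be checked with the split sizes used here: Fashion-MNIST has 60,000 training images, of which 10% were held out for validation.

```python
num_train = 54_000  # 60,000 training images minus the 10% validation split
num_valid = 6_000   # the held-out 10%
batch_size = 32

steps_per_epoch = num_train // batch_size
validation_steps = num_valid // batch_size

# Integer division drops the final partial batch in each case.
train_leftover = num_train - steps_per_epoch * batch_size
valid_leftover = num_valid - validation_steps * batch_size

print(steps_per_epoch, validation_steps, train_leftover, valid_leftover)
```

The 1687 steps computed here match the `1687/1687` counter in the training log.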

Epoch 1/100

1680/1687 [============================>.] - ETA: 0s - loss: 1.8866 - accuracy: 0.3592

Epoch 1: val_loss improved from inf to 1.35230, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 27s 11ms/step - loss: 1.8853 - accuracy: 0.3600 - val_loss: 1.3523 - val_accuracy: 0.7495

Epoch 2/100

18/1687 [..............................] - ETA: 10s - loss: 1.6272 - accuracy: 0.4792

/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py:3103: UserWarning: You are saving your model as an HDF5 file via `model.save()`. This file format is considered legacy. We recommend using instead the native Keras format, e.g. `model.save('my_model.keras')`.

  saving_api.save_model(

1685/1687 [============================>.] - ETA: 0s - loss: 1.4218 - accuracy: 0.5672

Epoch 2: val_loss improved from 1.35230 to 0.95672, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 1.4215 - accuracy: 0.5672 - val_loss: 0.9567 - val_accuracy: 0.7871

Epoch 3/100

1687/1687 [==============================] - ETA: 0s - loss: 1.1770 - accuracy: 0.6316

Epoch 3: val_loss improved from 0.95672 to 0.75706, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 7ms/step - loss: 1.1770 - accuracy: 0.6316 - val_loss: 0.7571 - val_accuracy: 0.7968

Epoch 4/100

1684/1687 [============================>.] - ETA: 0s - loss: 1.0440 - accuracy: 0.6617

Epoch 4: val_loss improved from 0.75706 to 0.66723, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 7ms/step - loss: 1.0442 - accuracy: 0.6617 - val_loss: 0.6672 - val_accuracy: 0.8107

Epoch 5/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.9719 - accuracy: 0.6803

Epoch 5: val_loss improved from 0.66723 to 0.59835, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.9722 - accuracy: 0.6802 - val_loss: 0.5983 - val_accuracy: 0.8175

Epoch 6/100

1687/1687 [==============================] - ETA: 0s - loss: 0.9129 - accuracy: 0.6979

Epoch 6: val_loss improved from 0.59835 to 0.56844, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.9129 - accuracy: 0.6979 - val_loss: 0.5684 - val_accuracy: 0.8284

Epoch 7/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.8799 - accuracy: 0.7058

Epoch 7: val_loss improved from 0.56844 to 0.54611, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.8803 - accuracy: 0.7055 - val_loss: 0.5461 - val_accuracy: 0.8319

Epoch 8/100

1687/1687 [==============================] - ETA: 0s - loss: 0.8536 - accuracy: 0.7158

Epoch 8: val_loss improved from 0.54611 to 0.52750, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.8536 - accuracy: 0.7158 - val_loss: 0.5275 - val_accuracy: 0.8334

Epoch 9/100

1687/1687 [==============================] - ETA: 0s - loss: 0.8268 - accuracy: 0.7255

Epoch 9: val_loss improved from 0.52750 to 0.51081, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 14s 8ms/step - loss: 0.8268 - accuracy: 0.7255 - val_loss: 0.5108 - val_accuracy: 0.8386

Epoch 10/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.8107 - accuracy: 0.7318

Epoch 10: val_loss improved from 0.51081 to 0.49830, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.8103 - accuracy: 0.7320 - val_loss: 0.4983 - val_accuracy: 0.8379

Epoch 11/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.7928 - accuracy: 0.7361

Epoch 11: val_loss improved from 0.49830 to 0.49690, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 14s 8ms/step - loss: 0.7923 - accuracy: 0.7363 - val_loss: 0.4969 - val_accuracy: 0.8384

Epoch 12/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.7868 - accuracy: 0.7385

Epoch 12: val_loss improved from 0.49690 to 0.48387, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7867 - accuracy: 0.7385 - val_loss: 0.4839 - val_accuracy: 0.8443

Epoch 13/100

1687/1687 [==============================] - ETA: 0s - loss: 0.7689 - accuracy: 0.7449

Epoch 13: val_loss improved from 0.48387 to 0.47823, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 14s 8ms/step - loss: 0.7689 - accuracy: 0.7449 - val_loss: 0.4782 - val_accuracy: 0.8421

Epoch 14/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.7628 - accuracy: 0.7463

Epoch 14: val_loss improved from 0.47823 to 0.47363, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7627 - accuracy: 0.7464 - val_loss: 0.4736 - val_accuracy: 0.8436

Epoch 15/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.7465 - accuracy: 0.7528

Epoch 15: val_loss improved from 0.47363 to 0.46710, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7466 - accuracy: 0.7528 - val_loss: 0.4671 - val_accuracy: 0.8446

Epoch 16/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.7388 - accuracy: 0.7560

Epoch 16: val_loss improved from 0.46710 to 0.46406, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7385 - accuracy: 0.7562 - val_loss: 0.4641 - val_accuracy: 0.8486

Epoch 17/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.7294 - accuracy: 0.7589

Epoch 17: val_loss improved from 0.46406 to 0.45721, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7295 - accuracy: 0.7589 - val_loss: 0.4572 - val_accuracy: 0.8491

Epoch 18/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.7235 - accuracy: 0.7599

Epoch 18: val_loss improved from 0.45721 to 0.45413, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7236 - accuracy: 0.7599 - val_loss: 0.4541 - val_accuracy: 0.8508

Epoch 19/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.7156 - accuracy: 0.7651

Epoch 19: val_loss improved from 0.45413 to 0.45155, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 18s 10ms/step - loss: 0.7154 - accuracy: 0.7651 - val_loss: 0.4515 - val_accuracy: 0.8498

Epoch 20/100

1687/1687 [==============================] - ETA: 0s - loss: 0.7162 - accuracy: 0.7643

Epoch 20: val_loss did not improve from 0.45155

1687/1687 [==============================] - 14s 8ms/step - loss: 0.7162 - accuracy: 0.7643 - val_loss: 0.4586 - val_accuracy: 0.8459

Epoch 21/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.7086 - accuracy: 0.7666

Epoch 21: val_loss improved from 0.45155 to 0.44700, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7085 - accuracy: 0.7667 - val_loss: 0.4470 - val_accuracy: 0.8483

Epoch 22/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.7045 - accuracy: 0.7673

Epoch 22: val_loss did not improve from 0.44700

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7046 - accuracy: 0.7672 - val_loss: 0.4487 - val_accuracy: 0.8489

Epoch 23/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6985 - accuracy: 0.7701

Epoch 23: val_loss improved from 0.44700 to 0.44265, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6988 - accuracy: 0.7699 - val_loss: 0.4426 - val_accuracy: 0.8531

Epoch 24/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6889 - accuracy: 0.7735

Epoch 24: val_loss improved from 0.44265 to 0.43872, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6886 - accuracy: 0.7736 - val_loss: 0.4387 - val_accuracy: 0.8519

Epoch 25/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6864 - accuracy: 0.7735

Epoch 25: val_loss improved from 0.43872 to 0.43230, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6863 - accuracy: 0.7734 - val_loss: 0.4323 - val_accuracy: 0.8566

Epoch 26/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6871 - accuracy: 0.7737

Epoch 26: val_loss did not improve from 0.43230

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6868 - accuracy: 0.7739 - val_loss: 0.4404 - val_accuracy: 0.8506

Epoch 27/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6769 - accuracy: 0.7776

Epoch 27: val_loss did not improve from 0.43230

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6768 - accuracy: 0.7776 - val_loss: 0.4374 - val_accuracy: 0.8529

Epoch 28/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6840 - accuracy: 0.7749

Epoch 28: val_loss did not improve from 0.43230

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6840 - accuracy: 0.7749 - val_loss: 0.4345 - val_accuracy: 0.8549

Epoch 29/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6788 - accuracy: 0.7788

Epoch 29: val_loss did not improve from 0.43230

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6785 - accuracy: 0.7790 - val_loss: 0.4328 - val_accuracy: 0.8514

Epoch 30/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6714 - accuracy: 0.7797

Epoch 30: val_loss did not improve from 0.43230

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6715 - accuracy: 0.7796 - val_loss: 0.4349 - val_accuracy: 0.8561

Epoch 31/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6676 - accuracy: 0.7800

Epoch 31: val_loss improved from 0.43230 to 0.43181, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 15s 9ms/step - loss: 0.6678 - accuracy: 0.7799 - val_loss: 0.4318 - val_accuracy: 0.8544

Epoch 32/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6640 - accuracy: 0.7817

Epoch 32: val_loss improved from 0.43181 to 0.42382, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 14s 9ms/step - loss: 0.6640 - accuracy: 0.7816 - val_loss: 0.4238 - val_accuracy: 0.8573

Epoch 33/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6670 - accuracy: 0.7816

Epoch 33: val_loss did not improve from 0.42382

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6669 - accuracy: 0.7817 - val_loss: 0.4279 - val_accuracy: 0.8539

Epoch 34/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6595 - accuracy: 0.7837

Epoch 34: val_loss improved from 0.42382 to 0.42215, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6596 - accuracy: 0.7836 - val_loss: 0.4222 - val_accuracy: 0.8573

Epoch 35/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6551 - accuracy: 0.7850

Epoch 35: val_loss did not improve from 0.42215

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6553 - accuracy: 0.7850 - val_loss: 0.4240 - val_accuracy: 0.8583

Epoch 36/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6541 - accuracy: 0.7870

Epoch 36: val_loss did not improve from 0.42215

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6544 - accuracy: 0.7870 - val_loss: 0.4296 - val_accuracy: 0.8558

Epoch 37/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6549 - accuracy: 0.7841

Epoch 37: val_loss improved from 0.42215 to 0.42087, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6547 - accuracy: 0.7842 - val_loss: 0.4209 - val_accuracy: 0.8595

Epoch 38/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6474 - accuracy: 0.7894

Epoch 38: val_loss improved from 0.42087 to 0.41692, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6472 - accuracy: 0.7895 - val_loss: 0.4169 - val_accuracy: 0.8620

Epoch 39/100

1680/1687 [============================>.] - ETA: 0s - loss: 0.6471 - accuracy: 0.7869

Epoch 39: val_loss did not improve from 0.41692

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6467 - accuracy: 0.7870 - val_loss: 0.4232 - val_accuracy: 0.8610

Epoch 40/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6437 - accuracy: 0.7886

Epoch 40: val_loss did not improve from 0.41692

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6435 - accuracy: 0.7887 - val_loss: 0.4208 - val_accuracy: 0.8591

Epoch 41/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6419 - accuracy: 0.7901

Epoch 41: val_loss did not improve from 0.41692

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6419 - accuracy: 0.7901 - val_loss: 0.4183 - val_accuracy: 0.8583

Epoch 42/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6399 - accuracy: 0.7906

Epoch 42: val_loss did not improve from 0.41692

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6399 - accuracy: 0.7905 - val_loss: 0.4245 - val_accuracy: 0.8570

Epoch 43/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6456 - accuracy: 0.7901

Epoch 43: val_loss improved from 0.41692 to 0.41269, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6455 - accuracy: 0.7901 - val_loss: 0.4127 - val_accuracy: 0.8620

Epoch 44/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6362 - accuracy: 0.7916

Epoch 44: val_loss improved from 0.41269 to 0.41099, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6362 - accuracy: 0.7916 - val_loss: 0.4110 - val_accuracy: 0.8623

Epoch 45/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6365 - accuracy: 0.7912

Epoch 45: val_loss improved from 0.41099 to 0.40983, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6365 - accuracy: 0.7912 - val_loss: 0.4098 - val_accuracy: 0.8665

Epoch 46/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6336 - accuracy: 0.7949

Epoch 46: val_loss improved from 0.40983 to 0.40962, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6335 - accuracy: 0.7950 - val_loss: 0.4096 - val_accuracy: 0.8608

Epoch 47/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6354 - accuracy: 0.7922

Epoch 47: val_loss improved from 0.40962 to 0.40934, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6354 - accuracy: 0.7922 - val_loss: 0.4093 - val_accuracy: 0.8648

Epoch 48/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6326 - accuracy: 0.7918

Epoch 48: val_loss did not improve from 0.40934

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6326 - accuracy: 0.7918 - val_loss: 0.4107 - val_accuracy: 0.8638

Epoch 49/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6290 - accuracy: 0.7953

Epoch 49: val_loss improved from 0.40934 to 0.40505, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6288 - accuracy: 0.7954 - val_loss: 0.4051 - val_accuracy: 0.8651

Epoch 50/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6242 - accuracy: 0.7945

Epoch 50: val_loss did not improve from 0.40505

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6243 - accuracy: 0.7945 - val_loss: 0.4092 - val_accuracy: 0.8656

Epoch 51/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6224 - accuracy: 0.7960

Epoch 51: val_loss did not improve from 0.40505

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6225 - accuracy: 0.7960 - val_loss: 0.4057 - val_accuracy: 0.8633

Epoch 52/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6285 - accuracy: 0.7931

Epoch 52: val_loss did not improve from 0.40505

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6286 - accuracy: 0.7930 - val_loss: 0.4059 - val_accuracy: 0.8638

Epoch 53/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6216 - accuracy: 0.7966

Epoch 53: val_loss did not improve from 0.40505

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6217 - accuracy: 0.7965 - val_loss: 0.4063 - val_accuracy: 0.8660

Epoch 54/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6181 - accuracy: 0.7976

Epoch 54: val_loss did not improve from 0.40505

1687/1687 [==============================] - 16s 9ms/step - loss: 0.6181 - accuracy: 0.7976 - val_loss: 0.4075 - val_accuracy: 0.8640

Epoch 55/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6198 - accuracy: 0.7964

Epoch 55: val_loss did not improve from 0.40505

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6199 - accuracy: 0.7965 - val_loss: 0.4104 - val_accuracy: 0.8623

Epoch 56/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6186 - accuracy: 0.7981

Epoch 56: val_loss did not improve from 0.40505

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6183 - accuracy: 0.7981 - val_loss: 0.4086 - val_accuracy: 0.8630

Epoch 57/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6181 - accuracy: 0.7968

Epoch 57: val_loss did not improve from 0.40505

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6180 - accuracy: 0.7969 - val_loss: 0.4087 - val_accuracy: 0.8643

Epoch 58/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6190 - accuracy: 0.7974

Epoch 58: val_loss improved from 0.40505 to 0.40263, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6192 - accuracy: 0.7972 - val_loss: 0.4026 - val_accuracy: 0.8628

Epoch 59/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6106 - accuracy: 0.7976

Epoch 59: val_loss did not improve from 0.40263

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6106 - accuracy: 0.7976 - val_loss: 0.4152 - val_accuracy: 0.8608

Epoch 60/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6179 - accuracy: 0.7963

Epoch 60: val_loss did not improve from 0.40263

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6179 - accuracy: 0.7963 - val_loss: 0.4053 - val_accuracy: 0.8661

Epoch 61/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6140 - accuracy: 0.7976

Epoch 61: val_loss did not improve from 0.40263

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6140 - accuracy: 0.7977 - val_loss: 0.4048 - val_accuracy: 0.8641

Epoch 62/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6122 - accuracy: 0.7997

Epoch 62: val_loss did not improve from 0.40263

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6122 - accuracy: 0.7997 - val_loss: 0.4035 - val_accuracy: 0.8675

Epoch 63/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6078 - accuracy: 0.8007

Epoch 63: val_loss improved from 0.40263 to 0.39922, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6081 - accuracy: 0.8006 - val_loss: 0.3992 - val_accuracy: 0.8671

Epoch 64/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6084 - accuracy: 0.8007

Epoch 64: val_loss did not improve from 0.39922

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6084 - accuracy: 0.8007 - val_loss: 0.4019 - val_accuracy: 0.8646

Epoch 65/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6022 - accuracy: 0.8031

Epoch 65: val_loss did not improve from 0.39922

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6022 - accuracy: 0.8031 - val_loss: 0.4036 - val_accuracy: 0.8638

Epoch 66/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6036 - accuracy: 0.8015

Epoch 66: val_loss improved from 0.39922 to 0.39657, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6034 - accuracy: 0.8015 - val_loss: 0.3966 - val_accuracy: 0.8707

Epoch 67/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6051 - accuracy: 0.7997

Epoch 67: val_loss did not improve from 0.39657

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6048 - accuracy: 0.7998 - val_loss: 0.4001 - val_accuracy: 0.8670

Epoch 68/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6053 - accuracy: 0.8030

Epoch 68: val_loss did not improve from 0.39657

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6053 - accuracy: 0.8030 - val_loss: 0.4028 - val_accuracy: 0.8650

Epoch 69/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6042 - accuracy: 0.8031

Epoch 69: val_loss did not improve from 0.39657

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6042 - accuracy: 0.8031 - val_loss: 0.4035 - val_accuracy: 0.8661

Epoch 70/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6072 - accuracy: 0.8021

Epoch 70: val_loss improved from 0.39657 to 0.39515, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6071 - accuracy: 0.8021 - val_loss: 0.3951 - val_accuracy: 0.8681

Epoch 71/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.5970 - accuracy: 0.8036

Epoch 71: val_loss did not improve from 0.39515

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5972 - accuracy: 0.8035 - val_loss: 0.3957 - val_accuracy: 0.8703

Epoch 72/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6035 - accuracy: 0.8031

Epoch 72: val_loss did not improve from 0.39515

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6037 - accuracy: 0.8030 - val_loss: 0.3984 - val_accuracy: 0.8658

Epoch 73/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6013 - accuracy: 0.8038

Epoch 73: val_loss did not improve from 0.39515

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6013 - accuracy: 0.8038 - val_loss: 0.3977 - val_accuracy: 0.8651

Epoch 74/100

683/1687 [===========>..................] - ETA: 6s - loss: 0.5873 - accuracy: 0.8068

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs_range = range(len(acc))

plt.figure(figsize=(8, 8))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Valid Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Valid Loss')

plt.show()

results = model.evaluate(test_dataset)
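`model.evaluate` returns the loss followed by the compiled metrics, which here is the accuracy. As a rough sketch of what that accuracy measures when the model emits logits and the labels are one-hot, the plain-Python example below compares the argmax of each. The helper names and the small three-class inputs are made up for illustration.

```python
def argmax(values):
    """Index of the largest element in a list."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(logit_rows, one_hot_rows):
    """Fraction of rows where the predicted class equals the labelled class."""
    correct = sum(
        argmax(logits) == argmax(target)
        for logits, target in zip(logit_rows, one_hot_rows)
    )
    return correct / len(logit_rows)


# Made-up logits and one-hot labels for four samples and three classes.
made_up_logits = [
    [2.0, 0.1, -1.0],
    [0.3, 1.5, 0.2],
    [0.9, 0.8, 0.1],   # predicts class 0, but the label is class 1
    [-0.5, 0.0, 0.4],
]
made_up_targets = [
    [1, 0, 0],
    [0, 1, 0],
    [0, 1, 0],
    [0, 0, 1],
]

example_accuracy = accuracy(made_up_logits, made_up_targets)
print(example_accuracy)
```

Softmax is not needed for this comparison because it preserves the ordering of the logits.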