Multi-variable Linear Regression

```python
import tensorflow as tf
from tensorflow.keras import layers, Sequential
import numpy as np
import matplotlib.pyplot as plt

print(tf.__version__)
```

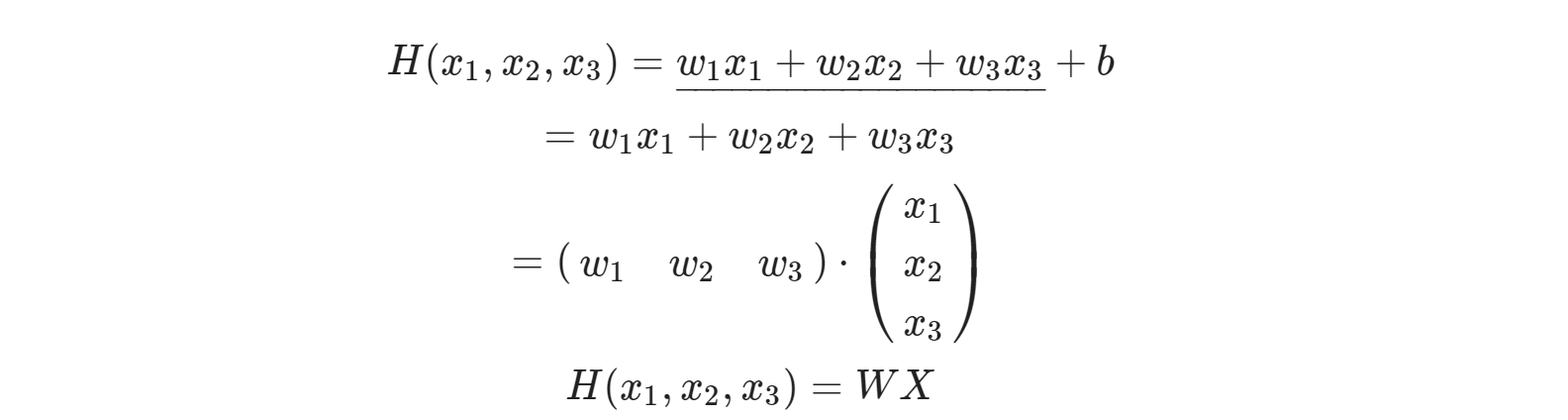

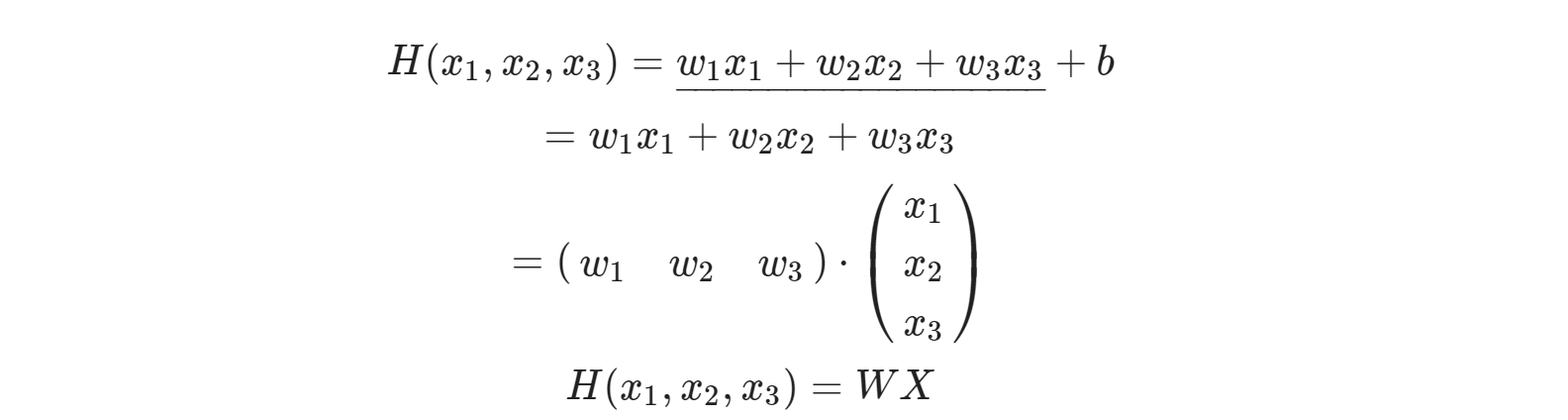

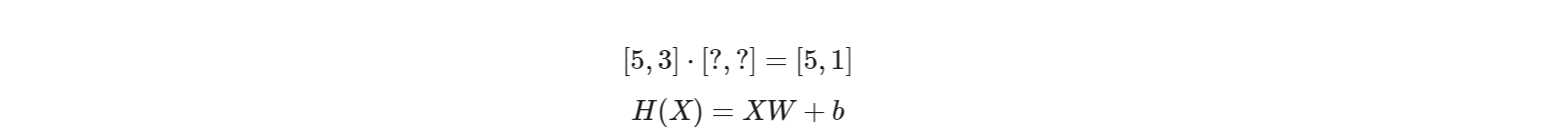

Hypothesis

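```python
# Two input variables (rows) observed over five samples (columns): shape (2, 5)
x_data = [
    [1., 0., 3., 0., 5.],
    [0., 2., 0., 4., 0.],
]
# One target value per sample: shape (5,)
y_data = [1., 2., 3., 4., 5.]

# One weight per input variable, shape (1, 2), and a single bias, shape (1,),
# both drawn uniformly from [-1, 1)
W = tf.Variable(tf.random.uniform([1, 2], -1.0, 1.0))
b = tf.Variable(tf.random.uniform([1], -1.0, 1.0))

print(np.array(x_data).shape, np.array(y_data).shape, W.shape, b.shape)
```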
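In this layout each row of `x_data` is one input variable and each column is one sample, so `tf.matmul(W, x_data) + b` with `W` of shape (1, 2) is shorthand for computing w1·x1 + w2·x2 + b for all five samples at once. A minimal NumPy sketch of that equivalence; the weight and bias values are arbitrary and chosen only for illustration:

```python
import numpy as np

# Same layout as x_data above: shape (2, 5), one row per input variable
x_data = np.array([[1., 0., 3., 0., 5.],
                   [0., 2., 0., 4., 0.]])

# Illustrative parameter values (not learned), same shapes as W and b above
W = np.array([[0.5, -1.5]])   # shape (1, 2)
b = np.array([2.0])           # shape (1,)

# Matrix form: (1, 2) @ (2, 5) -> (1, 5); b is broadcast over the 5 samples
h_matrix = W @ x_data + b

# Explicit per-variable form: w1 * x1 + w2 * x2 + b, element-wise over samples
h_explicit = W[0, 0] * x_data[0] + W[0, 1] * x_data[1] + b

print(h_matrix)
print(np.allclose(h_matrix[0], h_explicit))
```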

```python
learning_rate = 0.001

for i in range(100):
    # Record the forward pass so the tape can differentiate cost w.r.t. W and b
    with tf.GradientTape() as tape:
        hypothesis = tf.matmul(W, x_data) + b          # shape (1, 5)
        cost = tf.reduce_mean(tf.square(hypothesis - y_data))

    # Gradient-descent step: move each parameter against its gradient
    W_grad, b_grad = tape.gradient(cost, [W, b])
    W.assign_sub(learning_rate * W_grad)
    b.assign_sub(learning_rate * b_grad)

    if i % 10 == 0:
        print("step: {:3} \t cost: {:5.4f} \t w[0][0]: {:5.4f} \t w[0][1]: {:5.4f} \t b: {:5.4f}".format(
            i, cost.numpy(), W.numpy()[0][0], W.numpy()[0][1], b.numpy()[0]))
```
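`tape.gradient(cost, [W, b])` differentiates the mean squared error automatically. For this cost the gradients also have a closed form: ∂cost/∂W = (2/n)(h − y)xᵀ and ∂cost/∂b = (2/n)Σ(h − y), with n = 5 samples. The sketch below (NumPy, not TensorFlow) checks the hand-derived ∂cost/∂W against a finite-difference estimate and then runs the same update loop with those gradients. The starting values of `W` and `b` are fixed arbitrarily instead of drawn at random, so it illustrates the update rule rather than reproducing the printed log.

```python
import numpy as np

x = np.array([[1., 0., 3., 0., 5.],
              [0., 2., 0., 4., 0.]])     # (2, 5): variables as rows
y = np.array([1., 2., 3., 4., 5.])       # (5,)
n = y.size

def cost_fn(W, b):
    h = W @ x + b                        # (1, 5) hypothesis
    return np.mean((h - y) ** 2)

def grads(W, b):
    err = (W @ x + b) - y                # (1, 5) residuals
    dW = (2.0 / n) * err @ x.T           # (1, 2), same shape as W
    db = (2.0 / n) * err.sum(axis=1)     # (1,), same shape as b
    return dW, db

# Fixed (non-random) starting point for reproducibility
W = np.array([[0.5, -0.5]])
b = np.array([0.0])

# Central finite-difference estimate of dcost/dW[0, 0]
eps = 1e-6
W_plus = W.copy()
W_plus[0, 0] += eps
W_minus = W.copy()
W_minus[0, 0] -= eps
numeric = (cost_fn(W_plus, b) - cost_fn(W_minus, b)) / (2 * eps)
analytic = grads(W, b)[0][0, 0]

initial_cost = cost_fn(W, b)
learning_rate = 0.001
for i in range(100):
    dW, db = grads(W, b)
    W -= learning_rate * dW              # plays the role of W.assign_sub(...)
    b -= learning_rate * db
final_cost = cost_fn(W, b)

print(numeric, analytic)
print(initial_cost, final_cost)
```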

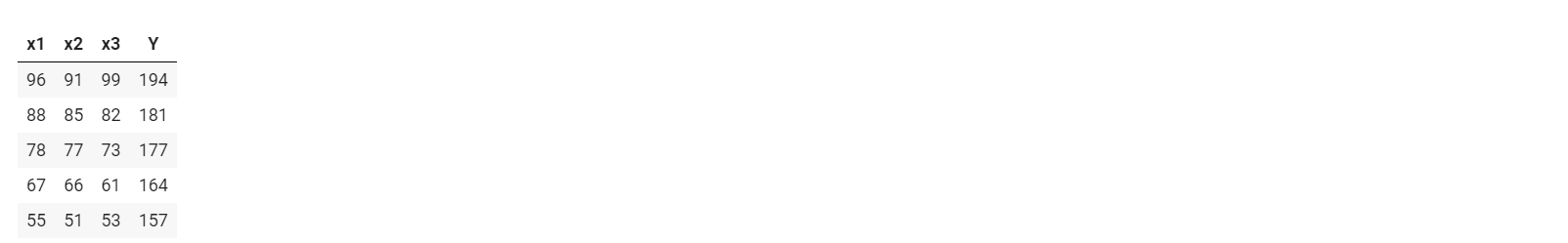

Test Score

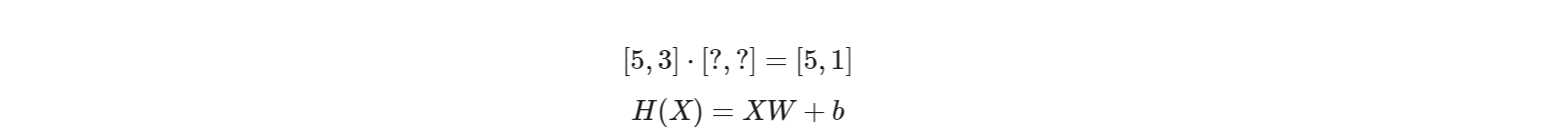

Hypothesis using matrix

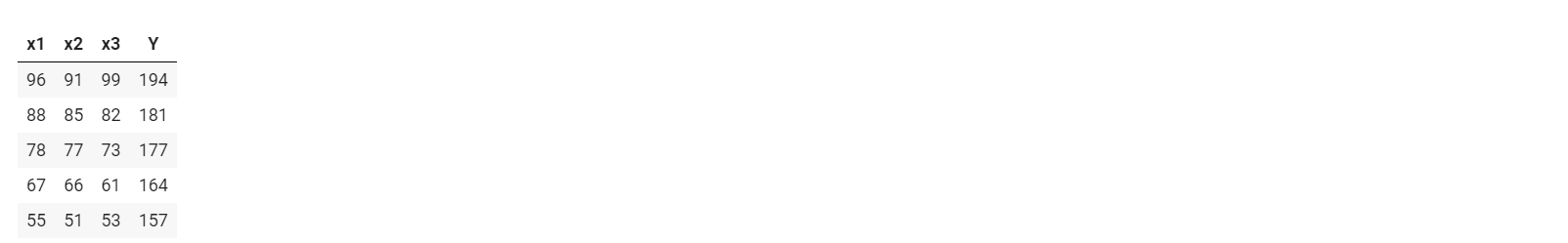
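The first example stored one variable per row and computed `tf.matmul(W, x_data)`. The code below stores one sample per row instead, so the same model becomes `X @ W + b` with `W` of shape (n_features, 1). The two layouts are transposes of each other, since (W x)ᵀ = xᵀ Wᵀ. A small NumPy check with random made-up numbers (not the test-score data):

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))      # samples as rows: (n_samples, n_features)
W = rng.normal(size=(3, 1))      # column of weights: (n_features, 1)
b = 0.7                          # scalar bias, broadcast to every sample

h_rows = X @ W + b               # (5, 3) @ (3, 1) -> (5, 1)
h_cols = W.T @ X.T + b           # (1, 3) @ (3, 5) -> (1, 5), variable-per-row layout

print(h_rows.shape, h_cols.shape)
print(np.allclose(h_rows, h_cols.T))
```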

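```python
# Each row is one sample: three input scores followed by the score to predict
data = np.array([
    [96., 91., 99., 194.],
    [88., 85., 82., 181.],
    [78., 77., 73., 177.],
    [67., 66., 61., 164.],
    [55., 51., 53., 157.],
], dtype=np.float32)

X = data[:, :-1]     # every column except the last: shape (5, 3)
print(X.shape)

y = data[:, [-1]]    # last column, kept two-dimensional: shape (5, 1)
print(y.shape)
```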
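`data[:, [-1]]` indexes with a list, which keeps the result two-dimensional, shape (5, 1), matching the (5, 1) output of `tf.matmul(X, W)` used in the cost. Plain `data[:, -1]` gives shape (5,), and subtracting a (5,) array from a (5, 1) prediction broadcasts to a (5, 5) matrix, so the "mean squared error" would silently average 25 cross-sample differences. A NumPy sketch of that pitfall, using a perfect prediction as a stand-in for `predict(X)`:

```python
import numpy as np

data = np.array([[96., 91., 99., 194.],
                 [88., 85., 82., 181.],
                 [78., 77., 73., 177.],
                 [67., 66., 61., 164.],
                 [55., 51., 53., 157.]], dtype=np.float32)

y_col = data[:, [-1]]    # list index keeps the axis -> (5, 1)
y_flat = data[:, -1]     # integer index drops the axis -> (5,)

pred = y_col.copy()      # a perfect prediction, shape (5, 1)

diff_ok = pred - y_col   # (5, 1) - (5, 1) -> (5, 1), all zeros
diff_bad = pred - y_flat # (5, 1) - (5,)   -> broadcasts to (5, 5)

print(y_col.shape, y_flat.shape, diff_ok.shape, diff_bad.shape)
print(np.mean(diff_ok ** 2), np.mean(diff_bad ** 2))
```

With the flat target, even a perfect predictor reports a nonzero error.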

```python
W = tf.Variable(tf.random.normal([3, 1]))   # one weight per input column
b = tf.Variable(tf.random.normal([1]))
learning_rate = 0.00001

def predict(X):
    # Matrix form of the hypothesis: (5, 3) @ (3, 1) + b -> (5, 1)
    return tf.matmul(X, W) + b

print("epoch | cost")

n_epochs = 1000
for i in range(n_epochs):
    with tf.GradientTape() as tape:
        cost = tf.reduce_mean(tf.square(predict(X) - y))

    W_grad, b_grad = tape.gradient(cost, [W, b])
    W.assign_sub(learning_rate * W_grad)
    b.assign_sub(learning_rate * b_grad)

    if i % 100 == 0:
        print("{:5} | {:10.4f}".format(i, cost.numpy()))
```
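The learning rate here is far smaller than in the first example because the raw scores lie roughly between 50 and 100. For a mean-squared-error cost, plain gradient descent converges only when the step size is below 2/λ_max, where λ_max is the largest eigenvalue of the cost's Hessian (2/n)AᵀA and A is X with a column of ones appended for the bias. The NumPy sketch below computes that bound for this data and runs gradient descent just below and just above it, starting from zero weights rather than random ones. It illustrates the idea and does not reproduce the TensorFlow log.

```python
import numpy as np

data = np.array([[96., 91., 99., 194.],
                 [88., 85., 82., 181.],
                 [78., 77., 73., 177.],
                 [67., 66., 61., 164.],
                 [55., 51., 53., 157.]])
X, y = data[:, :-1], data[:, [-1]]
n = X.shape[0]

# Append a column of ones so the bias is just a fourth weight
A = np.hstack([X, np.ones((n, 1))])          # (5, 4)

# Hessian of mean((A @ theta - y) ** 2) with respect to theta
hessian = (2.0 / n) * A.T @ A
lr_max = 2.0 / np.linalg.eigvalsh(hessian).max()

def run_gd(learning_rate, steps):
    """Full-batch gradient descent from theta = 0; returns the cost history."""
    theta = np.zeros((4, 1))
    costs = []
    for _ in range(steps):
        err = A @ theta - y
        costs.append(float(np.mean(err ** 2)))
        theta -= learning_rate * (2.0 / n) * A.T @ err
    return costs

stable = run_gd(1e-5, 1000)    # same step size as the TensorFlow loop above
unstable = run_gd(1e-4, 50)    # ten times larger, above lr_max

print(f"lr_max ~ {lr_max:.2e}")
print(f"lr=1e-5: {stable[0]:.1f} -> {stable[-1]:.1f}")
print(f"lr=1e-4: {unstable[0]:.1f} -> {unstable[-1]:.3e}")
```

Rescaling the inputs (for example, dividing every score by 100) would shrink λ_max and allow a larger step size; that preprocessing is not part of the original notebook.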

Let's make predictions based on the data

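```python
# Predictions for the five training rows (predict is defined above)
print(predict(X).numpy())

# Learned weights
print(W.numpy())

# Predictions for two new rows of scores
print(predict([[89., 95., 92.], [84., 92., 85.]]).numpy())
```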
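With such a small step size, 1,000 epochs of gradient descent may still leave the parameters some distance from the best fit. As a point of comparison, and as a different technique from the one used above, ordinary least squares finds the exact minimizer of the same cost in one call to `np.linalg.lstsq`. A NumPy sketch that also predicts the two new rows of scores from the cell above:

```python
import numpy as np

data = np.array([[96., 91., 99., 194.],
                 [88., 85., 82., 181.],
                 [78., 77., 73., 177.],
                 [67., 66., 61., 164.],
                 [55., 51., 53., 157.]])
X, y = data[:, :-1], data[:, [-1]]

# Treat the bias as an extra weight on a constant-1 feature
A = np.hstack([X, np.ones((X.shape[0], 1))])
theta, *_ = np.linalg.lstsq(A, y, rcond=None)
W_ls, b_ls = theta[:3], theta[3]             # (3, 1) weights and (1,) bias

cost_ls = np.mean((X @ W_ls + b_ls - y) ** 2)
print("W:", W_ls.ravel(), "b:", b_ls, "MSE:", cost_ls)

new_X = np.array([[89., 95., 92.],
                  [84., 92., 85.]])
new_pred = new_X @ W_ls + b_ls               # shape (2, 1)
print(new_pred)
```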

With the Keras API (tf.keras)

```python
data = np.array([
    [96., 91., 99., 194.],
    [88., 85., 82., 181.],
    [78., 77., 73., 177.],
    [67., 66., 61., 164.],
    [55., 51., 53., 157.],
], dtype=np.float32)

X = data[:, :-1]
y = data[:, [-1]]

# Pair each row of X with its target, then feed the model one sample per step
dataset = tf.data.Dataset.from_tensor_slices((X, y))
dataset = dataset.batch(batch_size=1)
```
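`from_tensor_slices((X, y))` slices both arrays along their first axis, so each element is one `(features, target)` pair, and `batch(batch_size=1)` regroups consecutive elements into batches. Because `drop_remainder` defaults to `False`, a short final batch is kept when the row count is not a multiple of the batch size. A plain NumPy sketch of that grouping; the helper `iterate_batches` is hypothetical and not part of `tf.data`:

```python
import numpy as np

def iterate_batches(X, y, batch_size):
    """Yield (x_batch, y_batch) pairs of consecutive rows, keeping a short final batch."""
    for start in range(0, len(X), batch_size):
        yield X[start:start + batch_size], y[start:start + batch_size]

X = np.arange(15, dtype=np.float32).reshape(5, 3)   # 5 samples, 3 features
y = np.arange(5, dtype=np.float32).reshape(5, 1)

batches_1 = list(iterate_batches(X, y, batch_size=1))
batches_2 = list(iterate_batches(X, y, batch_size=2))

print(len(batches_1), [xb.shape for xb, _ in batches_1])
print(len(batches_2), [xb.shape for xb, _ in batches_2])
```

With `batch_size=1`, each epoch of `model.fit` therefore performs five parameter updates instead of one full-batch update.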

```python
# A single Dense unit with linear activation computes x @ kernel + bias
model = Sequential([
    layers.Dense(1, activation='linear')
])

model.compile(optimizer='adam',
              loss='mse',
              metrics=['mse'])

model.fit(dataset, epochs=1000)
```
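`Dense(1, activation='linear')` computes the same `x @ kernel + bias` as `predict` above; what changes is the optimizer. The string `'adam'` selects Adam with Keras's default learning rate of 0.001. The NumPy sketch below implements the textbook Adam update (Kingma and Ba) with the usual defaults β1 = 0.9, β2 = 0.999 and ε = 1e-7, using full-batch gradients and zero initial weights for simplicity. Keras's own implementation may differ in small details, and the `fit` call above uses batches of one sample, so this illustrates the update rule rather than reproducing that training run.

```python
import numpy as np

data = np.array([[96., 91., 99., 194.],
                 [88., 85., 82., 181.],
                 [78., 77., 73., 177.],
                 [67., 66., 61., 164.],
                 [55., 51., 53., 157.]])
X, y = data[:, :-1], data[:, [-1]]
n = X.shape[0]

W = np.zeros((3, 1))
b = np.zeros(1)
params = [W, b]
m = [np.zeros_like(p) for p in params]   # first-moment (mean) estimates
v = [np.zeros_like(p) for p in params]   # second-moment (uncentered variance) estimates
lr, beta1, beta2, eps = 0.001, 0.9, 0.999, 1e-7

def mse():
    return float(np.mean((X @ W + b - y) ** 2))

initial = mse()
for t in range(1, 1001):
    err = X @ W + b - y                    # (5, 1) residuals
    grads = [(2.0 / n) * X.T @ err,        # dMSE/dW, shape (3, 1)
             (2.0 / n) * err.sum(axis=0)]  # dMSE/db, shape (1,)
    for i, (p, g) in enumerate(zip(params, grads)):
        m[i] = beta1 * m[i] + (1 - beta1) * g
        v[i] = beta2 * v[i] + (1 - beta2) * g ** 2
        m_hat = m[i] / (1 - beta1 ** t)    # bias correction for the zero start
        v_hat = v[i] / (1 - beta2 ** t)
        p -= lr * m_hat / (np.sqrt(v_hat) + eps)   # in place, so W and b change
final = mse()

print(initial, final)
```

Because each Adam step is scaled by a running estimate of the gradient's magnitude, the raw 50 to 100 input scale does not force the tiny step size that plain gradient descent needed above.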

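```python
# Draw the layer graph (requires the pydot package and Graphviz to be installed)
tf.keras.utils.plot_model(model, show_shapes=True)

# evaluate returns the loss followed by each metric; both are MSE here
test_loss, test_mse = model.evaluate(X, y, verbose=0)
print('Test MSE:', test_mse)

# Inspect one batch: input, target, and the model's prediction
# (distinct names so the full X and y arrays are not overwritten)
for x_batch, y_batch in dataset:
    print(x_batch)
    print(y_batch)
    print(model(x_batch))
    break
```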