Hyperparameter Tuning

The process of finding the combination of hyperparameters that maximizes model performance.

Hyperparameter tuning methods

- Random Search

- Each iteration tries a randomly sampled combination of hyperparameters. It fits the model on each sampled combination, records the model performance, and returns the best model with the best hyperparameters.

- Grid Search

- Each iteration tries a combination of hyperparameters in a specific order. It fits the model on each combination, records the model performance, and returns the best model with the best hyperparameters.

- Bayesian Optimization

- Tree-structured Parzen estimators (TPE)
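The difference between Grid Search and Random Search can be sketched in plain Python. This is only an illustration: `SEARCH_SPACE` and `toy_score` are made-up stand-ins for a real search space and for "fit the model and measure its validation performance".

```python
import itertools
import random

# Hypothetical search space, chosen only for illustration.
SEARCH_SPACE = {
    "learning_rate": [0.001, 0.01, 0.1],
    "max_depth": [2, 4, 6, 8],
}


def toy_score(params):
    """Stand-in for fitting a model and scoring it (higher is better).

    The peak is at learning_rate=0.01, max_depth=6 by construction.
    """
    return (-abs(params["learning_rate"] - 0.01) * 10
            - abs(params["max_depth"] - 6) * 0.1)


def grid_search(space, score_fn):
    """Try every combination in a fixed order and keep the best one."""
    names = list(space)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(space[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def random_search(space, score_fn, n_iter, seed=0):
    """Try n_iter randomly sampled combinations and keep the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = {name: rng.choice(values) for name, values in space.items()}
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


if __name__ == "__main__":
    print(grid_search(SEARCH_SPACE, toy_score))
    print(random_search(SEARCH_SPACE, toy_score, n_iter=5))
```

Grid Search always evaluates all 12 combinations here, while Random Search evaluates only `n_iter` of them, so it is cheaper but may miss the best combination.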

Hyperparameter tuning algorithms

- Hyperband

- Population-based Training (PBT)

- A hybrid of Random Search and manual tuning

- Many neural networks are trained in parallel, but they are not fully independent of each other.

- It uses information from the rest of the population to refine the hyperparameters, choosing each network's next hyperparameter values based on how the other networks perform.
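The PBT idea can be sketched with a toy problem. This is a simplified illustration, not a faithful reproduction of the published algorithm: each "network" is a single number `theta` trained by gradient descent on the loss `theta**2`, and the population size, the top/bottom quarter cutoff, and the 0.8/1.2 perturbation factors are all assumptions made for this example.

```python
import random


def train_step(theta, lr):
    """One gradient-descent step on the toy loss f(theta) = theta**2."""
    return theta - lr * 2 * theta


def evaluate(theta):
    """Higher is better, so return the negative loss."""
    return -theta ** 2


def pbt(pop_size=8, rounds=10, steps_per_round=5, seed=0):
    rng = random.Random(seed)
    # Start like random search: every member gets a random learning rate.
    population = [
        {"theta": 5.0, "lr": 10 ** rng.uniform(-4, -1), "score": None}
        for _ in range(pop_size)
    ]
    for _round in range(rounds):
        # Train every member for a while (in parallel in a real system).
        for member in population:
            for _step in range(steps_per_round):
                member["theta"] = train_step(member["theta"], member["lr"])
            member["score"] = evaluate(member["theta"])

        # Rank the population and let the worst members learn from the best.
        population.sort(key=lambda m: m["score"], reverse=True)
        cutoff = max(1, pop_size // 4)
        top, bottom = population[:cutoff], population[-cutoff:]
        for loser in bottom:
            winner = rng.choice(top)
            # Exploit: copy the weights of a better member.
            loser["theta"] = winner["theta"]
            loser["score"] = winner["score"]
            # Explore: perturb the copied hyperparameter.
            loser["lr"] = winner["lr"] * rng.choice([0.8, 1.2])
    return max(population, key=lambda m: m["score"])


if __name__ == "__main__":
    print(pbt())
```

The exploit step is what makes the networks depend on each other, and the explore step plays the role of the manual adjustments a person would make during training.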

Useful libraries for hyperparameter optimization

- Optuna

- Efficiently searches large spaces and prunes unpromising trials for faster results

-

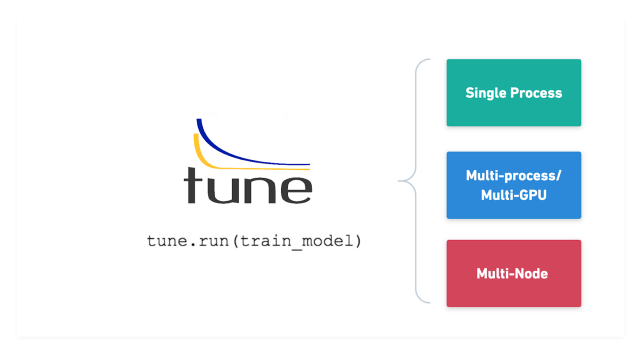

Ray Tune

Tune is a Python library for experiment execution and hyperparameter tuning at any scale. You can tune your favorite machine learning framework (PyTorch, XGBoost, Scikit-Learn, TensorFlow and Keras, and more) by running state-of-the-art algorithms such as Population Based Training (PBT) and HyperBand/ASHA.

Two Benefits

- They maximize model performance: e.g., DeepMind uses PBT to achieve superhuman performance on StarCraft; Waymo uses PBT to enable self-driving cars.

- They minimize training costs: HyperBand and ASHA converge to high-quality configurations in half the time taken by previous approaches; population-based data augmentation algorithms cut costs by orders of magnitude.

cf) ASHA (Asynchronous Successive Halving Algorithm): a popular early-stopping algorithm
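The early-stopping idea behind HyperBand and ASHA is successive halving. The sketch below shows the plain synchronous version, not ASHA itself (ASHA promotes configurations asynchronously so that workers never wait for each other). `partial_train` is a hypothetical stand-in for training a configuration for a given budget and returning its validation loss.

```python
def partial_train(config, budget):
    """Toy validation loss after training `config` for `budget` epochs.

    Hypothetical: the loss shrinks as the budget grows, and its floor
    depends on how far the learning rate is from 0.01.
    """
    return abs(config["lr"] - 0.01) + 1.0 / budget


def successive_halving(configs, min_budget=1, eta=2):
    """Keep the best 1/eta of the configurations each round,
    giving the survivors eta times more budget."""
    survivors = list(configs)
    budget = min_budget
    history = []  # (budget, number of configurations evaluated)
    while len(survivors) > 1:
        history.append((budget, len(survivors)))
        ranked = sorted(survivors, key=lambda c: partial_train(c, budget))
        survivors = ranked[: max(1, len(survivors) // eta)]
        budget *= eta
    return survivors[0], history


if __name__ == "__main__":
    candidates = [{"lr": lr}
                  for lr in (0.0001, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1.0)]
    winner, history = successive_halving(candidates)
    print(winner, history)
```

Most of the budget goes to the few configurations that survive, so poor configurations cost only a short training run.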

In short, Ray Tune scales your training from a single machine to a large distributed cluster without changing your code.

- Simplifies scaling

- It lets you use all of the cores and GPUs on the machine

- This enables parallel, asynchronous hyperparameter tuning.

- Flexible

- Supports any ML framework (PyTorch, TensorFlow, ...)

- It provides a flexible interface for optimization algorithms.

- Results can be visualized with MLflow and TensorBoard.

ref) https://neptune.ai/blog/hyperparameter-tuning-in-python-complete-guide