Batch size and Learning rate

https://openreview.net/pdf?id=B1Yy1BxCZ

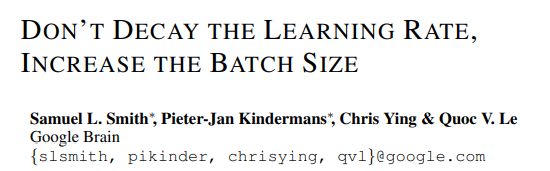

1. Batch Size

- small: more weight updates per epoch, so training tends to progress quickly, at the cost of noisy gradient estimates

- large: more accurate estimates of the error gradient, but fewer updates per epoch, so training tends to progress slowly
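
The noise trade-off above can be checked on a toy problem. This is a minimal sketch, not taken from the linked paper: the 1-D regression data, the helper names `minibatch_grad` and `grad_spread`, and the batch sizes 8 and 512 are all assumptions chosen for illustration. It measures how much the mini-batch gradient varies from batch to batch.

```python
import random
import statistics

random.seed(0)

# Toy 1-D regression data: y = 3x + noise (purely illustrative).
xs = [random.uniform(-1.0, 1.0) for _ in range(4096)]
data = [(x, 3.0 * x + random.gauss(0.0, 0.5)) for x in xs]

def minibatch_grad(w, batch):
    """Gradient of the mean of 0.5 * (w*x - y)^2 with respect to w over one batch."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)

def grad_spread(batch_size, w=0.0, trials=200):
    """Standard deviation of the mini-batch gradient across random batches."""
    grads = [minibatch_grad(w, random.sample(data, batch_size)) for _ in range(trials)]
    return statistics.stdev(grads)

small_spread = grad_spread(batch_size=8)
large_spread = grad_spread(batch_size=512)
print(f"batch   8: gradient std = {small_spread:.4f}")
print(f"batch 512: gradient std = {large_spread:.4f}")
```

The larger batch should show a much smaller spread, which is the "accurate estimate" side of the trade-off. The smaller batch gets 64 times as many updates out of the same data.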

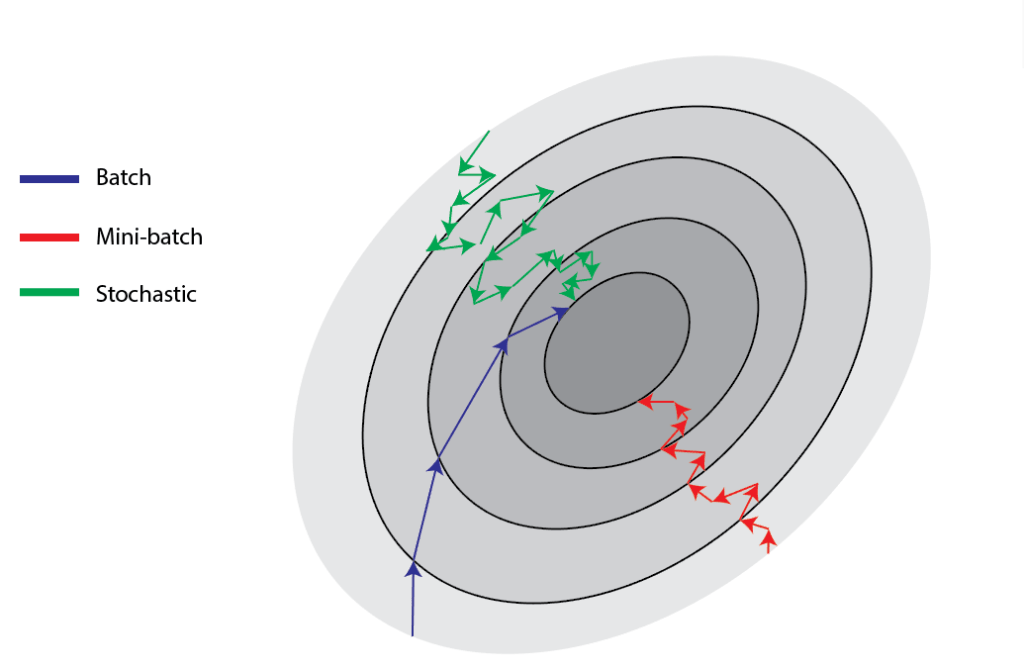

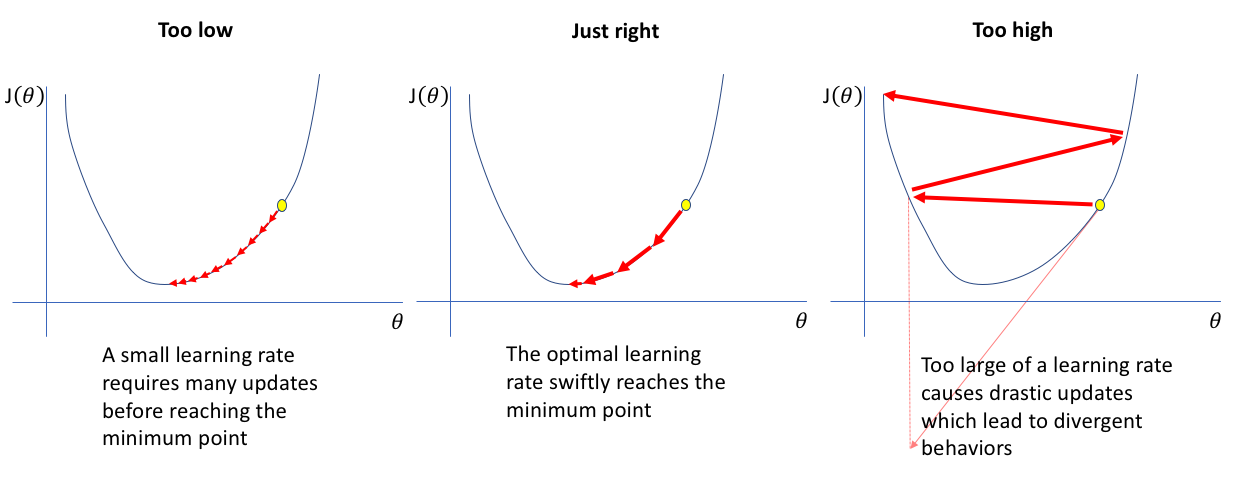

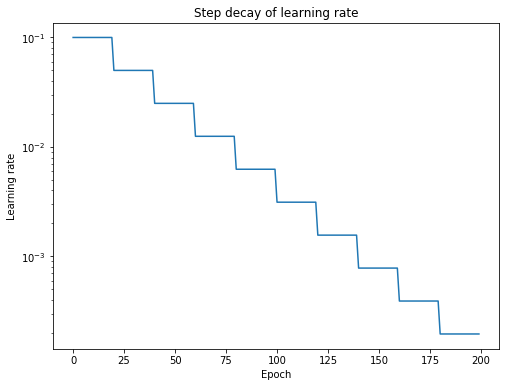

2. Learning Rate

The most popular form of learning rate annealing is a step decay, where the learning rate is reduced by some percentage after a set number of training epochs.
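
A minimal sketch of step decay follows. The function name `step_decay` and the defaults (halve the rate every 10 epochs) are assumptions for illustration, not values from the linked posts.

```python
def step_decay(base_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Learning rate at `epoch`: multiplied by `drop` once every `epochs_per_drop` epochs."""
    return base_lr * drop ** (epoch // epochs_per_drop)

# The rate stays flat within each 10-epoch window, then drops.
for epoch in (0, 9, 10, 25):
    print(epoch, step_decay(0.1, epoch))
```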

https://www.jeremyjordan.me/nn-learning-rate/

https://www.baeldung.com/cs/learning-rate-batch-size

https://inhovation97.tistory.com/32

Bag of Tricks for Image Classification with Convolutional Neural Networks

https://arxiv.org/abs/1812.01187

- Increase Batch size

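- Linear scaling learning rate: scale the initial learning rate in proportion to the batch size (with 0.1 for batch size 256, a batch size b uses 0.1 × b/256)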

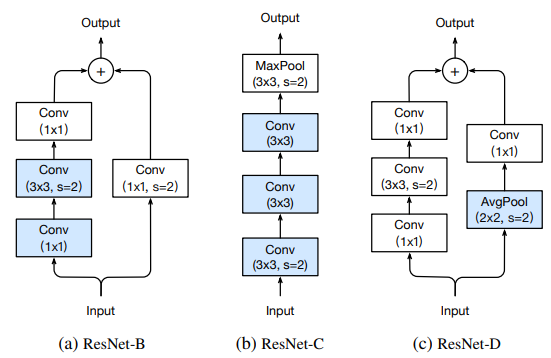
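
Linear scaling in the paper starts from an initial learning rate of 0.1 at batch size 256 and multiplies it by b/256 for a batch size b. A small sketch, with `linear_scaled_lr` as a hypothetical helper name:

```python
def linear_scaled_lr(batch_size, base_lr=0.1, base_batch_size=256):
    """Scale the initial learning rate in proportion to the batch size."""
    return base_lr * batch_size / base_batch_size

# Reference point (256) and two larger batch sizes.
for b in (256, 512, 1024):
    print(b, linear_scaled_lr(b))
```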

- Model Architecture Tweaks

“A model tweak is a minor adjustment to the network architecture, such as changing the stride of a particular convolution layer. Such a tweak often barely changes the computational complexity but might have a non-negligible effect on the model accuracy.”

- Training Refinements

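- Cosine Learning rate Decay: the learning rate follows half a cosine curve from its initial value down to 0 over the course of training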

- Label smoothing: the target puts 1 - ε on the true class and spreads ε evenly over the other K - 1 classes

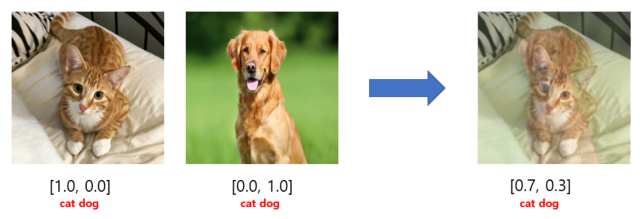
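
The two refinements listed just above, cosine decay and label smoothing, can be sketched in plain Python. The formulas follow the paper: η_t = ½(1 + cos(tπ/T))·η with warmup ignored, and a target of 1 - ε on the true class with ε/(K - 1) elsewhere. The function names and ε = 0.1 as the default are assumptions for illustration.

```python
import math

def cosine_decay_lr(base_lr, step, total_steps):
    """eta_t = 0.5 * (1 + cos(pi * t / T)) * eta, with warmup ignored."""
    return 0.5 * (1.0 + math.cos(math.pi * step / total_steps)) * base_lr

def smooth_labels(true_class, num_classes, eps=0.1):
    """Smoothed target: 1 - eps on the true class, eps / (K - 1) on every other class."""
    off_value = eps / (num_classes - 1)
    return [1.0 - eps if i == true_class else off_value for i in range(num_classes)]

# Learning rate at the start, midpoint, and end of a 100-step run.
print([round(cosine_decay_lr(0.1, t, 100), 4) for t in (0, 50, 100)])

# Smoothed target for class 2 out of 5 classes.
print(smooth_labels(true_class=2, num_classes=5))
```

Unlike step decay, the cosine schedule changes the rate smoothly: slowly at the start, roughly linearly in the middle, and slowly again near the end.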

- Mixup training: train on convex combinations of two random examples and their labels, weighted by λ ~ Beta(α, α)
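
A minimal sketch of mixup on plain Python lists: draw λ from Beta(α, α) and blend two examples and their one-hot labels with the same weight. The helper name `mixup`, the `rng` parameter, the toy inputs, and α = 0.2 as the default are assumptions for illustration.

```python
import random

def mixup(x_i, y_i, x_j, y_j, alpha=0.2, rng=random):
    """Blend two (input, one-hot label) pairs with lambda ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    x_hat = [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]
    y_hat = [lam * a + (1.0 - lam) * b for a, b in zip(y_i, y_j)]
    return x_hat, y_hat, lam

rng = random.Random(0)  # seeded only so the sketch is repeatable
x_hat, y_hat, lam = mixup([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], rng=rng)
print(lam, x_hat, y_hat)
```

Only the blended pair is used for training. The mixed label is still a valid distribution because the two weights sum to 1.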

https://norman3.github.io/papers/docs/bag_of_tricks_for_image_classification.html