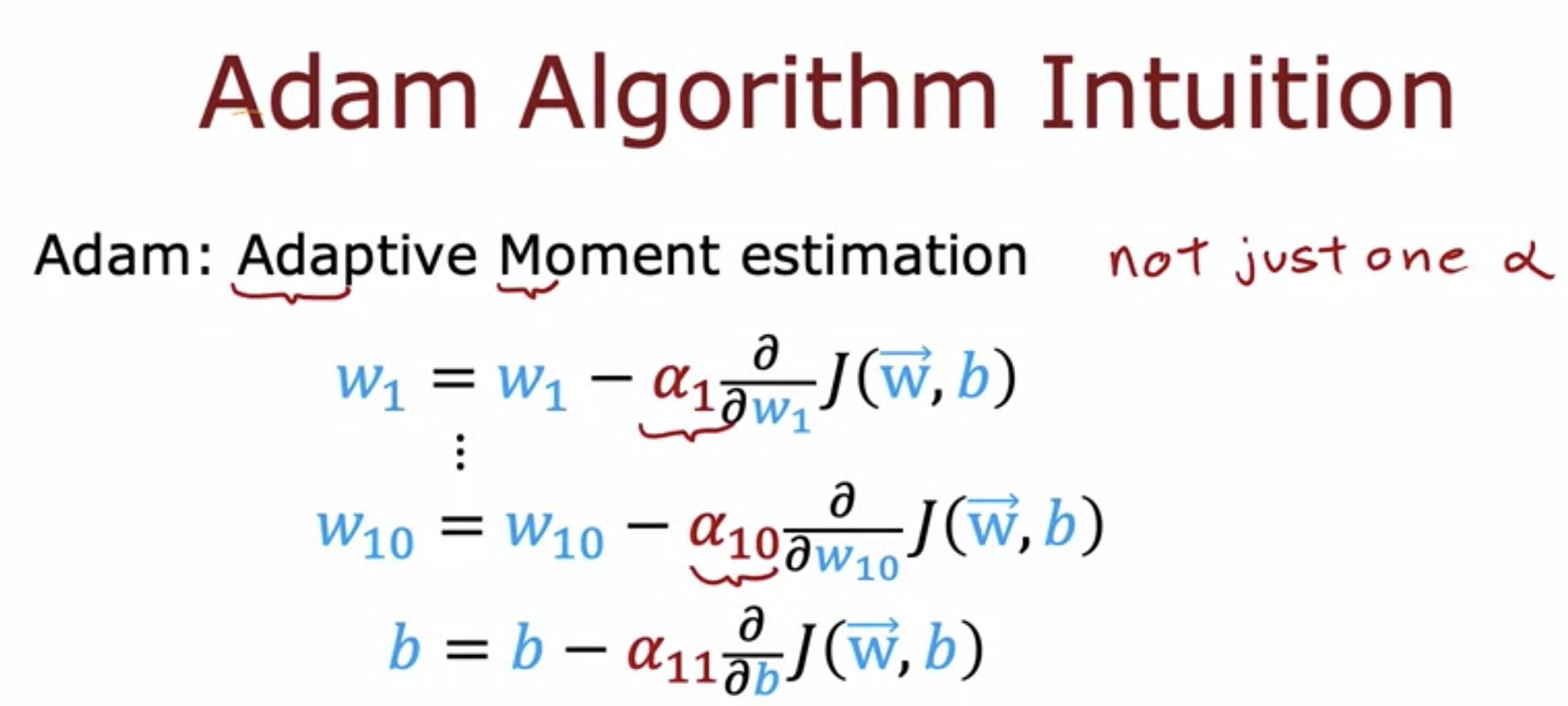

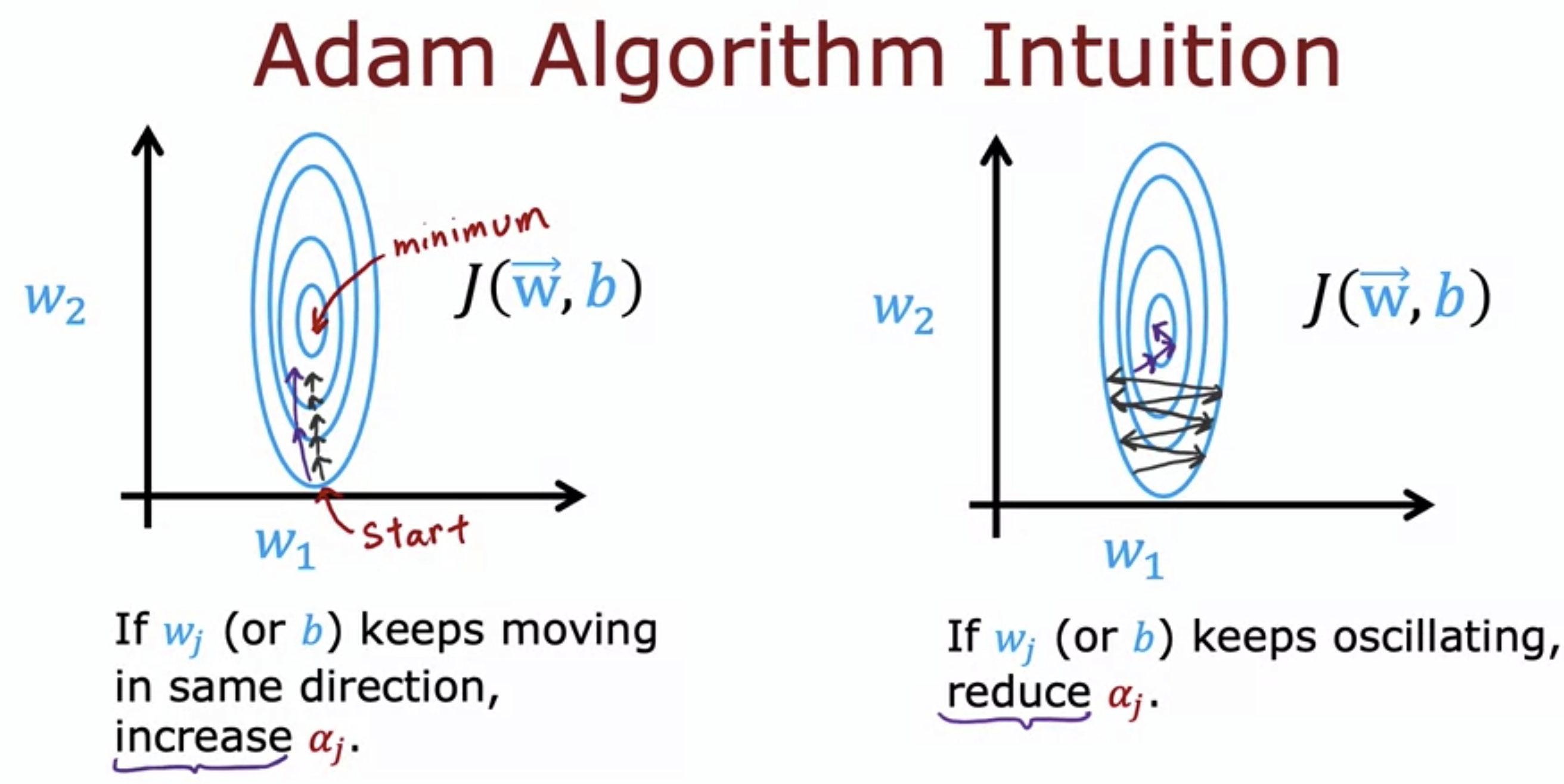

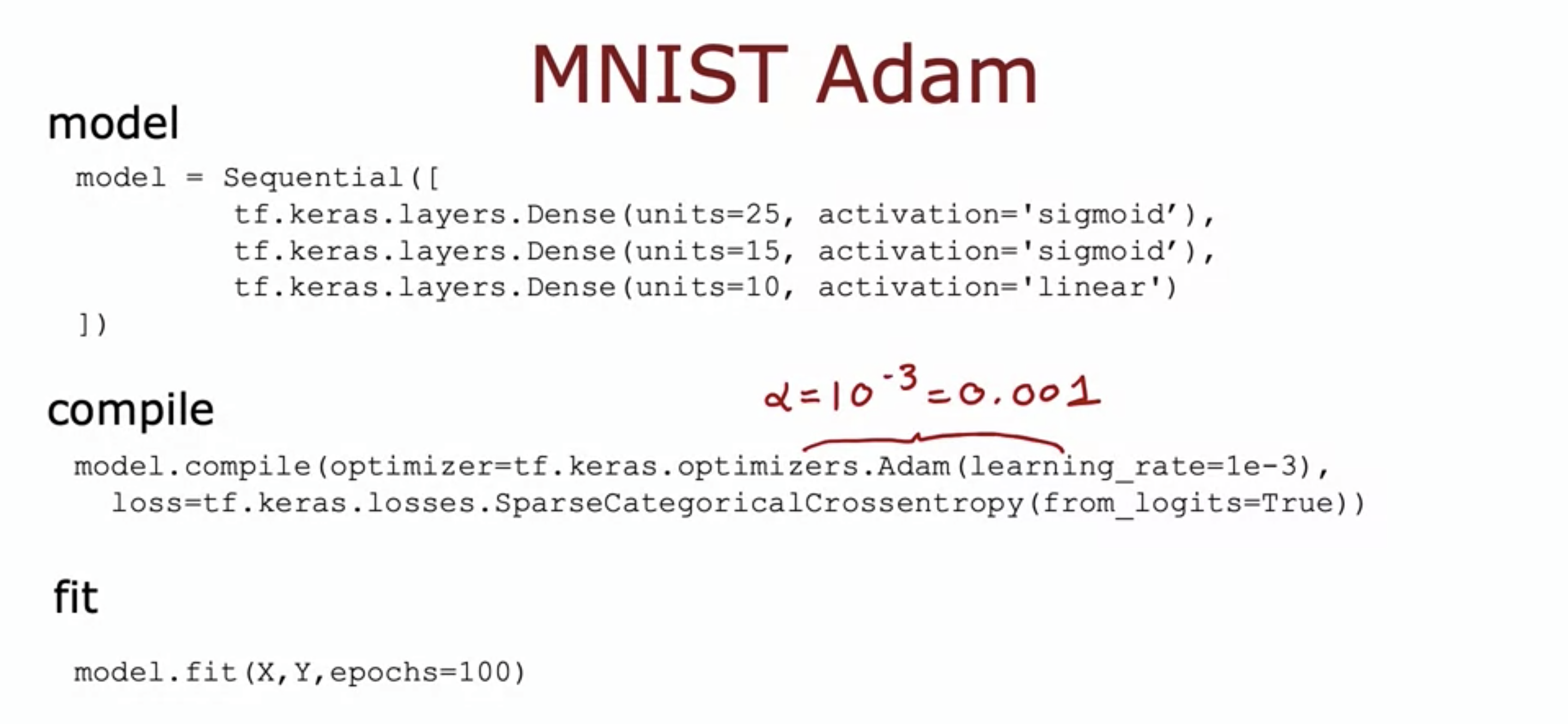

1. Advanced Optimization

- Adam (Adaptive Moment Estimation) is an optimizer that typically converges faster than plain gradient descent.

- Adam uses a separate learning rate α_j for each parameter. If w_j (or b) keeps moving in the same direction, Adam increases α_j.

- If w_j (or b) keeps oscillating, Adam reduces α_j.

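- Adam is selected through another parameter of `compile`, e.g. `optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3)`, where `learning_rate` sets the base step size α.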
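A minimal sketch in plain Python of the standard Adam update rule, to show where the per-parameter behaviour above comes from. In this rule the adaptation is implicit: each parameter's step is a running mean of its gradients divided by the square root of a running mean of its squared gradients. Gradients that keep the same sign give steps close to α, while gradients that flip sign cancel in the mean and give much smaller steps. The hyperparameter values are the commonly used defaults; the cost J(w, b), the helper names `adam_update`, `adam_minimize` and `step_sizes`, and the iteration count are hypothetical choices for illustration.

```python
import math


def adam_update(g, m, v, t, alpha=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single parameter (hypothetical helper).

    Returns (step, m, v): the amount to subtract from the parameter and the
    updated running averages. t is the 1-based iteration count.
    """
    m = beta1 * m + (1 - beta1) * g        # running mean of gradients (direction)
    v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients (size)
    m_hat = m / (1 - beta1 ** t)           # bias correction for the zero start
    v_hat = v / (1 - beta2 ** t)
    return alpha * m_hat / (math.sqrt(v_hat) + eps), m, v


def adam_minimize(grad_fn, params, steps=2000, **kw):
    """Minimize a cost by applying adam_update to every parameter separately."""
    params = list(params)
    m = [0.0] * len(params)
    v = [0.0] * len(params)
    for t in range(1, steps + 1):
        for j, g in enumerate(grad_fn(params)):
            step, m[j], v[j] = adam_update(g, m[j], v[j], t, **kw)
            params[j] -= step
    return params


def step_sizes(grads, **kw):
    """Size of each Adam step for one parameter fed a fixed gradient sequence."""
    m = v = 0.0
    sizes = []
    for t, g in enumerate(grads, start=1):
        step, m, v = adam_update(g, m, v, t, **kw)
        sizes.append(abs(step))
    return sizes


# Hypothetical cost J(w, b) = (w - 3)^2 + 10 * (b + 1)^2, minimized at w = 3, b = -1.
def grad_J(p):
    w, b = p
    return [2 * (w - 3), 20 * (b + 1)]


w, b = adam_minimize(grad_J, [0.0, 0.0])
same_direction = step_sizes([1.0] * 20)      # gradient never changes sign
oscillating = step_sizes([1.0, -1.0] * 10)   # gradient flips sign every step
print(round(w, 3), round(b, 3))
print(same_direction[-1], oscillating[-1])
```

With these settings `w` and `b` end up close to 3 and -1. The constant gradient keeps stepping by about α = 0.01, while the last step for the sign-flipping gradient is only about 5% of α.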

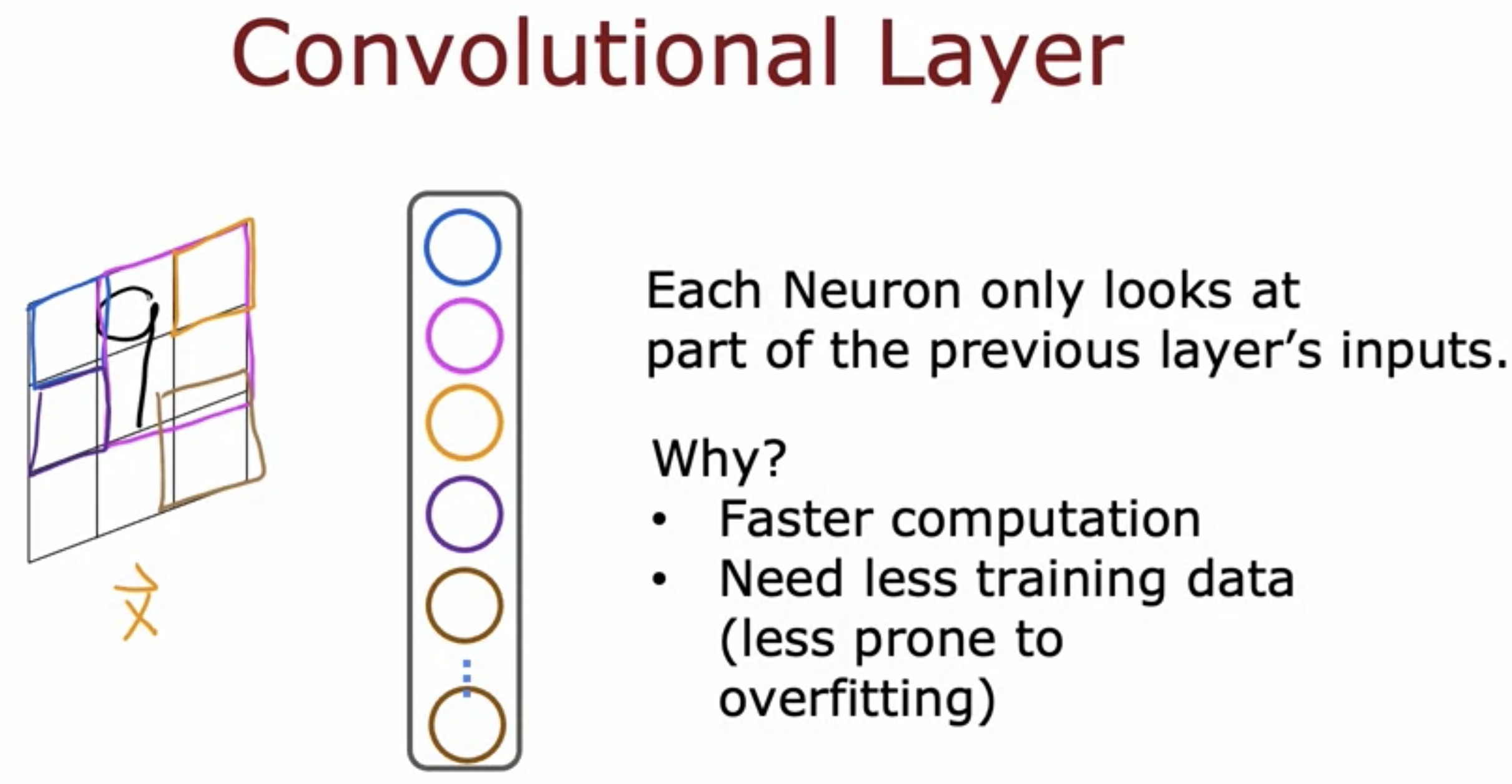

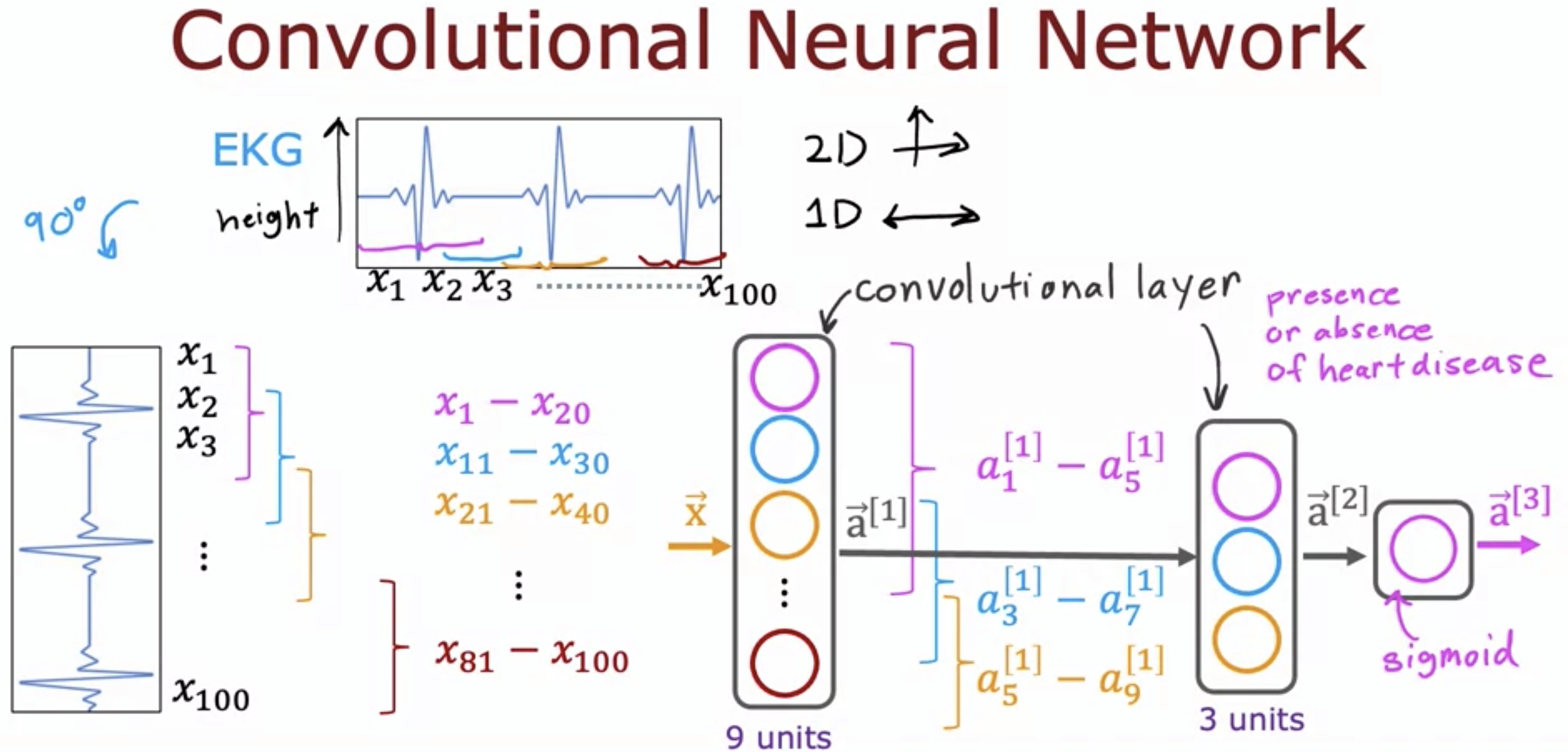

2. Additional Layer Types

- Convolutional layer: each neuron looks at only a small part (a window) of the previous layer's activations.

- Benefits: faster computation (each neuron has fewer inputs) and less training data needed (less prone to overfitting).

- In this example, each input x_i is the height of the signal at one point in time.

- In the first convolutional layer, each neuron only looks at a part of the original input.

- The second layer can also be a convolutional layer, with each neuron looking at only a small number of neurons in the previous layer.

- Only the final (output) layer takes in all activations from the previous layer.
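A minimal sketch in plain Python of the forward pass described above. The layer sizes are illustrative assumptions: a 100-sample input, a first layer whose neurons each see 20 consecutive samples with windows starting 10 apart (9 neurons), a second layer whose neurons each see 5 consecutive activations with windows starting 2 apart (3 neurons), and one sigmoid output unit. The sine-wave input, the sigmoid activations, all weight values, and the helper names `conv1d_layer`, `dense_unit` and `connections` are hypothetical. The neurons within a layer share one filter, which is how convolutional layers are usually implemented; the notes themselves only specify the restricted windows.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def conv1d_layer(inputs, kernel, bias, stride):
    """1D convolutional layer with one shared filter (illustrative sketch).

    Neuron k looks only at inputs[k*stride : k*stride + len(kernel)],
    never at the whole previous layer. Every neuron reuses the same
    kernel and bias (weight sharing).
    """
    size = len(kernel)
    outputs = []
    for start in range(0, len(inputs) - size + 1, stride):
        window = inputs[start:start + size]
        z = sum(w * x for w, x in zip(kernel, window)) + bias
        outputs.append(sigmoid(z))
    return outputs


def dense_unit(inputs, weights, bias):
    """Output unit that takes in every activation from the previous layer."""
    return sigmoid(sum(w * a for w, a in zip(weights, inputs)) + bias)


def connections(n_in, size, stride):
    """Neuron count and multiply-adds for one windowed layer.

    A dense layer with the same number of neurons would need n_out * n_in.
    """
    n_out = (n_in - size) // stride + 1
    return n_out, n_out * size


# Hypothetical input: 100 samples of a signal's height over time.
x = [math.sin(2 * math.pi * i / 25) for i in range(100)]

# Layer 1: 9 neurons, each sees 20 consecutive samples (windows start 10 apart).
a1 = conv1d_layer(x, kernel=[0.05] * 20, bias=0.0, stride=10)
# Layer 2: 3 neurons, each sees 5 consecutive layer-1 activations (start 2 apart).
a2 = conv1d_layer(a1, kernel=[0.2] * 5, bias=0.0, stride=2)
# Output layer: one sigmoid unit reading all 3 activations.
y = dense_unit(a2, weights=[1.0, 1.0, 1.0], bias=-1.5)

print(len(a1), len(a2), round(y, 3))
print(connections(100, 20, 10))
```

For the first layer, `connections(100, 20, 10)` gives 9 neurons and 180 multiply-adds, versus 900 for a dense layer of 9 units that each read all 100 inputs. The shared filter also needs only 20 weights plus one bias instead of 900 weights plus 9 biases, and fewer weights to fit is one reason such a layer tends to need less training data.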