Performance Evaluation Metrics

-

In the world of machine learning, performance evaluation metrics play a critical role in determining the effectiveness of a model. Metrics such as precision, recall, and the F1 score are widely used to evaluate classification models, especially when the dataset is imbalanced.

-

This comprehensive guide breaks down these concepts, explains their formulas, and shows you how to implement them step-by-step using Python and scikit-learn. Let’s dive in!

What Are Precision, Recall, and F1 Score?

Before jumping into the calculations and code, let’s define these terms:

-

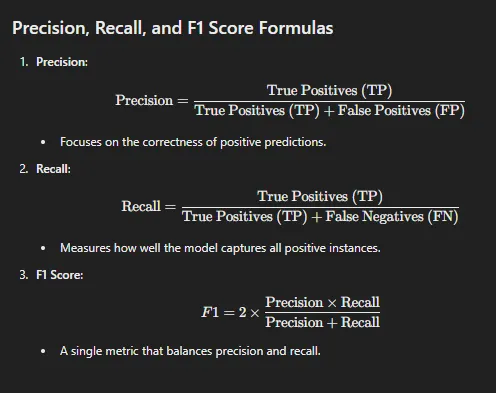

Precision: Measures the accuracy of positive predictions.

- It answers the question, “Of all the items the model labeled as positive, how many were actually positive?”

-

Recall (Sensitivity): Measures the model’s ability to find all the positive instances.

- It answers the question, “Of all the actual positives, how many did the model correctly identify?”

-

F1 Score: The harmonic mean of precision and recall. It balances the two metrics into a single number, making it especially useful when precision and recall are in trade-off.

Why Accuracy Isn’t Always Enough

While accuracy is often the first metric to evaluate, it can be misleading in imbalanced datasets. For example:

Imagine a dataset where 99% of the data belongs to Class A and only 1% to Class B.

A model that always predicts Class A would have 99% accuracy but would completely fail to detect Class B.

In such scenarios, precision, recall, and F1 score provide deeper insights.

Precision, Recall, and F1 Score Formulas

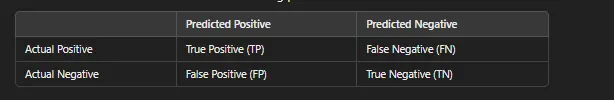

Visualizing Metrics Using a Confusion Matrix

-

The confusion matrix is essential to understanding precision and recall. Here’s a basic structure:

-

True Positive (TP): Correctly predicted positives.

-

False Positive (FP): Incorrectly predicted positives (Type I Error).

-

False Negative (FN): Missed positives (Type II Error).

-

True Negative (TN): Correctly predicted negatives.

-

Real-Life Example: Precision and Recall Trade-offs

Scenario 1: Email Spam Classifier

- Precision matters more: Minimizing false positives is critical to avoid classifying important emails as spam.

Scenario 2: Cancer Detection

- Recall matters more: Minimizing false negatives ensures that no cases of cancer go undetected.

Trade-Offs

- Increasing precision may decrease recall and vice versa. The F1 score balances this trade-off.

Multi-Class Classification: How It’s Different

In multi-class classification (more than two classes), precision, recall, and F1 scores are calculated for each class individually. Then, overall metrics are computed using either:

-

Macro Average

-

Takes the unweighted mean of individual metrics across classes.

-

Treats all classes equally.

-

-

Weighted Average

-

Weights each class’s metric by the number of true instances in that class.

-

Accounts for class imbalance.

-

Step-by-Step Implementation Using Python

- Here’s how to calculate precision, recall, and F1 score in Python using scikit-learn.

Step 1: Import Necessary Libraries

from sklearn.metrics import precision_score, recall_score, f1_score, classification_reportStep 2: Train a Classifier

Use any classifier like Logistic Regression or Decision Tree. Here’s an example:

from sklearn.datasets import load_digits

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

# Load dataset

data = load_digits()

X_train, X_test, y_train, y_test = train_test_split(data.data, data.target, test_size=0.3, random_state=42)

# Train logistic regression model

model = LogisticRegression(max_iter=10000)

model.fit(X_train, y_train)

# Make predictions

y_pred = model.predict(X_test)Step 3: Compute Metrics

# Precision, Recall, F1 Score for each class

print("Precision (Per Class):", precision_score(y_test, y_pred, average=None))

print("Recall (Per Class):", recall_score(y_test, y_pred, average=None))

print("F1 Score (Per Class):", f1_score(y_test, y_pred, average=None))

# Macro and Weighted Averages

print("Macro Precision:", precision_score(y_test, y_pred, average='macro'))

print("Weighted Precision:", precision_score(y_test, y_pred, average='weighted'))

print("Macro Recall:", recall_score(y_test, y_pred, average='macro'))

print("Weighted Recall:", recall_score(y_test, y_pred, average='weighted'))

print("Macro F1 Score:", f1_score(y_test, y_pred, average='macro'))

print("Weighted F1 Score:", f1_score(y_test, y_pred, average='weighted'))

# Classification Report

print("Classification Report:\n", classification_report(y_test, y_pred))Output

- The classification_report gives a summary of all metrics for each class and their macro/weighted averages.

Key Takeaways

-

Choose the Right Metric

-

Use precision for problems where false positives are critical.

-

Use recall for problems where false negatives are critical.

-

Use F1 score when precision and recall are equally important.

-

-

Handle Class Imbalance

-

Use weighted averages for imbalanced datasets.

-

se macro averages for balanced datasets.

-

-

Understand the Trade-offs

- High precision might mean low recall and vice versa. Balance them with the F1 score.

Final Thoughts

Understanding and implementing precision, recall, and F1 score is crucial for evaluating your machine learning models effectively. By mastering these metrics, you’ll gain better insights into your model’s performance, especially in imbalanced datasets or multi-class setups.

FAQs

-

What is the difference between precision and recall?

Precision focuses on the correctness of positive predictions, while recall measures the model’s ability to identify all positive instances. -

When should I use F1 score?

F1 score is useful when you need a balance between precision and recall, especially in scenarios with no clear trade-off preference. -

What is a good F1 score?

A good F1 score depends on the context. Scores closer to 1 indicate better performance.