Reference

💻 딥러닝의 깊이 있는 이해를 위한 머신러닝 강의 11강 (Machine Learning Lectures for a Deeper Understanding of Deep Learning, Lecture 11)

📄 Various Types of Supervision in Machine Learning

📄 Weak Supervision (Part I)

📄 Snorkel — A Weak Supervision System

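I wanted to properly understand weak supervision and semi-supervised learning, so I studied them using the lecture and posts above.

This post only goes as far as organizing the concepts and terminology.

I may have misunderstood something, so there could be errors. If you spot one, I would appreciate a comment.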

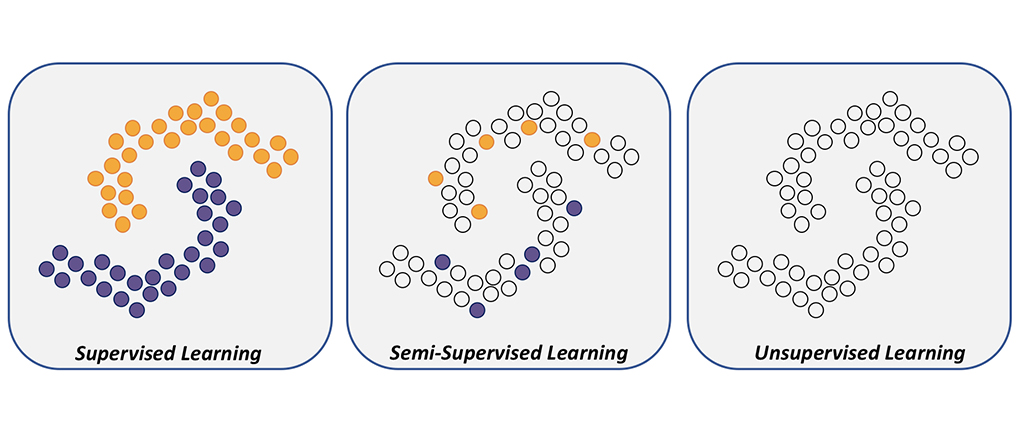

There are several types of supervision for training machine learning models.

Data-driven machine learning models are categorized by how they use labeled samples.

Supervised: the model uses a set of (x, y) pairs for training, where x is the feature vector and y is the associated label.

Unsupervised: the model uses just the feature vectors with no label information for training. In other words, it learns patterns from unlabeled data.

Semi-Supervised: a combination of labeled and unlabeled samples is used for training. You can think of it as a mix of the supervised and unsupervised approaches above.
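To make the three settings concrete, here is a minimal sketch of what the training data looks like in each case. The sample values and the helper names `split_by_label` and `supervision_setting` are my own assumptions for illustration; they do not come from the lecture or the referenced posts.

```python
# Each sample is (feature_vector, label). A label of None means "unlabeled".
# All values here are made up for illustration.
samples = [
    ([0.2, 1.1], "positive"),
    ([0.9, 0.3], "negative"),
    ([0.4, 0.8], None),
    ([0.7, 0.5], None),
]


def split_by_label(samples):
    """Separate labeled (x, y) pairs from unlabeled feature vectors."""
    labeled = [(x, y) for x, y in samples if y is not None]
    unlabeled = [x for x, y in samples if y is None]
    return labeled, unlabeled


def supervision_setting(samples):
    """Name the setting implied by which samples carry labels."""
    labeled, unlabeled = split_by_label(samples)
    if not labeled and not unlabeled:
        raise ValueError("no samples given")
    if not unlabeled:
        return "supervised"
    if not labeled:
        return "unsupervised"
    return "semi-supervised"


print(supervision_setting(samples))      # mixed labeled and unlabeled data
print(supervision_setting(samples[:2]))  # every sample has a label
print(supervision_setting(samples[2:]))  # no sample has a label
```

The only thing that changes between the settings is how many samples carry a label, which is the axis the next figure uses.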

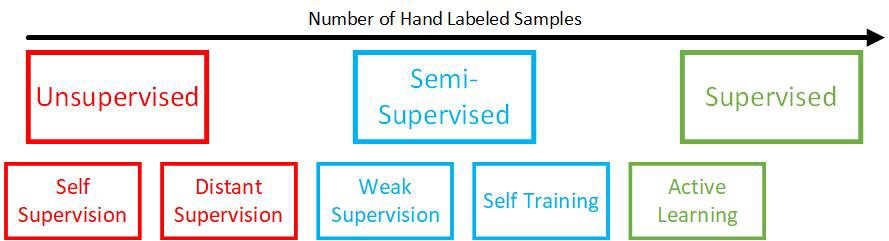

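The figure above shows supervision strategies arranged by the number of labeled samples.

In general, the best performance comes from supervised models.

In this kind of traditional supervision, the practice has been to identify the data points closer to the decision boundary and to prioritize domain experts' time on those points, in the hope that the model learns from more informative examples.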

There are drawbacks, however. As most readers know, labeled samples are expensive to build.

Labeling costs even more when it requires domain expertise, and the task itself can change over time.

Manually labeled (human-annotated) training data is static and does not adapt as things change.

Several approaches have emerged to deal with this problem.

Of these, I will summarize the concepts of weak supervision and semi-supervised learning.

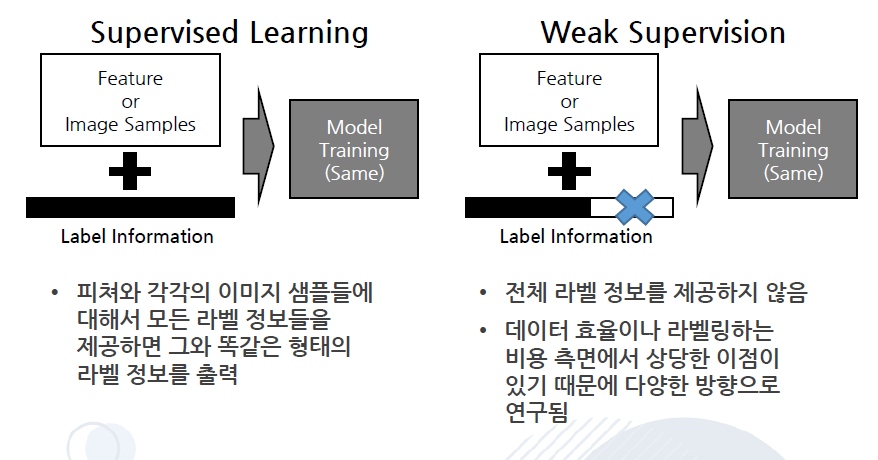

Weak supervision

I will borrow the definition from Wikipedia.

“Weak Supervision is a branch of machine learning where noisy, limited, or imprecise sources are used to provide supervision signal for labeling large amounts of training data in a supervised learning setting.”

In other words, weak supervision uses noisy, limited, or imprecise sources as the supervision signal for labeling large amounts of training data.

The approach is said to have been developed to ease the data labeling bottleneck.

In weak supervision, all of the data carries labels, but those labels provide only part of the information in the final target label.

If labels can be obtained far more cheaply and for much more data, we can extract more information from that data.

Put more simply, it is a way of assigning labels to data points programmatically.

These labels are said to be imperfect because they come from sources such as the following:

- Domain heuristics (e.g. common patterns, rules of thumb, etc.)

- Existing ground-truth data that is not an exact fit for the task at hand, but close enough to be useful (traditionally called “distant supervision”)

- Unreliable non-expert annotators (e.g. crowdsourcing)

Heuristics, functions, distributions, domain knowledge, and similar sources can be used to supply noisy labels to a classifier.

The classifier then trains on the noisy labels that each source provides. Snorkel is a well-known library for this kind of data labeling.
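As a rough sketch of what "assigning labels programmatically" can look like, the code below writes a few heuristic labeling functions and combines their votes with a simple majority vote. This is plain Python and not Snorkel's API. The keyword rules, the function names, and the tie-handling choice are all assumptions I made for illustration, and a library such as Snorkel offers more sophisticated ways to combine noisy sources.

```python
from collections import Counter

ABSTAIN, NEGATIVE, POSITIVE = -1, 0, 1


# Hypothetical labeling functions: each encodes one noisy heuristic and
# abstains when the heuristic does not apply.
def lf_contains_great(text):
    return POSITIVE if "great" in text.lower() else ABSTAIN


def lf_contains_terrible(text):
    return NEGATIVE if "terrible" in text.lower() else ABSTAIN


def lf_exclamation(text):
    # A rule of thumb that is often wrong, which is exactly why the
    # resulting labels are called "weak".
    return POSITIVE if text.strip().endswith("!") else ABSTAIN


LABELING_FUNCTIONS = [lf_contains_great, lf_contains_terrible, lf_exclamation]


def majority_vote(text):
    """Combine the non-abstaining votes; abstain on no votes or on a tie."""
    votes = [lf(text) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


comments = [
    "This movie was great!",
    "Terrible acting and a terrible plot",
    "It arrived on Tuesday",
    "Great idea, terrible execution",
]
weak_labels = [majority_vote(c) for c in comments]

# Only the comments that received a weak label go on to train a classifier.
training_set = [(c, y) for c, y in zip(comments, weak_labels) if y != ABSTAIN]
print(training_set)
```

Comments on which the heuristics disagree or stay silent get no label here, so the downstream classifier only sees the examples where the weak sources had something to say.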

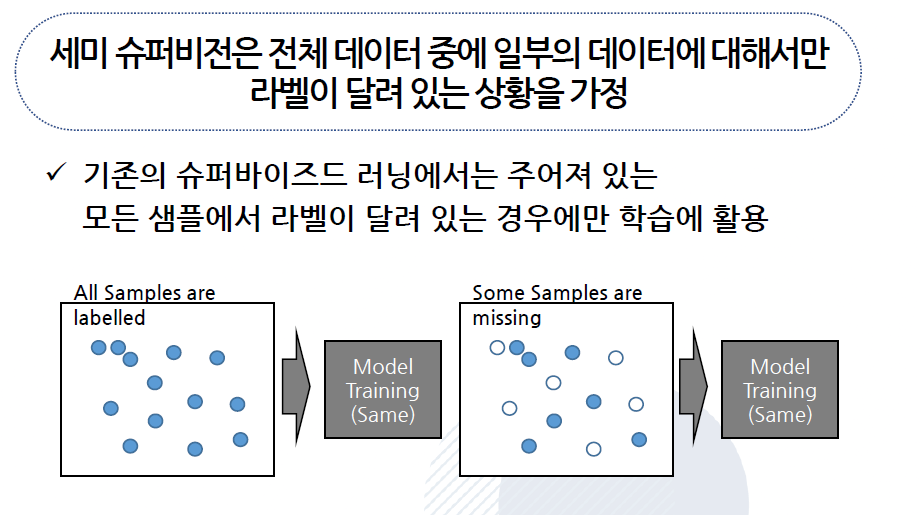

Semi-supervised learning

So what is semi-supervised learning?

Semi-supervised learning is a blend of supervised and unsupervised learning.

It makes use of unlabeled data, that is, data that has only features and no labels.

It is a machine learning technique that trains on both the labeled data used in supervised learning and new unlabeled data.

Unlabeled data can be collected in large quantities, for example from the internet, so if semi-supervised learning can exploit it effectively, much more data becomes usable.

With supervised learning alone, only the labeled portion of such a dataset can be trained on.

Because data without labels cannot be used there, only the very small labeled subset was actually used for training.

With semi-supervised learning, however, the amount of usable data can be far larger.

Training approach

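For example, suppose we collect 10,000 comments and want to run positive/negative sentiment analysis on them.

However, only 50 sentences have been manually labeled (positive/negative) so far.

Instead of labeling the rest of the data by hand, we can choose among the following options.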

- Build a supervised model using the 50 labeled examples. Because so few samples are available, the model is likely to perform poorly.

- Build an unsupervised model on the unlabeled data and group the samples into two clusters. The data may naturally form several smaller clusters, and forcing it into two groups may not produce the intended positive/negative split.

- Build a semi-supervised model using both the labeled data and all of the unlabeled data. The 50 labeled examples are then used to assign labels to the rest of the data, which gives a larger dataset for building the supervised sentiment prediction model.
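The third option can be sketched with self-training (pseudo-labeling), which is one common semi-supervised technique and not necessarily the one the referenced posts have in mind. In this toy version I assume each comment has already been turned into a 2-D feature vector, and I use a nearest-centroid classifier with a distance margin as the confidence score. The data, the `min_margin` threshold, and all function names are assumptions made for illustration.

```python
import math


def centroid(points):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(points[0])
    return [sum(p[i] for p in points) / len(points) for i in range(dim)]


def fit(labeled):
    """Nearest-centroid model: map each label to the mean of its samples."""
    by_label = {}
    for x, y in labeled:
        by_label.setdefault(y, []).append(x)
    return {y: centroid(xs) for y, xs in by_label.items()}


def predict_with_margin(model, x):
    """Return (label, margin). The margin is the gap between the distances
    to the two nearest centroids; this assumes at least two classes."""
    dists = sorted((math.dist(x, c), y) for y, c in model.items())
    (d1, y1), (d2, _) = dists[0], dists[1]
    return y1, d2 - d1


def self_train(labeled, unlabeled, min_margin=1.0, max_rounds=10):
    """Repeatedly pseudo-label confident unlabeled points and refit."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        model = fit(labeled)
        confident, rest = [], []
        for x in pool:
            y, margin = predict_with_margin(model, x)
            (confident if margin >= min_margin else rest).append((x, y))
        if not confident:
            break  # nothing left that the model is confident about
        labeled.extend(confident)
        pool = [x for x, _ in rest]
    return fit(labeled), labeled, pool


# Two hand-labeled samples stand in for the 50 labeled comments.
labeled = [([0.0, 0.0], "negative"), ([10.0, 10.0], "positive")]
unlabeled = [[1.0, 1.0], [2.0, 1.5], [9.0, 9.5], [8.0, 8.0], [5.2, 5.0]]

model, expanded, still_unlabeled = self_train(labeled, unlabeled)
print(len(expanded), "labeled samples after self-training")
print("left unlabeled:", still_unlabeled)
```

Points near the boundary between the two classes stay unlabeled because the margin threshold rejects them. That is the safety valve in this sketch: a wrong pseudo-label would be fed back into training as if it were true.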

There are many training approaches; this is just one example.

Honestly, it still seems hard to understand these ideas precisely from definitions alone.

I plan to read papers on weak supervision and semi-supervised learning to learn more.

I hope the figure below helps you understand the following concepts:

- supervised learning

- semi-supervised learning

- unsupervised learning