Nesterov-accelerated Adam (NAdam)

Introduction

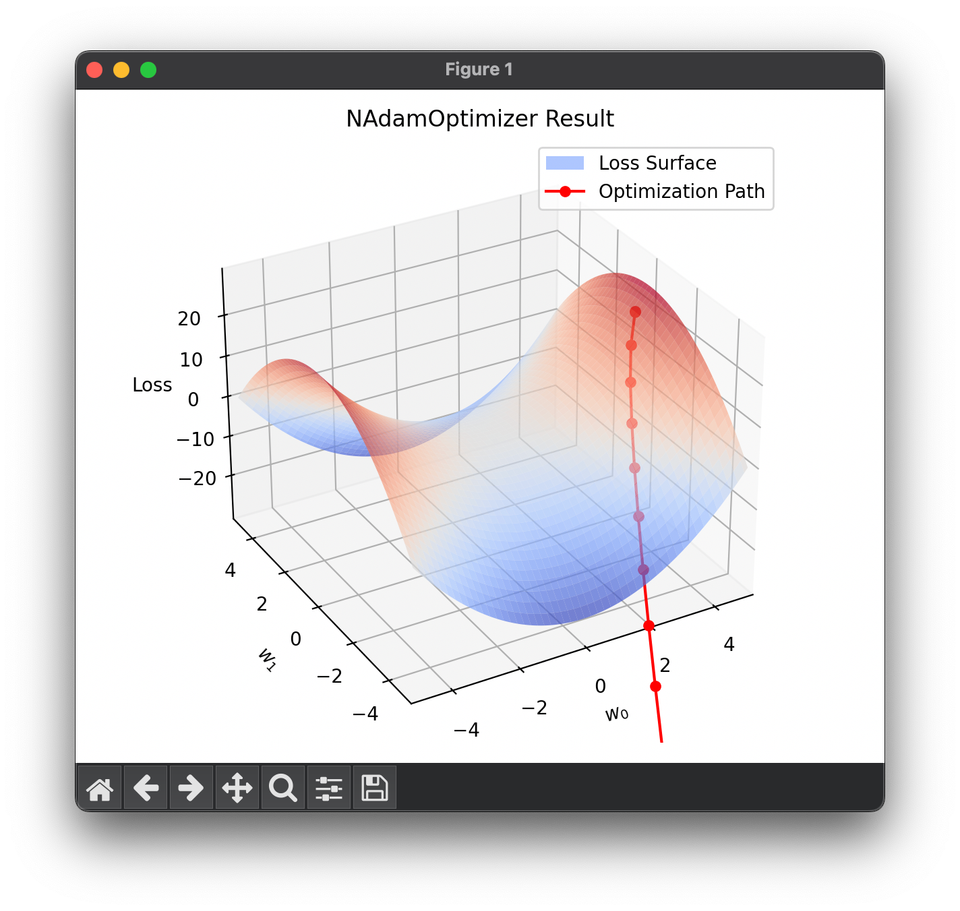

NAdam, or Nesterov-accelerated Adaptive Moment Estimation, is an optimization algorithm that combines the techniques of Adam and Nesterov momentum. It was developed to enhance the convergence properties of the Adam optimizer by integrating the predictive update step characteristic of Nesterov accelerated gradient (NAG), thus potentially leading to faster and more stable convergence in training deep learning models.

Background and Theory

Adam Optimization

Adam is an optimizer that computes an adaptive learning rate for each parameter by estimating the first moment (the mean) and the second moment (the uncentered variance) of the gradients. Its update rule is:

$$\theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{\hat{v}_t} + \epsilon}\, \hat{m}_t$$

where:

- $\theta_t$ is the parameter vector at time step $t$,

- $\eta$ is the learning rate,

- $\hat{m}_t$ and $\hat{v}_t$ are bias-corrected estimates of the first and second moments of the gradients,

- $\epsilon$ is a small constant added for numerical stability.
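A minimal NumPy sketch of a single Adam step, assuming the standard form of the update: exponential moving averages of the gradient and squared gradient, bias correction, then a step scaled by the learning rate over the root of the second moment. The function name `adam_step`, the default hyperparameters, and the toy gradient are illustrative assumptions, not taken from the text.

```python
import numpy as np


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update (hypothetical helper). `t` is the 1-based step count."""
    m = beta1 * m + (1.0 - beta1) * grad        # biased first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad ** 2   # biased second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)              # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)              # bias-corrected second moment
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v


# Toy example (assumed): gradient of f(x) = sum(x**2) is 2 * x.
theta = np.array([1.0, -2.0])
m = np.zeros_like(theta)
v = np.zeros_like(theta)
theta, m, v = adam_step(theta, grad=2.0 * theta, m=m, v=v, t=1)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so each parameter moves by roughly `lr` regardless of the gradient's magnitude.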

Nesterov Momentum

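Nesterov momentum is a variant of the momentum update that provides a "look-ahead" capability: the gradient is evaluated at the point the current velocity would carry the parameters to, which makes the update more responsive to changes in the gradient. The Nesterov update is generally formulated as:

$$v_{t+1} = \mu v_t - \eta \nabla f(\theta_t + \mu v_t)$$

$$\theta_{t+1} = \theta_t + v_{t+1}$$

where $v_t$ is the velocity, $f$ is the loss function, and $\mu$ is the momentum coefficient.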
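The look-ahead idea can be sketched as follows, assuming the common formulation in which the gradient is evaluated at the parameters shifted by the momentum-scaled velocity. The names `nesterov_step` and `grad_fn`, the step size, and the quadratic objective are assumptions chosen for illustration.

```python
import numpy as np


def nesterov_step(theta, velocity, grad_fn, lr=0.1, mu=0.9):
    """One Nesterov momentum update (hypothetical helper)."""
    lookahead = theta + mu * velocity                   # where momentum alone would land
    velocity = mu * velocity - lr * grad_fn(lookahead)  # gradient taken at the look-ahead point
    return theta + velocity, velocity


def grad_fn(x):
    # Gradient of the assumed toy objective f(x) = sum(x**2).
    return 2.0 * x


theta = np.array([1.0, -2.0])
velocity = np.zeros_like(theta)
for _ in range(100):
    theta, velocity = nesterov_step(theta, velocity, grad_fn)
```

Classical momentum would call `grad_fn(theta)` instead of `grad_fn(lookahead)`; that single change is the difference between the two methods.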

NAdam Algorithm

NAdam incorporates the benefits of both Adam's adaptive learning rates and Nesterov momentum's anticipatory updates. Its update equations modify those of Adam by replacing the bias-corrected first moment with a Nesterov-style look-ahead term:

$$\theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{\hat{v}_t} + \epsilon} \left( \beta_1 \hat{m}_t + \frac{(1 - \beta_1)\, g_t}{1 - \beta_1^t} \right)$$

where $g_t$ is the gradient at time step $t$, and $\beta_1$ is the exponential decay rate for the first moment estimates.
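One common way to motivate the look-ahead term, sketched here under the assumption that the simplified form of NAdam is intended, is to expand Adam's bias-corrected first moment. Here $m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t$ is the biased first moment and $\hat{m}_t = m_t / (1 - \beta_1^t)$ its bias-corrected version:

$$
\begin{aligned}
\hat{m}_t = \frac{m_t}{1 - \beta_1^t} &= \frac{\beta_1 m_{t-1}}{1 - \beta_1^t} + \frac{(1 - \beta_1)\, g_t}{1 - \beta_1^t} \\
&\approx \beta_1 \hat{m}_{t-1} + \frac{(1 - \beta_1)\, g_t}{1 - \beta_1^t} \\
&\;\longrightarrow\; \beta_1 \hat{m}_{t} + \frac{(1 - \beta_1)\, g_t}{1 - \beta_1^t} \quad \text{(NAdam look-ahead)}
\end{aligned}
$$

The second line approximates $m_{t-1} / (1 - \beta_1^t)$ by $\hat{m}_{t-1} = m_{t-1} / (1 - \beta_1^{t-1})$. The third line is the Nesterov substitution: the previous momentum estimate $\hat{m}_{t-1}$ is replaced by the current one $\hat{m}_t$, so the step already accounts for the momentum that the next update would apply.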

Procedural Steps

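- Initialize Parameters: Define initial parameters $\theta_0$, learning rate $\eta$, decay rates $\beta_1$ and $\beta_2$, momentum $\mu$ (taken equal to $\beta_1$ in the simplified update used here), and $\epsilon$. Set the moment estimates $m_0$ and $v_0$ to zero.

- Compute Gradients: For each parameter $\theta$, calculate the gradient $g_t$ of the loss function at iteration $t$.

- Update Biased Moments:

  - First moment (mean) estimate: $m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t$

  - Second moment (uncentered variance) estimate: $v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2$

- Correct Bias in Moments:

  - Correct first moment: $\hat{m}_t = \frac{m_t}{1 - \beta_1^t}$

  - Correct second moment: $\hat{v}_t = \frac{v_t}{1 - \beta_2^t}$

- Nesterov Update:

  - Use Nesterov's lookahead to adjust the gradient estimate: $\bar{m}_t = \beta_1 \hat{m}_t + \frac{(1 - \beta_1)\, g_t}{1 - \beta_1^t}$

  - Update the parameters: $\theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{\hat{v}_t} + \epsilon}\, \bar{m}_t$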
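The steps above can be put together in a short NumPy sketch. It assumes the simplified NAdam variant in which $\beta_1$ doubles as the momentum coefficient. The names `nadam_step`, `minimize`, and `quadratic_grad`, the hyperparameter defaults, and the quadratic test problem are all illustrative assumptions rather than a reference implementation.

```python
import numpy as np


def nadam_step(theta, grad, m, v, t, lr=0.002, beta1=0.9, beta2=0.999, eps=1e-8):
    """One simplified NAdam update (hypothetical helper). `t` is the 1-based step count."""
    # Update biased moments.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad ** 2
    # Correct bias in moments.
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    # Nesterov look-ahead: blend the corrected momentum with the current gradient.
    m_bar = beta1 * m_hat + (1.0 - beta1) * grad / (1.0 - beta1 ** t)
    # Parameter update.
    theta = theta - lr * m_bar / (np.sqrt(v_hat) + eps)
    return theta, m, v


def minimize(grad_fn, theta0, steps=3000, **kwargs):
    """Run `steps` NAdam updates starting from `theta0` with zero-initialized moments."""
    theta = np.asarray(theta0, dtype=float)
    m = np.zeros_like(theta)
    v = np.zeros_like(theta)
    for t in range(1, steps + 1):
        theta, m, v = nadam_step(theta, grad_fn(theta), m, v, t, **kwargs)
    return theta


def quadratic_grad(x):
    # Gradient of the assumed toy objective f(x) = sum((x - target)**2).
    return 2.0 * (x - np.array([3.0, -1.0]))


result = minimize(quadratic_grad, theta0=[0.0, 0.0], steps=3000, lr=0.01)
```

On this toy problem `result` should end up close to the minimizer `[3.0, -1.0]`. Real training loops would compute `grad` from mini-batches instead of a closed-form gradient.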

Applications

NAdam is employed in scenarios where the benefits of both Adam and Nesterov momentum are desired, such as in training complex neural networks where faster convergence may reduce training time and improve model performance.

Strengths and Limitations

Strengths:

- Combines the advantages of adaptive learning rates with the predictive updates of Nesterov momentum.

- Can lead to faster convergence compared to using Adam alone.

Limitations:

- Can be more sensitive to hyperparameter settings than Adam alone.

- The benefits over Adam or Nesterov momentum alone are not guaranteed and can depend heavily on the specific problem and data characteristics.

Advanced Topics

Explorations into further modifications of NAdam might include adaptive adjustments of the hyperparameters during training, or combining it with other regularization methods to enhance model generalization.
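As one concrete instance of adjusting a hyperparameter during training, some NAdam implementations replace the constant $\beta_1$ with a momentum schedule that warms up over time. The sketch below assumes the schedule $\mu_t = \beta_1 (1 - 0.5 \cdot 0.96^{\psi t})$ with $\psi = 0.004$, which matches the form documented for common deep learning libraries. The function name and defaults are illustrative and should be checked against the library in use.

```python
def momentum_schedule(t, beta1=0.9, psi=0.004):
    """Warm-up schedule for the momentum coefficient at 1-based step `t` (assumed form)."""
    return beta1 * (1.0 - 0.5 * 0.96 ** (t * psi))


# Momentum starts near beta1 / 2 and approaches beta1 as training proceeds.
schedule = [momentum_schedule(t) for t in (1, 1000, 100000)]
```

In a full implementation, $\mu_t$ and $\mu_{t+1}$ would take the place of $\beta_1$ in the look-ahead term, with the bias correction adjusted to use the running product of the schedule values.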

References

- Dozat, Timothy. "Incorporating Nesterov Momentum into Adam." ICLR Workshop, 2016.