AdamW Optimization

Introduction

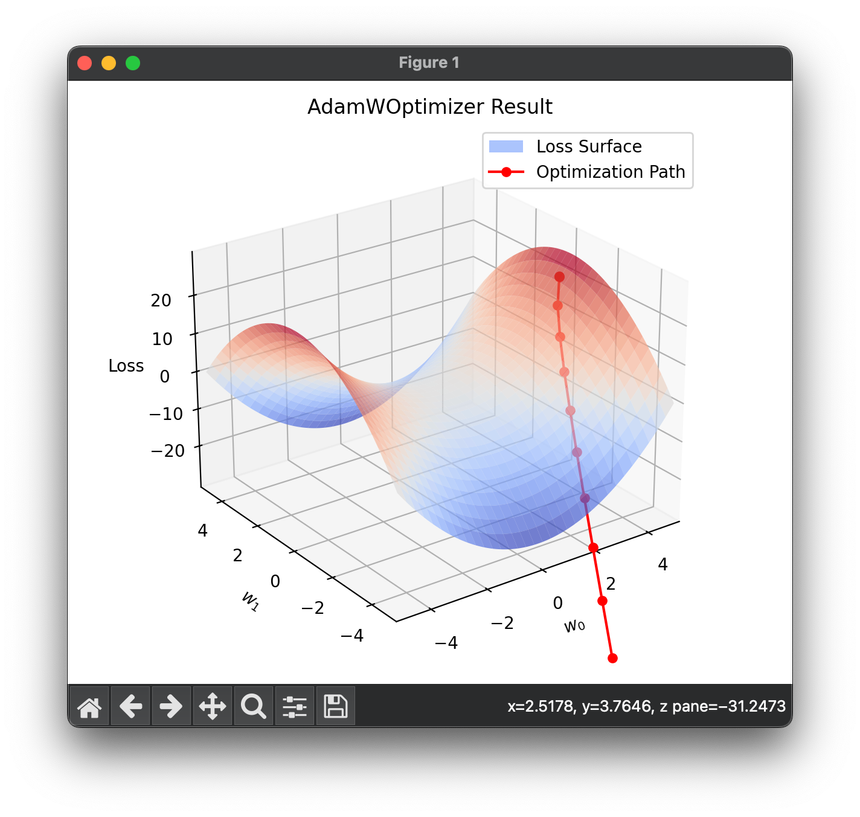

AdamW is an extension of the Adam optimization algorithm, specifically designed to better handle weight decay in the training of deep learning models. Introduced by Ilya Loshchilov and Frank Hutter in their paper "Decoupled Weight Decay Regularization," it modifies the traditional Adam optimizer by decoupling the weight decay from the optimization steps. This change addresses issues with the original Adam's approach to L2 regularization, which can lead to suboptimal application of weight decay, especially under adaptive learning rate scenarios.

Background and Theory

Adam Basics

The Adam optimizer is a method that computes adaptive learning rates for each parameter. It does so by estimating the first (mean) and second (variance) moments of the gradients. The original Adam update rule is given by:

where:

- is the parameter vector at time step ,

- is the learning rate,

- and are bias-corrected estimates of the first and second moments of the gradients,

- is a small constant added for numerical stability.

Decoupled Weight Decay

In traditional L2 regularization, the weight decay is directly incorporated into the update rule as a part of the gradient:

where is the weight decay coefficient. Adam applies this adjusted gradient to compute moment estimates, which mixes the effects of weight decay with adaptive learning rates.

AdamW modifies this by separating the weight decay:

This ensures that the weight decay is applied directly to the weights, and not through the gradient, allowing the optimizer to preserve the benefits of adaptive learning rates while effectively implementing weight decay.

Procedural Steps

- Initialize Parameters: Set initial parameters , learning rate , weight decay , , (exponential decay rates for moment estimates), and .

- Compute Gradients: Calculate the gradient of the loss function with respect to the parameters at each iteration .

- Update Moment Estimates:

- Update biased first moment estimate:

- Update biased second raw moment estimate:

- Correct Bias in Moments:

- Correct bias in first moment:

- Correct bias in second moment:

- Apply Weight Decay: Modify parameters by directly applying weight decay: .

- Update Parameters:

- Apply the adaptive learning rate to update the parameters:

Applications

AdamW is widely used in training deep neural networks where precise control over regularization is crucial, such as in convolutional neural networks (CNNs) and recurrent neural networks (RNNs). It is particularly beneficial in tasks where avoiding overfitting while maintaining fast convergence is important.

Strengths and Limitations

Strengths:

- Improves generalization by preventing overfitting through more effective application of weight decay.

- Maintains adaptive learning rate benefits, helping in faster convergence particularly in complex networks.

Limitations:

- Requires careful tuning of hyperparameters such as the learning rate and weight decay coefficient.

- May still be susceptible to issues common with adaptive learning rate methods, such as poor convergence behavior on some problems.

Advanced Topics

Further exploration into AdamW can involve hybrid approaches that combine it with other regularization techniques, or adapting it to specific types of neural network architectures to optimize performance.

References

- Loshchilov, Ilya, and Frank Hutter. "Decoupled Weight Decay Regularization." arXiv preprint arXiv:1711.05101 (2017).