Local Outlier Factor (LOF)

Introduction

The Local Outlier Factor (LOF) algorithm is a density-based outlier detection method used in data analysis and machine learning to identify anomalous data points by measuring the local deviation of density of a given data point with respect to its neighbors. Unlike global outlier detection methods, LOF considers the local density variation, making it effective in detecting outliers in datasets with varying densities.

Background and Theory

LOF relies on the concept of local density, where the density around a particular data point is compared to the densities of its neighbors. An outlier is then identified if the density around a data point is significantly lower than the density around its neighbors. This method is particularly useful in datasets where the notion of density varies across observations.

Mathematical Formulation

The LOF of a data point is calculated based on its local reachability density (LRD) and those of its neighbors. The key steps and formulas involved are as follows:

-

k-Distance: For each data point , the k-distance is defined as the distance to the nearest neighbor.

-

Reachability Distance: The reachability distance of a data point from point is defined as the maximum of the k-distance of and the actual distance between and , denoted as . It is given by:

-

Local Reachability Density (LRD): The LRD of a data point is the inverse of the average reachability distance of the data point from its neighbors, indicating the density around this point. It is computed as:

where is the set of the nearest neighbors of .

-

Local Outlier Factor: Finally, the LOF of a data point is the ratio of the average LRD of its neighbors to its own LRD. A LOF significantly greater than 1 indicates an outlier. It is given by:

Procedural Steps

- Data Preparation: Normalize the dataset if necessary, as LOF is sensitive to the scale of the data.

- k-Neighbors Identification: For each data point, identify the nearest neighbors based on a chosen distance metric.

- Compute Reachability Distances: Calculate the reachability distance between each data point and its neighbors.

- Calculate LRDs: Compute the local reachability density for each data point.

- Compute LOFs: Calculate the LOF score for each data point by comparing its density to the densities of its neighbors.

- Outlier Identification: Identify outliers as those data points whose LOF scores significantly deviate from 1.

Implementation

Parameters

n_neighbors:int, default = 10

Number of neighbors to estimate the local densities

threshold:float, default = 1.5

Cutoff value that determines whether a data point is considered an outlier

Examples

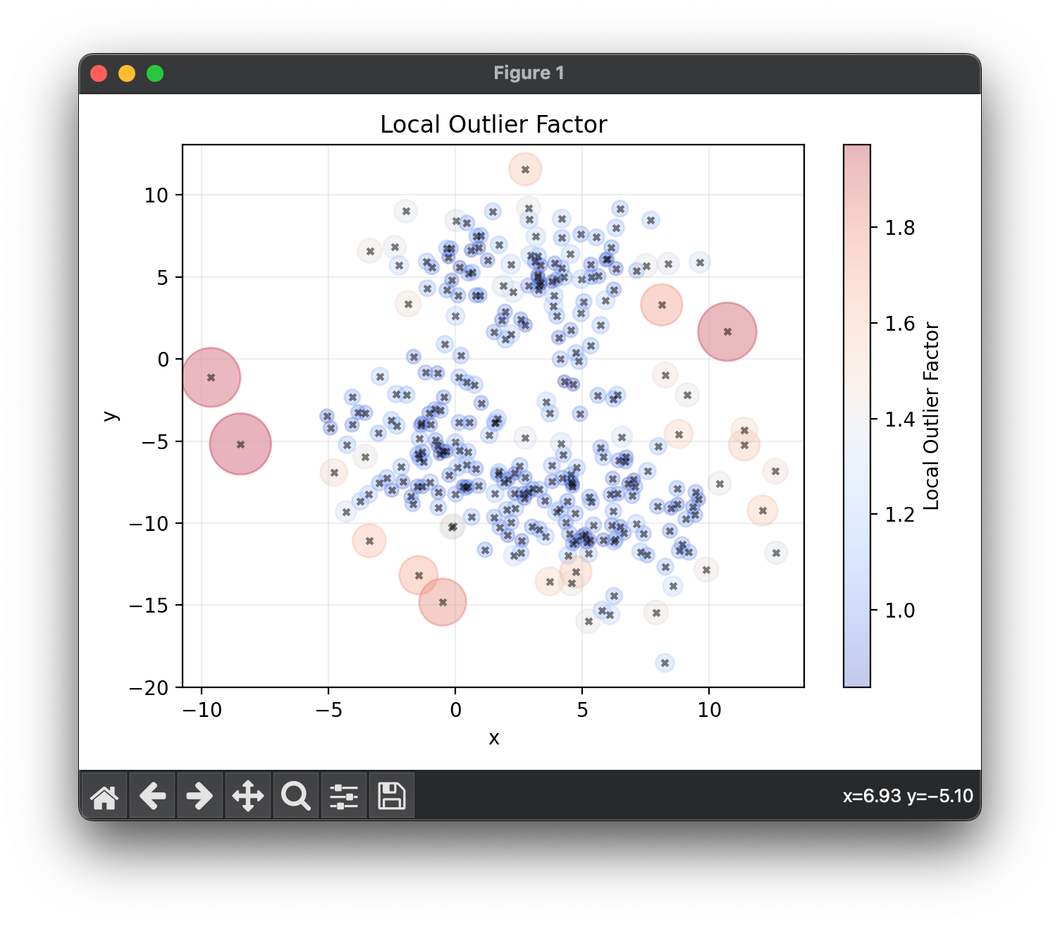

from luma.preprocessing.outlier import LocalOutlierFactor

from sklearn.datasets import make_blobs

import matplotlib.pyplot as plt

import numpy as np

np.random.seed(10)

X, y = make_blobs(n_samples=300, cluster_std=3, centers=3)

lof = LocalOutlierFactor(n_neighbors=10, threshold=1.5)

lof.fit(X)

scores = lof.get_scores(X)

scores_amp = np.exp(scores**2) * 20

sc = plt.scatter(

X[:, 0], X[:, 1], s=scores_amp, c=scores, cmap="coolwarm", alpha=0.3

)

plt.scatter(X[:, 0], X[:, 1], s=10, c="black", marker="x", alpha=0.5)

plt.colorbar(sc, label="Local Outlier Factor")

plt.xlabel("x")

plt.ylabel("y")

plt.title("Local Outlier Factor")

plt.grid(alpha=0.2)

plt.tight_layout()

plt.show()

Applications

- Anomaly Detection: Identifying anomalies in various domains such as fraud detection, network security, and health monitoring.

- Data Cleaning: Removing outliers from datasets prior to further analysis or model training.

- Unsupervised Learning: Enhancing clustering algorithms by identifying and possibly excluding outliers.

Strengths and Limitations

Strengths

- Local Sensitivity: Effectively identifies outliers in datasets with regions of varying density.

- Versatility: Applicable to any domain or dataset where outlier detection is necessary.

Limitations

- Parameter Selection: The choice of (the number of neighbors) can significantly impact the effectiveness of the algorithm, requiring careful tuning.

- Computational Complexity: The algorithm can be computationally intensive, especially with large datasets and high dimensionality.

- Scale Sensitivity: Results can vary with the scale of the data, necessitating preprocessing steps like normalization.

Advanced Topics

- Distance Metric Customization: Exploring different distance metrics (e.g., Manhattan, Euclidean, Minkowski) to better capture the structure of the data.

- Integration with Clustering Algorithms: Using LOF scores to weight or filter data points during clustering to improve cluster quality.

References

- Breunig, Markus M., et al. "LOF: identifying density-based local outliers." Proceedings of the 2000 ACM SIGMOD international conference on Management of data. 2000.

- Kriegel, Hans-Peter, Peer Kröger, and Arthur Zimek. "Outlier detection techniques." Tutorial at KDD. 2010.