Canonical Correlation Analysis (CCA)

Introduction

Canonical Correlation Analysis (CCA) is a multivariate statistical method concerned with understanding the relationships between two sets of variables. It was first introduced by Harold Hotelling in the 1930s. CCA seeks to identify and quantify the correlations between linear combinations of the variables in two datasets. The goal is to find pairs of canonical variates (linear combinations of the variables within each dataset) that are maximally correlated with each other across the two sets.

Background and Theory

Mathematical Foundations

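Consider two sets of variables, arranged as data matrices $X \in \mathbb{R}^{n \times p}$ and $Y \in \mathbb{R}^{n \times q}$, where $n$ is the number of observations, $p$ is the number of variables in the first set, and $q$ is the number of variables in the second set.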

CCA seeks vectors $a \in \mathbb{R}^{p}$ and $b \in \mathbb{R}^{q}$ such that the correlation between the projections $u = Xa$ and $v = Yb$ is maximized. These projections are known as canonical variates.

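The correlation coefficient to be maximized is defined as:

$$\rho = \operatorname{corr}(Xa, Yb) = \frac{a^\top \Sigma_{XY}\, b}{\sqrt{a^\top \Sigma_{XX}\, a}\,\sqrt{b^\top \Sigma_{YY}\, b}}$$

where $\Sigma_{XX}$ and $\Sigma_{YY}$ are the within-set covariance matrices and $\Sigma_{XY} = \Sigma_{YX}^\top$ is the cross-covariance matrix between the two sets.

The objective is to find $a$ and $b$ that maximize $\rho$.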
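A minimal NumPy sketch of this coefficient, assuming sample (divide by $n-1$) covariance estimates; the helper name `canonical_corr` and the synthetic data are illustrative only. Because $\operatorname{cov}(Xa, Yb) = a^\top \Sigma_{XY} b$, the result should coincide with the ordinary Pearson correlation of the two projections:

```python
import numpy as np

def canonical_corr(X, Y, a, b):
    """Correlation between the projections Xa and Yb, from covariance matrices."""
    n = X.shape[0]
    Xc = X - X.mean(axis=0)            # center each variable
    Yc = Y - Y.mean(axis=0)
    S_xx = Xc.T @ Xc / (n - 1)         # within-set covariance of X (p x p)
    S_yy = Yc.T @ Yc / (n - 1)         # within-set covariance of Y (q x q)
    S_xy = Xc.T @ Yc / (n - 1)         # cross-covariance (p x q)
    return (a @ S_xy @ b) / np.sqrt((a @ S_xx @ a) * (b @ S_yy @ b))

# Synthetic example: Y is partly driven by the first two columns of X
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
Y = X[:, :2] + 0.5 * rng.normal(size=(200, 2))
a = np.array([1.0, 0.5, 0.0])
b = np.array([1.0, 1.0])

rho = canonical_corr(X, Y, a, b)
pearson = np.corrcoef(X @ a, Y @ b)[0, 1]
print(rho, pearson)  # the two numbers agree up to floating-point error
```

Any fixed pair $(a, b)$ gives some correlation; CCA is the search for the pair that makes it as large as possible.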

Optimization Problem

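The maximization of $\rho$ can be transformed into an eigenvalue problem. Because $\rho$ does not change when $a$ or $b$ is rescaled, we can impose the constraints $a^\top \Sigma_{XX} a = 1$ and $b^\top \Sigma_{YY} b = 1$ and maximize $a^\top \Sigma_{XY} b$. Setting the derivatives of the corresponding Lagrangian to zero gives $\Sigma_{XY} b = \rho\, \Sigma_{XX} a$ and $\Sigma_{YX} a = \rho\, \Sigma_{YY} b$; eliminating one vector from each condition yields the generalized eigenvalue problems:

$$\Sigma_{XY}\, \Sigma_{YY}^{-1}\, \Sigma_{YX}\, a = \rho^{2}\, \Sigma_{XX}\, a$$

$$\Sigma_{YX}\, \Sigma_{XX}^{-1}\, \Sigma_{XY}\, b = \rho^{2}\, \Sigma_{YY}\, b$$

where $\rho$ is the canonical correlation. Solving these eigenvalue problems gives the canonical coefficients $a$ and $b$ for each pair of canonical variates.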
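The first generalized eigenvalue problem can be solved with NumPy alone by reducing it to a standard symmetric one through the Cholesky factor $\Sigma_{XX} = LL^\top$. This is a sketch under stated assumptions (sample covariances, full-rank $\Sigma_{XX}$ and $\Sigma_{YY}$); the function name `cca_eig` is hypothetical, and $b$ is recovered from $b \propto \Sigma_{YY}^{-1}\Sigma_{YX} a$ rather than by solving the second problem separately:

```python
import numpy as np

def cca_eig(X, Y):
    """Solve S_xy S_yy^{-1} S_yx a = rho^2 S_xx a using NumPy only."""
    n = X.shape[0]
    Xc, Yc = X - X.mean(axis=0), Y - Y.mean(axis=0)
    S_xx = Xc.T @ Xc / (n - 1)
    S_yy = Yc.T @ Yc / (n - 1)
    S_xy = Xc.T @ Yc / (n - 1)

    # Left-hand matrix of the generalized problem (symmetric, p x p)
    A = S_xy @ np.linalg.solve(S_yy, S_xy.T)

    # Reduce A a = lam S_xx a to a standard symmetric problem via the
    # Cholesky factor S_xx = L L^T:  (L^-1 A L^-T) w = lam w,  a = L^-T w
    L_inv = np.linalg.inv(np.linalg.cholesky(S_xx))
    lam, W = np.linalg.eigh(L_inv @ A @ L_inv.T)

    # eigh returns ascending eigenvalues; keep the min(p, q) largest
    k = min(X.shape[1], Y.shape[1])
    order = np.argsort(lam)[::-1][:k]
    rho = np.sqrt(np.clip(lam[order], 0.0, 1.0))
    a = L_inv.T @ W[:, order]                    # normalized so a^T S_xx a = 1

    # b is proportional to S_yy^-1 S_yx a; dividing by rho gives b^T S_yy b = 1
    b = np.linalg.solve(S_yy, S_xy.T @ a) / rho
    return rho, a, b

rng = np.random.default_rng(1)
z = rng.normal(size=(500, 1))                    # latent signal shared by X and Y
X = np.hstack([z + 0.3 * rng.normal(size=(500, 1)), rng.normal(size=(500, 2))])
Y = np.hstack([z + 0.3 * rng.normal(size=(500, 1)), rng.normal(size=(500, 1))])

rho, a, b = cca_eig(X, Y)
print(rho)  # one large canonical correlation, one near zero
```

With these normalizations, the Pearson correlation of `X @ a[:, j]` and `Y @ b[:, j]` equals `rho[j]`.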

Number of Canonical Correlations

The number of possible pairs of canonical variates is $\min(p, q)$. However, not all of these pairs need be statistically significant. Significance is commonly assessed with Bartlett's chi-squared approximation to Wilks' lambda, which tests whether the remaining canonical correlations are all zero.
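A sketch of Bartlett's sequential test, assuming the usual statistic $-\left(n - 1 - \tfrac{p+q+1}{2}\right)\sum_{i>k}\ln(1-\rho_i^2)$ with $(p-k)(q-k)$ degrees of freedom. The helper names are hypothetical, the canonical correlations are obtained here as singular values of the whitened cross-covariance, and p-values could then be read from a chi-square distribution (for example with `scipy.stats.chi2.sf(stat, df)`):

```python
import numpy as np

def canonical_correlations(X, Y):
    """Canonical correlations as singular values of the whitened cross-covariance."""
    Xc, Yc = X - X.mean(axis=0), Y - Y.mean(axis=0)
    # Cholesky factors of the scatter matrices; the 1/(n-1) factor cancels out
    Lx = np.linalg.cholesky(Xc.T @ Xc)
    Ly = np.linalg.cholesky(Yc.T @ Yc)
    K = np.linalg.solve(Lx, Xc.T @ Yc) @ np.linalg.inv(Ly).T
    return np.linalg.svd(K, compute_uv=False)    # sorted from largest to smallest

def bartlett_test(rho, n, p, q):
    """Bartlett's chi-square approximation to Wilks' lambda.

    For k = 0, 1, ..., tests H0: canonical correlations k+1, ..., min(p, q)
    are all zero. Returns (k, statistic, degrees_of_freedom) triples.
    """
    results = []
    for k in range(len(rho)):
        stat = -(n - 1 - (p + q + 1) / 2) * np.sum(np.log(1.0 - rho[k:] ** 2))
        results.append((k, stat, (p - k) * (q - k)))
    return results

rng = np.random.default_rng(2)
n, p, q = 300, 3, 2
z = rng.normal(size=(n, 1))                      # one shared latent signal
X = np.hstack([z + 0.4 * rng.normal(size=(n, 1)), rng.normal(size=(n, p - 1))])
Y = np.hstack([z + 0.4 * rng.normal(size=(n, 1)), rng.normal(size=(n, q - 1))])

rho = canonical_correlations(X, Y)
tests = bartlett_test(rho, n, p, q)
for k, stat, df in tests:
    print(k, round(stat, 1), df)
```

Testing proceeds sequentially: if the hypothesis for $k = 0$ is rejected, the first pair is retained and the test is repeated for $k = 1$, and so on.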

Procedural Steps

- Preprocessing: Standardize both sets of variables so that each variable has mean 0 and variance 1.

- Compute Covariance Matrices: Calculate the covariance matrices $\Sigma_{XX}$, $\Sigma_{YY}$, and $\Sigma_{XY}$.

- Solve the Eigenvalue Problems: Solve the generalized eigenvalue problems to find the canonical coefficients $a$ and $b$.

- Calculate Canonical Variates: Compute the canonical variates $u = Xa$ and $v = Yb$ for each significant pair of canonical correlations.

- Assess Significance: Use statistical tests to assess the significance of the canonical correlations.
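The first four steps can be sketched end to end in NumPy. This is an illustration, not the library's implementation: the names `inv_sqrt` and `cca_fit_transform` are hypothetical, and the eigenvalue problems are solved through an equivalent SVD of the whitened cross-covariance $\Sigma_{XX}^{-1/2}\Sigma_{XY}\Sigma_{YY}^{-1/2}$, whose singular values are the canonical correlations. The significance step is left out:

```python
import numpy as np

def inv_sqrt(S):
    """Inverse symmetric square root S^(-1/2) of a positive-definite matrix."""
    vals, vecs = np.linalg.eigh(S)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T

def cca_fit_transform(X, Y, n_components):
    n = X.shape[0]
    # Step 1: standardize every variable to mean 0 and variance 1
    X = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    Y = (Y - Y.mean(axis=0)) / Y.std(axis=0, ddof=1)

    # Step 2: covariance matrices (correlation matrices after standardization)
    S_xx = X.T @ X / (n - 1)
    S_yy = Y.T @ Y / (n - 1)
    S_xy = X.T @ Y / (n - 1)

    # Step 3: solve the eigenvalue problems through an SVD of the whitened
    # cross-covariance; its singular values are the canonical correlations
    W_x, W_y = inv_sqrt(S_xx), inv_sqrt(S_yy)
    P, s, Qt = np.linalg.svd(W_x @ S_xy @ W_y)
    a = W_x @ P[:, :n_components]        # canonical coefficients for X
    b = W_y @ Qt.T[:, :n_components]     # canonical coefficients for Y

    # Step 4: canonical variates u = Xa and v = Yb
    return X @ a, Y @ b, s[:n_components]

rng = np.random.default_rng(4)
latent = rng.normal(size=(400, 2))       # two signals shared by both views
X = latent @ rng.normal(size=(2, 4)) + 0.5 * rng.normal(size=(400, 4))
Y = latent @ rng.normal(size=(2, 3)) + 0.5 * rng.normal(size=(400, 3))

U, V, rho = cca_fit_transform(X, Y, n_components=2)
print(rho)
```

The SVD route avoids forming $\Sigma_{XX}^{-1}$ products explicitly and returns both coefficient sets at once; the resulting variates in `U` are uncorrelated with unit variance.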

Implementation

Parameters

- n_components (int): Dimensionality of the low-dimensional (projected) space.

Notes

- `CCA` requires two distinct datasets `X` and `Y`, in which `Y` is not a target variable.

- Because its fitting methods take two datasets rather than features and a target, `CCA` may not be compatible with several meta-estimators.

- `transform()` and `fit_transform()` return a 2-tuple of `Matrix`.

Examples

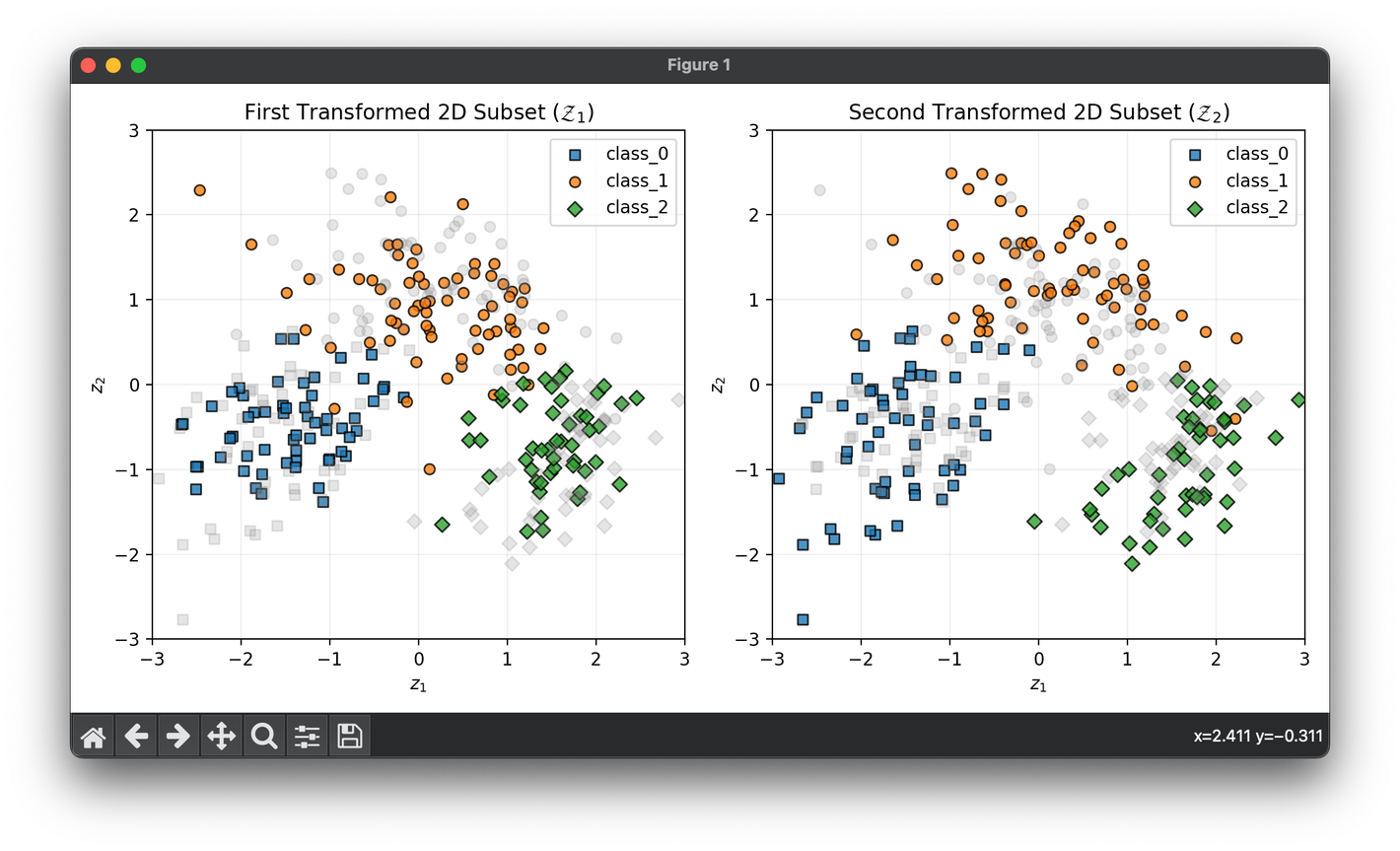

Test with the wine dataset split into two feature subsets, in $\mathbb{R}^7$ and $\mathbb{R}^6$ respectively:

```python
from luma.reduction.linear import CCA
from luma.preprocessing.scaler import StandardScaler

from sklearn.datasets import load_wine
import matplotlib.pyplot as plt
import numpy as np

data_df = load_wine()
X = data_df.data
y = data_df.target

# Standardize all 13 features, then split them into two views (7 + 6 features)
sc = StandardScaler()
X_std = sc.fit_transform(X)
X1, X2 = X_std[:, :7], X_std[:, 7:]

# fit_transform takes both views and returns a 2-tuple of projected views
cca = CCA(n_components=2)
Z1, Z2 = cca.fit_transform(X1, X2)

fig = plt.figure(figsize=(10, 5))
ax1 = fig.add_subplot(1, 2, 1)
ax2 = fig.add_subplot(1, 2, 2)

for cl, lb, m in zip(np.unique(y), data_df.target_names, ["s", "o", "D"]):
    Z1_cl, Z2_cl = Z1[y == cl], Z2[y == cl]

    # Each panel shows its own projected view in color...
    ax1.scatter(
        Z1_cl[:, 0],
        Z1_cl[:, 1],
        label=lb,
        edgecolors="black",
        marker=m,
        alpha=0.8,
    )
    ax2.scatter(
        Z2_cl[:, 0],
        Z2_cl[:, 1],
        label=lb,
        edgecolors="black",
        marker=m,
        alpha=0.8,
    )

    # ...with the other view overlaid in gray for comparison
    ax1.scatter(Z2_cl[:, 0], Z2_cl[:, 1], color="gray", marker=m, alpha=0.2)
    ax2.scatter(Z1_cl[:, 0], Z1_cl[:, 1], color="gray", marker=m, alpha=0.2)

ax1.set_xlabel(r"$z_1$")
ax1.set_ylabel(r"$z_2$")
ax1.set_title(r"First Transformed 2D Subset ($\mathcal{Z}_1$)")
ax1.set_xlim(-3, 3)
ax1.set_ylim(-3, 3)
ax1.grid(alpha=0.2)
ax1.legend()

ax2.set_xlabel(r"$z_1$")
ax2.set_ylabel(r"$z_2$")
ax2.set_title(r"Second Transformed 2D Subset ($\mathcal{Z}_2$)")
ax2.set_xlim(-3, 3)
ax2.set_ylim(-3, 3)
ax2.grid(alpha=0.2)
ax2.legend()

plt.tight_layout()
plt.show()
```

Applications

CCA is widely used in various fields, including psychology, where it might be used to understand the relationship between cognitive tests and brain activity patterns; in finance, to discover links between different sets of economic indicators; and in bioinformatics, for integrating different types of genomic data to uncover biological relationships.

Strengths and Limitations

Strengths

- Versatility: CCA can be applied to many types of data and in various fields.

- Insightful: It provides insights into the relationships between sets of variables that might not be apparent from direct correlation analysis.

Limitations

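- Data Requirements: Requires many observations relative to the total number of variables $p + q$; with small samples the covariance estimates are unstable and the leading sample canonical correlations are biased upward.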

- Interpretation Challenges: The interpretation of canonical variates can sometimes be non-intuitive, especially when the original variables are not easily relatable.

- Assumptions: Assumes linear relationships between the sets of variables.
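A small simulation illustrates the data-requirements limitation. It assumes independent Gaussian data, so every true canonical correlation is zero, and computes the largest sample canonical correlation as the cosine of the smallest principal angle between the two centered column spaces (singular values of $Q_X^\top Q_Y$ from QR factorizations). The helper name `first_canonical_corr` is hypothetical:

```python
import numpy as np

def first_canonical_corr(X, Y):
    """Largest canonical correlation, computed from orthonormal bases of the data."""
    Qx, _ = np.linalg.qr(X - X.mean(axis=0))   # basis for the centered X columns
    Qy, _ = np.linalg.qr(Y - Y.mean(axis=0))   # basis for the centered Y columns
    # Singular values of Qx^T Qy are the canonical correlations
    return np.linalg.svd(Qx.T @ Qy, compute_uv=False)[0]

rng = np.random.default_rng(3)
p = q = 10
results = {}
for n in (30, 5000):
    X = rng.normal(size=(n, p))
    Y = rng.normal(size=(n, q))   # drawn independently of X: true correlations are 0
    results[n] = first_canonical_corr(X, Y)
print(results)  # the small sample shows a much larger, spurious correlation
```

With only 30 observations for 20 variables, CCA finds a strong but meaningless correlation; with 5000 observations the estimate falls close to its true value of zero.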

Advanced Topics

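- Regularized CCA: For high-dimensional data, where $\Sigma_{XX}$ or $\Sigma_{YY}$ may be singular (for example when $p$ or $q$ exceeds $n$), regularization can be applied to the canonical coefficients to make the problem well-posed and prevent overfitting.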

- Kernel CCA: Extends CCA to nonlinear relationships by applying the kernel method, allowing the analysis of more complex data structures.

- Sparse CCA: Aims to achieve sparse representations of the canonical variates, making the results easier to interpret by selecting only a subset of all available variables.
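One common form of regularized CCA adds a ridge term to each within-set covariance, replacing $\Sigma_{XX}$ with $\Sigma_{XX} + \lambda_x I$ and $\Sigma_{YY}$ with $\Sigma_{YY} + \lambda_y I$, which keeps them invertible even with more variables than observations. The sketch below assumes this ridge variant; `ridge_cca`, `reg_x`, and `reg_y` are hypothetical names, and the penalty values are arbitrary rather than tuned:

```python
import numpy as np

def inv_sqrt(S):
    """Inverse symmetric square root S^(-1/2) of a positive-definite matrix."""
    vals, vecs = np.linalg.eigh(S)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T

def ridge_cca(X, Y, reg_x, reg_y, n_components=1):
    """CCA with a ridge term added to each within-set covariance matrix."""
    n, p = X.shape
    q = Y.shape[1]
    Xc, Yc = X - X.mean(axis=0), Y - Y.mean(axis=0)
    S_xx = Xc.T @ Xc / (n - 1) + reg_x * np.eye(p)   # invertible even if p >= n
    S_yy = Yc.T @ Yc / (n - 1) + reg_y * np.eye(q)
    S_xy = Xc.T @ Yc / (n - 1)
    W_x, W_y = inv_sqrt(S_xx), inv_sqrt(S_yy)
    P, s, Qt = np.linalg.svd(W_x @ S_xy @ W_y)
    a = W_x @ P[:, :n_components]
    b = W_y @ Qt.T[:, :n_components]
    return s[:n_components], a, b

# More variables than observations: the unregularized S_xx and S_yy are singular
rng = np.random.default_rng(5)
n, p, q = 50, 100, 80
z = rng.normal(size=(n, 1))                        # shared latent signal
X = z @ rng.normal(size=(1, p)) + rng.normal(size=(n, p))
Y = z @ rng.normal(size=(1, q)) + rng.normal(size=(n, q))

s, a, b = ridge_cca(X, Y, reg_x=1.0, reg_y=1.0)
r = np.corrcoef(X @ a[:, 0], Y @ b[:, 0])[0, 1]    # plain correlation of projections
print(s[0], r)
```

The returned `s` is a regularized canonical correlation and is not identical to the plain correlation `r` of the projections; in practice the penalties would be chosen by cross-validation.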

References

- Hotelling, H. "Relations Between Two Sets of Variates." Biometrika, vol. 28, no. 3/4, 1936, pp. 321–377.

- Hardoon, D. R., Szedmak, S., and Shawe-Taylor, J. "Canonical Correlation Analysis: An Overview with Application to Learning Methods." Neural Computation, vol. 16, no. 12, 2004, pp. 2639–2664.

- Thompson, B. "Canonical Correlation Analysis." Encyclopedia of Statistics in Behavioral Science, 2005.