Recursive Feature Elimination (RFE)

Recursive Feature Elimination (RFE) is a feature selection method used in machine learning to identify and select features by recursively considering smaller and smaller sets of features. It is a backward selection technique that ranks features by their importance, eliminates the least important features, and builds a model on those that remain. This process repeats until the desired number of features is selected. RFE is particularly useful when dealing with datasets where the number of features is much larger than the number of observations or when identifying the most significant features is crucial for model interpretation.

Introduction

Feature selection is a critical step in the machine learning pipeline. It not only helps in improving the model's performance by eliminating irrelevant or redundant features but also in reducing the computational cost and enhancing model interpretability. RFE stands out among feature selection techniques for its ability to efficiently identify the most relevant features for a given predictive model.

Background and Theory

RFE is based on the idea that a model can be trained with the entire set of features and the importance of each feature can be obtained either through a coefficient in a linear model or through feature importance scores in tree-based models. The least important features are pruned from the current set of features, the model is retrained, and feature importance is assessed again. This process is repeated until a specified number of features is reached.

Steps Involved in RFE:

- Train the Model: Train the model on the entire set of features and compute the importance of each feature.

- Rank and Eliminate: Rank the features by their importance, remove the least important feature(s).

- Reiterate: Re-train the model on the reduced set of features.

- Termination: Repeat steps 2 and 3 until the desired number of features is obtained.

Mathematical Formulation

Let the initial set of features be denoted by and the target number of features by . The RFE algorithm seeks to find a subset with that optimizes the performance of a given machine learning model. The selection process involves iteratively reducing the feature set size based on the feature importance scores, , derived from the model.

The feature elimination step at each iteration can be defined as:

where represents the set of features at iteration , starting with .

Implementation

Parameters

estimator:Estimator

An estimator to fit and evaluate

n_features:intfloat, default = 1

Number of features to select (0~1 value for proportion)

metric:Evaluator, default = None

Scoring metric for selecting features

step_size:int, default = 1

Number of features to eliminate in each step

cv:int, default = 5

K-fold size for cross validation (0 to disable CV)

shuffle:bool, default = True

Whether to shuffle the dataset

fold_type:FoldType, default =KFold

Fold type

random_state:int, default = None

Seed for splitting the data

Examples

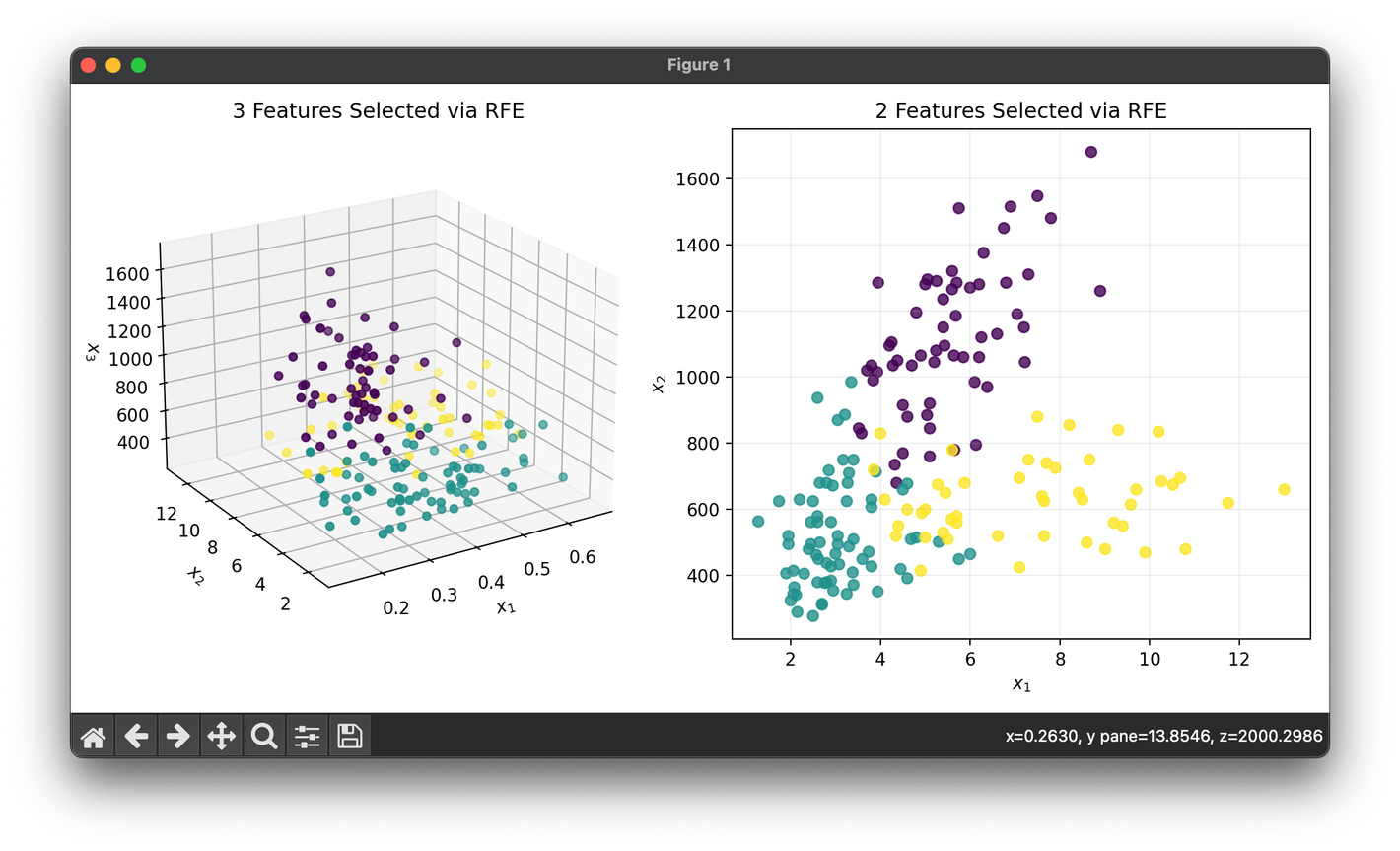

Test on the wine dataset:

from luma.reduction.selection import RFE

from luma.classifier.discriminant import LDAClassifier

from luma.model_selection.fold import StratifiedKFold

from sklearn.datasets import load_wine

import matplotlib.pyplot as plt

X, y = load_wine(return_X_y=True)

model = RFE(estimator=LDAClassifier(),

n_features=3,

step_size=1,

cv=10,

shuffle=True,

fold_type=StratifiedKFold,

random_state=42,

verbose=True)

X_3d = model.fit_transform(X, y)

model.set_params(n_features=2)

X_2d = model.fit_transform(X, y)

fig = plt.figure(figsize=(10, 5))

ax1 = fig.add_subplot(1, 2, 1, projection='3d')

ax2 = fig.add_subplot(1, 2, 2)

ax1.scatter(X_3d[:, 0], X_3d[:, 1], X_3d[:, 2], c=y)

ax1.set_xlabel(r"$x_1$")

ax1.set_ylabel(r"$x_2$")

ax1.set_zlabel(r"$x_3$")

ax1.set_title(f"3 Features Selected via {type(model).__name__}")

ax2.scatter(X_2d[:, 0], X_2d[:, 1], c=y, alpha=0.8)

ax2.set_xlabel(r"$x_1$")

ax2.set_ylabel(r"$x_2$")

ax2.set_title(f"2 Features Selected via {type(model).__name__}")

ax2.grid(alpha=0.2)

plt.tight_layout()

plt.show()

Applications

- Predictive Modeling: In scenarios where model performance and interpretability are critical, such as in healthcare for disease prediction.

- Dimensionality Reduction: When dealing with high-dimensional data in fields like genomics, finance, or image processing.

- Feature Importance Analysis: To understand the impact of various features on the prediction outcomes, which can be crucial for business decisions or scientific research.

Strengths and Limitations

Strengths:

- Efficiency: Efficiently identifies the subset of the most informative features.

- Flexibility: Can be used with any model that assigns importance scores to features.

- Improved Performance: Helps in improving the model's performance by eliminating noise and redundancy.

Limitations:

- Computationally Intensive: Can be computationally expensive for models with a large number of features.

- Model Dependency: The selection of features is dependent on the model used, which may not generalize across different models.

- Greedy Algorithm: Being a greedy approach, it may not always find the optimal subset of features.

Advanced Topics

- Cross-Validation RFE (CV-RFE): Integrating cross-validation into RFE can help in evaluating the generalizability of the selected feature subset and determining the optimal number of features more reliably.

- RFE with Model Selection: Combining RFE with model selection techniques like grid search can optimize both the feature subset and model parameters simultaneously.

References

- I. Guyon, J. Weston, S. Barnhill, and V. Vapnik, "Gene selection for cancer classification using support vector machines," Machine Learning, 46(1-3): 389-422, 2002.

- R. Kohavi and G. H. John, "Wrappers for feature subset selection," Artificial Intelligence, 97(1-2): 273-324, 1997.