Sequential Forward Selection (SFS)

Sequential Forward Selection (SFS) is a heuristic algorithm used in machine learning for feature selection. It is designed to reduce the dimensionality of the input feature set to improve the efficiency and performance of machine learning models. SFS iteratively adds features to a model based on a specified criterion until adding new features no longer improves the model's performance or until a predetermined number of features is reached.

Introduction

Feature selection is crucial in machine learning to eliminate irrelevant or redundant features, reduce computational complexity, and enhance model interpretability. Among various feature selection techniques, Sequential Forward Selection stands out for its simplicity and effectiveness in selecting a subset of relevant features that contribute most to the prediction accuracy of a model.

Background and Theory

Sequential Forward Selection starts with an empty set of features and sequentially adds features to the set. At each step, it selects the feature that, when added to the set, offers the most significant improvement in model performance. The process is repeated until a specified number of features is selected or adding new features does not improve model performance.

Steps Involved in SFS:

- Initialization: Begin with an empty set of features.

- Feature Selection: At each iteration, evaluate all features not yet included in the set and determine which single feature, when added, would improve the model's performance the most.

- Model Evaluation: Assess the performance of the model using a predefined metric (e.g., accuracy, F1 score) with the current set of features.

- Iteration: Repeat the selection and evaluation process until the inclusion of additional features does not result in improved performance or until reaching the desired number of features.

Mathematical Formulation

Let represent the dataset with features . The objective is to find a subset that maximizes the performance metric , subject to , where is the desired number of features.

The selection of the feature to add at each iteration can be mathematically defined as:

where is the set of features selected up to the -th iteration, and is the performance metric of the model trained with the features in .

Implementation

Parameters

estimator:Estimator

An estimator to fit and evaluate

n_features:floatint, default = 1

Number of features to select (0~1 value for proportion)

metric:Evaluator

Scoring metric for selecting features

test_size:float, default = 0.2

Proportional size of the validation set

cv:int, default = 5

K-fold size for cross validation (0 to disable CV)

shuffle:bool, default = True

Whether to shuffle the dataset

stratify:bool, default = False

Whether to perform stratified split

fold_type:FoldType, default =KFold

Fold type

random_state:float

Seed for splitting the data

Examples

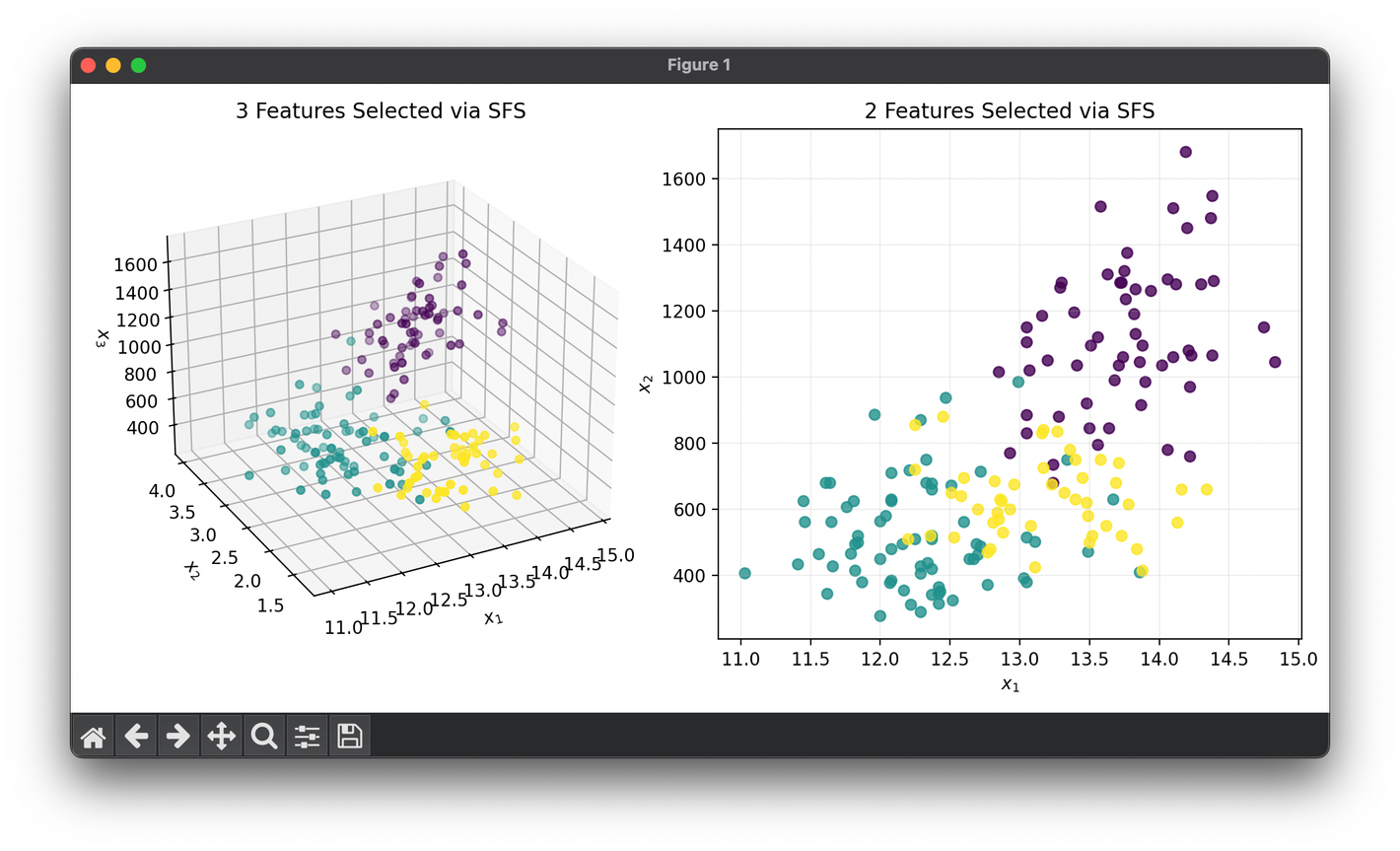

from luma.reduction.selection import SFS

from luma.classifier.discriminant import LDAClassifier

from luma.model_selection.fold import StratifiedKFold

from sklearn.datasets import load_wine

import matplotlib.pyplot as plt

X, y = load_wine(return_X_y=True)

model = SFS(estimator=LDAClassifier(),

n_features=3,

test_size=0.3,

cv=10,

shuffle=True,

stratify=True,

fold_type=StratifiedKFold,

random_state=42)

X_3d = model.fit_transform(X, y)

model.set_params(n_features=2)

X_2d = model.fit_transform(X, y)

fig = plt.figure(figsize=(10, 5))

ax1 = fig.add_subplot(1, 2, 1, projection='3d')

ax2 = fig.add_subplot(1, 2, 2)

ax1.scatter(X_3d[:, 0], X_3d[:, 1], X_3d[:, 2], c=y)

ax1.set_xlabel(r"$x_1$")

ax1.set_ylabel(r"$x_2$")

ax1.set_zlabel(r"$x_3$")

ax1.set_title(f"3 Features Selected via {type(model).__name__}")

ax2.scatter(X_2d[:, 0], X_2d[:, 1], c=y, alpha=0.8)

ax2.set_xlabel(r"$x_1$")

ax2.set_ylabel(r"$x_2$")

ax2.set_title(f"2 Features Selected via {type(model).__name__}")

ax2.grid(alpha=0.2)

plt.tight_layout()

plt.show()

Applications

Sequential Forward Selection is versatile and can be applied in various fields, including but not limited to:

- Bioinformatics: For gene selection in disease classification.

- Finance: In credit scoring models to select the most predictive features of creditworthiness.

- Natural Language Processing (NLP): To choose a subset of words or phrases that are most relevant for document classification or sentiment analysis.

Strengths and Limitations

Strengths:

- Simplicity: SFS is straightforward to implement and understand.

- Flexibility: It can be used with any classifier or regression model.

- Effectiveness: Often finds a good set of features, especially when the number of features is not too large.

Limitations:

- Computational Cost: The need to retrain the model at each step can be computationally expensive, especially with a large number of features.

- Greedy Nature: SFS is a greedy algorithm; it may not find the optimal feature set, especially in cases where features interact in complex ways.

- Overfitting Risk: Adding too many features without adequate regularization can lead to overfitting.

Advanced Topics

- Enhanced SFS Variants: Modified versions of SFS, like Sequential Floating Forward Selection (SFFS), attempt to overcome the limitations of the basic SFS by dynamically adding and removing features to find a better subset.

- Cross-validation Integration: Using cross-validation with SFS can help in assessing the generalization ability of the selected feature subset and avoiding overfitting.

References

- J. K. Pugh, L. H. Yang, and D. J. Montana, "A Fast Implementation of Sequential Forward Selection," Journal of Machine Learning Research, 2016.

- I. Guyon, A. Elisseeff, "An Introduction to Variable and Feature Selection," Journal of Machine Learning Research, 2003.