📌 본 내용은 Michigan University의 'Deep Learning for Computer Vision' 강의를 듣고 개인적으로 필기한 내용입니다. 내용에 오류나 피드백이 있으면 말씀해주시면 감사히 반영하겠습니다.

(Stanford의 cs231n과 내용이 거의 유사하니 참고하시면 도움 되실 것 같습니다)📌

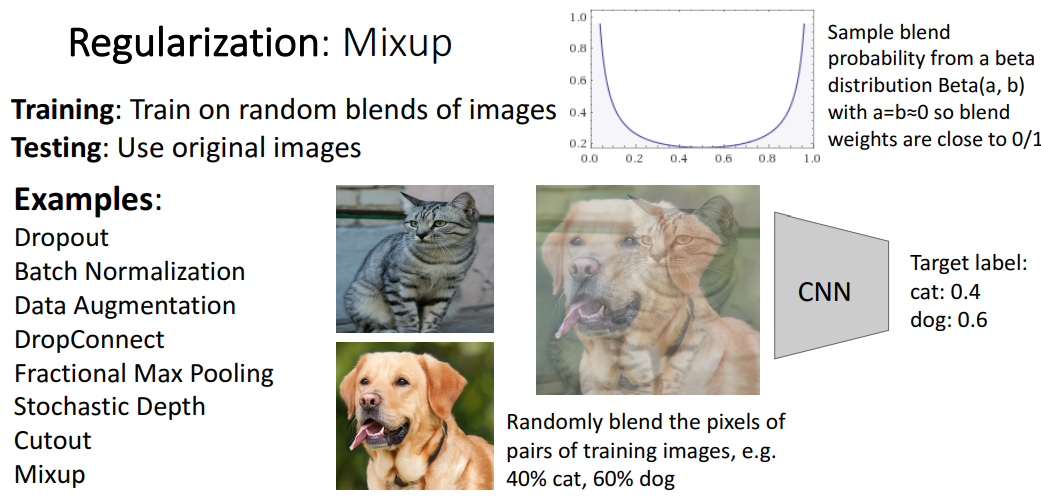

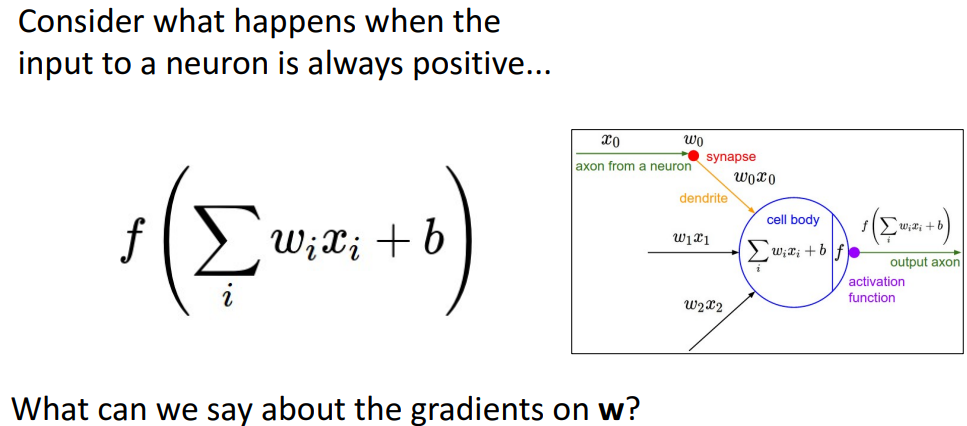

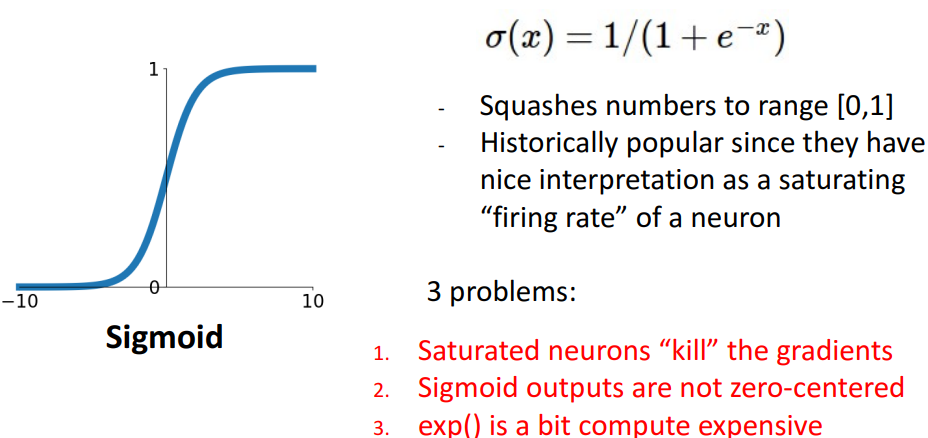

1. Activation Functions : Sigmoid

📍 3가지 문제점: saturated gradient, not zero-centered, 연산비용

-

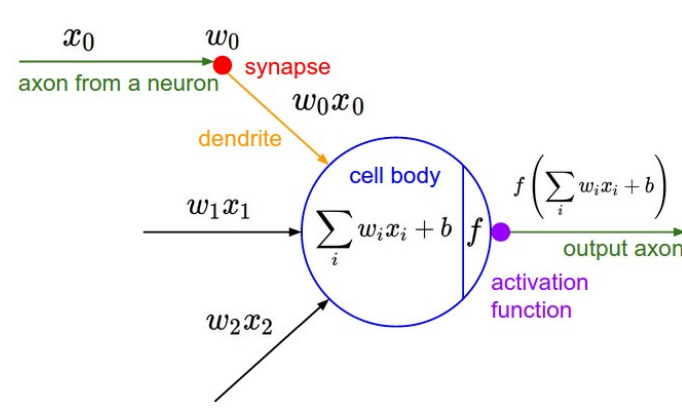

전체 흐름

- 해석

- axon from a neuron: 이전 뉴런에서의 입력

- cell body의 f: activation function으로 비선형화

- 없으면 단일 선형 layer로 축소되므로 반드시 필요

- 해석

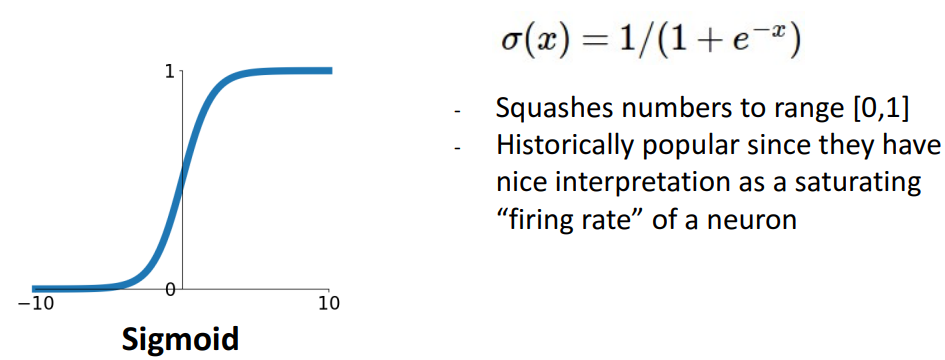

1) 소개

- 개념

- 가장 classic 함

- 존재 or 부재에 대한 확률적 해석

- 0~1사이로 만듦

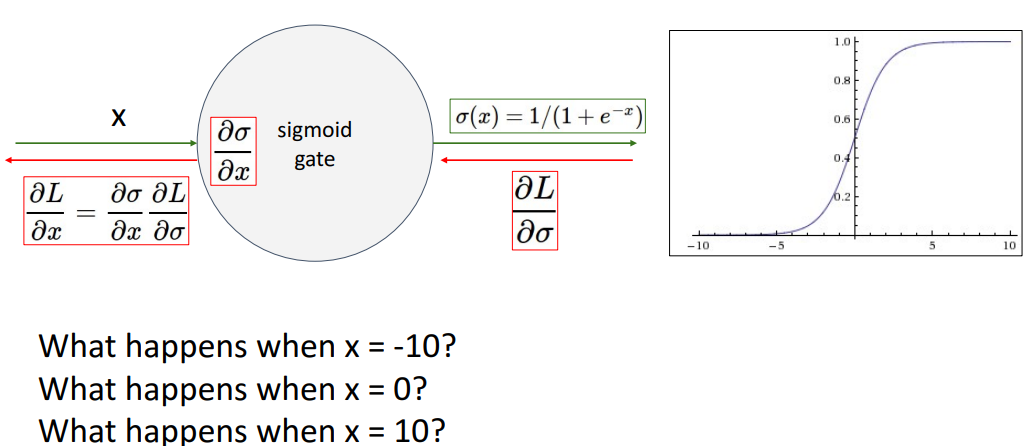

- “Firing rate” of neuron

-

다른 들어오는 뉴런으로부터 신호 받은 후, 일정 속도로 신호 발화

-

모든 입력의 총 속도에 비선형성 의존

⇒ sigmoid: 발화속도에 대한 비선형 의존성을 모델링 한 것

-

-

문제점 3가지

a. (젤 문제)포화된(Saturated) 뉴런들이 gradient를 죽임 (= 네트워크 훈련 어렵게 만듦)

- x가 매우 작을때

- local gradient(d/dx)가 0에 수렴

- downstream gradient(dL/dx)도 0에 수렴하게 됨 → 가중치 update도 0에 가까워짐(가중치 update행렬과 관련된 손실의 모든 기울기가 매우 낮을거라서) → 학습 느려짐

- x가 0일때

- x가 매우 클때

- gradient가 0에 수렴 → 매우 깊은 layer일때, 하위 layer에서 gradient 훈련 신호X (걍 0이라서)

- gradient가 0에 수렴 → 매우 깊은 layer일때, 하위 layer에서 gradient 훈련 신호X (걍 0이라서)

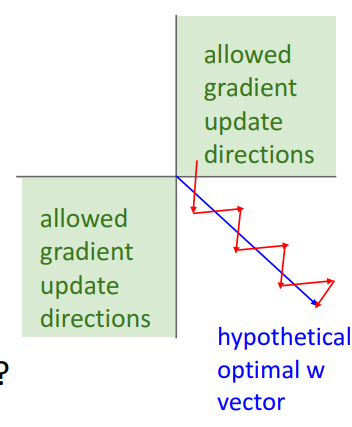

b. sigmoid output들은 zero-centered가 아님

- (원소 1개일때) 가정

- 모든 input neuron이 항상 +라면 W gradient는 어떻게 될까?

-

(local gradient)가 항상 +가 됨

-

(upstream gradient)가 항상 +가 됨 (모든 손실or 기울기가 양수, Wi에 대한 모든 손실 or 기울기가 양수)

⇒ W에 대한 모든 기울기가 동일 부호 갖게 됨

(=모두 양, 음이 된다는 제약 때매, 가중치의 특정값에 도달하는 경사하강법 단계 만들기 어려움)

-

부연설명

- W초기값이 원점이고, 손실 최소화 위한 가중치 값은 원점에서 오른쪽 하단으로 이동위해 W1은 +단계, W2는 -단계여야 하는데, 둘다 같은 부호면 해당 사분면에 정렬된 단계 수행방법 X

- 경사하강절차가 해당 방향으로 진행하는 방법

- 모든 경사가 위로 이동하는 지그재그 패턴

- 경사하강절차가 해당 방향으로 진행하는 방법

⇒ 결론) not zero-centered한 문제 때문에, train시 매 update마다 한쪽에 치우치니까 매우 불안정함

- W초기값이 원점이고, 손실 최소화 위한 가중치 값은 원점에서 오른쪽 하단으로 이동위해 W1은 +단계, W2는 -단계여야 하는데, 둘다 같은 부호면 해당 사분면에 정렬된 단계 수행방법 X

-

- 모든 input neuron이 항상 +라면 W gradient는 어떻게 될까?

- (원소 여러개일때) Minibatch일때

- not zero-centered한 문제 완화됨

- 미니배치에 대해 모두를 평균내면, 때로는 양수, 음수 나올수 있어서

- not zero-centered한 문제 완화됨

c. 지수함수의 계산비용이 비쌈

- 지수함수는 많은 clock cycle이 돌아서 비쌈

- cf) relu와 sigmoid 비교했을때, sigmoid가 훨 오래걸림

- x가 매우 작을때

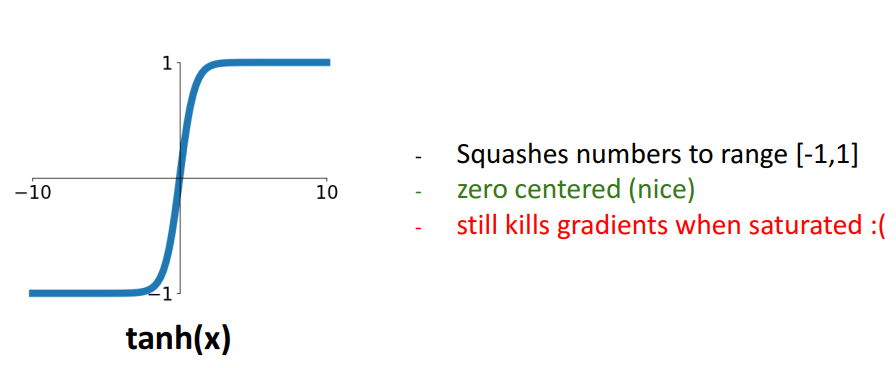

2. Activation Functions : Tanh

📍 문제점: saturated gradient

- 개념

- Scaled & Shifted version of Sigmoid

- [-1,1] 범위

- zero-centered함

- 여전히 saturated할때 gradient가 죽음

- saturating non-linearity를 neural network에 사용해야된다면, tanh>sigmoid 사용하는게 합리적

- 그래도 saturated 문제때매 엄청 좋은 선택X

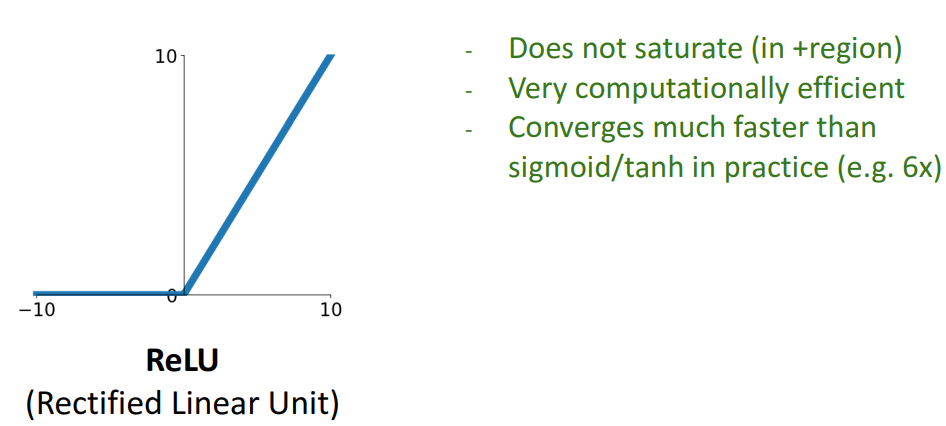

3. Activation Functions : ReLU, Leaky ReLU

📍 문제점: not zero-centered, -일때 gradient vanishing

1) relu

-

개념

- + 영역에서 saturate되지 않음 (=기울기 소실X, killing gradient X)

- 연산 비용 효율적 (cheapest 비선형함수)

- cf) binary와 같이 구현가능, 간단한 임계값만 고려해서 계산비용↓

- sigmoid, tanh보다 매우 빨리 수렴

- cf) 5000 layer같이 매우 깊은 layer면, sigmoid로 수렴하기 매우 힘들것 (batch norm 안쓸때)

-

문제점

-

Not zero-centered output (sigmoid와 동일문제)

- relu는 음수X, 모두 + or 0임

- 이런 문제가 있긴하지만 gradient vanishing처럼 심각한 문제는 아니라 괜찮음

-

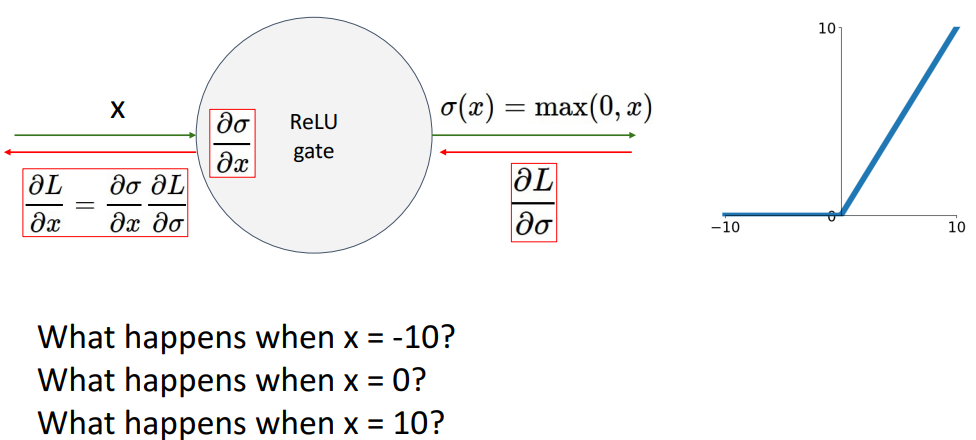

음수일때의 기울기 문제

-

x가 매우 작을때 (dead relu; x<0이면 완전히 학습X)

- local gradient(d/dx)가 0

- downstream gradient(dL/dx)도 0

- cf) 그러면 sigmoid보다 더 안좋은것 아닌가? (sigmoid는 0에 수렴하는데 여기선 아예 0인데?) → 그래도 completely 0이 아녀서 학습 가능

- cf) 그러면 sigmoid보다 더 안좋은것 아닌가? (sigmoid는 0에 수렴하는데 여기선 아예 0인데?) → 그래도 completely 0이 아녀서 학습 가능

-

x가 0일때

-

x가 매우 클때

- local gradient(d/dx)가 1

-

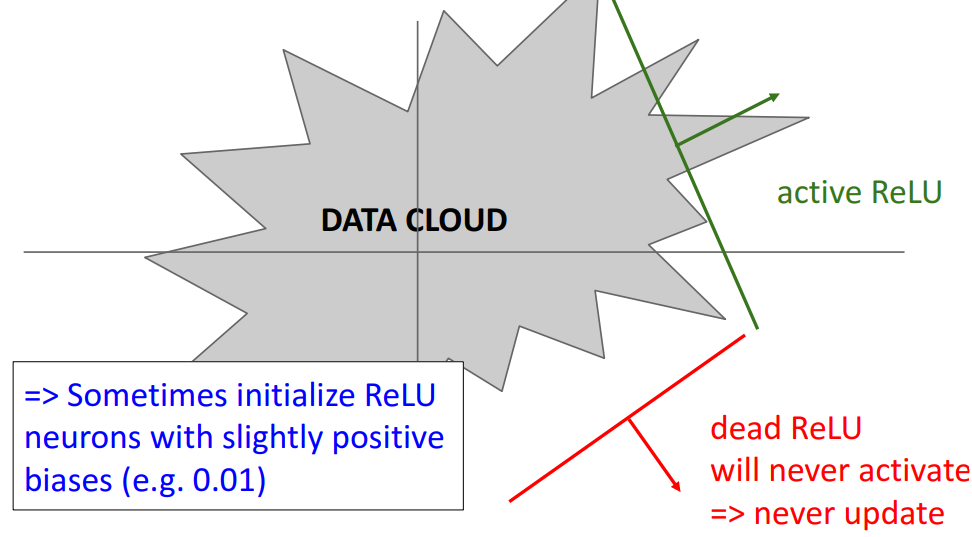

cf) Dead relu, Active relu

- active relu

- gradient 받고 정상적으로 train함

- dead relu

- 이 문제는 모든 데이터가 음수일때 발생, 일부가 + 이면 ㄱㅊ

- 절대 train 불가

- 극복 방법 (trick)

- 0.01같이 조금의 positive 기울기로 초기화 (Leaky relu)

- active relu

-

-

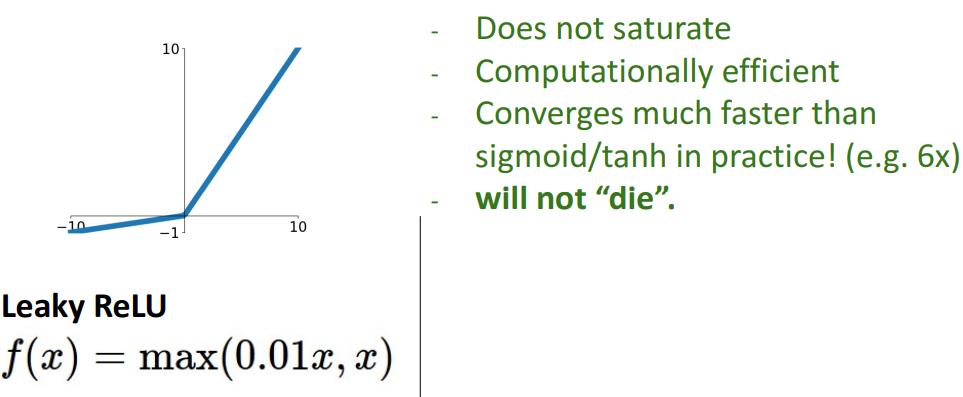

2) Leaky relu

- 개념

- 음수일때 작은 + 를 포함함

- 0.01 → hyperparameter임 (각자의 network에 맞게 학습 필요)

- 장점

- saturate되지 않음

- 효율적 연산 비용

- sigmoid, tanh보다 훨씬 빠른 수렴속도

- gradient vanishing되지 않음 ( local gradient가 0이 될 일이 없어서; 음, 양 모두에서)

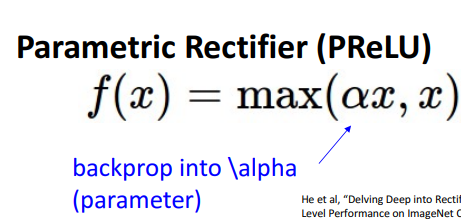

3) PReLU

- 개념

- leaky relu에서 이어진 것

- 를 학습해서 알맞게 가져옴 (learnable parameter)

- 스스로 학습 파라미터를 갖고 있는 비선형 함수

- backprop into \alpha

- 에 backprop해서 에 대한 손실 도함수 계산 후, 에 대한 gradient decent step 만들기

- 문제점) 0에서 미분 불가능 → 해결) 두 방향 중 한 쪽을 고르기 (자주발생X여서 신경안써도됨)

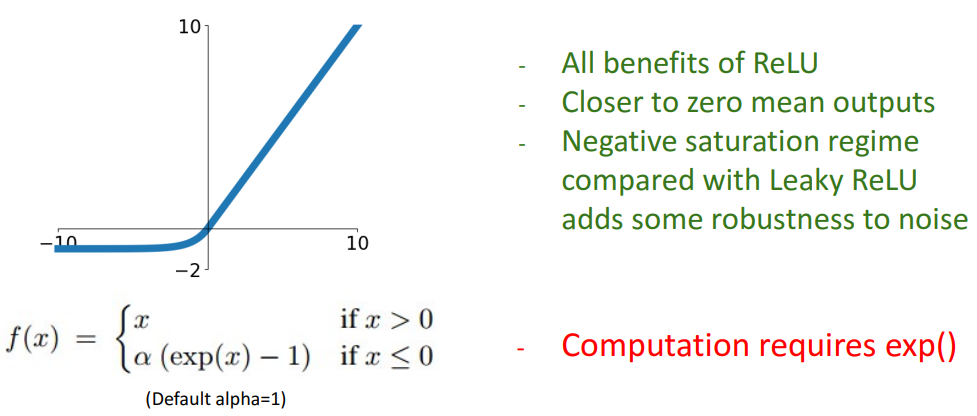

4) ELU (Exponential Linear Unit)

- 개념

- relu보다 더 부드럽고, zero-centered 경향 ↑

- 수식

- (if x≤0)

- (default =1)

- zero-gradient 피하기 위함

- 약간 sigmoid 모양

- (if x≤0)

- 문제점

- 여전히 지수함수 포함

- 때매 학습해야됨

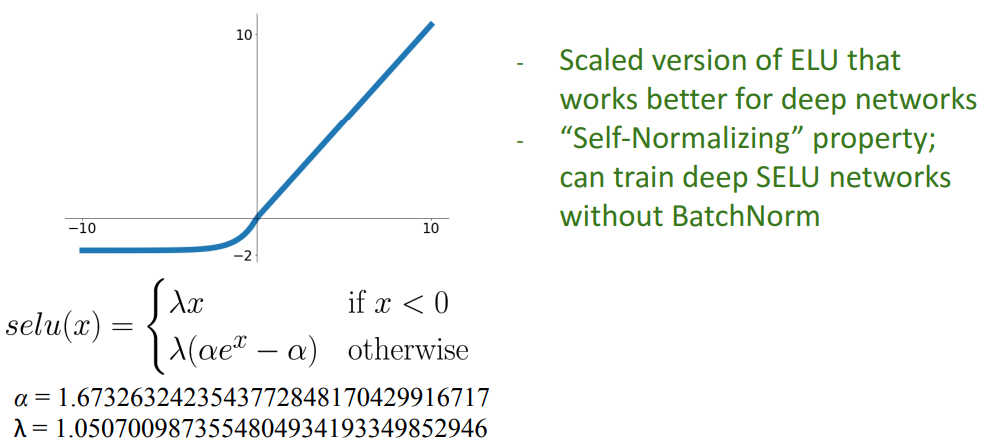

5) SELU (Scaled Exponential Linear Unit)

- 개념

- Scaled version of ELU

- batch norm 제외하고, 깊은 SELU 네트워크 학습 가능

- 장점

- deep neural network + SELU = self normalizing property

= layer가 깊어질수록 → 자기 정규화 속성 ↑

= 활성화 함수 잘 작동 ↑ & 유한한 값으로 수렴

= batch norm과 같은 정규화 제외 가능

- deep neural network + SELU = self normalizing property

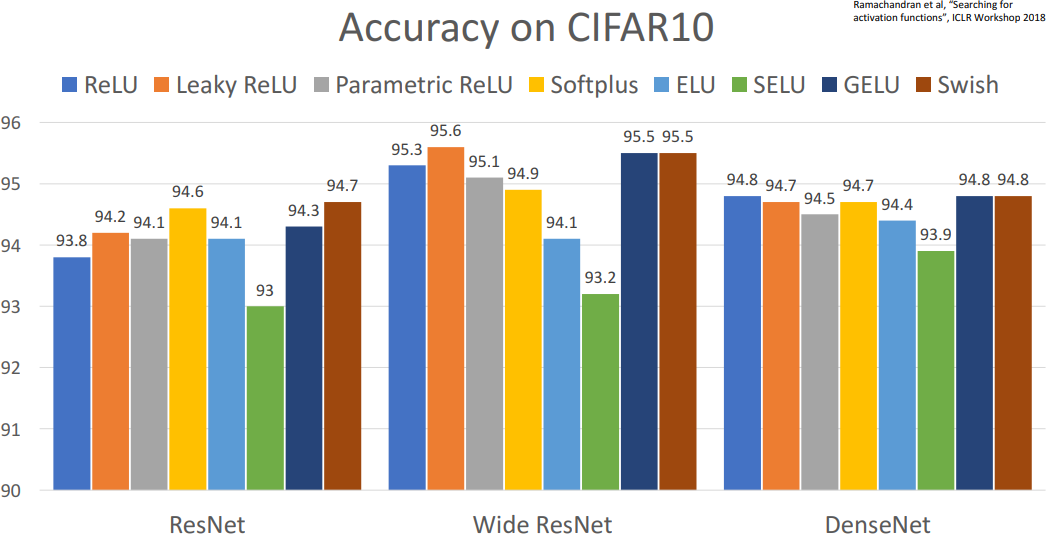

4. Activation Functions : 전체 비교

- 걍 relu써라

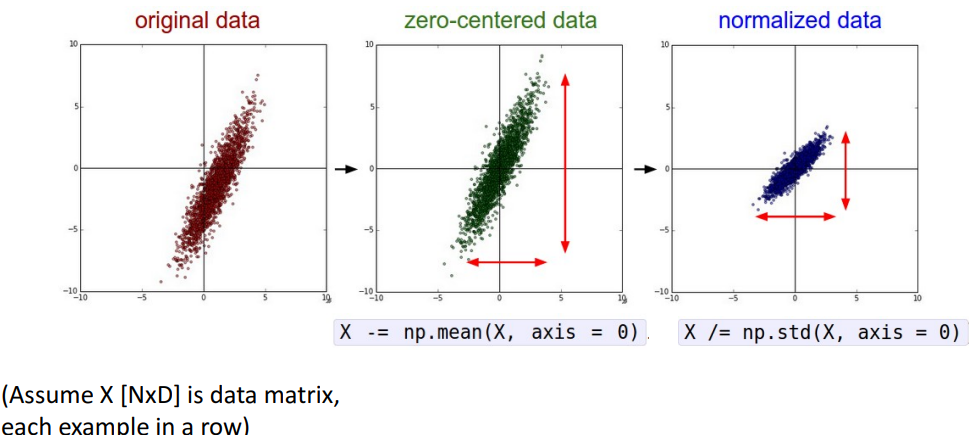

5. Data Preprocessing

1) 개념

- 더 효율적 training 위해

2) 방법 2가지

(image data인 경우)

a. zero-center : 평균을 빼서 원점으로 가져옴

- 이렇게 해야 하는 이유?

-

이전에 sigmoid의 문제점으로 gradient가 항상 + or -면, W update도 항상 + or - 되는 문제 지님

-

비슷하게, 여기서도 train data가 모두 + or - 면, W update도 모두 항상 + or -.

⇒ 제한적으로 update될 수 밖에 없음

-

b. normalized : 동일 분산 갖도록 크기 scaling (표준편차로 나눠서)

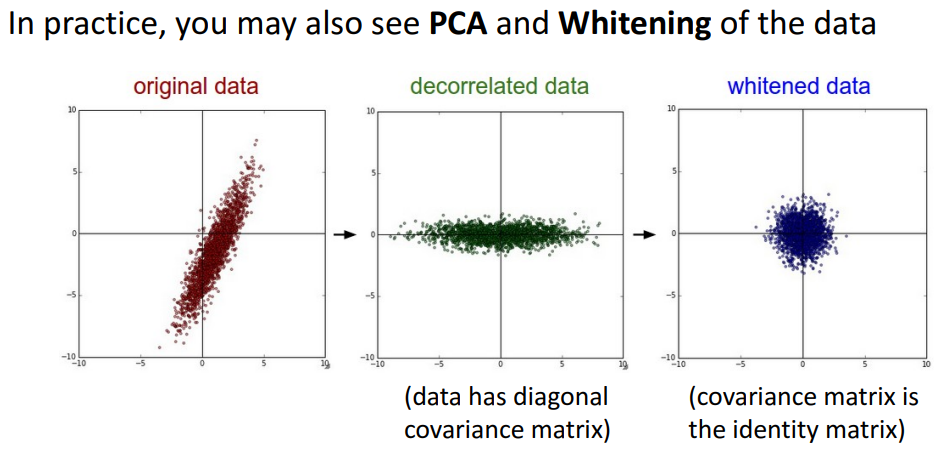

(input이 저차원, 이미지 아닌 경우)

-

원점 중심으로 옮기고 → rotate함

-

decorrelated data

- 공분산 matrix ?

-

whitened data

- identity matrix

-

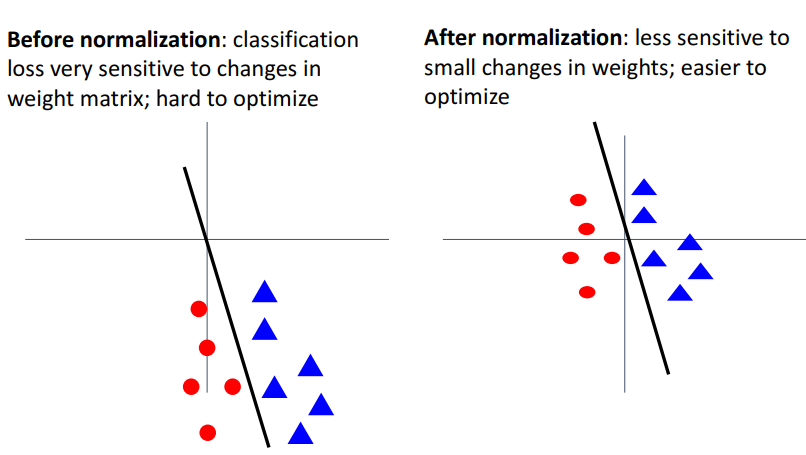

normalize 전, 후 비교

- (before norm) 원점으로부터 멀면, weight matrix의 작은 변화에도 큰 변화 발생→ optimization process 어렵게 만듦

- ex. -2x+1 일때 zero-centered 되지 않으면, 함수가 -2.1x+1로 바뀔때, 데이터 분류 상황이 많이 바뀜 → classification loss 많이 변화 → optimization process 어렵게 만듦

- (after norm) zero-centered 되어있어서, W의 작은 변화에 덜 민감

- (before norm) 원점으로부터 멀면, weight matrix의 작은 변화에도 큰 변화 발생→ optimization process 어렵게 만듦

3) 관련 질문들

- Q1. 이런 전처리를 train, test에 적용?

A1. 항상 train에 적용, test에서는 같은 정규화 사용

- Q2. batch-norm사용시에도 전처리 필요?

A2. batch norm을 모든 처리 이전 맨 첫단계에서 사용시 안해도 됨.

but 전처리를 직접 하는거 보단 성능 낮을듯

⇒ 실무에선 전처리 → batch norm 둘다 사용

6. Weight 초기화

📍 Xavier 출현배경 + Xavier에 대해

1) 방법 3가지 (모두 문제있음)

a. W=0, b=0으로 초기화

-

문제점

- 모든 output들이 0이되고, 모든 gradient가 동일해짐

= output은 input과 관련이 없어짐 ⇒ gradient =0이 돼서 totally stuck됨 - 대칭이 깨지지X (계속 같은 gradient 학습) → 학습 불가됨

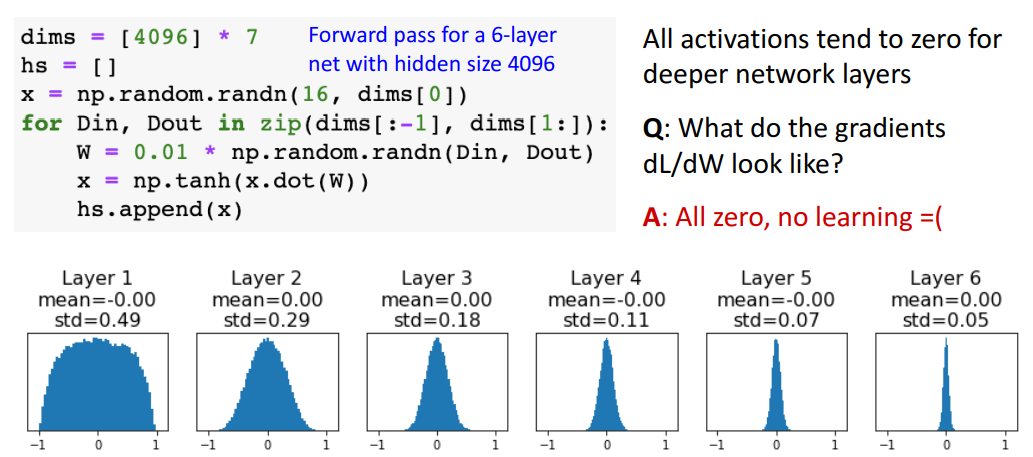

b. small random 숫자들로 초기화

- 모든 output들이 0이되고, 모든 gradient가 동일해짐

-

문제점

-

deeper network에서 문제 발생

= local gradient들이 모두 0이 됨 → downstream gradient도 0이 돼서 학습 X-

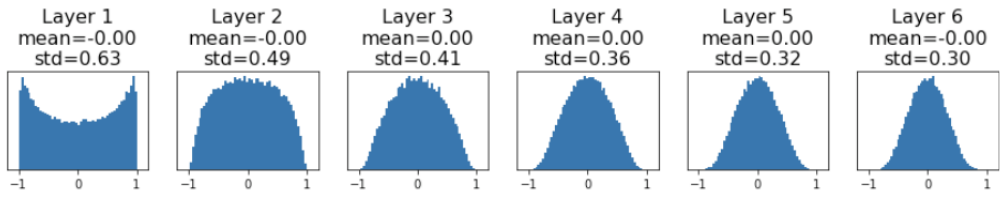

증명

- 해석

- 각 6개의 layer의 hidden unit값들을 시각화한것

- hidden state = W가 Din, Dout의 사이에 small random값으로 초기화 되어 x와 내적한값

- 이 hidden state들의 기울기가 점점 0에 수렴하게 됨

- cf) weight의 local gradient = 이전 layer의 activation

- 결과

- layer가 깊어질수록 activations가 0에 수렴 (학습에 매우 bad)

⇒ local gradient들이 모두 0이 됨 → downstream gradient도 0이 돼서 학습 X

- layer가 깊어질수록 activations가 0에 수렴 (학습에 매우 bad)

- 해석

-

-

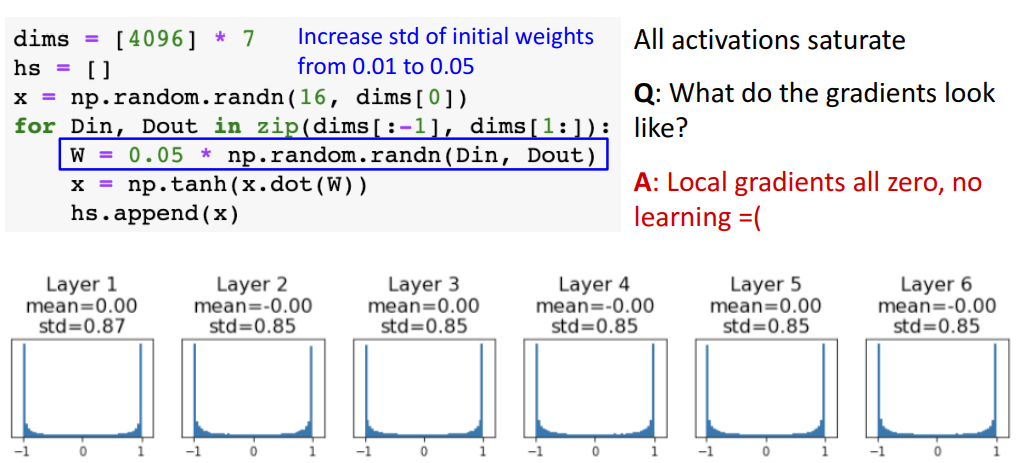

c. W를 조금 더 큰 숫자로 초기화

-

문제점

-

local gradient들이 모두 0이 됨 → downstream gradient도 0이 돼서 학습 X

-

증명

- 해석

- tanh로 인해 극단값으로 밀려남

- 결과

- local gradient들이 모두 0이 됨 → downstream gradient도 0이 돼서 학습 X

- 해석

-

-

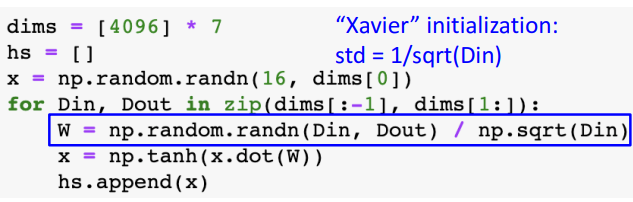

2) 해결 방법

a. Xavier Initialization

-

방법

- std = 1/sqrt(Din)

- 하이퍼파라미터 X

-

결과

!

- layer가 깊어져도 ㄱㅊ음

-

conv layer에서 적용 방법

-

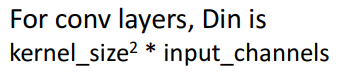

도함수

-

Xavier의 목표

-

output 의 activation 분산 = input의 activation 분산 하기!

(왜냐면 기존 초기화 방법들은 input과 output의 분산이 달라서 문제였어서)

-

증명

- 가정

- x,w는 모두 가우시안 분포를 따른다. (0 분산)

- 결과

-

Var() = 1/Din 이면, Var() = Var()이다

⇒ 따라서 Xavier 초기화 = 1/sqrt(Din) 이 된 것.

-

- 가정

-

-

-

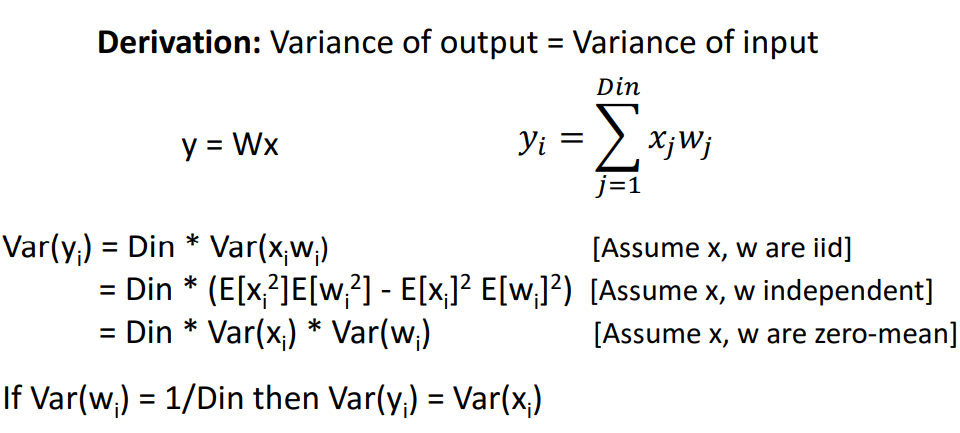

cf) ReLU로 input x와 W를 내적한다면?

- 결과

- Xavier에서 relu 작동 X

- 이유) Xavier는 x와 w가 zero-mean임을 가정하는데 relu는 그렇지 않아서 맞지 않음

- Xavier에서 relu 작동 X

- 결과

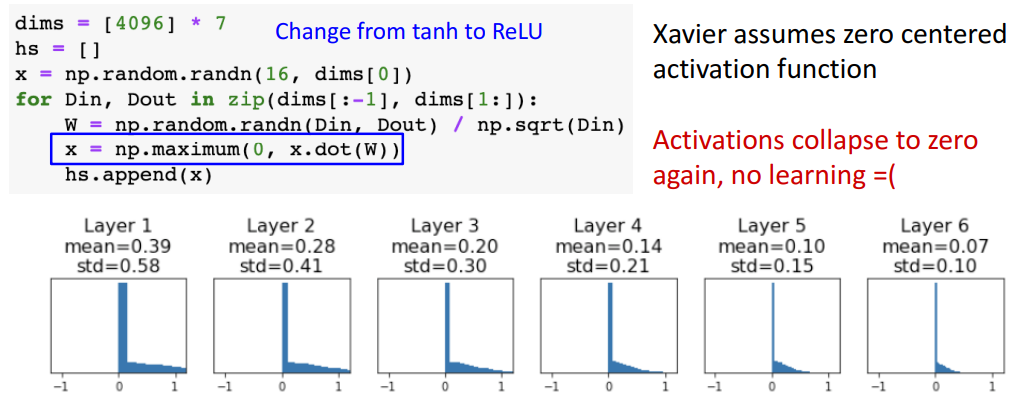

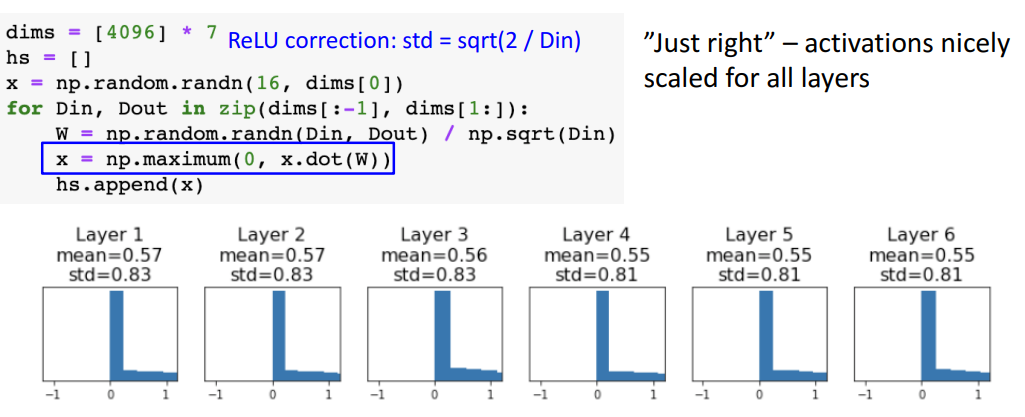

7. Weight 초기화 : Kaiming / MSRA 초기화

📍 relu그대로 사용대신, w초기화 방법 변경 → resnet에서 안맞는 부분 해결

1) 방법

- relu그대로 사용, 대신 weight초기화 변경

- (기존) std=1/sqrt(Din) → (변경) std=sqrt(2/Din)

- relu는 반을 죽이니까 걍 2배를 해도 됨 (뉴런의 절반이 죽을거라는 사실에 대해 조정)

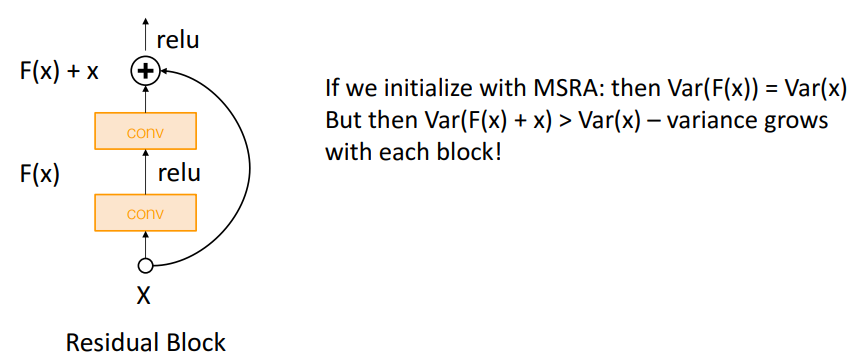

2) 문제점

-

VGG를 scratch 내면서 train 시킴 (지적받음)

-

Residual Network에선 유용 X

- 이유: residual connection 이후의 output에 input을 다시 넣어서 분산, 분포가 엄청 클 것

- ex. Var(F(x))(1번째 output에 대한 분산) = Var(x)(input에 대한 분산) (여기까진 정상) Var(F(x)+ x)(2번째 output에 대한 분산) >> Var(x)(input에 대한 분산) (residual로 input을 다시 넣어줘서 분산이 더 큼, 일치X)

- ex. Var(F(x))(1번째 output에 대한 분산) = Var(x)(input에 대한 분산) (여기까진 정상) Var(F(x)+ x)(2번째 output에 대한 분산) >> Var(x)(input에 대한 분산) (residual로 input을 다시 넣어줘서 분산이 더 큼, 일치X)

- 따라서, Xavier or MSRA에서 분산이 매우 크므로 → bad gradient → bad optimization

- 이유: residual connection 이후의 output에 input을 다시 넣어서 분산, 분포가 엄청 클 것

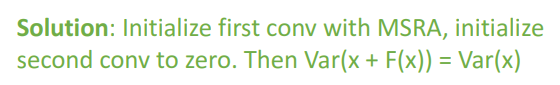

3) 해결책

- 첫번째 conv를 MSRA로 초기화

- 두번째 conv (last layer)를 0으로 초기화

⇒ Var(x+F(x)) = Var(x) 일치 가능

= 분산이 너무 커지지 않을수있음

4) 질문

- Q. (W 초기화 목적) 초기화의 idea가 손실함수의 global minimum에 도달하기 위함인가?

A. 아님, train 전엔 그 minimum이 어딘지 모름, 대신 모든 gradient가 초기화를 잘 행할 수 있도록 하는 것

= 잘못된 초기화 하면 zero gradient가 되어버릴 수 있어서

= lost landscape에서 flat한 곳에서 시작하여 걸어가지(train) 않도록 도와주는 작업

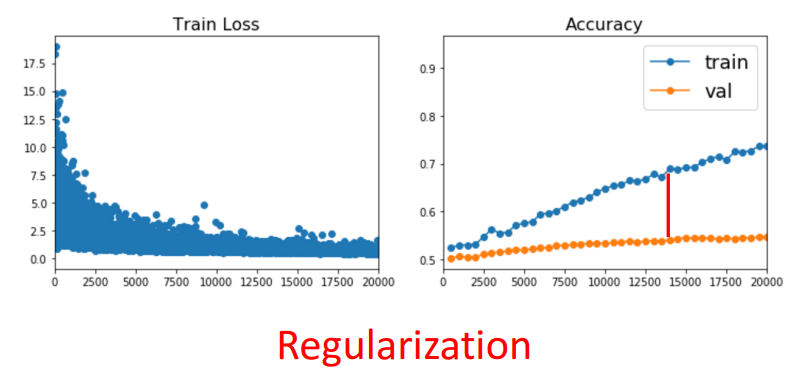

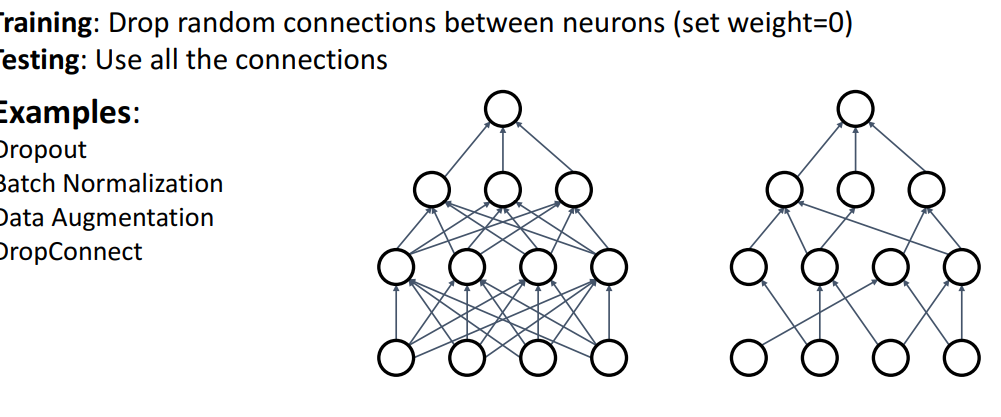

8. Regularization : Dropout

1) 사용 목적

- 과적합 방지

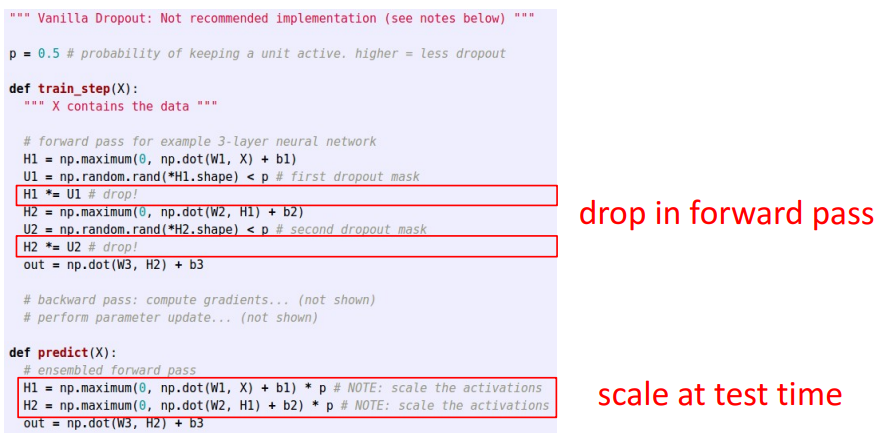

2) 방법

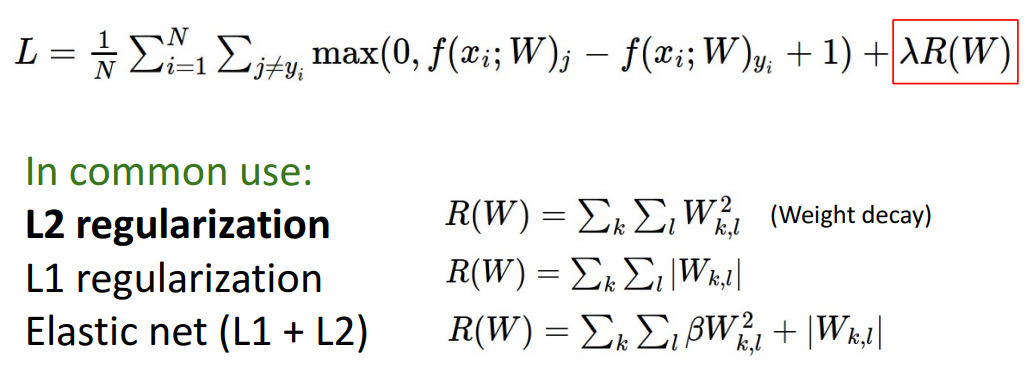

a. Loss뒤에 붙이기

- L2 norm → 젤 사용 多

b. Dropout

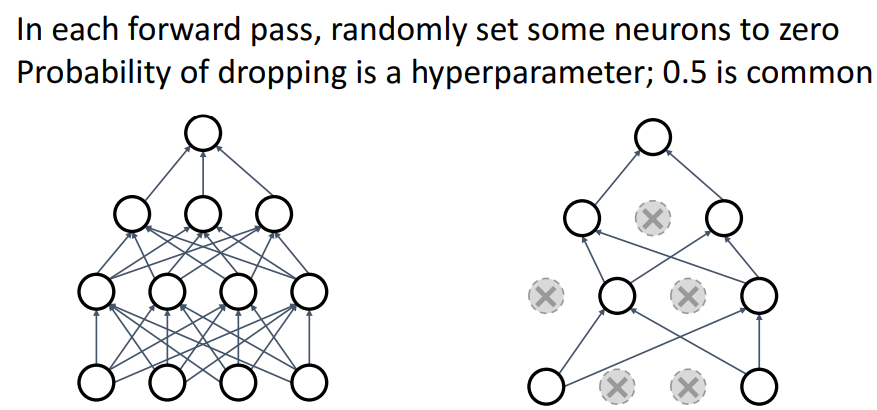

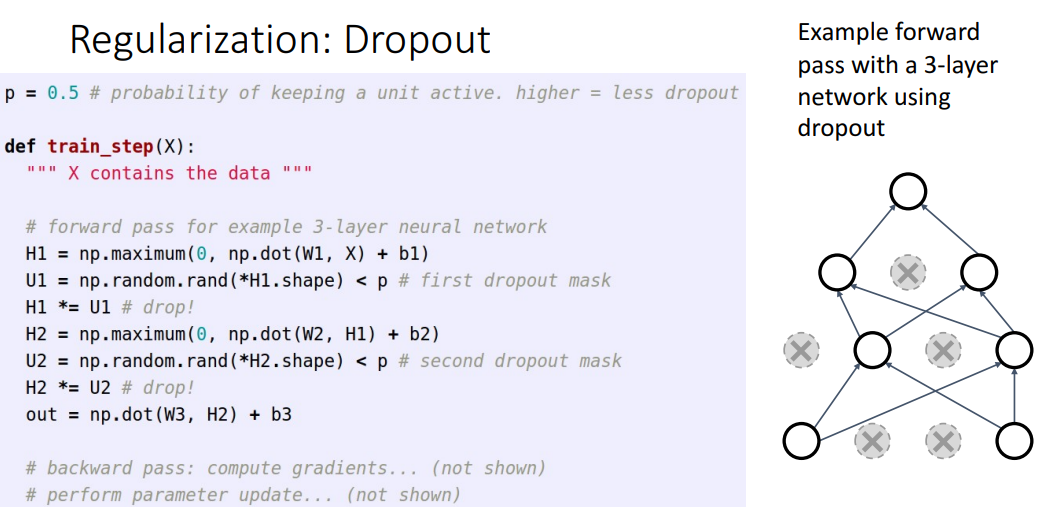

- 방법

- 각 layer마다 순전파 시, 랜덤하게 몇몇 뉴런들을 0으로 세팅

- 얼마나 drop할건지는 hyper parameter임; 0.5가 일반적

- 구현하기

-

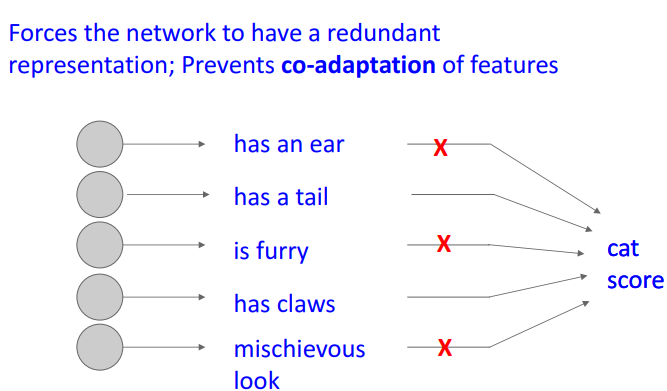

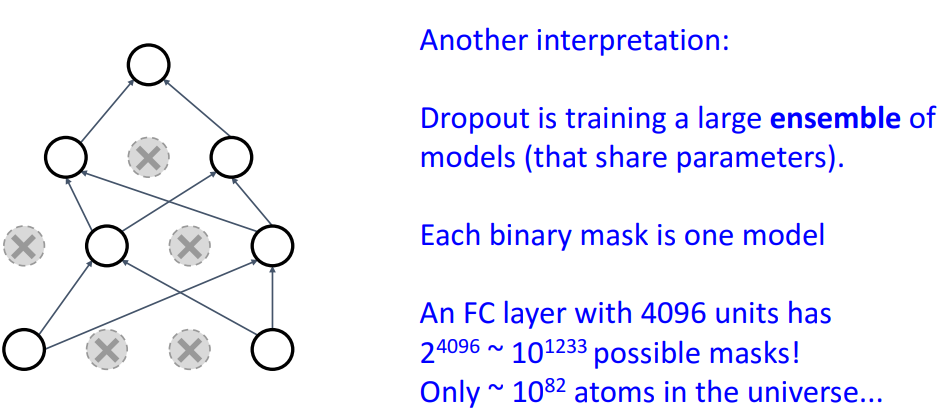

Dropout하는 이유 2가지

-

중복 적용하는 것 방지

- x의 feature잘 학습 위해, 필요없는 feature 덜 배우고 중복 노드 배우는걸 방지

⇒ 결론) 과적합 방지

-

앙상블처럼

- Dropout은 파라미터 공유하는 여러 Neural Network 앙상블 training

- 여러 submodel 만들어서, 앙상블처럼 최종 결론 투표 결정

-

-

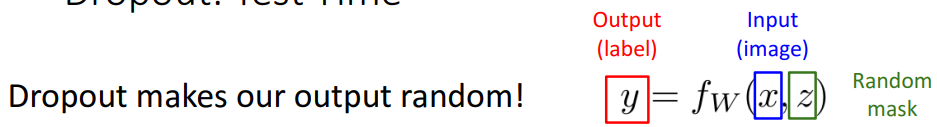

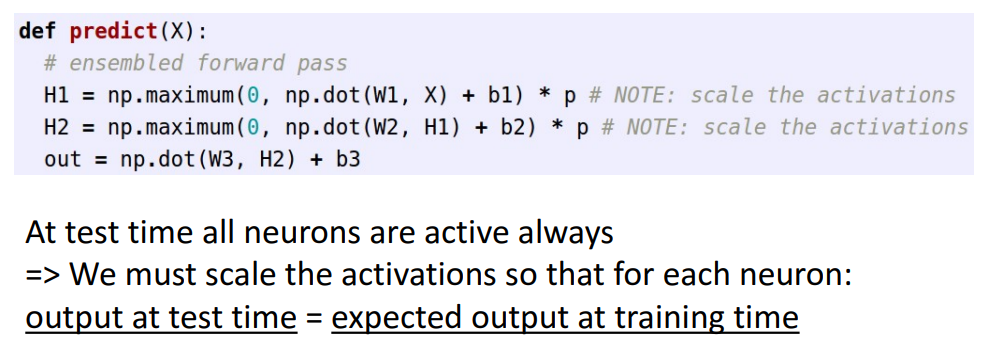

Test Time에서의 Dropout

-

문제점

- z=random변수 (순전파 이전에 정함)

- 결론) test시, random하게 뉴런을 끄게 되면 test마다 결과가 다 다르게 도출 이유) 각 forward pass마다 random하게 뉴런 떨어트려서

-

해결책

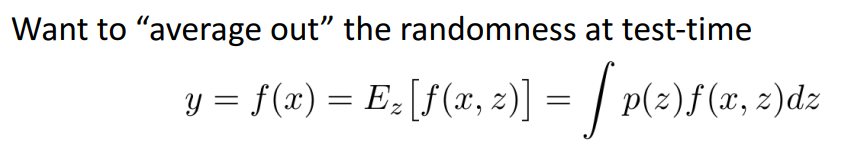

- 이러한 randomness (z) 를 평균내자!

-

해결방법

-

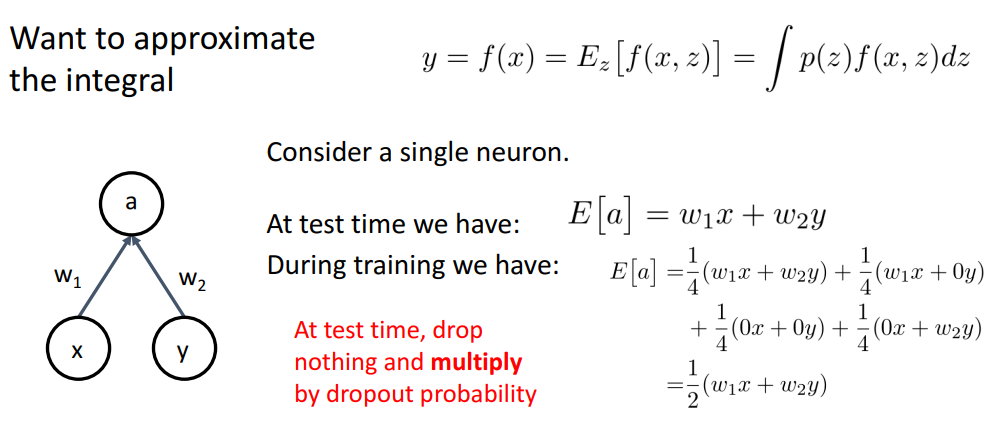

위의 integral 근사화 하는 방법

- (dropout시) 4개의 각기 다른 train시에 만들어진 random mask들 곱해짐

→ z (random 변수)에 대해서 평균내는 것

- (dropout시) 4개의 각기 다른 train시에 만들어진 random mask들 곱해짐

-

-

구현

- 방법

- test time에선 모든 뉴런들 사용

- but 각 뉴런을 drop할때 dropping 확률(p) 써서 layer의 output을 rescale함

- 방법

-

-

결론(전체 구현)

- train: 걍 그대로 dropout

- test: 적절한 확률(p) 사용해서 output을 rescale하고, randomness없앰

- 각 개별 layer에만 적용 가능

- stacking multiple dropout layer시 사용X

-

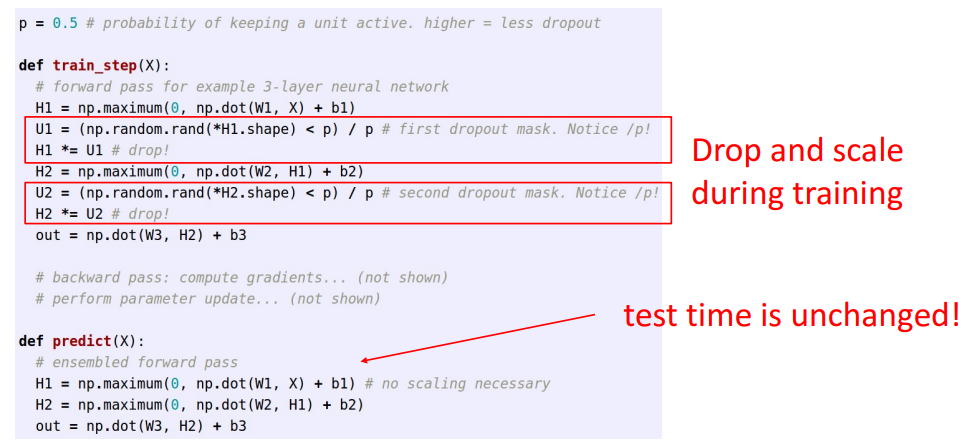

일반적인 구현 방법 (Inverted Dropout)

- 일반적으로,, train시 drop & scale 모두 함(뉴런을 1/2개 dropout시키고 남은 뉴런들을 2배함 ) test시에 모든 뉴런 사용 + 모든 normal weight matrix 사용

- 일반적으로,, train시 drop & scale 모두 함(뉴런을 1/2개 dropout시키고 남은 뉴런들을 2배함 ) test시에 모든 뉴런 사용 + 모든 normal weight matrix 사용

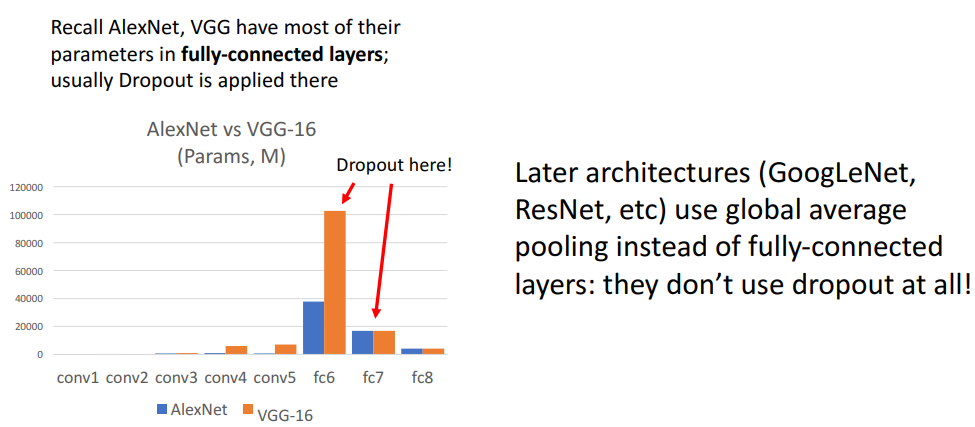

9. Dropout architectures

📍 Dropout이 아키텍처들에 어케 쓰이는지

- 결론

- AlexNet, VGG : 맨 윗단 레이어인 FCLayer에서 dropout적용

- 이외 최신 아키텍처: FCLayer를 줄였기에, dropout 사용할일 거의 없음

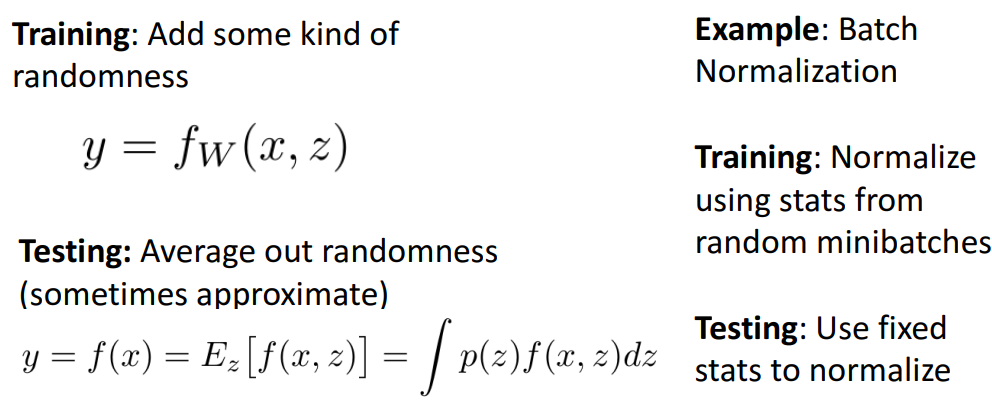

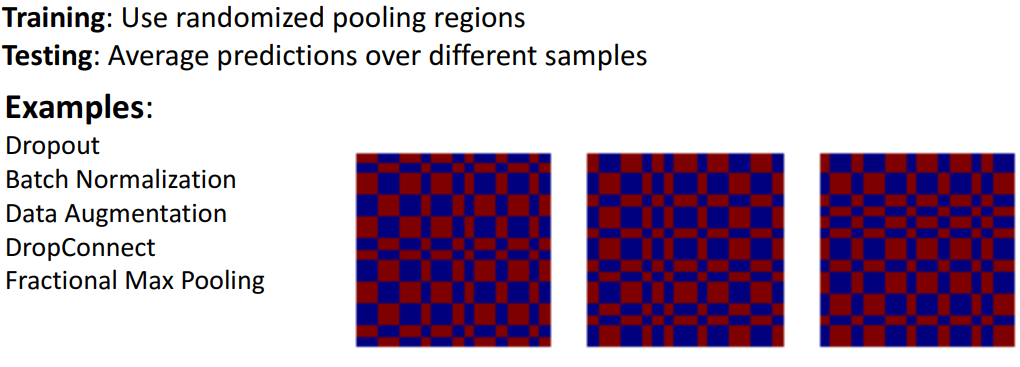

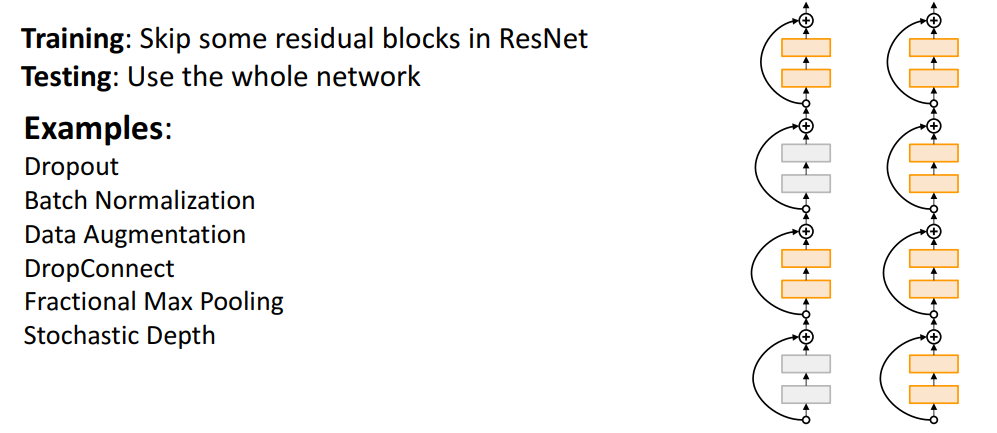

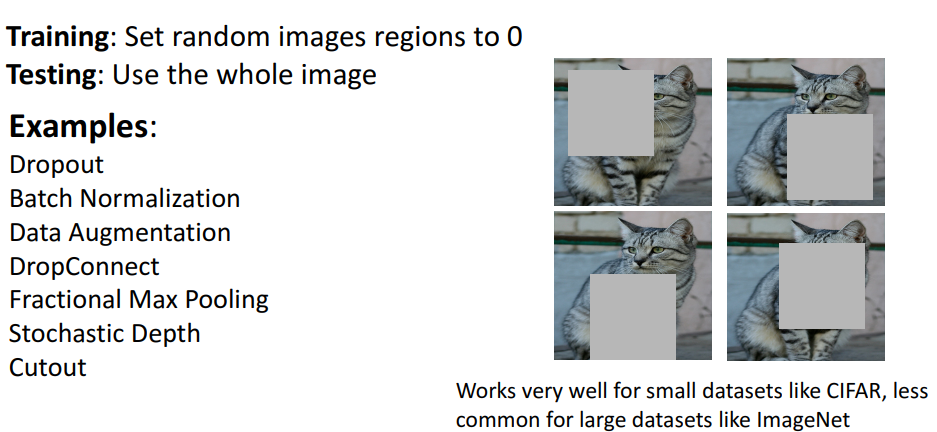

10. Regularization : A common pattern

-

Batch norm

- train (randomness추가)

- 랜덤 확률로 미니배치 사용해서 정규화

- test (average out randomness)

- 고정된 확률로 정규화

- train (randomness추가)

-

최신 아키텍처에는…

- dropout 사용X → 대신 batch norm or L2 정규화

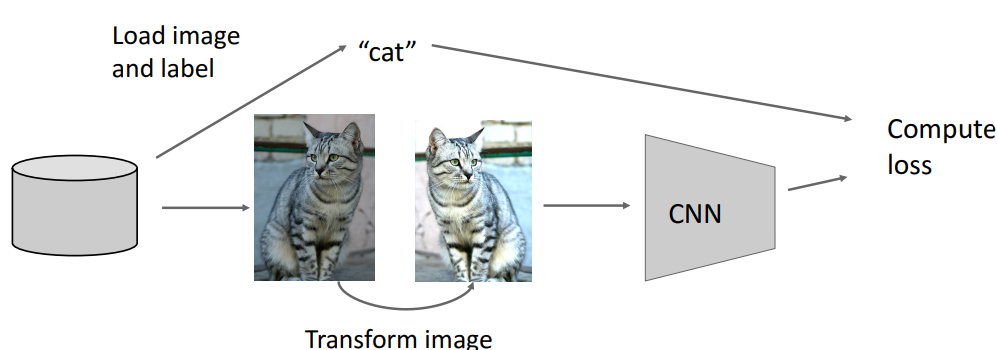

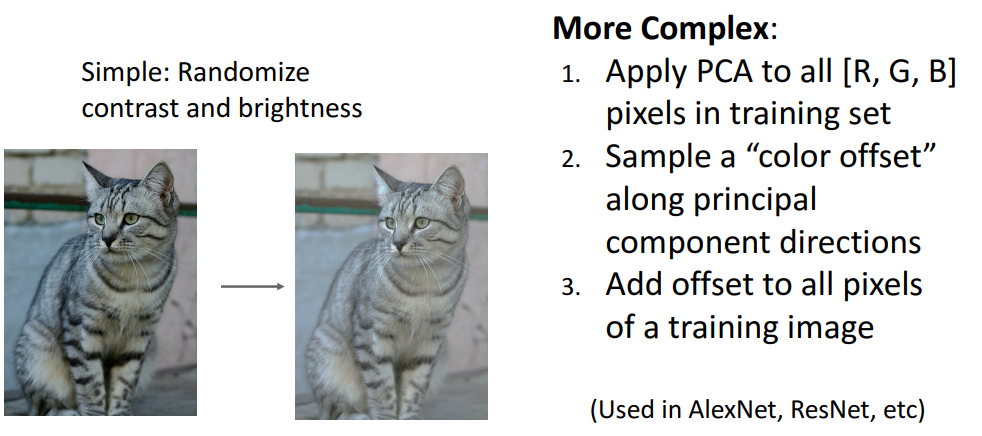

11. Regularization : Data Augmentation

📍 좌우대칭, 밝기조절 등

-

개념

- input data에 randomness를 부여하는 방법의 일종

- 비슷한 image이지만 CNN모델은 다른 이미지로 인식하며, 밝기조절 or 좌우 대칭 등을 randomness의 일종으로 볼 수 있음

-

방법

-

Horizontal Flips (좌우 대칭)

-

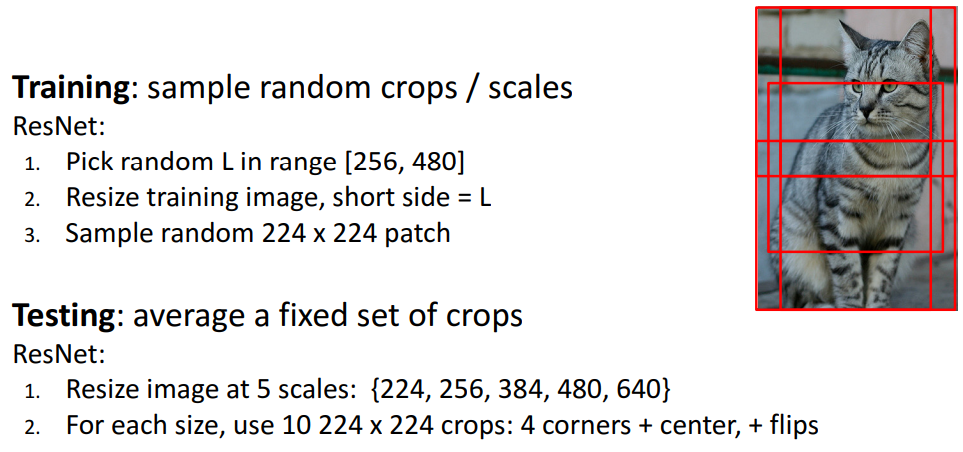

Random Crops & Scales (랜덤 자르기, 사이즈 조절)

- train

- 랜덤하게 이미지 잘라내고, 사이즈 조정

- test

- test용 이미지를 5개의 스케일로 만든 후, 224*224 사이즈의 이미지를 10개로 크롭하여 10개에 대한 분류 결과 투표시킴

- train

-

Color Jitter

-

RGB 픽셀에 대해 PCA 진행하여, 조도 조절하는 방법

-

-

12. Regularization : Drop Connect

13. Regularization : Fractional Pooling

14. Regularization : Stochastic Depth

15. Regularization : Cut out

16. Regularization : Mix up