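📌 These are my personal notes from the University of Michigan's 'Deep Learning for Computer Vision' lectures. If you spot any errors or have feedback, please let me know and I will gladly incorporate it.

(The content is very similar to Stanford's cs231n, so that course may be a helpful reference.)📌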

1. 선형 분류의 문제 & 해결

1) 문제점

-

linear classifier로 분류 안될때 너무 많음

-

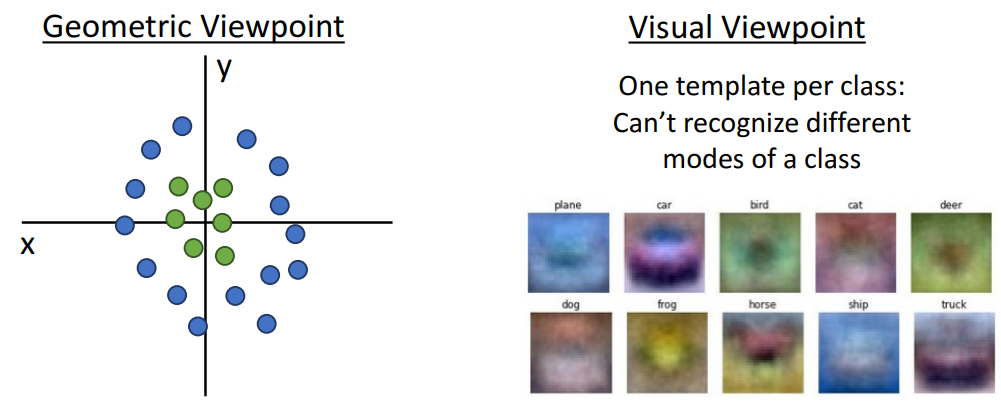

기하학적 관점 & 시각적 관점

- 기하학적 관점: 선형 분류 어려움

- 시각적 관점: 하나의 클래스의 여러 모드 인식불가

2) 해결책

-

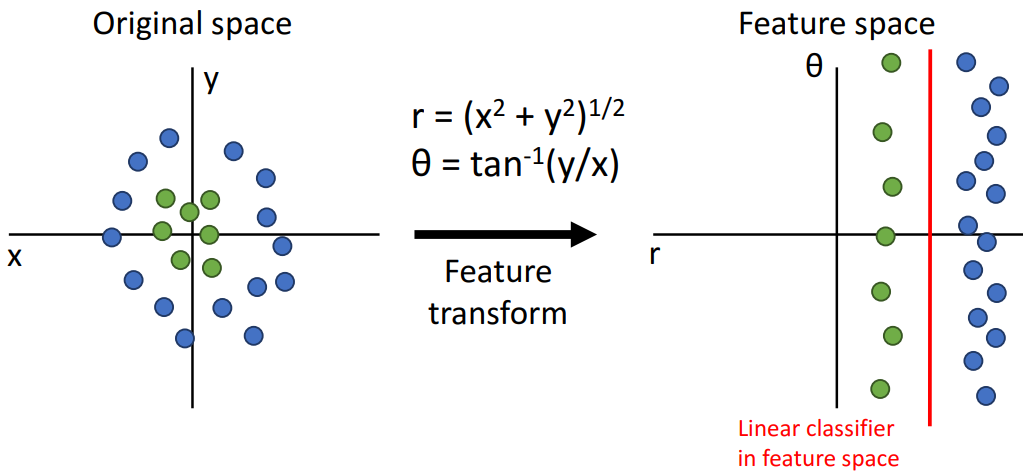

Feature Transform

-

특징

-

분류에 더 적합하도록 입력데이터에 적용 (제곱, tan함수사용)

- Original space

- 일반좌표 (cartesian)

- Feature space

- 극좌표 (polar)

- Feature transform(비선형성) 이후 정의되는 공간

- 선형분리 가능하게 됨 (선형 경계를 feature space에 삽입가능)

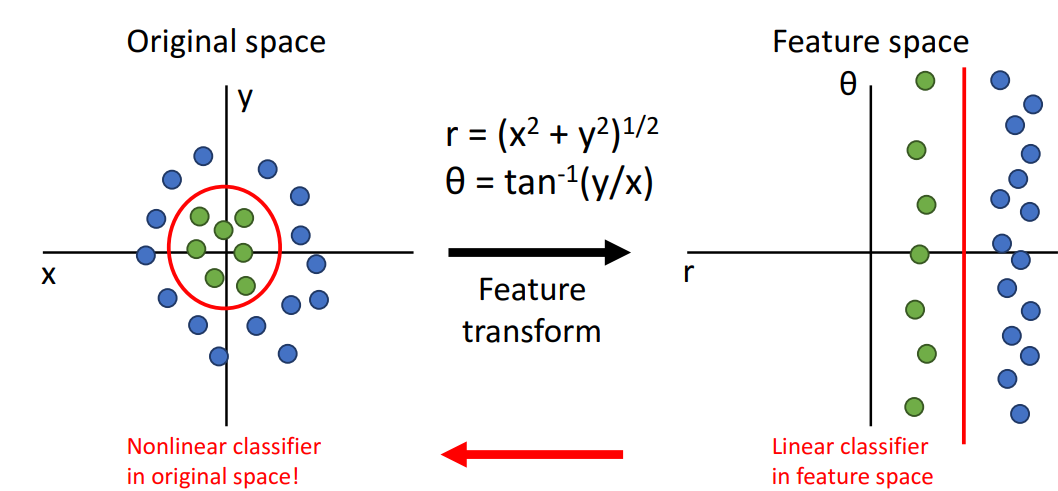

- Original space

-

다시 original space로 변경

- original space

- 비선형 되어버림

- original space

-

-

결론

- Data의 특성에 맞게 feature transform 선택시 선형분류 한계 극복가능

- feature transform을 더 광범위 하게 적용시에 더 조심하기

- 선형분류 모델에 적용하여 특징 부여 용이

-
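The Cartesian-to-polar idea above can be sketched in a few lines. This is only an illustration under assumptions of my own: the ring-shaped synthetic data, the helper name `to_polar`, and the threshold 1.5 are not from the lecture.

```python
import numpy as np

def to_polar(points):
    """Map rows of (x, y) to rows of (r, theta)."""
    x, y = points[:, 0], points[:, 1]
    r = np.sqrt(x ** 2 + y ** 2)
    theta = np.arctan2(y, x)
    return np.stack([r, theta], axis=1)

# Assumed toy data: class 0 lies inside radius 1, class 1 lies in a ring of radius 2..3.
rng = np.random.default_rng(0)
angles = rng.uniform(-np.pi, np.pi, size=200)
radii = np.concatenate([rng.uniform(0.0, 1.0, size=100),
                        rng.uniform(2.0, 3.0, size=100)])
points = np.stack([radii * np.cos(angles), radii * np.sin(angles)], axis=1)
labels = np.concatenate([np.zeros(100, dtype=int), np.ones(100, dtype=int)])

features = to_polar(points)

# A linear rule in feature space: "r > 1.5" is a straight line in (r, theta).
predictions = (features[:, 0] > 1.5).astype(int)
accuracy = np.mean(predictions == labels)
```

In the original (x, y) space the same rule is the circle x² + y² = 1.5², which is a nonlinear boundary.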

2. Feature Representation

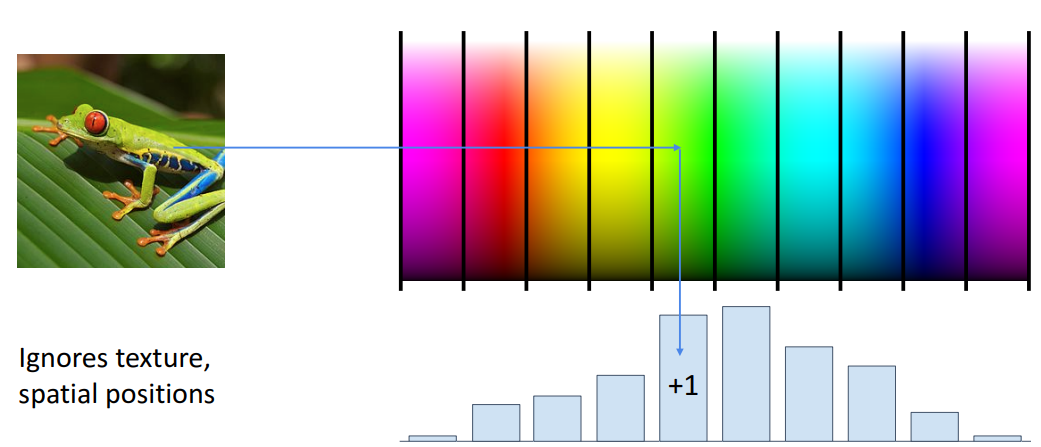

1) Color Histogram

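- Properties

  - Summary: throw away spatial layout and classify by color only!

  - Divide the RGB color space into discrete bins

  - Assign each pixel of the input image to its corresponding bin

  - Normalize the histogram

  - All spatial information about the image is discarded (spatially invariant) = we only care about which kinds of colors appear in the image

  - ex. car image (brown background, red car)

    - The car appears at a different position in each image

    - A linear classifier struggles with this, but a color histogram ignores exact position and always gives the same red & brown counts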
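The car example above can be reproduced with a small sketch. The function name `color_histogram`, the choice of 4 bins per channel, and the synthetic "red car on brown background" images are assumptions made for illustration.

```python
import numpy as np

def color_histogram(image, bins_per_channel=4):
    """image: (H, W, 3) uint8 RGB array. Returns a normalized histogram
    with bins_per_channel**3 entries; all pixel positions are ignored."""
    # Per-channel bin index in [0, bins_per_channel - 1].
    idx = (image.astype(np.int64) * bins_per_channel // 256).reshape(-1, 3)
    # Combine the three channel indices into one flat bin index.
    flat = (idx[:, 0] * bins_per_channel + idx[:, 1]) * bins_per_channel + idx[:, 2]
    hist = np.bincount(flat, minlength=bins_per_channel ** 3).astype(float)
    return hist / hist.sum()

brown = np.array([120, 80, 40], dtype=np.uint8)
red = np.array([220, 20, 20], dtype=np.uint8)

def make_image(top, left):
    """32x32 brown image with an 8x8 red 'car' at the given position."""
    img = np.tile(brown, (32, 32, 1))
    img[top:top + 8, left:left + 8] = red
    return img

hist_a = color_histogram(make_image(2, 3))
hist_b = color_histogram(make_image(20, 15))
```

The two images differ pixel by pixel, yet `hist_a` and `hist_b` are identical, which is the spatial invariance the notes describe.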

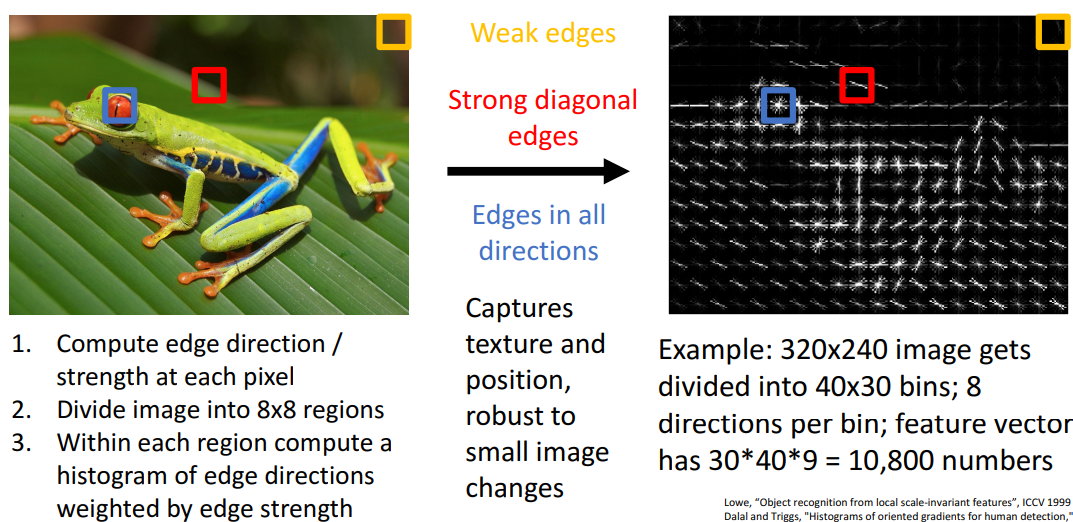

2) Histogram of Oriented Gradients (HoG)

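- Properties

  - Summary: represent the image by the local edge orientation & strength at every position of the input image

  - Color information is discarded (we only care about local edges & their strength)

⇒ 1) and 2) are not used much: both require a human to decide in advance which qualities of the input data matter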
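A heavily simplified sketch of the HoG idea follows. It only computes magnitude-weighted orientation histograms per cell; full HoG implementations also normalize over blocks of cells, which is skipped here. The name `simple_hog`, the 8-pixel cell, and the 9 bins are assumptions.

```python
import numpy as np

def simple_hog(gray, cell=8, num_bins=9):
    """gray: (H, W) grayscale image. Returns an array of shape
    (H // cell, W // cell, num_bins): one orientation histogram per cell,
    weighted by gradient magnitude (edge strength)."""
    gy, gx = np.gradient(gray.astype(float))   # derivatives along rows, columns
    magnitude = np.hypot(gx, gy)
    orientation = np.arctan2(gy, gx) % np.pi   # unsigned orientation in [0, pi)
    bin_idx = np.minimum((orientation / np.pi * num_bins).astype(int), num_bins - 1)

    rows, cols = gray.shape[0] // cell, gray.shape[1] // cell
    hog = np.zeros((rows, cols, num_bins))
    for i in range(rows):
        for j in range(cols):
            sl = (slice(i * cell, (i + 1) * cell), slice(j * cell, (j + 1) * cell))
            hog[i, j] = np.bincount(bin_idx[sl].ravel(),
                                    weights=magnitude[sl].ravel(),
                                    minlength=num_bins)
    return hog

# Assumed toy input: a single vertical edge in a 16x16 image.
edge = np.zeros((16, 16))
edge[:, 8:] = 1.0
hog = simple_hog(edge)
```

For this input all of the gradient energy falls into the first orientation bin, because the intensity changes only along the horizontal direction.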

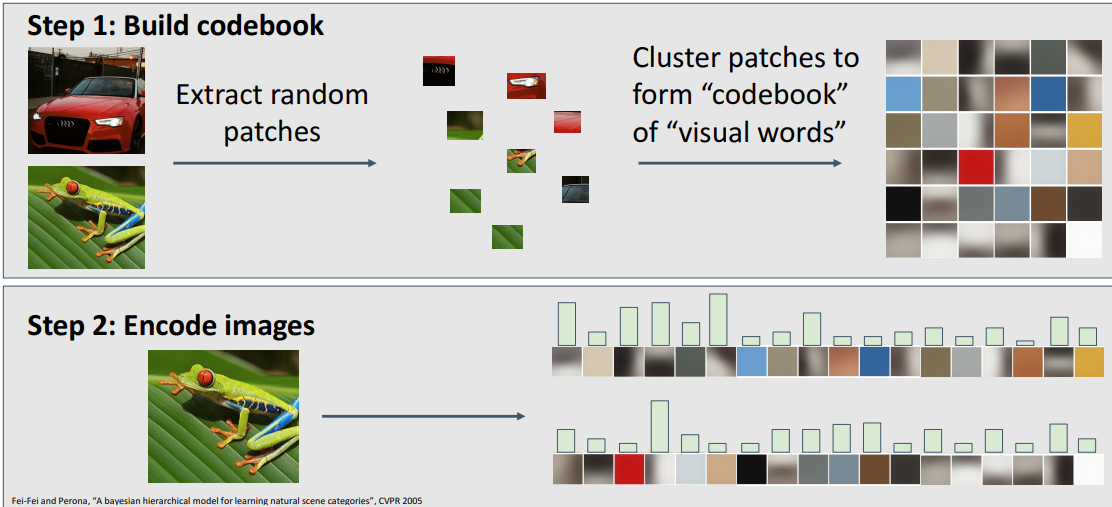

3) Bag of Words

- Concept

  - A data-driven feature transform

  - Step1: Build codebook

    - Extract random patches from the input images (at various scales and sizes; every training image is cropped at random)

      → cluster the patches into a codebook, so images can be described in terms of visual words

      (the codebook learns a kind of visual-word representation that captures or recognizes many of the common features appearing in the train set)

  - Step2: Encode images

    - Compute a histogram for each input image

      = it shows how often each visual word appears in that input image

- Properties

  - A very powerful type of feature representation, because no human has to specify the functional form

  - The codebook words are learned from the training data, so they fit the problem better (more convenient than the previous methods)
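The two steps above can be sketched as follows. This is a toy version under several assumptions: patches have a single fixed size rather than multiple scales, the codebook is built with a few iterations of plain k-means, and all function names and sizes are hypothetical.

```python
import numpy as np

def extract_patches(image, num_patches, size, rng):
    """Crop random size x size patches from a (H, W) image; each is flattened."""
    h, w = image.shape
    tops = rng.integers(0, h - size + 1, size=num_patches)
    lefts = rng.integers(0, w - size + 1, size=num_patches)
    return np.stack([image[t:t + size, l:l + size].ravel()
                     for t, l in zip(tops, lefts)])

def assign(patches, codebook):
    """Index of the nearest visual word (squared Euclidean distance) per patch."""
    dists = ((patches[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return dists.argmin(axis=1)

def build_codebook(patches, num_words, rng, iters=10):
    """Step 1: cluster patches with k-means; the centers are the visual words."""
    start = rng.choice(len(patches), size=num_words, replace=False)
    codebook = patches[start].astype(float)
    for _ in range(iters):
        nearest = assign(patches, codebook)
        for k in range(num_words):
            members = patches[nearest == k]
            if len(members) > 0:          # keep the old center if a cluster is empty
                codebook[k] = members.mean(axis=0)
    return codebook

def encode(image, codebook, num_patches, size, rng):
    """Step 2: normalized histogram of how often each visual word appears."""
    words = assign(extract_patches(image, num_patches, size, rng), codebook)
    hist = np.bincount(words, minlength=len(codebook)).astype(float)
    return hist / hist.sum()

rng = np.random.default_rng(0)
train_images = [rng.random((32, 32)) for _ in range(5)]   # stand-in training set
train_patches = np.concatenate([extract_patches(img, 50, 6, rng)
                                for img in train_images])
codebook = build_codebook(train_patches, num_words=8, rng=rng)
feature = encode(train_images[0], codebook, num_patches=100, size=6, rng=rng)
```

`feature` is the image's representation: an 8-dimensional histogram over the learned visual words.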

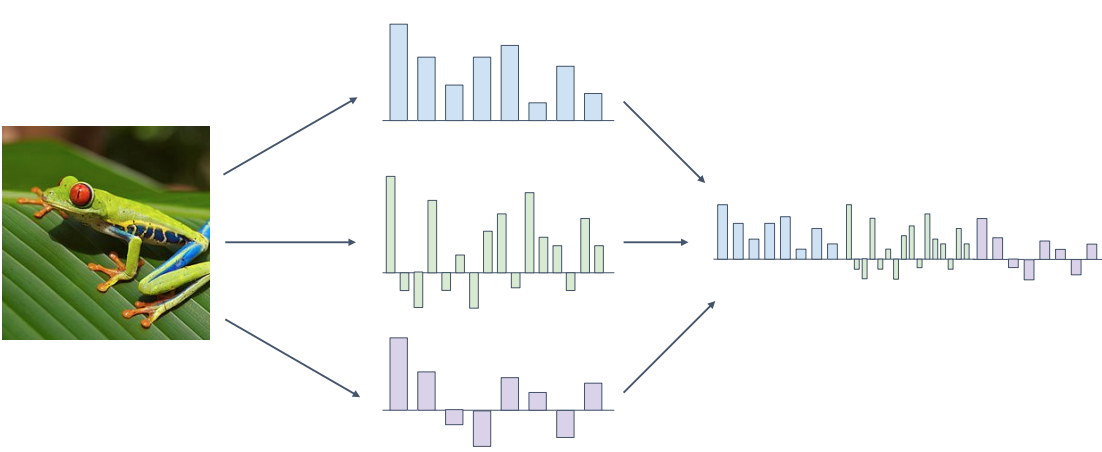

4) Using Multiple Feature Representations (Combining Them All)

- Captures different kinds of information from the input image, such as color and edges

- Combining features this way is widely used in computer vision
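One simple way to combine representations is to concatenate them into a single long vector. The sketch below assumes that approach; the three input arrays are placeholders standing in for a color histogram, a HoG descriptor, and a bag-of-words histogram.

```python
import numpy as np

def combine_features(feature_arrays):
    """Flatten each feature array and concatenate them into one vector."""
    return np.concatenate([np.ravel(f) for f in feature_arrays])

# Placeholder features with assumed shapes.
color_feat = np.full(64, 1.0 / 64)   # e.g. 4x4x4 color histogram
hog_feat = np.zeros((2, 2, 9))       # e.g. 2x2 cells with 9 orientation bins
bow_feat = np.full(8, 1.0 / 8)       # e.g. 8 visual words

combined = combine_features([color_feat, hog_feat, bow_feat])
```

The combined vector has 64 + 36 + 8 = 108 entries and can be fed to a linear classifier like any other input.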

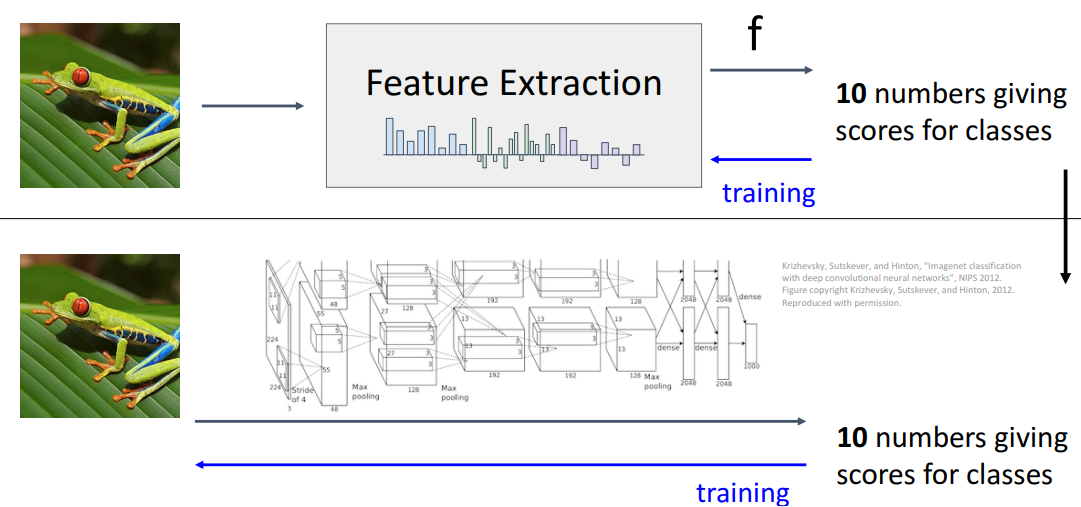

3. Image Features

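1) Overall Pipeline

raw image pixels

→ Feature Extraction (fixed: it does not adjust itself to maximize classification performance) → f (learnable model) → 10 numbers giving scores for classes → training (updates only f) → repeat

⇒ Conclusion) A better approach is needed

(for image classification, automatically tune every part of the system to improve performance)

(this is the motivation for what neural networks do)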

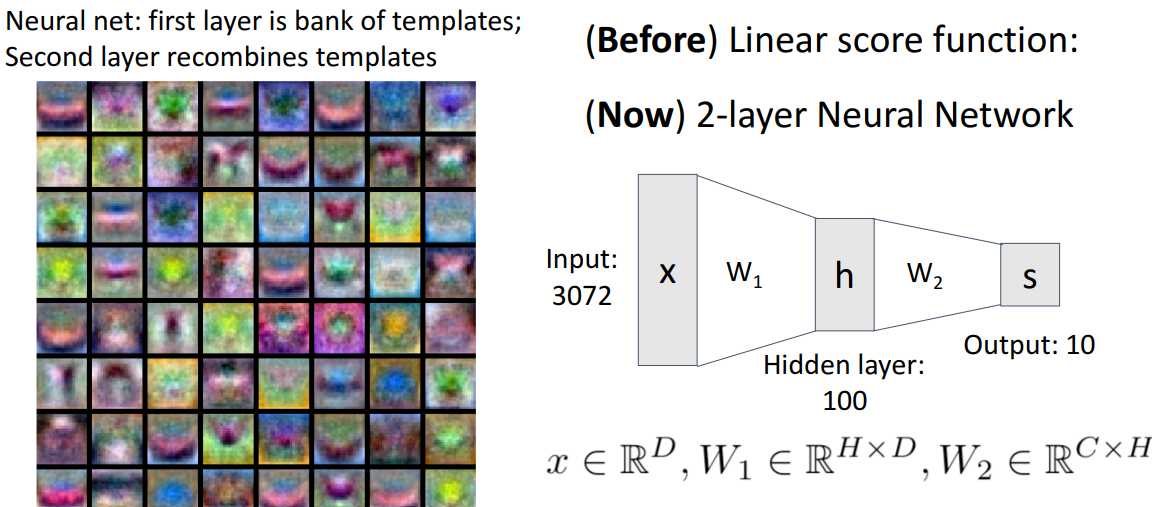

2) Image Features vs Neural Networks

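- Not fundamentally different from the feature representations described above

- The only difference: during training, the whole pipeline is tuned jointly to improve classification performance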

4. Neural Networks

📍First reason NNs are so powerful: the role of W

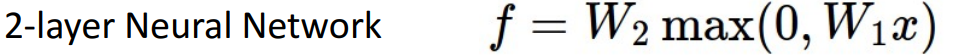

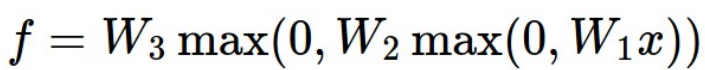

1) Concept

- 2 layers: f = W2 max(0, W1 x)

- 3 layers: f = W3 max(0, W2 max(0, W1 x))
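A minimal sketch of the 2-layer and 3-layer forward passes, assuming ReLU as the activation and omitting bias terms. The sizes (3072 inputs, 100 hidden units, 10 classes) are example values, not something fixed by the notes.

```python
import numpy as np

def two_layer(x, W1, W2):
    """f = W2 max(0, W1 x)"""
    return W2 @ np.maximum(0.0, W1 @ x)

def three_layer(x, W1, W2, W3):
    """f = W3 max(0, W2 max(0, W1 x))"""
    return W3 @ np.maximum(0.0, W2 @ np.maximum(0.0, W1 @ x))

rng = np.random.default_rng(0)
D, H, C = 3072, 100, 10                       # assumed input, hidden, class sizes
x = rng.standard_normal(D)
W1 = rng.standard_normal((H, D)) * 0.01       # input  -> hidden
W2 = rng.standard_normal((C, H)) * 0.01       # hidden -> scores (2-layer net)
scores_2 = two_layer(x, W1, W2)

W2_mid = rng.standard_normal((H, H)) * 0.01   # hidden -> hidden (3-layer net)
W3 = rng.standard_normal((C, H)) * 0.01       # hidden -> scores (3-layer net)
scores_3 = three_layer(x, W1, W2_mid, W3)
```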

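2) The Role of W

- The first reason NNs are so powerful

a. W conveys the influence of the previous layer

- It tells us how much each element of the previous layer affects each element of the next layer

- It is literally a weight: a weighting over the previous layer

  ex. W1 = how much each element of the input layer x affects each element of the hidden layer h

  W2 = how much each element of the hidden layer affects each element of the output scores

- Fully connected Neural Network

  - So called because every element of one layer is connected to every element of the next

  - Very densely connected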

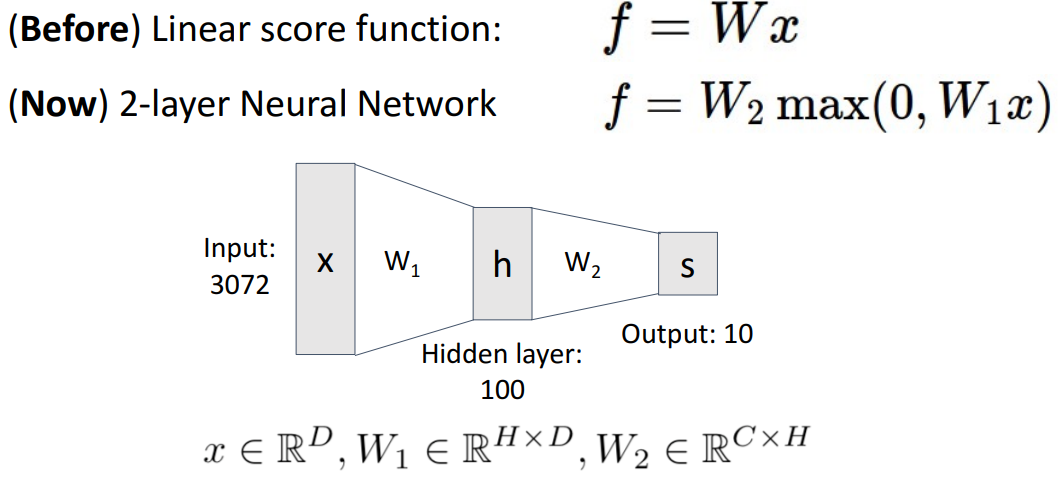

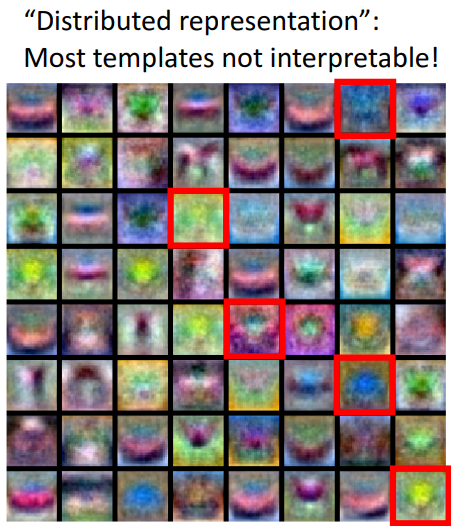

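b. W as templates

- Concept

  - Neural net: 1st layer = a bank of templates

    2nd layer = recombines the templates

- How to interpret the hidden-layer values

  : how strongly each learned template responds to the input image x

  → the internals cannot be fully interpreted,

  but in a 2-layer neural network the templates show clearly identifiable spatial structure

- Different templates can be used for different modes of a class

  : this solves the two-headed horse problem of the linear classifier

- Distributed representation (spatial structure is visible + image information can be roughly read off through linear combinations)

  : most of what W1 learns is hard for humans to interpret

  : instead, the templates have some kind of spatial structure, and the network represents the image with a distributed representation

  & image information can be recovered through linear combinations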
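The template reading of W1 and W2 can be checked numerically. In this sketch the weights are random stand-ins (not trained), the image is assumed to be 32x32x3, and ReLU is assumed as the activation.

```python
import numpy as np

rng = np.random.default_rng(0)
W1 = rng.standard_normal((100, 3072))     # 100 templates over a flattened image
W2 = rng.standard_normal((10, 100))       # recombines templates into 10 scores
x = rng.standard_normal(3072)             # stand-in for a flattened 32x32x3 image

# Each row of W1 can be reshaped and viewed as an image-shaped template.
templates = W1.reshape(100, 32, 32, 3)

# Hidden value h[i] = how strongly template i responds to the image.
h = np.maximum(0.0, W1 @ x)
i = 7
response_i = np.maximum(0.0, np.sum(templates[i] * x.reshape(32, 32, 3)))

# The second layer is a linear combination of those template responses.
scores = W2 @ h
recombined = sum(h[j] * W2[:, j] for j in range(100))
```

`h[i]` equals `response_i`, and `scores` equals `recombined`, which is the "template bank, then recombination" picture in code form.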

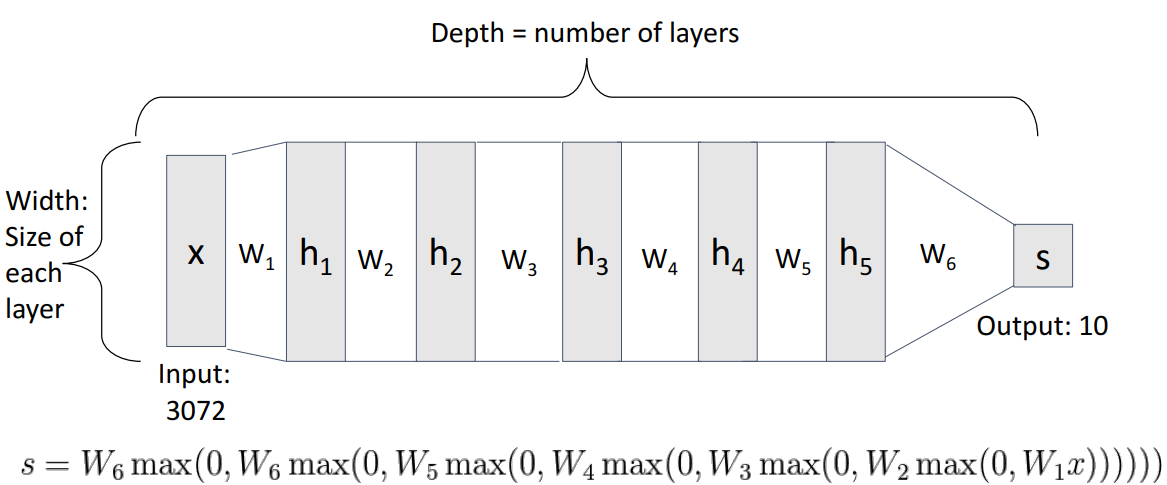

5. Deep Neural Network

- Terminology

  - Depth = number of layers

    = number of weight matrices

  - Width = size of each layer

    = number of units

    = number of units (dimensions) of the hidden representation
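Depth and width map directly onto how the weight matrices are created. A small sketch, assuming ReLU activations, no biases, and example layer sizes of my own choosing:

```python
import numpy as np

def init_mlp(layer_sizes, rng):
    """One weight matrix per pair of consecutive layer sizes."""
    return [rng.standard_normal((out_dim, in_dim)) * 0.01
            for in_dim, out_dim in zip(layer_sizes[:-1], layer_sizes[1:])]

def forward(x, weights):
    """ReLU after every weight matrix except the last one."""
    h = x
    for W in weights[:-1]:
        h = np.maximum(0.0, W @ h)
    return weights[-1] @ h

rng = np.random.default_rng(0)
layer_sizes = [3072, 128, 128, 128, 10]   # assumed: input, 3 hidden layers, 10 classes
weights = init_mlp(layer_sizes, rng)

depth = len(weights)       # number of weight matrices
width = layer_sizes[1]     # number of units in each hidden layer
scores = forward(rng.standard_normal(3072), weights)
```

Here `depth` is 4 and `width` is 128.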

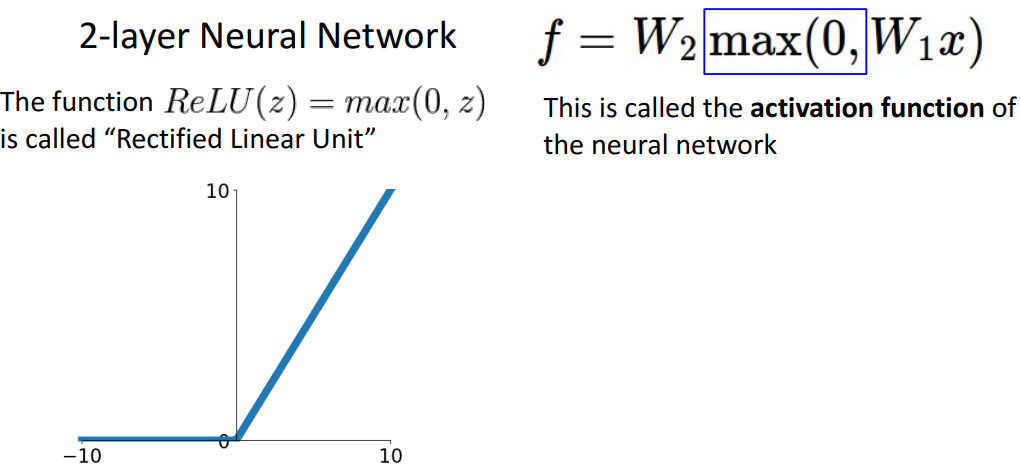

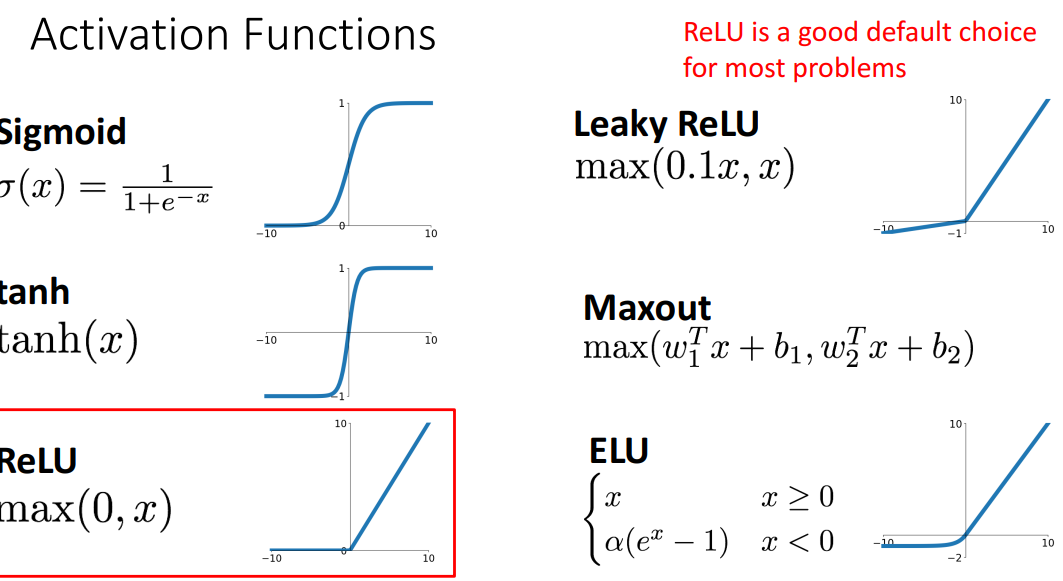

6. Activation Functions

1) Concept

- Plays a key role in a neural network (turns a linear model into a nonlinear one)

- Sits between W1 and W2

- ex. ReLU

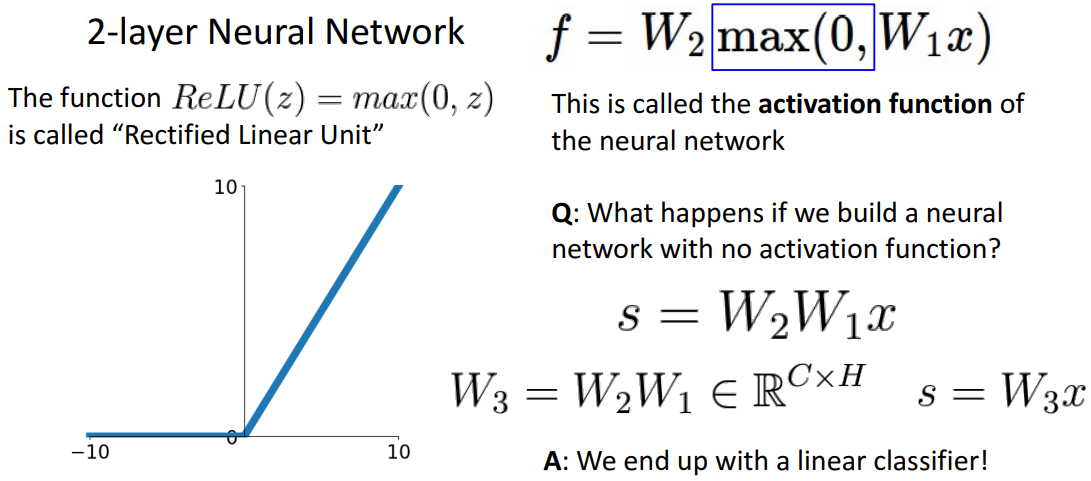

2) Question

- Q. What happens if there is no activation function?

  → A. The network is still just a linear classifier.

  ex. deep linear network (studied mainly in optimization)
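The answer above follows from associativity of matrix multiplication: W2 (W1 x) = (W2 W1) x. A quick numerical check with arbitrary small matrices (the sizes are assumptions):

```python
import numpy as np

rng = np.random.default_rng(0)
W1 = rng.standard_normal((5, 4))
W2 = rng.standard_normal((3, 5))
x = rng.standard_normal(4)

# Two layers with no activation in between...
no_activation = W2 @ (W1 @ x)

# ...collapse into a single linear layer with weights W2 W1.
W_combined = W2 @ W1
single_linear = W_combined @ x

# With ReLU in between, the network is no longer one matrix multiply in general.
with_relu = W2 @ np.maximum(0.0, W1 @ x)
```

`no_activation` and `single_linear` agree up to floating-point error, so stacking more linear layers adds no representational power.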

3) Types

- ReLU is the most widely used (not differentiable at 0, but easy to differentiate everywhere else)
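For reference, a sketch of ReLU and its derivative, plus two other common activations. The notes name only ReLU; leaky ReLU and sigmoid are included here as assumed examples, and the slope 0.1 and the choice of derivative 0 at z = 0 are conventions picked for this sketch.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def relu_grad(z):
    # 1 for z > 0, 0 for z < 0. The derivative is undefined at z == 0;
    # this sketch assumes the convention of returning 0 there.
    return (z > 0).astype(float)

def leaky_relu(z, slope=0.1):
    # Like ReLU, but negative inputs keep a small slope (assumed 0.1).
    return np.where(z > 0, z, slope * z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.array([-2.0, 0.0, 3.0])
relu_out = relu(z)
relu_slopes = relu_grad(z)
```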

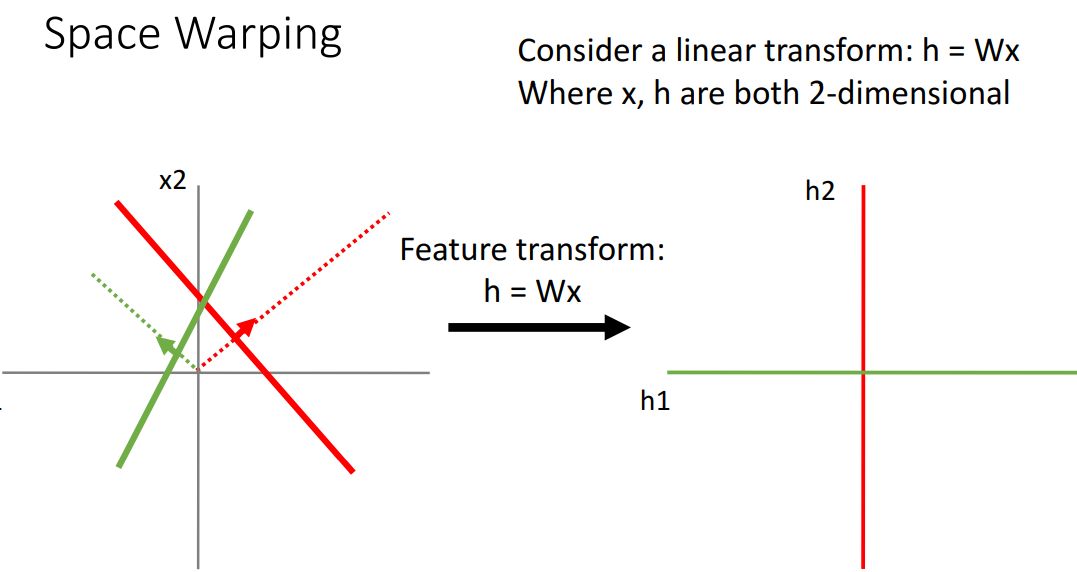

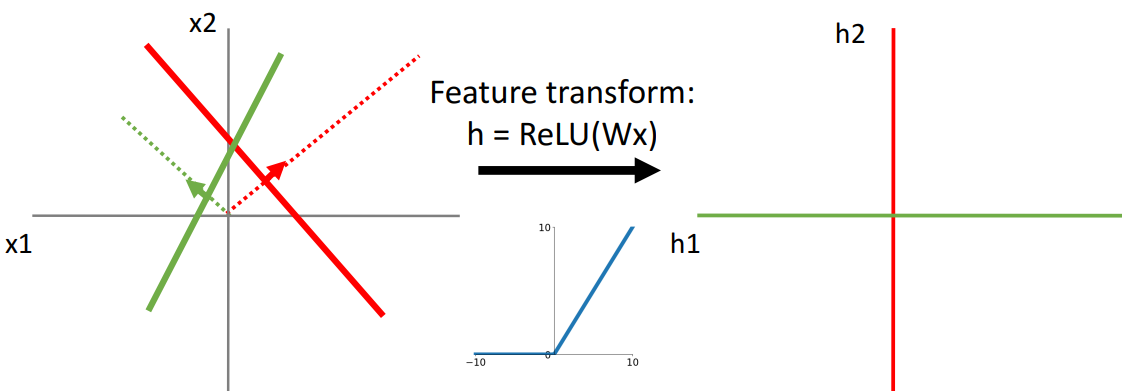

7. Space Warping

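📍Second reason NNs are so powerful: activation functions enable nonlinear classification

- So far) explained in terms of predicting classification scores

- Another viewpoint) warping the input space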

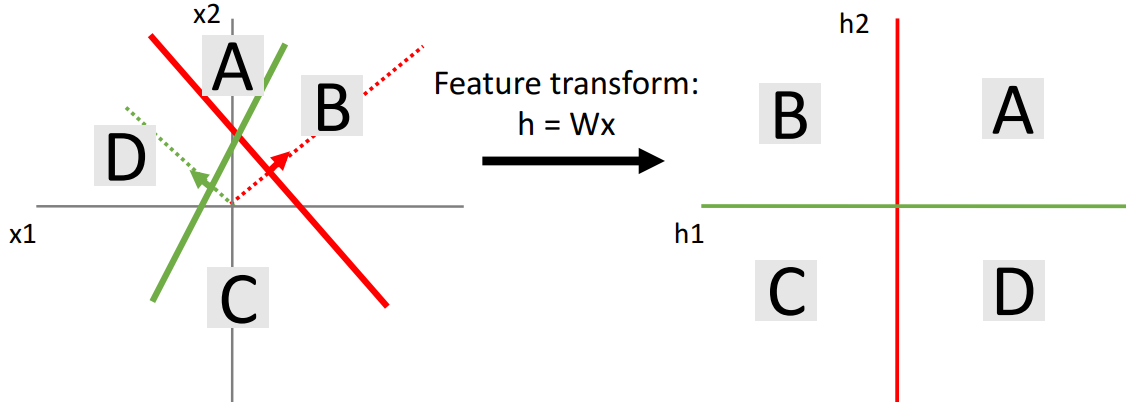

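1) Concept

- Apply a 2D linear transform to a 2D input space

  ⇒ Two lines are drawn in the input space, and they divide it into 4 regions

  ⇒ These 4 regions map to the 4 quadrants of the transformed output space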

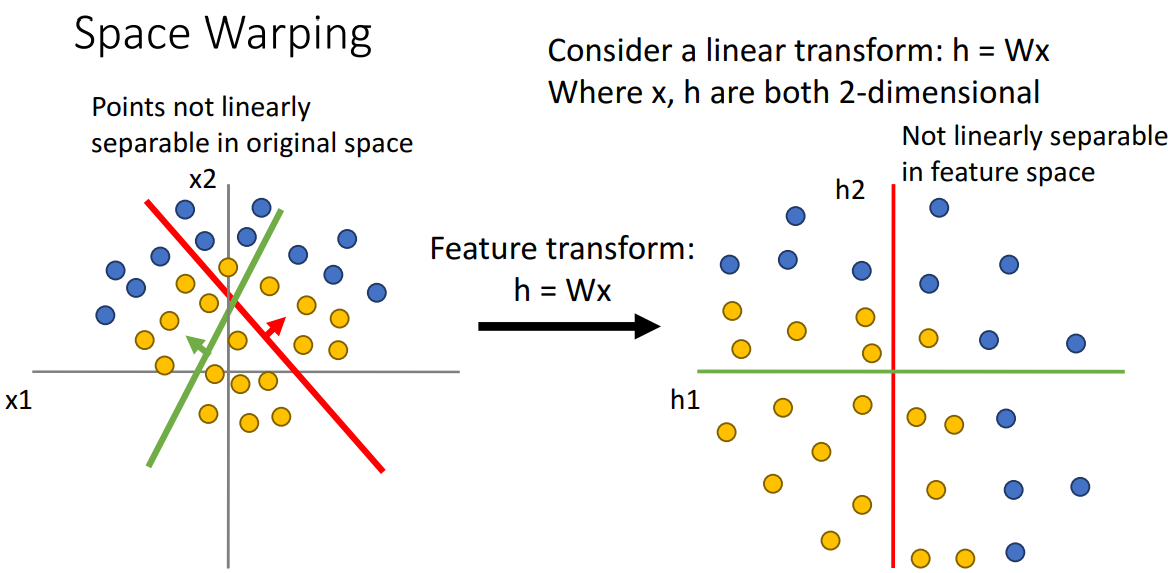

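- Interpreting it with data

  ⇒ A linear transform warps the space linearly (it gives a new representation of the input data)

  ⇒ But even after the linear transform, the data is still not linearly separable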

-

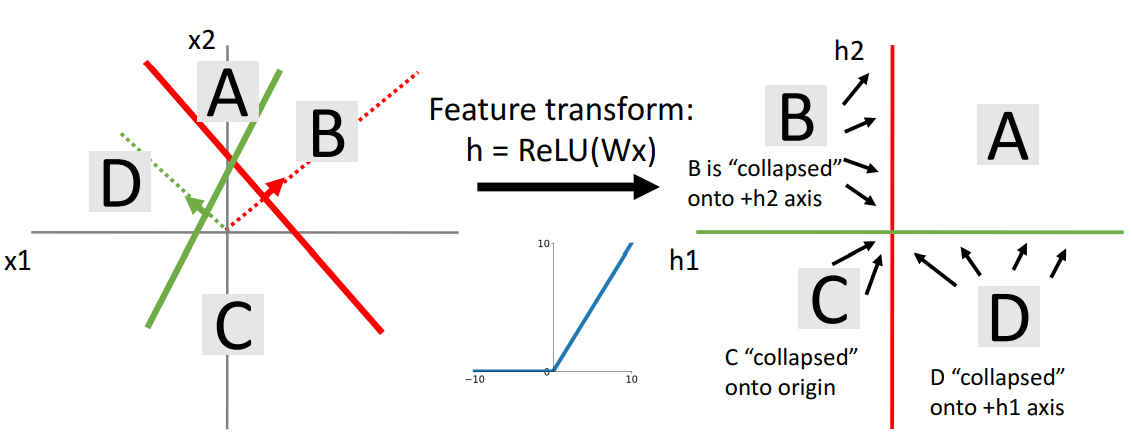

활성화 함수 적용 (비선형)

-

실제 적용 예시

- 해석

- B: ex. (-2,4)면 (0,4)됨

- D: ex. (4,-2)면 (4,0)됨

- C: ex. (-4,-4)면 (0,0)됨

- 해석

-
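The three examples above are ReLU applied element-wise. A short check; the fourth point, with both coordinates positive, is an assumed extra to show that such points are left unchanged.

```python
import numpy as np

# Points from regions B, D, C, plus an assumed point with both coordinates positive.
points = np.array([[-2.0, 4.0],
                   [4.0, -2.0],
                   [-4.0, -4.0],
                   [3.0, 1.0]])

warped = np.maximum(0.0, points)   # ReLU clamps every negative coordinate to 0
```

B collapses onto the vertical axis, D onto the horizontal axis, and C onto the origin, while the last point stays where it is.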

-

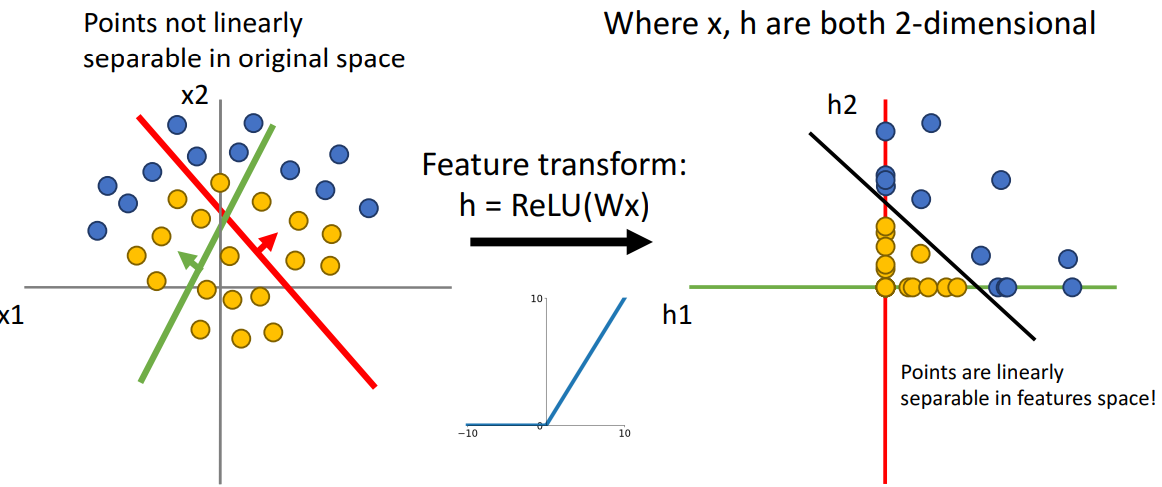

적용 후 결과

- 선형 분리 가능해짐

-

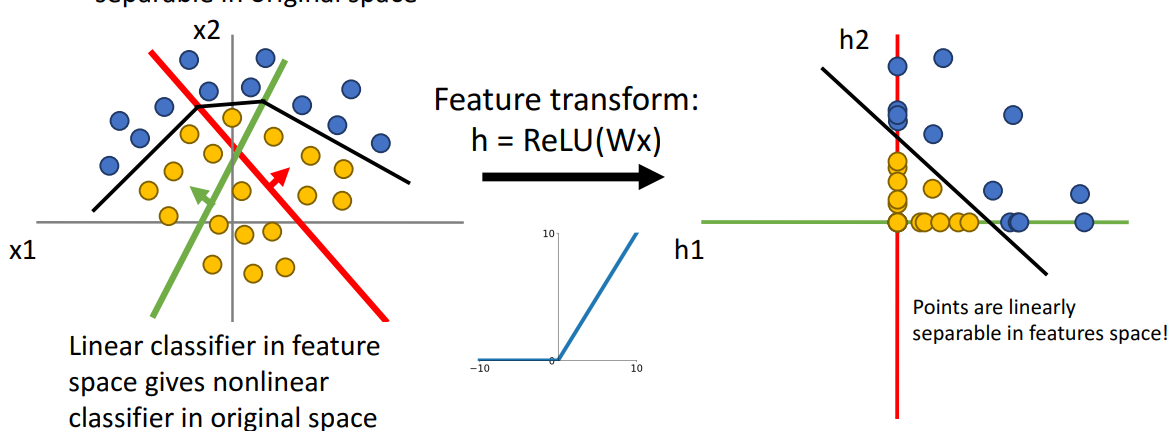

다시 original space로 돌릴때

-

original space에서 비선형 boundary 줄수 있음(=다시 돌릴때 비선형 분리 됨)

=⇒ 결론1) 여러 선형분류기와 같은 종류로 해석할때, 모든 종류의 공간을 자체적으로 접을수있음

→ 그리고 접힌 공간에서 선형분류 함

→ 과적합 발생 가능

=⇒ 결론2) Neural Network의 마지막은 linear하게 그려짐

→ Neural Network역할 = linear하게 긋기 전까지

→ feature변환 여러개 시도 후 마지막 선 그었을때 잘 분류

-
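A tiny example of "fold the space, then classify linearly". The five points, the identity weight matrix, and the threshold 1.0 are all assumptions chosen so the result can be verified by hand.

```python
import numpy as np

X = np.array([[2.0, -10.0],    # class 1
              [-10.0, 2.0],    # class 1
              [3.0, 3.0],      # class 1
              [-4.0, -4.0],    # class 0
              [0.2, 0.2]])     # class 0
y = np.array([1, 1, 1, 0, 0])

# Hidden layer with W = identity and no bias (assumed), followed by ReLU.
H = np.maximum(0.0, X)

# A linear rule in the warped space: h1 + h2 > 1.
pred = (H.sum(axis=1) > 1.0).astype(int)

# Why no straight line works in the original space: the class-0 point (-4, -4)
# is the midpoint of two class-1 points, and a half-plane containing both
# endpoints of a segment must contain its midpoint too.
midpoint = (X[0] + X[1]) / 2
```

`pred` matches `y`, and `midpoint` equals the class-0 point `X[3]`, so the data is separable only after the ReLU warp.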

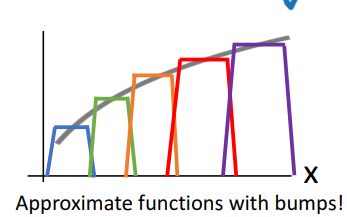

8. Universal Approximation

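1) Concept

- Neural Network

  - A neural network can learn to approximate any continuous function on a bounded input space

  - Summing individual bumps expresses the overall computation of the network

- For a better approximation

  - Narrow the gaps between bumps: this controls the fidelity

  - More bumps can be added

- However, having this property does not automatically make a network good (it says what can be represented, not whether training will find it)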
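The bump construction can be sketched with four ReLU units per bump. In this sketch the breakpoints `s1..s4`, the height `t`, the target function (sin on [0, π]), and the use of triangular bumps centered on an even grid are all assumptions made for illustration.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def bump(x, s1, s2, s3, s4, t):
    """Bump of height t built from four ReLU units:
    0 before s1, rises on [s1, s2], flat on [s2, s3], falls on [s3, s4], 0 after."""
    rise = relu((x - s1) / (s2 - s1)) - relu((x - s2) / (s2 - s1))
    fall = relu((x - s3) / (s4 - s3)) - relu((x - s4) / (s4 - s3))
    return t * (rise - fall)

def approximate(f, x, lo, hi, num_bumps):
    """Sum of bumps centered on an even grid, each with height f(center)."""
    centers = np.linspace(lo, hi, num_bumps)
    gap = centers[1] - centers[0]
    total = np.zeros_like(x, dtype=float)
    for c in centers:
        total += bump(x, c - gap, c, c, c + gap, f(c))
    return total

single = bump(np.array([0.0, 1.5, 2.5, 5.0]), 1.0, 2.0, 3.0, 4.0, 2.0)

x = np.linspace(0.0, np.pi, 1001)
err_coarse = np.max(np.abs(approximate(np.sin, x, 0.0, np.pi, 5) - np.sin(x)))
err_fine = np.max(np.abs(approximate(np.sin, x, 0.0, np.pi, 33) - np.sin(x)))
```

Going from 5 to 33 bumps narrows the gap between them and shrinks the maximum error, which is the fidelity knob described above.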