📌3/14 Video lecture: Naver API, team study

Naver API

1. Registering to use the Naver API

- Naver Developers center

- https://developers.naver.com/apps/#/wizard/register?auth=true

- Application

- Register an application

- Application name: ds_study

- APIs used

- Search

- DataLab (search term trends)

- DataLab (shopping insight)

- Add environment

- WEB settings

- http://localhost

- Client ID: y6KOl0hqAheZ2YCUYuxr

- Client Secret: K4XOKgPETW

- https://developers.naver.com/apps/#/myapps/y6KOl0hqAheZ2YCUYuxr/overview

2. Using the Naver Search API

- urllib: a module for handling requests to and responses from a server over the HTTP protocol

- urllib.request: the module that handles the client's requests

- urllib.parse: parses URLs
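As a small illustration of the two submodules listed above, here is a minimal sketch that uses only the standard library. The URL is the blog search endpoint used later in these notes, and "my client_id" is a placeholder; nothing is actually sent over the network.

```python
import urllib.parse
import urllib.request

# Korean text must be percent-encoded before it goes into a URL.
enc_text = urllib.parse.quote("머신러닝")
print(enc_text)

# unquote() reverses the encoding.
print(urllib.parse.unquote(enc_text))

url = "https://openapi.naver.com/v1/search/blog?query=" + enc_text

# urlparse() splits a URL into its components.
parts = urllib.parse.urlparse(url)
print(parts.netloc)  # openapi.naver.com
print(parts.path)    # /v1/search/blog

# Request() only builds the request object; nothing is sent until urlopen().
request = urllib.request.Request(url)
request.add_header("X-Naver-Client-Id", "my client_id")  # placeholder value
print(request.get_method())  # GET
```

So urllib.parse prepares and inspects the address, and urllib.request is what carries it to the server.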

Searching

Blog (blog), book (book), movie (movie), cafe (cafearticle), shopping (shop), encyclopedia (encyc)

Change the url part to the search type you want

import os
import sys
import urllib.parse
import urllib.request

client_id = "my client_id"
client_secret = "my client_secret"

encText = urllib.parse.quote("머신러닝")  # the word to search for
url = "https://openapi.naver.com/v1/search/blog?query=" + encText  # JSON result
# url = "https://openapi.naver.com/v1/search/blog.xml?query=" + encText  # XML result

request = urllib.request.Request(url)
request.add_header("X-Naver-Client-Id", client_id)
request.add_header("X-Naver-Client-Secret", client_secret)
response = urllib.request.urlopen(request)
rescode = response.getcode()

if rescode == 200:
    response_body = response.read()
    print(response_body.decode("utf-8"))
else:
    print("Error Code:" + str(rescode))  # rescode is an int, so convert it before concatenating

- To read the response as text, decode it as utf-8

print(response_body.decode("utf-8"))
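To see why the decode step matters, here is a minimal sketch that simulates a response body instead of calling the API. The JSON string is made up for illustration only.

```python
# Simulated response body: response.read() returns bytes, not str.
response_body = '{"title": "머신러닝"}'.encode("utf-8")

print(type(response_body))  # <class 'bytes'>
print(response_body)        # Korean characters appear as \x.. escapes

# decode() turns the raw bytes back into readable text.
text = response_body.decode("utf-8")
print(type(text))           # <class 'str'>
print(text)                 # {"title": "머신러닝"}
```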

3. Product search

- Moleskine (search term: 몰스킨)

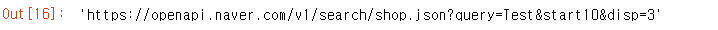

(1) gen_search_url()

def gen_search_url(api_node, search_text, start_num, disp_num):
    base = "https://openapi.naver.com/v1/search"
    node = "/" + api_node + ".json"
    param_query = "?query=" + urllib.parse.quote(search_text)
    param_start = "&start=" + str(start_num)  # the "=" was missing, which glued the number onto the key
    param_disp = "&display=" + str(disp_num)
    return base + node + param_query + param_start + param_disp

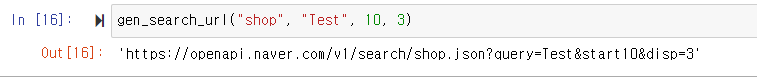

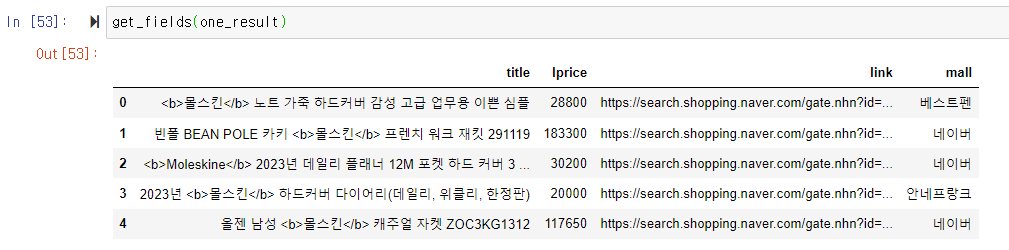

gen_search_url("shop", "Test", 10, 3)
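One way to check that the generated URL carries the intended parameters is to parse it back. The sketch below is self-contained, so it repeats gen_search_url() with "&start=" written out in full; the parse-back check is my addition, not part of the lecture.

```python
import urllib.parse


def gen_search_url(api_node, search_text, start_num, disp_num):
    base = "https://openapi.naver.com/v1/search"
    node = "/" + api_node + ".json"
    param_query = "?query=" + urllib.parse.quote(search_text)
    param_start = "&start=" + str(start_num)
    param_disp = "&display=" + str(disp_num)
    return base + node + param_query + param_start + param_disp


url = gen_search_url("shop", "Test", 10, 3)
print(url)
# https://openapi.naver.com/v1/search/shop.json?query=Test&start=10&display=3

# parse_qs() turns the query string back into a dict of lists.
parts = urllib.parse.urlparse(url)
params = urllib.parse.parse_qs(parts.query)
print(params)
# {'query': ['Test'], 'start': ['10'], 'display': ['3']}
```

If the "=" after start is missing, the start key does not show up in params at all, which makes this kind of typo easy to spot.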

(2) get_result_openpage()

import json
import datetime

def get_result_openpage(url):
    request = urllib.request.Request(url)
    request.add_header("X-Naver-Client-Id", client_id)
    request.add_header("X-Naver-Client-Secret", client_secret)
    response = urllib.request.urlopen(request)
    print("[%s] Url Request Success" % datetime.datetime.now())  # % formatting
    return json.loads(response.read().decode("utf-8"))

url = gen_search_url("shop", "몰스킨", 1, 5)
one_result = get_result_openpage(url)  # the JSON response comes back as a dict
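Since the result is an ordinary dict, it can be explored with normal indexing. The sketch below uses a hand-written sample instead of a live response: the field names (items, title, link, lprice, mallName) are the ones used in these notes, while the values and example.com links are invented, and treating lprice as a string is my assumption about the response.

```python
import json

# Hypothetical sample shaped like a shop search response (values are made up).
sample_text = """
{
  "items": [
    {"title": "<b>몰스킨</b> 노트", "link": "https://example.com/1",
     "lprice": "25000", "mallName": "Mall A"},
    {"title": "<b>몰스킨</b> 다이어리", "link": "https://example.com/2",
     "lprice": "31000", "mallName": "Mall B"}
  ]
}
"""

sample_result = json.loads(sample_text)
print(type(sample_result))         # <class 'dict'>
print(len(sample_result["items"]))  # 2

# Each item is itself a dict, so fields are read by key.
first = sample_result["items"][0]
print(first["title"])        # <b>몰스킨</b> 노트
print(int(first["lprice"]))  # 25000
```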

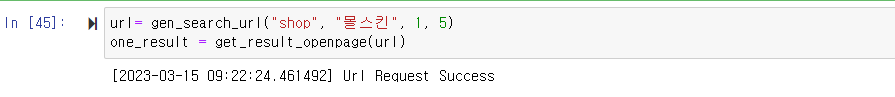

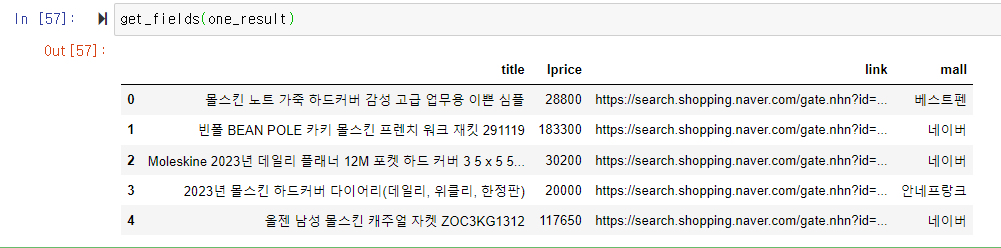

(3) get_fields()

import pandas as pd

def get_fields(json_data):
    title = [each["title"] for each in json_data["items"]]
    link = [each["link"] for each in json_data["items"]]
    lprice = [each["lprice"] for each in json_data["items"]]
    mall_Name = [each["mallName"] for each in json_data["items"]]

    result_pd = pd.DataFrame({
        "title": title,
        "link": link,
        "lprice": lprice,
        "mall": mall_Name
    }, columns=["title", "lprice", "link", "mall"])

    return result_pd

get_fields(one_result)

(4) delete_tag()

def delete_tag(input_str):
    input_str = input_str.replace("<b>", "")
    input_str = input_str.replace("</b>", "")
    return input_str

def get_fields(json_data):
    # same as before, except that the title is now cleaned with delete_tag()
    title = [delete_tag(each["title"]) for each in json_data["items"]]
    link = [each["link"] for each in json_data["items"]]
    lprice = [each["lprice"] for each in json_data["items"]]
    mall_Name = [each["mallName"] for each in json_data["items"]]

    result_pd = pd.DataFrame({
        "title": title,
        "link": link,
        "lprice": lprice,
        "mall": mall_Name
    }, columns=["title", "lprice", "link", "mall"])

    return result_pd

get_fields(one_result)
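delete_tag() removes only the <b> and </b> markers. If other tags or HTML entities ever turn up in a title, a regex-based variant may be handy. This is a sketch of an alternative, not the method from the lecture; delete_all_tags is a hypothetical name.

```python
import html
import re

# Matches anything that looks like an HTML tag, e.g. <b>, </b>, <br/>.
TAG_PATTERN = re.compile(r"<[^>]+>")


def delete_all_tags(input_str):
    """Hypothetical variant of delete_tag(): drop any tag, then unescape entities."""
    without_tags = TAG_PATTERN.sub("", input_str)
    return html.unescape(without_tags)


print(delete_all_tags("<b>몰스킨</b> 노트 &amp; 펜"))  # 몰스킨 노트 & 펜
```

The simple replace() version is easier to read, so it is probably enough as long as the titles only contain <b> tags.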

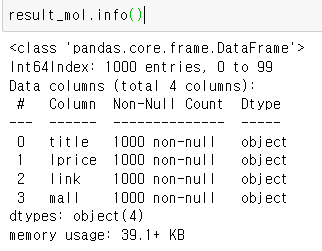

(5) actMain()

result_mol = []

for n in range(1, 1000, 100):
    url = gen_search_url("shop", "몰스킨", n, 100)
    json_result = get_result_openpage(url)
    pd_result = get_fields(json_result)
    result_mol.append(pd_result)

result_mol = pd.concat(result_mol)
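Two details of this loop are easy to miss: which start values range() produces, and what pd.concat() does to the index. The sketch below uses two tiny made-up pages instead of real API results. The ignore_index and pd.to_numeric steps are optional extras I am suggesting, not part of the lecture code.

```python
import pandas as pd

# The start positions requested by the loop: 10 pages of 100 items each.
starts = list(range(1, 1000, 100))
print(starts)  # [1, 101, 201, 301, 401, 501, 601, 701, 801, 901]

# Two made-up "pages" standing in for get_fields() results.
page_1 = pd.DataFrame({"title": ["a", "b"], "lprice": ["1000", "2000"]})
page_2 = pd.DataFrame({"title": ["c", "d"], "lprice": ["3000", "4000"]})

# Plain concat keeps each page's own index, so labels repeat.
stacked = pd.concat([page_1, page_2])
print(stacked.index.tolist())     # [0, 1, 0, 1]

# ignore_index=True renumbers the rows from 0.
renumbered = pd.concat([page_1, page_2], ignore_index=True)
print(renumbered.index.tolist())  # [0, 1, 2, 3]

# Prices given as strings can be converted before doing arithmetic.
renumbered["lprice"] = pd.to_numeric(renumbered["lprice"])
print(renumbered["lprice"].mean())  # 2500.0
```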

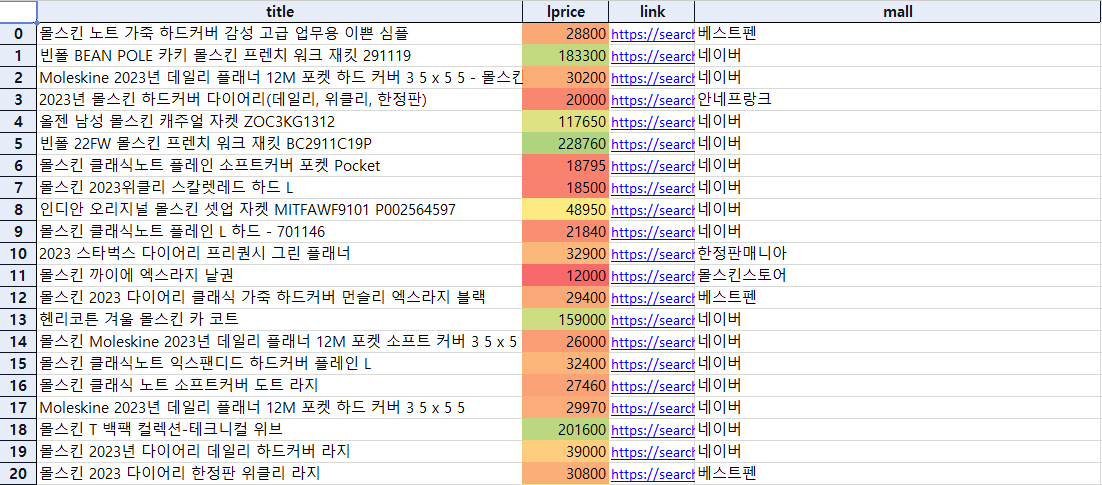

(6) to_excel()

!pip install xlsxwriter  # if it will not install locally, install it in Colab

writer = pd.ExcelWriter("../data/06_molskin_diary_in_naver_shop.xlsx", engine="xlsxwriter")
result_mol.to_excel(writer, sheet_name="Sheet1")

workbook = writer.book
worksheet = writer.sheets["Sheet1"]

# set the width of each column
worksheet.set_column("A:A", 4)
worksheet.set_column("B:B", 60)
worksheet.set_column("C:C", 10)
worksheet.set_column("D:D", 10)
worksheet.set_column("E:E", 50)
worksheet.set_column("F:F", 10)

# color the price column with a 3-color scale
worksheet.conditional_format("C2:C1001", {"type": "3_color_scale"})

writer.close()  # writer.save() was removed in pandas 2.0; close() writes the file

!ls "../data/06_molskin_diary_in_naver_shop.xlsx"

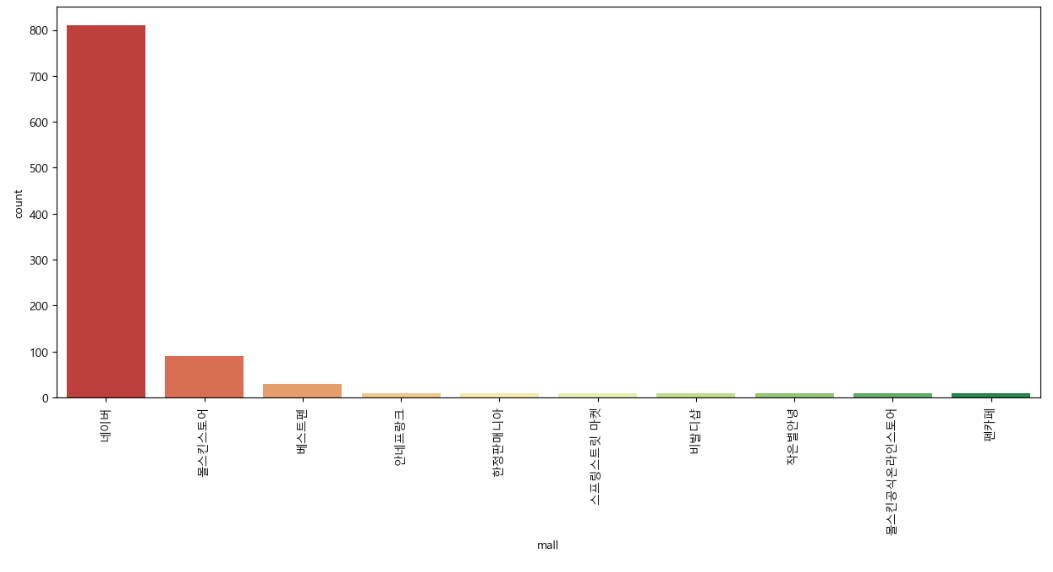

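(7) Visualization

import matplotlib.pyplot as plt
import seaborn as sns

plt.figure(figsize=(15, 6))
sns.countplot(
    x=result_mol["mall"],  # always pass x= explicitly
    data=result_mol,
    palette="RdYlGn",
    order=result_mol["mall"].value_counts().index  # orders the bars by how often each mall appears
)
plt.xticks(rotation=90)
plt.show()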

- countplot( )

Counts how many times each value appears in the specified column

Always write x= explicitly
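To make the counting concrete, here is a minimal standard-library sketch of the same tally that countplot() draws as bars. The mall names are made up, and collections.Counter is only a stand-in for illustration; it is not what seaborn uses internally.

```python
from collections import Counter

# Made-up values standing in for the "mall" column.
malls = ["Mall A", "Mall B", "Mall A", "Mall C", "Mall A", "Mall B"]

# Count how many times each value appears.
counts = Counter(malls)

# most_common() sorts from the most frequent value to the least,
# similar to ordering the bars by value_counts().index.
for mall, n in counts.most_common():
    print(mall, n)
# Mall A 3
# Mall B 2
# Mall C 1
```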

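👍Wrap-up

Collecting and organizing the data with functions means that even a large amount gets sorted out quickly, which is fascinating and makes me proud of the code

It feels like it is doing the work in my place

I want my skills to grow fast so that I can build many more workers like this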