This article is about rethinking the concept of "resolution & receptive field" in deep CNNs.

Reference

1. OverFeat

2. Understanding the Effective Receptive Field in Deep Convolutional Neural Networks

1. Loss of Spatial Information

VGG and GoogleNet showed that deep convolutional neural networks are powerful in various tasks including image classification, object detection, semantic segmentation.

As the convolutional neural networks get deeper, we can think of two main problems. The first one, is about more practical issues: gradient vanishing problem and hardness of stable optimization. ResNet has solved this problem by introducing residual connections, which inspired a lot of network architectures afterward. The second one is about intrinsic property of convolution operation in multidimensional images: loss of spatial information.

In image classification task, loss of spatial information may not matter. However, VGG and GoogleNet still showed some weaknesses in classifying image which it's context is determined by the very small part of the image. Loss of spatial information much challenges the segmentation performance, since it requires pixel-wise fine output to perform semantic segmentation.

It has been thought that loss of spatial information is an unavoidable aspect of DCNN(Deep CNN), but OverFeat(2013, Pierre S et al.) have already shown some solutions to compensate the spatial information loss during pooling operation. In this article, interesting discussions about effectiveness of convolutional layer in capturing spatial information will be covered, too. It will provide an intuition about selecting a kernel size of the convolutional layer in the aspect of spatial information loss.

2. OverFeat: stitch-and-shift operation

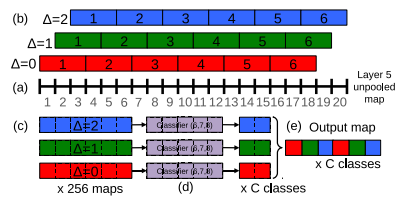

OverFeat resolves the spatial information loss during pooling operation, by introducing an interesting technique called stitch-and-shift pooling operation.

The figure above is a graphical illustration of 1-dimensional stitch-and-shift pooling operation of size 3. For three offset , slide is shifted by so three different combinations of representative feature are extracted. Tripeled feature maps are then 'stitched' to generate the final output map.

This process is quite similar to the notion of multi-head attention: a method that generates multiplied representation from given input features and thus enables model to extract rich and generalized output features. In aspect of locality information loss, stitch-and-shift operation compensates the information loss occured in the pooling operation.

3. Understanding the Effective Receptive Field in Deep Convolutional Neural Networks: Analyze the kernel size effect

Discussion begins from a simple question: "Is stack of two 3X3 convolution layes are identical to single 5X5 convoluion layer?"

Obviously we can say 'no', but it is hard to explain what makes the difference between two modules even though the size of each receptive field is identical.

Theoretically, stack of 3X3 CNN layer(without intercalating pooling layer) has same recpetive field compared to 5X5 CNN layer. However the behavioural difference inside the receptive layer exists, and this difference can be explained by introducing a novel concept called Effective Receptive Field(ERF).

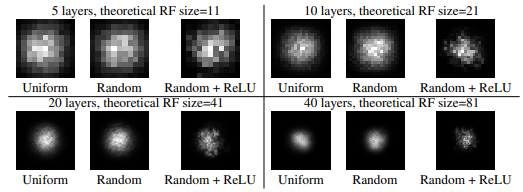

Authors of this paper theoretically derived that the input gradient signal decays with an exponential rate from the center of the receptive field. They defined the effective receptive field as the set of pixels which its signal(decay w.r.t the spatial distance) is inside the range of . They impirically measured the size of effective receptive field, and observed some interesting properties of ERF:

- Guassian distribution of ERF

- Shrinkage of ERF as model gets deeper

Thus, it indicates that stacks of 3x3 convolutional layers have smaller ERF compared to single 5x5 convolutional layer: which means higher spatial resolution. Therefore we can conclude that stacking more convolutional layer will behave with smaller ERF and somehow prevent the dilution of important features inside the receptive field.