Linear Attention as I understood it from (2023 ICCV) EfficientViT and (2022 ICLR) CosFormer




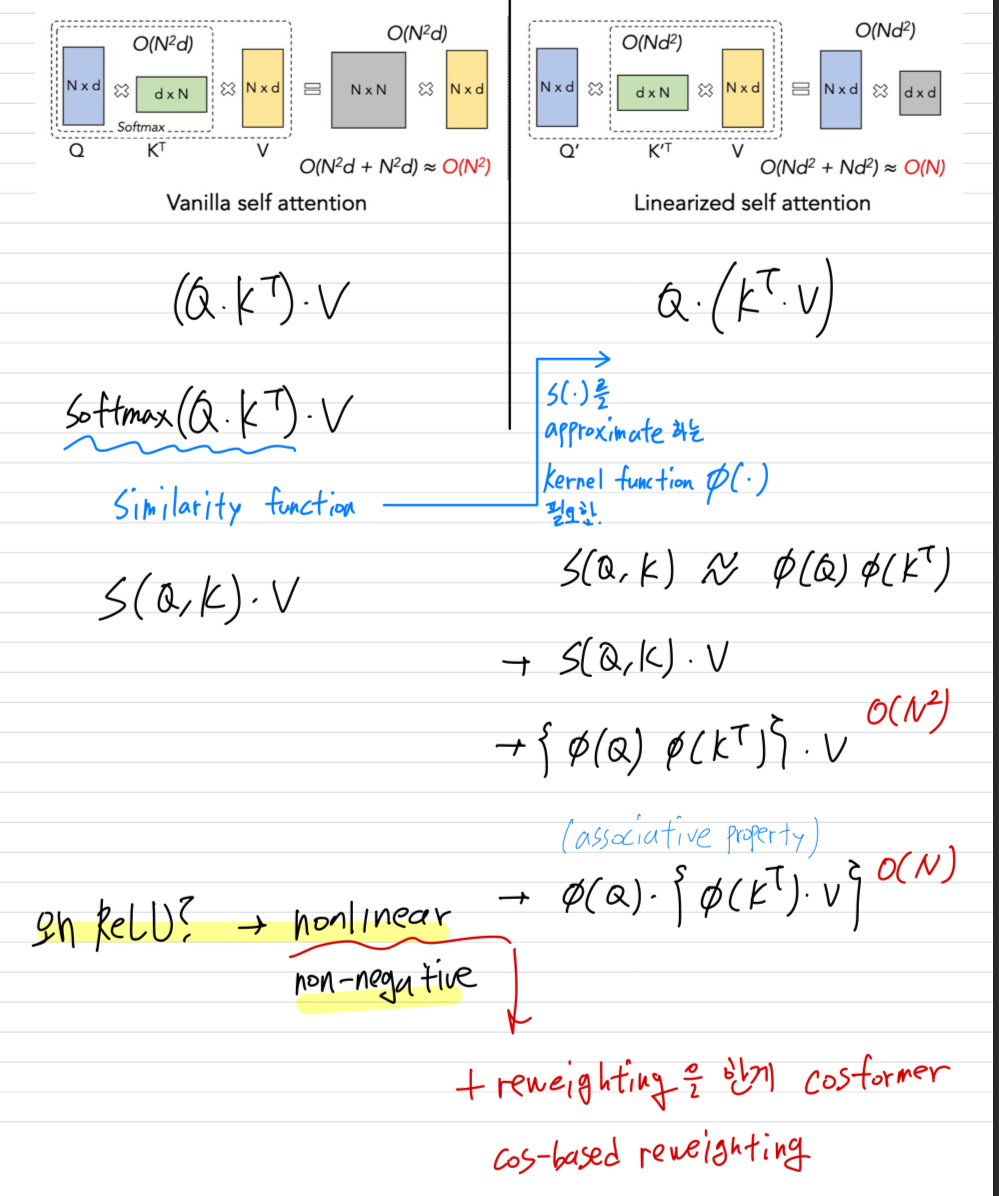

- Properties of the softmax-based similarity function
  - The attention matrix is non-negative.
  - It applies a non-linear re-weighting scheme.

- A ReLU-based linear similarity function keeps most of the properties of the softmax-based similarity function.
  - The attention matrix is non-negative.
  - It is non-linear, but it does not yet have a re-weighting scheme.

- CosFormer proposes a cos-based re-weighting mechanism to make up for the missing re-weighting scheme (the second property) in the ReLU-based linear similarity function.
  - The attention matrix is non-negative.
  - It is non-linear, with a cos-based re-weighting scheme.
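The difference between the softmax and ReLU similarity functions can be made concrete with a small sketch. This is a minimal pure-Python illustration and not the EfficientViT code. The function names (`softmax_attention`, `relu_attention_quadratic`, `relu_attention_linear`), the `eps` term in the normalizer, and the toy matrices are hypothetical. The sketch shows two things. First, `ReLU(Q) ReLU(K)^T` can only produce non-negative scores. Second, because there is no softmax between Q and K, the product can be reordered as `ReLU(Q) (ReLU(K)^T V)`. That ordering never builds the N x N attention matrix.

```python
import math

def relu(x):
    """Element-wise ReLU on a list-of-lists matrix."""
    return [[max(0.0, v) for v in row] for row in x]

def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    """(n x k) @ (k x m) with plain Python lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax_attention(q, k, v):
    """Reference: softmax(Q K^T / sqrt(d)) V; builds the N x N score matrix."""
    d = len(q[0])
    out = []
    for row in matmul(q, transpose(k)):
        m = max(row)
        # exp() makes every weight positive and sharpens large scores (re-weighting)
        w = [math.exp((s - m) / math.sqrt(d)) for s in row]
        z = sum(w)
        out.append([sum(w[j] * v[j][c] for j in range(len(v))) / z for c in range(len(v[0]))])
    return out

def relu_attention_quadratic(q, k, v, eps=1e-6):
    """Sim(Q_i, K_j) = ReLU(Q_i) ReLU(K_j)^T, row-normalized; still builds N x N."""
    sim = matmul(relu(q), transpose(relu(k)))  # every entry is >= 0
    out = []
    for row in sim:
        z = sum(row) + eps  # hypothetical eps avoids division by zero
        out.append([sum(row[j] * v[j][c] for j in range(len(v))) / z for c in range(len(v[0]))])
    return out

def relu_attention_linear(q, k, v, eps=1e-6):
    """Same output, but computes ReLU(K)^T V first (associativity): linear in N."""
    qp, kp = relu(q), relu(k)
    kv = matmul(transpose(kp), v)           # d x d_v, shared by every query
    k_sum = [sum(col) for col in zip(*kp)]  # sum_j ReLU(K_j), length d
    out = []
    for qi in qp:
        z = sum(a * b for a, b in zip(qi, k_sum)) + eps
        out.append([sum(qi[t] * kv[t][c] for t in range(len(kv))) / z
                    for c in range(len(kv[0]))])
    return out

# Toy inputs (hypothetical): N = 3 tokens, d = 2, d_v = 2
q = [[1.0, -0.5], [0.2, 0.8], [-1.0, 0.3]]
k = [[0.5, 1.0], [-0.3, 0.4], [0.9, -0.2]]
v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

quad = relu_attention_quadratic(q, k, v)
lin = relu_attention_linear(q, k, v)
print(quad)
print(lin)
```

The reordering makes ReLU attention linear in the sequence length. `kv` and `k_sum` are computed once and reused for every query. The row-wise division still makes each output a weighted average of V. However, the weights are plain dot products rather than exponentials, so nothing sharpens the distribution the way softmax does.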
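CosFormer's re-weighting can be sketched in the same way. As I understand the paper, the score becomes `ReLU(Q_i) ReLU(K_j)^T * cos(pi/2 * (i - j) / M)`. The cosine then splits through `cos(a - b) = cos(a)cos(b) + sin(a)sin(b)`, so the linear-time ordering still works. This is a minimal sketch under several assumptions: M is set to the sequence length N, indices are 0-based, Q and K have the same length (self-attention), and the helper names (`cos_reweight`, `cosformer_quadratic`, `cosformer_linear`) are hypothetical.

```python
import math

def relu(x):
    return [[max(0.0, v) for v in row] for row in x]

def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cos_reweight(i, j, m):
    """Locality weight cos(pi/2 * (i - j) / m); positive whenever |i - j| < m."""
    return math.cos(math.pi / 2 * (i - j) / m)

def cosformer_quadratic(q, k, v, eps=1e-6):
    """Explicit N x N version: ReLU(Q_i) ReLU(K_j)^T * cos_reweight(i, j, M)."""
    n = len(q)
    m = n  # assumption: M = N, so |i - j| <= N - 1 < M and every weight stays positive
    qp, kp = relu(q), relu(k)
    out = []
    for i in range(n):
        w = [dot(qp[i], kp[j]) * cos_reweight(i, j, m) for j in range(n)]
        z = sum(w) + eps
        out.append([sum(w[j] * v[j][c] for j in range(n)) / z for c in range(len(v[0]))])
    return out

def cosformer_linear(q, k, v, eps=1e-6):
    """Linear version: splits the cosine so ReLU(K)^T V can be computed first.

    Assumes self-attention (len(q) == len(k)), since both share position angles.
    """
    n = len(q)
    m = n
    qp, kp = relu(q), relu(k)
    ang = [math.pi / 2 * i / m for i in range(n)]  # a_i = pi * i / (2M)
    q_cos = [[x * math.cos(a) for x in row] for row, a in zip(qp, ang)]
    q_sin = [[x * math.sin(a) for x in row] for row, a in zip(qp, ang)]
    k_cos = [[x * math.cos(a) for x in row] for row, a in zip(kp, ang)]
    k_sin = [[x * math.sin(a) for x in row] for row, a in zip(kp, ang)]
    kv_cos = matmul(transpose(k_cos), v)  # d x d_v, computed once
    kv_sin = matmul(transpose(k_sin), v)
    ksum_cos = [sum(col) for col in zip(*k_cos)]
    ksum_sin = [sum(col) for col in zip(*k_sin)]
    out = []
    for i in range(n):
        z = dot(q_cos[i], ksum_cos) + dot(q_sin[i], ksum_sin) + eps
        out.append([(sum(q_cos[i][t] * kv_cos[t][c] for t in range(len(kv_cos)))
                     + sum(q_sin[i][t] * kv_sin[t][c] for t in range(len(kv_sin)))) / z
                    for c in range(len(v[0]))])
    return out

# Toy inputs (hypothetical): N = 3 tokens, d = 2, d_v = 2
q = [[1.0, -0.5], [0.2, 0.8], [-1.0, 0.3]]
k = [[0.5, 1.0], [-0.3, 0.4], [0.9, -0.2]]
v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

cq = cosformer_quadratic(q, k, v)
cl = cosformer_linear(q, k, v)
print(cq)
print(cl)
```

The condition |i - j| < M keeps the angle inside (-pi/2, pi/2), so the cosine factor is always positive and non-negativity is preserved. The weight also shrinks as two tokens move farther apart. That distance-based decay is the locality bias the re-weighting adds on top of plain ReLU attention.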