NLP Models

1. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

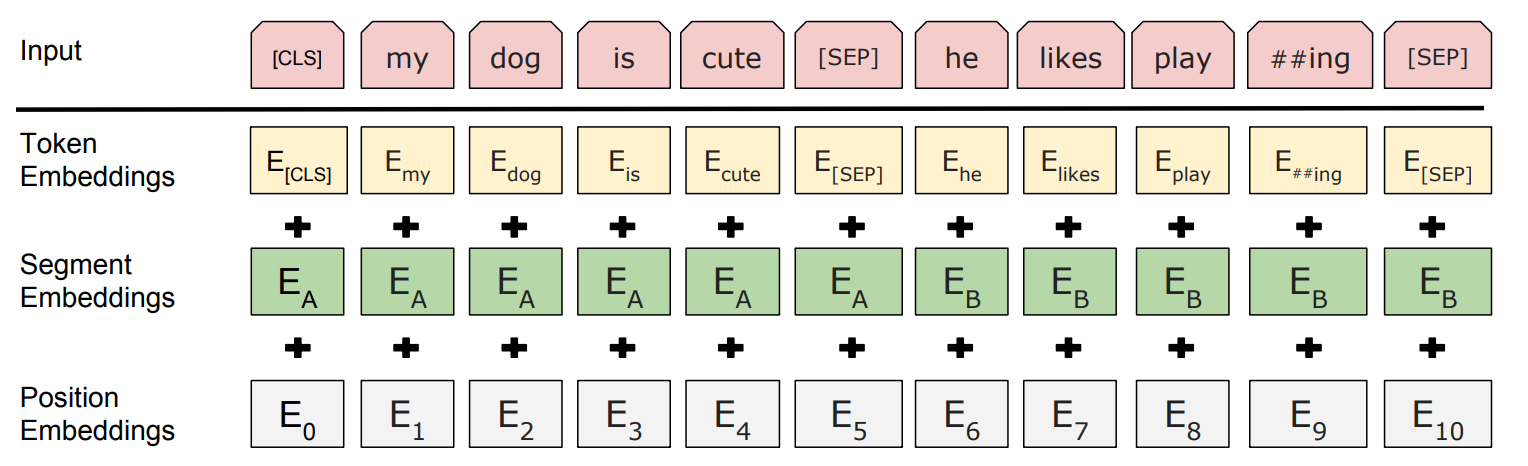

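Introduction: BERT (Bidirectional Encoder Representations from Transformers) is a natural language processing model released by Google in 2018. It is built on the Transformer architecture. Unlike earlier NLP models, BERT reads the input text bidirectionally. Key features: bidirectional…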

January 19, 2025

2. 📜 Transformer: Attention Is All You Need (2017)

Transformer: Introduction. Natural language processing (NLP) was long built on recurrent neural networks (RNN, LSTM). These models (1) lose information over long sentences (the long-term dependency problem) and (2) are hard to parallelize…

January 30, 2025

3. GPT-1: Improving Language Understanding by Generative Pre-Training (2018)

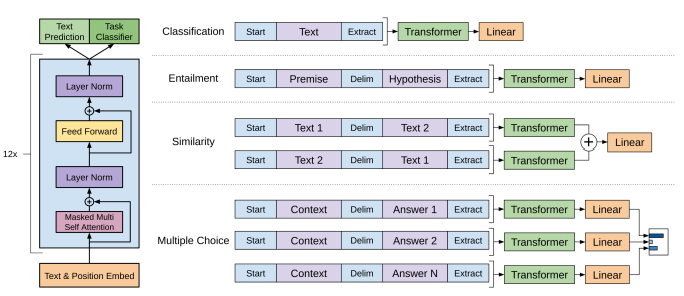

GPT-1: Introduction. OpenAI developed GPT-1 (Generative Pre-trained Transformer). To improve natural language understanding, it uses two stages: pre-training and fine-tuning. Backgroun…

January 31, 2025

4. RoBERTa: A Robustly Optimized BERT Pretraining Approach

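Paper link: https://arxiv.org/pdf/1907.11692. Published by the Facebook AI Research team, the paper re-examines how the original BERT was trained and applies several optimization techniques to improve model performance. 💡 The main goal is "BERT's potential, as fully as possible…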

February 2, 2025

5. Binary Classification Practice with BERT (Preprocessing and Model Training)

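Overall training flow. Note: run this as a .py script, because argparse does not work in .ipynb. The full code is available on GitHub. 0. Import libraries 1. Data description and preprocessing. Fake News Detection: uses a fake news detection dataset. Dataset composition: t…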

February 3, 2025
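
The argparse caveat in entry 5 can be illustrated with a minimal sketch. It assumes the failure comes from the notebook kernel's own command-line arguments ending up in `sys.argv`, which is a common cause but is not stated in the post. The flag names `--epochs` and `--lr` and the helper `get_args` are hypothetical and are not taken from the post's code.

```python
import argparse


def build_parser():
    # Hypothetical flags for illustration only; the post's real options may differ.
    parser = argparse.ArgumentParser(description="BERT binary classification (sketch)")
    parser.add_argument("--epochs", type=int, default=3)
    parser.add_argument("--lr", type=float, default=2e-5)
    return parser


def get_args(argv=None):
    # argv=None makes argparse read sys.argv[1:], which suits a .py script.
    # In a notebook, pass an explicit list (for example []) instead.
    # parse_known_args returns unrecognized arguments separately rather than
    # exiting, so stray kernel arguments do not abort the run.
    args, _unknown = build_parser().parse_known_args(argv)
    return args
```

In a script, `get_args()` picks up the real command line. In a notebook cell, `get_args([])` returns the defaults, and `get_args(["--epochs", "5"])` overrides one of them.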