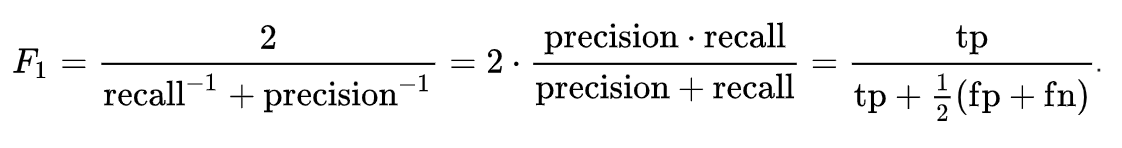

F1-score is the harmonic mean of precision and recall. Because it accounts for both false positives (FP) and false negatives (FN), the better the balance between precision and recall, the higher the F1-score.
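Written out in terms of confusion-matrix counts (TP, FP, FN are the usual true-positive, false-positive and false-negative counts), the definition makes it visible that both error types appear in the denominator:

$$
F_1 = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}} = \frac{2\,TP}{2\,TP + FP + FN}
$$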

For example, if Model A has a precision of 0.9 and a recall of 0.1 while Model B has both precision and recall at 0.5, A gets an F1-score of 0.18 while B gets 0.5.
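The arithmetic behind this example can be checked with a few lines of plain Python. This is only a small sketch: the function name f1_from_precision_recall is made up for illustration and is not part of scikit-learn.

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall; returns 0.0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Model A: high precision, very low recall. Model B: balanced.
f1_a = f1_from_precision_recall(0.9, 0.1)
f1_b = f1_from_precision_recall(0.5, 0.5)

print(f"Model A F1: {f1_a:.2f}")
print(f"Model B F1: {f1_b:.2f}")
# Both models share the same arithmetic mean, which is why a plain average
# would hide the imbalance that the harmonic mean penalises.
print(f"Arithmetic mean for both: {(0.9 + 0.1) / 2:.2f}")
```

Both models have the same simple average of precision and recall (0.5), yet the unbalanced model scores far lower on F1.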

Scikit-learn provides the f1_score() API. We will compute the F1-score for the logistic-regression model we used on the Titanic dataset.

Input

    # F1-score
    from sklearn.metrics import f1_score

    # y_test and pred come from the Titanic logistic-regression model fitted earlier
    f1 = f1_score(y_test, pred)
    print('F1 Score : {0:4f}'.format(f1))

Output

    F1 Score : 0.796610

As we did in the previous post, we will gradually adjust the threshold value to see how the F1-score changes.
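The get_eval_by_threshold helper called in the next listing is not defined in this post; it presumably comes from the previous one. As a rough stand-in for the idea, the sketch below sweeps a few thresholds over made-up labels and probabilities and computes F1 at each one. The function name f1_at_threshold and the toy data are hypothetical, and the strict "greater than" comparison is an assumption about how the cut-off is applied.

```python
def f1_at_threshold(y_true, probs, threshold):
    """Label a sample positive when its probability exceeds the threshold, then compute F1."""
    preds = [1 if p > threshold else 0 for p in probs]
    tp = sum(1 for y, p in zip(y_true, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(y_true, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(y_true, preds) if y == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

# Made-up labels and positive-class probabilities, for illustration only.
y_true = [0, 0, 1, 1, 1, 0, 1, 0]
probs = [0.10, 0.45, 0.42, 0.80, 0.58, 0.30, 0.90, 0.62]

# Raising the threshold trades recall for precision, and F1 moves with both.
sweep = {t: f1_at_threshold(y_true, probs, t) for t in [0.4, 0.5, 0.6]}
for t, f1 in sweep.items():
    print(f"Custom Threshold : {t} -> F1 {f1:.4f}")
```

With real model output, probs would be the second column of predict_proba, as in the listing that follows.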

Input

    # Evaluate Titanic predictions: accuracy, confusion matrix, precision, recall, F1-score
    from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score, confusion_matrix

    def get_clf_eval(y_test, pred):
        confusion = confusion_matrix(y_test, pred)
        accuracy = accuracy_score(y_test, pred)
        precision = precision_score(y_test, pred)
        recall = recall_score(y_test, pred)
        f1 = f1_score(y_test, pred)
        print('Confusion Matrix')
        print(confusion)
        print('Accuracy : ', accuracy)
        print('Precision : ', precision)
        print('Recall : ', recall)
        print('F1-score : ', f1)

    thresholds = [0.4, 0.45, 0.50, 0.55, 0.60]
    # lr_clf and X_test are the fitted model and test features from earlier
    pred_proba = lr_clf.predict_proba(X_test)
    # get_eval_by_threshold is not defined in this post; it is assumed to be the helper from the previous post
    get_eval_by_threshold(y_test, pred_proba[:, 1].reshape(-1,1), thresholds)

Output

    Custom Threshold : 0.4
    Confusion Matrix
    [[97 21]
     [11 50]]
    Accuracy : 0.8212290502793296
    Precision : 0.704225352112676
    Recall : 0.819672131147541
    F1-score : 0.7575757575757576

    Custom Threshold : 0.45
    Confusion Matrix
    [[105 13]
     [ 13 48]]
    Accuracy : 0.8547486033519553
    Precision : 0.7868852459016393
    Recall : 0.7868852459016393
    F1-score : 0.7868852459016392

    Custom Threshold : 0.5
    Confusion Matrix
    [[108 10]
     [ 14 47]]
    Accuracy : 0.8659217877094972
    Precision : 0.8245614035087719
    Recall : 0.7704918032786885
    F1-score : 0.7966101694915254

    Custom Threshold : 0.55
    Confusion Matrix
    [[111 7]
     [ 16 45]]
    Accuracy : 0.8715083798882681
    Precision : 0.8653846153846154
    Recall : 0.7377049180327869
    F1-score : 0.7964601769911505

    Custom Threshold : 0.6
    Confusion Matrix
    [[113 5]
     [ 17 44]]
    Accuracy : 0.8770949720670391
    Precision : 0.8979591836734694
    Recall : 0.7213114754098361
    F1-score : 0.8
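As a sanity check, the F1 values printed above can be reproduced from the confusion matrices alone. The sketch below copies the five matrices from the output and assumes scikit-learn's layout of [[TN, FP], [FN, TP]]; the helper name f1_from_confusion is made up for illustration.

```python
# Confusion matrices copied from the output above, keyed by threshold.
# Layout follows scikit-learn's convention: [[TN, FP], [FN, TP]].
matrices = {
    0.40: [[97, 21], [11, 50]],
    0.45: [[105, 13], [13, 48]],
    0.50: [[108, 10], [14, 47]],
    0.55: [[111, 7], [16, 45]],
    0.60: [[113, 5], [17, 44]],
}

def f1_from_confusion(matrix):
    """F1 = 2*TP / (2*TP + FP + FN); true negatives play no part."""
    (tn, fp), (fn, tp) = matrix
    return 2 * tp / (2 * tp + fp + fn)

f1_scores = {t: f1_from_confusion(m) for t, m in matrices.items()}
for t, f1 in f1_scores.items():
    print(f"threshold {t:.2f} -> F1 {f1:.4f}")
```

Note that the true-negative count never enters the calculation, so F1 reflects only how the positive class is handled.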

As the results above show, the F1-score is highest (0.8) at a threshold of 0.6. However, note that recall also drops substantially as the threshold rises, from about 0.82 at a threshold of 0.4 to about 0.72 at 0.6.